1 Introduction to AI

Over the past seventy years, Artificial Intelligence (AI) has evolved from a theoretical concept first explored by Alan Turing into a transformative technology that touches nearly every aspect of our modern world. This chapter offers a comprehensive examination of AI’s journey, current state, and future trajectory.

We begin by exploring AI’s historical foundations, starting with Turing’s groundbreaking question of whether machines could think like humans. From the coining of the term “AI” in the 1955 proposal for the Dartmouth College summer workshop, through the development of early systems like the Logic Theorist and Perceptron, to today’s sophisticated deep learning models, we trace the key innovations that have shaped the field. This evolution reveals not just AI’s technical progress, but also how our understanding of machine intelligence has deepened over time.

To build a thorough understanding of AI, we examine it through three distinct lenses: technological, psychological, and cognitive-behavioral. Each perspective illuminates different aspects of artificial intelligence, from its computational foundations to its relationship with human thought processes. By considering these multiple viewpoints, we can better grasp both AI’s current capabilities and its fundamental nature.

The chapter then breaks down how modern AI systems function, exploring core concepts like algorithms, machine learning, deep learning, natural language processing, and robotics. Through practical examples and real-world applications, we demonstrate how these technologies work together to create intelligent systems that can perceive, learn, and act in increasingly sophisticated ways.

Finally, we present two frameworks for categorizing AI: one based on how closely systems mirror human behavior, and another focused on their technological capabilities. These complementary approaches help us understand both the current state of AI technology and its potential future developments, providing a foundation for analyzing AI’s ongoing impact across industries and society.

A Brief History of AI

Over the past seventy years, researchers have explored whether machines can think like humans, a question first posed by Alan Turing. In 1950, Turing proposed what is now known as the “Turing test” to see whether a computer could imitate human conversation convincingly. This test laid the groundwork for what we now call artificial intelligence (AI). The term AI was first used in 1955, in the proposal for a summer research workshop held at Dartmouth College in 1956. AI is often defined as making a machine act in ways that would be considered intelligent if a human were doing the same. In the late 1950s, AI research focused on teaching computers to solve math and logic problems, leading to developments like the Logic Theorist, the Perceptron, and object recognition systems.

Since the 1980s, AI has grown into a booming industry, especially as researchers shifted their focus to machine learning. Machine learning is a part of AI where a computer program learns from experience to perform tasks better over time. It has revolutionized many fields, including biology, education, engineering, finance, healthcare, and marketing. Machine learning algorithms use large amounts of data to find patterns and make predictions, recommendations, and decisions. For example, companies like Amazon and Netflix use machine learning to power their recommendation systems.

Many of the latest advancements in AI are due to deep learning. Deep learning uses neural networks with many hidden layers to process data in complex ways. This method allows algorithms to refine themselves as they are trained on new data, an approach loosely inspired by how the human brain works. Deep learning has significantly improved AI’s ability to make accurate predictions and is used in areas like image recognition, social media analysis, and autonomous driving.
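To make the idea of "many hidden layers" concrete, the following minimal sketch passes an input through two hidden layers and one output layer. The weights, layer sizes, and function names are illustrative assumptions, and no training takes place; a real deep learning system would learn its weights from data.

```python
def relu(values):
    # Rectified linear unit: negative activations are set to zero.
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # One fully connected layer: each row of weights produces one output unit.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    # Feed the input through every layer; hidden layers apply ReLU.
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations

# Hypothetical hand-picked weights (not learned from any data).
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # hidden layer 1: 2 inputs -> 2 units
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, 0.5]),   # hidden layer 2: 2 units -> 2 units
    ([[1.0, -1.0]], [0.0]),                    # output layer: 2 units -> 1 value
]

output = forward([2.0, 1.0], LAYERS)
print(output)
```

Each layer transforms the output of the previous one, which is what lets deeper networks represent more complex relationships than a single layer can.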

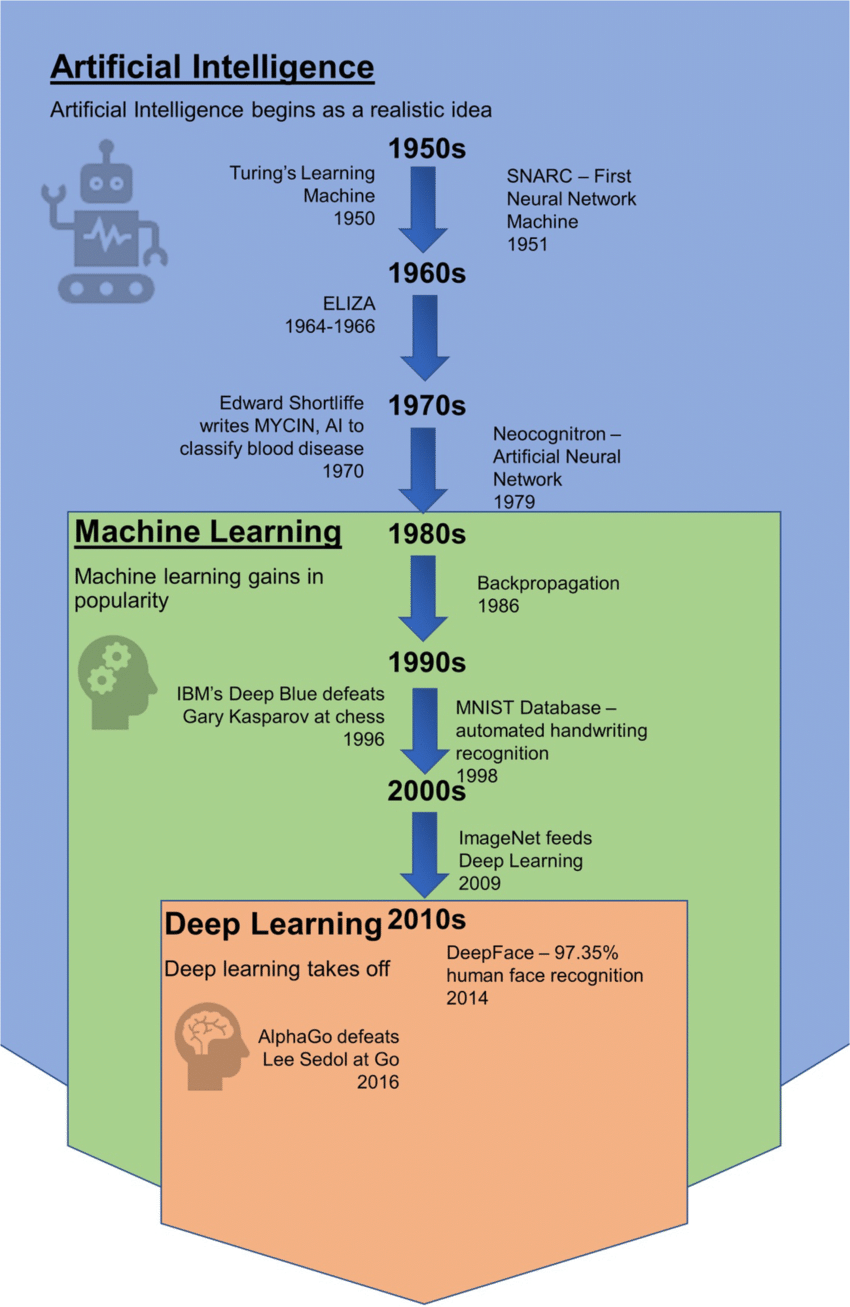

The three key milestones in the evolution of AI are illustrated in Figure 1.1.

Figure 1.1. The evolution of AI.

Three Historical Perspectives on Understanding AI

Following the brief historical overview, we can trace how our understanding of artificial intelligence has evolved through three major perspectives. Each represents a different era and approach to conceptualizing AI, and together they show how the field has matured from a narrow technical endeavor to a rich interdisciplinary pursuit.

1. AI as a Computational Technology (1950s-1960s)

The earliest AI pioneers approached artificial intelligence primarily as a computational and engineering challenge. This perspective dominated the field’s founding years and established AI as a technical discipline.

John McCarthy, who coined the term “artificial intelligence” in 1955, exemplified this view when he defined AI as “the science and engineering of making intelligent machines, especially intelligent computer programs.” This definition deliberately separated AI from psychology and biology: it was about building intelligent systems, not necessarily understanding or replicating human cognition.

From this technological perspective, intelligence was reconceptualized as information processing. Key characteristics of this view included:

- Problem-solving focus: Early AI concentrated on well-defined problems with clear rules—chess, theorem proving, and mathematical puzzles. The Logic Theorist (1956), often considered the first AI program, proved mathematical theorems by manipulating symbols according to logical rules.

- Symbol manipulation: Intelligence was seen as the ability to manipulate symbolic representations according to formal rules. Allen Newell and Herbert Simon’s “Physical Symbol System Hypothesis” (1976) argued that symbol manipulation was both necessary and sufficient for intelligence.

- Goal-oriented behavior: John McCarthy defined intelligence functionally as “the computational part of the ability to achieve goals in the world,” a definition later echoed by Richard Sutton and others. What mattered wasn’t how thinking happened, but whether machines could produce intelligent outputs.

- Flexibility and adaptation: Even early researchers recognized that intelligent systems needed to handle uncertainty and incomplete information. Unlike traditional algorithms that required complete data, AI systems needed to make reasonable decisions with partial information.

This technological perspective gave us foundational concepts still used today: search algorithms, knowledge representation, planning systems, and logical reasoning. However, by the 1970s, researchers realized that many aspects of intelligence—like vision, language understanding, and common-sense reasoning—were far harder than initially anticipated.
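The symbol-manipulation view described above can be illustrated with a small forward-chaining sketch: facts and rules are plain symbols, and new facts are derived by applying if-then rules until nothing changes. This is a simplified illustration under assumed rule and fact names, not a reconstruction of the Logic Theorist.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new symbols can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in derived and all(p in derived for p in premises):
                derived.add(conclusion)
                changed = True
    return derived

# Hypothetical rules: (premises, conclusion).
RULES = [
    (("socrates_is_human",), "socrates_is_mortal"),
    (("socrates_is_mortal", "mortals_die"), "socrates_will_die"),
]

result = forward_chain({"socrates_is_human", "mortals_die"}, RULES)
print(sorted(result))
```

The program has no notion of what "Socrates" or "mortal" means; it only matches and combines symbols according to formal rules, which is exactly the sense of intelligence this perspective emphasized.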

2. AI as Cognitive Simulation (1970s-1990s)

As AI faced its first “winter” in the 1970s, researchers began drawing more heavily from psychology and cognitive science. This shift represented a fundamental change: instead of just building intelligent machines, AI researchers started asking how human intelligence actually works.

This cognitive perspective viewed AI as the computational modeling of human mental processes. Key developments included:

- Cognitive architectures: Systems like ACT-R (Adaptive Control of Thought-Rational) and SOAR attempted to model the complete structure of human cognition, including memory systems, attention, and learning mechanisms.

- Mental functions as modules: AI began breaking down intelligence into specific cognitive capabilities:

  - Perception: Pattern recognition, computer vision (like David Marr’s computational theory of vision)

  - Memory: Short-term vs. long-term storage, semantic networks, frames

  - Reasoning: Mental models, analogical reasoning, case-based reasoning

  - Problem-solving: Means-ends analysis, heuristic search

  - Learning: Concept formation, explanation-based learning

- Knowledge representation: Researchers developed sophisticated ways to encode human knowledge, including semantic networks, frames, scripts, and ontologies. The CYC project (started 1984) attempted to encode all human common-sense knowledge.

- Expert systems: These systems captured human expertise in narrow domains. MYCIN (1976) diagnosed blood infections, while DENDRAL (1965) identified molecular structures. They represented AI’s attempt to model how human experts think and reason.

This era established that intelligence wasn’t just about processing power or clever algorithms—it required vast amounts of knowledge and sophisticated ways of organizing and using that knowledge. The cognitive perspective also highlighted the importance of learning, leading to the machine learning revolution of the 1990s.

3. AI as Intelligent Behavior (1990s-Present)

The modern perspective, crystallized in Russell and Norvig’s influential textbook (1995), shifts focus from internal processes to external behavior and performance. This behavioral perspective asks not “Does it think like a human?” but “Does it behave intelligently?”

This perspective recognizes four different approaches to AI, based on two dimensions:

The Behavioral Framework

Dimension 1: Thinking vs. Acting

- Thinking: Focus on reasoning and internal cognitive processes

- Acting: Focus on behavior and external performance

Dimension 2: Humanly vs. Rationally

- Humanly: Mimicking human performance (including errors and limitations)

- Rationally: Optimal performance according to logic and probability

This creates four approaches:

- Acting Humanly: The Turing Test approach

  - Goal: Fool humans into thinking the machine is human

  - Requires: Natural language processing, knowledge representation, automated reasoning, machine learning

  - Example: Modern chatbots like ChatGPT that can maintain convincing conversations

- Thinking Humanly: Cognitive modeling approach

  - Goal: Program computers to think like humans

  - Method: Use psychological experiments to develop precise theories of mind

  - Example: Neural networks inspired by brain structure

- Thinking Rationally: The “laws of thought” approach

  - Goal: Build systems that use formal logic to derive conclusions

  - Challenge: Not all intelligent behavior is logical; humans often act on intuition

  - Example: Automated theorem provers, formal verification systems

- Acting Rationally: The rational agent approach

  - Goal: Build agents that achieve the best expected outcome

  - Advantage: More general than “laws of thought”; can include reflexes and intuition

  - Example: Recommendation systems, autonomous vehicles, game-playing AI
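The rational agent idea, choosing the action with the best expected outcome, can be sketched in a few lines. The actions, probabilities, and utility values below are invented purely for illustration.

```python
def expected_utility(outcomes):
    # outcomes: list of (probability, utility) pairs for one action.
    return sum(probability * utility for probability, utility in outcomes)

def choose_action(actions):
    # A rational agent picks the action whose expected utility is highest.
    return max(actions, key=lambda name: expected_utility(actions[name]))

# Hypothetical driving scenario with made-up probabilities and utilities.
ACTIONS = {
    "brake": [(1.0, 5.0)],
    "swerve": [(0.7, 10.0), (0.3, -20.0)],
    "continue": [(0.9, 8.0), (0.1, -100.0)],
}

best = choose_action(ACTIONS)
print(best)
```

Note that the agent is judged only on the quality of its choice, not on whether its reasoning resembles a human driver's, which is the core of the "acting rationally" approach.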

Why This Shift Matters

The behavioral perspective has several advantages:

- Practical focus: It emphasizes what AI can do rather than how closely it mimics human cognition

- Measurable success: Performance can be objectively evaluated

- Flexibility: Different approaches suit different problems

- Includes non-human intelligence: Recognizes that optimal intelligence might differ from human intelligence

This perspective dominates modern AI. Today’s systems—from AlphaGo to GPT models—are evaluated primarily on their performance, not their similarity to human cognition. Machine learning systems find patterns humans might never discover. Computer vision systems can exceed human accuracy. Translation systems work through statistical patterns rather than linguistic understanding.

The Evolution and Integration of Perspectives

These three perspectives aren’t mutually exclusive—they represent an evolution and broadening of how we understand AI:

- 1950s-1960s: AI as computational technology focused on symbol manipulation and problem-solving

- 1970s-1990s: AI as cognitive simulation tried to replicate human mental processes

- 1990s-Present: AI as intelligent behavior emphasizes performance over process

Modern AI integrates all three:

- We use computational techniques (perspective 1)

- Inspired by cognitive science (perspective 2)

- To create systems that behave intelligently (perspective 3)

For example, a modern language model like GPT:

- Uses computational technology (transformer architectures, massive parallel processing)

- Incorporates cognitive insights (attention mechanisms, context understanding)

- Is evaluated on behavioral performance (conversation quality, task completion)

This evolution shows how AI has matured from a narrow technical field to a rich interdisciplinary endeavor that draws from computer science, psychology, neuroscience, philosophy, and mathematics. Understanding these perspectives helps us appreciate both where AI came from and where it might be heading.

Types of AI: Two Categorizations

Having explored how our conceptual understanding of AI has evolved through three historical perspectives—from computational technology to cognitive simulation to intelligent behavior—we now turn to how we classify and categorize actual AI systems today. While the historical perspectives helped us understand what AI is and how we think about it, we need practical frameworks for categorizing the AI systems we build and encounter.

Modern AI researchers and practitioners use two primary classification systems, each serving different purposes:

- Behavioral-Cognitive Classification: Categorizing AI by how closely it mirrors human cognitive capabilities

- Capability Scope Classification: Categorizing AI by the breadth and power of its abilities

These aren’t competing systems but complementary frameworks. Just as a biologist might classify animals by their evolutionary relationships (phylogeny) or by their ecological roles (functional groups), we classify AI systems in multiple ways to understand different aspects of their nature and potential.

Why We Need Classification Systems

The historical perspectives we examined show how our understanding of AI has evolved, but they don’t give us practical tools for categorizing the AI systems we encounter daily. Consider these questions:

- How do we distinguish between Siri and GPT-4?

- What separates a chess-playing program from a self-driving car’s AI?

- How do we prepare for AI systems that don’t yet exist?

Classification systems help us:

- Understand current capabilities: What can today’s AI actually do?

- Identify limitations: What can’t current systems accomplish?

- Plan for the future: What developments should we anticipate?

- Make strategic decisions: Which type of AI suits our needs?

Let’s explore these two classification systems in detail.

Types of AI According to Similarity with Human Behaviors

Artificial Intelligence (AI) has evolved significantly, with various types of AI systems developed to mimic human intelligence and behavior to different degrees. This categorization of AI based on similarity to human behaviors provides a framework for understanding the capabilities and limitations of different AI systems.

From the simplest reactive machines to the theoretical concept of self-aware AI, each type represents a step forward in AI’s ability to process information, learn, and interact with its environment. This progression not only showcases the advancements in AI technology but also highlights the ongoing challenges and future possibilities in the field.

In this overview, we’ll explore four main types of AI based on their similarity to human behaviors:

- Reactive Machines

- Limited Memory

- Theory of Mind

- Self-Aware AI

Each type offers unique capabilities and applications, particularly in the field of marketing, where AI is increasingly being used to enhance customer experiences, optimize strategies, and drive business outcomes. Let’s delve into each type to understand their characteristics, applications, and implications for the future of AI and marketing.

Reactive Machines

Reactive machines are the simplest form of AI, designed with limited capabilities and functions. They have no internal memory and cannot learn from past experiences. These machines react only to immediate stimuli in their environment, making them task-specific. An algorithm developed for one purpose in a reactive machine cannot be adapted for any other task. Reactive machines lack memory-based functionality and do not have a representation of their environment. This means they cannot improve over time or remember goals needed to achieve an objective. Their knowledge is limited to a “stimulus-response” (S-R) model, which operates on the principle of “if X is sensed, then do Y.” Due to this, reactive machines are often referred to as “S-R agents.”

A basic email auto-responder AI reacts to certain triggers in customer emails. If a customer sends an email with the subject “Order Status” (stimulus), the AI responds with a preset message, such as “Thank you for your inquiry. Your order is being processed” (response). It does not remember the customer’s previous interactions or modify its responses—it operates purely based on the current message, using a simple stimulus-response framework.
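The auto-responder described above can be sketched as a simple lookup from stimulus to response. The fallback message and the function name are assumptions added for illustration; only the “Order Status” reply comes from the example.

```python
# Stimulus -> response table: "if X is sensed, then do Y".
RESPONSES = {
    "order status": "Thank you for your inquiry. Your order is being processed.",
}

# Hypothetical fallback for subjects the table does not cover.
DEFAULT_REPLY = "Thank you for your message. A team member will reply soon."

def auto_reply(subject):
    # The reply depends only on the current subject line; nothing is stored
    # between calls, so the same input always yields the same output.
    key = subject.strip().lower()
    return RESPONSES.get(key, DEFAULT_REPLY)

print(auto_reply("Order Status"))
```

Because the function keeps no state, calling it a hundred times with the same subject gives the same answer every time, which is the defining property of an S-R agent.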

Reactive machines act on their current input without learning from past events. If the input remains the same, their output will always be consistent. They are effective at performing specific tasks but cannot be easily adapted to other tasks or situations. They lack an understanding of the broader world and cannot handle tasks beyond their assigned functions.

Despite their limitations, reactive machines are still quite useful. One of their main advantages is predictability, which is valuable when safety and reliability are priorities in AI-powered machines. For example, IBM’s Deep Blue, the chess-playing computer that defeated world champion Garry Kasparov in 1997, is a well-known reactive machine. Deep Blue identified the pieces on the board, evaluated the possible next moves and their likely replies, and selected the best one. However, Deep Blue did not learn from past games; it considered only the current positions of the chess pieces and chose from the possible next moves. This example shows how reactive machines can achieve outstanding performance in specific functions even without learning or adapting.

Limitations and Applications in Marketing

Reactive machines can only perform the tasks they are programmed for and cannot handle complex, multi-step processes or adapt to new tasks. This makes them less flexible than more advanced AI systems. In marketing, reactive machines can be used for specific, repetitive tasks such as customer service chatbots that provide predefined answers to frequently asked questions.

The predictability of reactive machines is an advantage in scenarios where consistent performance is crucial. For example, in automated customer support, predictable responses ensure that customers receive accurate information. They are used in scenarios requiring high reliability, such as automated email responses, where consistency and correctness are more important than adaptability.

Strategic Implications

- Cheaper Implementation: Reactive machines are typically cheaper to implement and maintain due to their simplicity, making them ideal for straightforward tasks that do not require complex processing. This efficiency and cost-effectiveness can be particularly useful for small businesses or for specific functions within larger organizations where advanced AI might be overkill.

- Increased Consistency and Reliability: In terms of customer interaction and experience, reactive machines can provide consistent, reliable interactions but may fall short in delivering personalized or nuanced experiences. They are best suited for initial customer contact points or handling routine inquiries, while more advanced AI can be reserved for complex customer service needs.

Examples in Marketing

- In marketing, reactive machines find their niche in applications where specific and repetitive tasks are necessary. One example is automated customer support. Simple chatbots provide answers to common questions, like order status inquiries or store hours, without the ability to learn or adapt to new questions.

- Another example is programmatic advertising. Algorithms place ads based on predefined rules and criteria, such as displaying a specific ad when a certain keyword is detected on a webpage. These systems react to immediate data inputs (keywords) without learning from past ad placements.

Limited Memory

Limited memory AI is widely used today and underlies most machine learning systems. It refers to machines that can analyze data, learn from historical inputs or actions, and build knowledge from those experiences. Unlike reactive machines, limited memory systems not only react to the current environment but also improve their performance over time based on past data and experiences. This kind of system needs training data and observations to learn and make better decisions or predictions. Types of learning under limited memory include supervised learning, unsupervised learning, and reinforcement learning.

A common example of limited memory is image recognition. Systems are trained with thousands or even millions of labeled pictures to recognize and identify objects accurately. The more images the system is trained on, the better it becomes at recognizing new images. Another example is self-driving cars, which use recent data like speed, speed limits, and the distance from other vehicles to make real-time decisions and avoid accidents. Chatbots, which improve customer service by providing quick responses and guiding users to the correct services, also rely on limited memory.
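Learning from labeled examples can be illustrated with a very small classifier. The sketch below averages the feature vectors of each label and assigns a new item to the nearest average (a nearest-centroid classifier). The two-number "features" and the labels are made-up stand-ins for real image data, and production image recognition systems use far richer models.

```python
from collections import defaultdict

def train_centroids(examples):
    # Average the feature vectors seen for each label.
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total]
            for label, total in sums.items()}

def predict(centroids, features):
    # Assign the label whose centroid is closest (squared Euclidean distance).
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

# Hypothetical labeled training data: (features, label).
TRAINING = [
    ([0.9, 0.1], "cat"), ([0.8, 0.2], "cat"),
    ([0.1, 0.9], "dog"), ([0.2, 0.7], "dog"),
]

model = train_centroids(TRAINING)
label = predict(model, [0.7, 0.3])
print(label)
```

Unlike a reactive machine, the behavior here is determined by the historical examples: changing the training data changes the predictions.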

Despite its advantages over reactive machines, limited memory has some limitations. It requires vast amounts of data to learn even simple tasks. Moreover, it can only retain information for a short period. Unlike humans, these systems cannot store long-term memories, meaning they must be retrained from scratch if the environment changes. For instance, self-driving cars retain memory only for the duration of a journey and cannot build on past experiences to improve driving skills over time. Humans, by contrast, can transfer knowledge and skills from one area to another more easily.

Applications in Marketing

Among the various types of AI, limited memory is the most commonly used in marketing. It employs machine learning techniques like deep neural networks and ensemble trees to predict future demand and understand customer behavior. These techniques allow marketers to gather information efficiently and respond quickly to customer needs. Big data analytics, powered by limited memory’s data mining techniques, provides valuable consumer insights.

For example, chatbots and voice bots are transforming customer service by providing quick, accurate responses and guiding users to the correct services based on past interactions. Virtual assistants in self-driving cars use recent data to provide navigation assistance and improve the driving experience, showcasing how limited memory AI can enhance user interaction.

Strategic Implications

- Efficiency and Personalization: Limited memory systems allow for efficient data processing and personalized marketing efforts. By analyzing past consumer behavior, marketers can tailor their strategies to individual preferences, increasing engagement and conversion rates.

- Predictive Analytics: These systems are crucial for predictive analytics, helping businesses forecast trends and consumer demands. This enables companies to adjust their strategies proactively, ensuring they stay ahead of the competition.

Examples in Marketing

- Recommendation Systems: Online retailers like Amazon use limited memory AI to recommend products to customers based on their browsing history and previous purchases. This not only enhances the shopping experience but also boosts sales by suggesting relevant items.

- Dynamic Pricing: Airlines and hotels use machine learning algorithms to adjust prices in real-time based on demand, competition, and other factors. Limited memory AI helps analyze historical pricing data and current market conditions to set optimal prices.
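As a rough illustration of how purchase history can drive recommendations, the sketch below counts which items other customers bought together with the items a customer already owns. The data and function name are hypothetical, and this is not a description of how Amazon or any other retailer actually builds its system.

```python
from collections import Counter

def recommend(history, past_baskets, top_n=2):
    # Score items that co-occur with anything the customer already bought.
    owned = set(history)
    scores = Counter()
    for basket in past_baskets:
        items = set(basket)
        if owned & items:
            for item in items - owned:
                scores[item] += 1
    return [item for item, _ in scores.most_common(top_n)]

# Hypothetical purchase history from other customers.
PAST_BASKETS = [
    ["tent", "sleeping bag", "stove"],
    ["tent", "sleeping bag"],
    ["stove", "kettle"],
    ["novel", "bookmark"],
]

suggestions = recommend(["tent"], PAST_BASKETS)
print(suggestions)
```

The suggestions depend entirely on stored historical data, which is what distinguishes this kind of system from a rule-based reactive machine.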

Comparison with Reactive Machines

Limited memory AI systems are a step above reactive machines as they can learn from historical data and improve over time. While reactive machines are confined to predefined tasks and immediate stimuli, limited memory systems adapt and evolve, making them more versatile and useful in dynamic environments like marketing. Take, for example, chatbots from the two types of AI systems:

- Reactive Machine Chatbot: A reactive machine chatbot is a type of AI that responds to specific prompts or inputs but does not have the ability to store past interactions or learn from them. It operates purely based on predefined rules or responses, reacting to user inputs without any memory of previous interactions. For example, a simple FAQ bot that answers questions based on a fixed set of rules does not recall previous conversations. Reactive machines are limited to predefined tasks and immediate stimuli, making them less adaptable.

- Limited Memory Chatbot: In contrast, a limited memory chatbot can remember past interactions within a session or over a short period, using this memory to provide more contextually relevant responses. These chatbots store some information temporarily to improve user experience, such as remembering the user’s name or previous questions during a session. Although they do not have long-term memory capabilities, limited memory chatbots can adapt and evolve based on recent interaction history, making them more versatile and useful in dynamic environments like marketing. For example, a customer service bot that remembers details about your issue during a single session but forgets once the session ends demonstrates this capability.
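The contrast between the two chatbots can be sketched as follows. The first bot maps each message to a fixed answer; the second keeps a small session memory that is cleared when the session ends. The class names, phrases, and store hours are illustrative assumptions.

```python
class ReactiveBot:
    # Fixed answers; no state is kept between messages.
    ANSWERS = {"hours": "We are open 9am to 5pm."}

    def reply(self, message):
        return self.ANSWERS.get(message.strip().lower(),
                                "Sorry, I do not understand.")


class SessionBot:
    # Remembers details within one session and forgets them afterwards.
    def __init__(self):
        self.memory = {}

    def reply(self, message):
        text = message.strip()
        if text.lower().startswith("my name is "):
            self.memory["name"] = text[len("my name is "):]
            return f"Nice to meet you, {self.memory['name']}."
        if text.lower() == "hours":
            name = self.memory.get("name")
            prefix = f"{name}, we" if name else "We"
            return f"{prefix} are open 9am to 5pm."
        return "Sorry, I do not understand."

    def end_session(self):
        # Short-term memory is discarded when the session ends.
        self.memory.clear()


bot = SessionBot()
print(bot.reply("My name is Ana"))
print(bot.reply("hours"))
```

After `end_session()` is called, the session bot answers exactly like the reactive bot, mirroring the customer service example in which details are forgotten once the session ends.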

Theory of Mind

Theory of Mind (ToM) in AI is a stage where systems can interact with the world similarly to humans, considering needs, emotions, beliefs, and thought processes. Unlike other types of AI, ToM accounts for human emotions. Traditional machine learning can recognize human actions but struggles when people change their minds due to their mental states and thoughts. This gap highlighted the need for AI that understands how emotions influence human actions and thoughts, leading to the development of ToM.

ToM involves discussions about the mind and mental states. In psychology, ToM is the ability to attribute mental states to oneself and others. Premack and Woodruff introduced this concept in 1978 to explore how young children understand their own and others’ mental states. The theory revealed gaps in perceptions, knowledge, and intentions between ourselves and others, which affect social interactions. Human social skills develop by attributing our desires, beliefs, and goals to others. Based on this theory, AI researchers aim to create machines capable of socializing in ways that weren’t possible before.

The idea of socially intelligent robots is widely discussed. Most researchers agree that ToM should enable machines to gather meaningful information from their social environment in real-time and respond with human-like behavior. These robots should observe and learn from humans, similar to how children do. They should internalize information, ask questions, form opinions, and make decisions autonomously. Robots should also develop social cues and express emotions and desires through interactions without relying solely on pre-programmed vocabulary. Additionally, they should recognize these states in others and act accordingly.

Strategic Implications

- Enhanced Consumer Insights: ToM AI provides deeper insights into consumer behavior by analyzing not just actions but the underlying emotional and psychological drivers.

- Human-Like Interactions: The ability of ToM AI to mimic human social interactions makes it invaluable in roles requiring empathy and nuanced communication.

Applications in Marketing

In marketing, the development of ToM AI holds significant promise. By understanding and predicting human emotions, needs, and thought processes, these advanced AI systems can create more personalized and emotionally resonant marketing strategies.

- Personalized Customer Interactions: ToM AI can enhance customer service by tailoring interactions based on the customer’s emotional state and preferences. This leads to more meaningful and satisfying customer experiences.

Example: Virtual shopping assistants that can detect frustration or confusion in a customer’s voice and adjust their responses to provide better assistance.

- Emotion-Driven Marketing Campaigns: Understanding consumer emotions allows marketers to craft campaigns that resonate on a deeper emotional level, increasing engagement and effectiveness.

Example: Ad campaigns that adapt in real-time based on the viewer’s emotional reactions, leading to higher conversion rates.

Comparison with Other AI Types

- ToM AI vs. Reactive Machines: While reactive machines operate on predefined rules and stimuli-response mechanisms, ToM AI can understand and react to emotional and cognitive states, making it more sophisticated and versatile.

- ToM AI vs. Limited Memory AI: Limited memory AI systems learn from past data but do not account for real-time emotional and psychological states. ToM AI bridges this gap by incorporating an understanding of human mental states into its decision-making processes.

Future Potential

Social robots with human-like capabilities are in demand in areas like personal assistance, senior care, education, and public services. However, ToM AI is complex and still developing, with a long way to go before it is realized in a substantial and meaningful way. Sophia, a social humanoid robot, exemplifies efforts in this direction. Sophia can recognize individuals, follow faces, and socialize using computer vision, machine learning, animatronics, and natural language processing. However, some critics view Sophia as more of a puppet than a truly intelligent being. Despite the controversy, Sophia represents a step toward socially intelligent machines.

Understanding these aspects helps marketers leverage the strengths of ToM AI while being aware of its limitations, allowing for strategic deployment in marketing operations. This includes using AI to enhance customer experiences, predict market trends, and personalize marketing efforts, ultimately driving better business outcomes.

Self-Aware AI

The most advanced level of artificial intelligence (AI) is self-aware AI. This type of AI would evolve to the point where it can develop self-awareness, similar to humans. Self-awareness refers to being consciously aware of one’s internal states and interactions with others. In AI, a machine is considered self-aware if it can think, feel, and understand its own emotions. Such machines would not only interact with the world and learn from it but also form representations of themselves. While this is still a theoretical concept, many AI scientists believe that creating self-aware AI is important. Self-aware systems could better manage their goals and complex environments.

AI scientists have been striving to build highly autonomous systems that can manage themselves. These advanced machines would need to be able to run independently, adjust to different situations, and handle workloads efficiently. They should understand user demands and manage tasks without needing user intervention. Furthermore, autonomous systems would have “self-healing” abilities, allowing them to detect, diagnose, and repair problems automatically. They could protect themselves against attacks or failures, learn from these issues, and prevent future problems.

Achieving self-aware AI requires a diverse and comprehensive understanding of intelligence. Success in creating such a system involves integrating cognition (planning, understanding, and learning) with metacognition (control and monitoring of cognition) and intelligent behaviors. AI needs to be adaptable and capable of switching methods if one approach fails. Knowledge from various fields, including engineering, neuroscience, and psychology, is crucial for developing self-aware AI.

Future Potential

Self-aware AI, although still theoretical, represents the pinnacle of AI development with profound implications for various industries, including marketing. While true self-aware machines do not yet exist, ongoing research continues to push the boundaries of what AI can achieve. For instance, efforts to develop robots like Sophia aim to incorporate elements of self-awareness, empathy, and social interaction, albeit on a rudimentary level.

Understanding these aspects helps marketers leverage the strengths of advanced AI while being aware of its limitations, allowing for strategic deployment in marketing operations. This includes using AI to enhance customer experiences, predict market trends, and personalize marketing efforts, ultimately driving better business outcomes.

Types of AI Based on Technology Levels

While the behavioral-cognitive classification focuses on how AI processes information, this system—popularized by philosopher Nick Bostrom and others—categorizes AI by the breadth of its capabilities. It answers: “What range of tasks can this AI perform?”

In this overview, we’ll examine three main types of AI based on their technological sophistication:

- Artificial Narrow Intelligence (ANI)

- Artificial General Intelligence (AGI)

- Artificial Super Intelligence (ASI)

Each of these categories represents a significant leap in AI capabilities, with implications that extend across various industries, including marketing. As we explore each type, we’ll consider their characteristics, applications (both current and potential), and the impact they may have on business strategies and consumer interactions.

This alternative categorization complements our understanding of AI’s relationship to human behavior, offering a more complete picture of the AI landscape and its future possibilities. Let’s delve into each type to grasp the full spectrum of AI technology and its evolving role in shaping our world.

Artificial Narrow Intelligence (ANI)

Also known as “weak AI,” Artificial Narrow Intelligence (ANI) refers to systems designed to perform a specific task autonomously. For example, voice assistants like Siri and Alexa can recognize speech and respond with relevant information, but they can’t perform tasks outside their programmed functions. According to Kurzweil (2005), narrow AI consists of systems that carry out specific intelligent behaviors in particular contexts. The term “narrow” indicates its limited capabilities: if the input changes, the system needs reprogramming to maintain its performance (Goertzel, 2014). Unlike human intelligence, which can adapt to environmental changes, ANI can only handle the tasks it was built for. It mimics human behavior based on given goals, contexts, and data but cannot transfer knowledge between different tasks as humans can (Taylor et al., 2008). Reactive machines and systems with limited memory fall into this category. Most current AI systems are ANI.

Artificial General Intelligence (AGI)

Known as “strong AI,” AGI refers to machines that possess the ability to understand, learn, and apply knowledge in ways that are comparable to human intelligence. Unlike ANI, AGI systems could potentially perform any intellectual task a human can do. Imagine a robot that can not only converse with you but also understand emotions, solve complex problems, and adapt to new situations just like a human being. AGI is a concept with various definitions and no clear consensus: the term can refer to a system’s capabilities, the pursuit of creating such systems, or the study of their nature. Despite the lack of a precise definition, AGI is generally understood as possessing human-like intelligence.

Most researchers agree on several key characteristics of AGI:

- Versatility: AGI can achieve diverse goals and handle various tasks in complex and changing environments. For instance, an AGI system could excel at both driving a car and diagnosing medical conditions.

- Knowledge Generalization: AGI should be able to learn from previous experiences and apply that knowledge to different contexts. For example, an AGI that learns to play one video game should be able to transfer strategies and skills to another game.

- Autonomous Problem Solving: AGI is expected to solve problems independently, beyond human inputs and expectations. This means an AGI could potentially discover new scientific theories or invent novel technologies without direct human guidance.

These attributes suggest that AGI would be capable of learning, understanding, and performing intellectual tasks much like a human. The goal is to build a system with a broad and adaptable scope of intelligence.

The idea of AGI aligns with the early ambitions of AI’s founding figures like John McCarthy, Marvin Minsky, Allen Newell, and Herbert A. Simon, who envisioned creating systems comparable to the human brain. However, as progress stalled, researchers shifted focus to more achievable and specific problems, moving away from the grand vision of AGI.

Currently, achieving AGI is challenging due to limitations in techniques and resources. Despite skepticism, many scientists remain hopeful about the possibility of AGI. Researchers like Wang and Goertzel have indicated that with continued advancements, an AGI system could become a reality in the future.

Future Potential

Artificial General Intelligence (AGI), although still theoretical, would have profound implications for various industries, including marketing. While true AGI has not yet been realized, ongoing research continues to push the boundaries of what AI can achieve. For instance, DeepMind’s AlphaZero, the successor to AlphaGo, learned Go, chess, and shogi with a single algorithm. This is an early sign of more generalized learning and problem-solving, although such systems remain narrow AI.

Understanding these aspects helps marketers prepare for the transformative potential of AGI while being aware of its limitations, allowing for strategic planning and adaptation to future advancements in AI technology. This includes leveraging AGI to enhance consumer insights, personalize marketing efforts, and drive innovation in marketing strategies, ultimately leading to more effective and efficient marketing operations.

Artificial Super Intelligence (ASI)

ASI is another hypothetical form of AI that surpasses human intelligence in all aspects, including creativity, problem-solving, and emotional intelligence. This means that ASI would not only match the abilities of Artificial General Intelligence (AGI) but also significantly outperform human beings in tasks due to its superior memory, efficient data processing, and advanced analytical skills. Unlike the human brain, which is limited by biological constraints, ASI would operate on a computer substrate, offering benefits like modularity, transparency in operations, and superior computation abilities.

ASI, also known as transhuman AI, represents a stage where humans can transcend their natural limitations through AI technologies. Many scientists believe ASI could solve various human problems, including aging and incurable diseases. Some have suggested that ASI would be more efficient, unbiased, resourceful, and continuously available, potentially even making human immortality achievable by transferring human consciousness into computer systems.

However, there are significant social and ethical concerns regarding ASI. Some have emphasized that superintelligence should be developed for the benefit of all humanity and aligned with shared ethical principles. To mitigate fears of autonomous weapons and other potential dangers, researchers propose defining and embedding minimal standards of ethics into superintelligent AI systems. This includes setting goals that ensure the AI acts “friendly” and does not harm humans. For example, an AI could be given a set of ethical guidelines based on historical figures known for their morality, instructing it to avoid actions these individuals would disapprove of.

In essence, while ASI holds the promise of solving many human problems, it also presents potential risks. It is up to humanity to ensure that these risks are managed through ethical guidelines and common sense, maintaining control over the development and application of superintelligent AI.

How Does AI Work?

At its core, AI revolves around the use of data to learn and improve. By analyzing vast amounts of data, AI systems can identify patterns and relationships that might be difficult for humans to discern.

The learning process of AI typically involves algorithms, which are sets of rules or instructions that guide the AI in analyzing data and making decisions. In machine learning, a widely used branch of AI, algorithms are trained on datasets that can be either labeled (where the data is tagged with the correct answer) or unlabeled (where the AI finds patterns without explicit guidance). This training enables the AI to make predictions or categorize new information.
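To make the difference between labeled and unlabeled training concrete, here is a minimal sketch in Python. It is an illustration under simple assumptions, not how any production system is built: the house sizes, prices, and spending figures are invented, and the helper names `fit_line` and `two_means` are hypothetical. The first function learns from labeled pairs (each input comes with its correct answer), while the second finds two groups in unlabeled numbers with no answers provided.

```python
from statistics import mean


def fit_line(xs, ys):
    """Learn from labeled pairs: least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    return slope, y_bar - slope * x_bar


def two_means(values, iterations=10):
    """Learn from unlabeled numbers: split them into two groups (k-means, k = 2).

    Assumes the values contain at least two distinct numbers.
    """
    low, high = min(values), max(values)  # initial guesses for the group centers
    for _ in range(iterations):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(group_low), mean(group_high)  # move centers to group averages
    return sorted(group_low), sorted(group_high)


# Labeled data (invented): house sizes in square meters, tagged with prices in thousands.
sizes = [50, 80, 100, 120]
prices = [150, 240, 300, 360]
slope, intercept = fit_line(sizes, prices)
predicted = slope * 90 + intercept  # prediction for a new, unseen 90 m² house

# Unlabeled data (invented): monthly spend per customer, with no categories given.
spend = [12, 15, 14, 95, 102, 99]
groups = two_means(spend)
```

In the labeled case the model can be checked against known answers; in the unlabeled case the algorithm only proposes a grouping, and a person still has to decide what the groups mean.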

Deep learning, a more advanced subset of machine learning, uses artificial neural networks composed of multiple layers. These neural networks function in a way that resembles the human brain, allowing the AI to process information at different levels of abstraction. This layered approach helps AI systems learn complex tasks, such as recognizing objects in images, understanding spoken language, and translating text between languages.
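The idea of stacked layers can be sketched with a very small network. The example below is only an illustration: the weights are set by hand (an assumption made for clarity), whereas a real deep learning system would learn them from data, and the names `layer` and `xor_network` are hypothetical. It shows how a hidden layer computes intermediate features that the output layer then combines to solve a task (exclusive-or) that a single layer of this kind cannot.

```python
def step(x):
    """Activation function: the neuron fires (1) only if its input is positive."""
    return 1 if x > 0 else 0


def layer(inputs, weights, biases, activation):
    """One fully connected layer: a weighted sum per neuron, then the activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hidden layer (hand-set): neuron 1 detects "a OR b", neuron 2 detects "a AND b".
HIDDEN_WEIGHTS = [[1, 1], [1, 1]]
HIDDEN_BIASES = [-0.5, -1.5]

# Output layer (hand-set): fires when OR is true but AND is not, which is XOR.
OUTPUT_WEIGHTS = [[1, -1]]
OUTPUT_BIASES = [-0.5]


def xor_network(a, b):
    """Pass the inputs through the hidden layer, then through the output layer."""
    hidden = layer([a, b], HIDDEN_WEIGHTS, HIDDEN_BIASES, step)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, step)[0]


results = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
```

Each layer works at a different level of abstraction: the hidden layer turns raw inputs into simple features, and the output layer reasons over those features rather than over the raw inputs.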

As AI systems are exposed to more data and experience, they continuously learn and adapt, becoming better at the tasks they are designed to perform. For instance, AI is now used in applications ranging from personalized marketing recommendations on platforms like Amazon and Netflix to advanced language translation services such as Google Translate.

Basic Concepts of AI

Algorithms

In computer science, algorithms are a series of instructions or steps that computer programs follow to complete a specific task, such as turning data into useful information. An algorithm is any well-defined procedure that takes some values as input and produces some values as output. For example, an algorithm could be used to sort a list of numbers or find the shortest path between two points on a map.

A great real-life example of an algorithm is Google Maps. When you enter a starting point and destination, Google Maps uses algorithms to calculate the best route. Routing systems of this kind build on shortest-path methods such as Dijkstra’s algorithm and its variations, which find the shortest or fastest path between two points on a map.
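As a rough illustration of the shortest-path idea, the sketch below implements a basic version of Dijkstra’s algorithm in Python. The road network, place names, and travel times are invented for the example, and the function name `shortest_path` is hypothetical. It is not a description of how any mapping product is actually implemented.

```python
import heapq


def shortest_path(graph, start, goal):
    """Return (total_cost, path) for the cheapest route, or (inf, []) if none exists."""
    queue = [(0, start, [start])]  # priority queue ordered by cost so far
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)  # cheapest unexplored route first
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []


# Invented road network: travel time in minutes between neighboring places.
ROADS = {
    "Home": {"Mall": 4, "Park": 2},
    "Park": {"Mall": 1, "Office": 7},
    "Mall": {"Office": 3},
    "Office": {},
}

cost, route = shortest_path(ROADS, "Home", "Office")
```

Here the direct road from Home to Mall takes 4 minutes, but going through the Park takes only 3, so the algorithm returns the route Home, Park, Mall, Office with a total of 6 minutes.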

Machine Learning (ML)

Machine learning is a vast and rapidly evolving field where computers learn from data to make decisions with minimal human intervention. ML systems can perform a wide range of complex tasks, such as building search engines, filtering content on social media, making recommendations on websites, predicting traffic patterns, trading stocks, and diagnosing medical conditions.

There are six main types of ML tasks:

- Supervised Learning: In supervised learning, a model is trained using a labeled dataset that includes both input variables (X) and output variables (Y). The goal is to learn a function that can predict the output based on new inputs accurately.

Example: Predicting house prices based on features like size, location, and number of bedrooms.

- Unsupervised Learning: In unsupervised learning, a model is trained using unlabeled datasets that contain only input variables, and the goal is to find hidden patterns or extract useful information. Common tasks include clustering, where data points are grouped based on similarity, and dimensionality reduction, where high-dimensional data is simplified while retaining essential information.

Clustering example: Spotify uses clustering to group listeners based on their music preferences and listening habits. These clusters help create personalized playlists like “Discover Weekly” by grouping users with similar tastes.

Dimensionality reduction example: Social media platforms like Facebook use dimensionality reduction in facial recognition, simplifying facial image data by reducing thousands of pixel values to key facial features. This allows for faster and more accurate matching of faces in photos.

- Semi-Supervised Learning: Semi-supervised learning combines a small amount of labeled data with a large amount of unlabeled data to improve model accuracy. This is particularly useful when labeled data is expensive or time-consuming to obtain.

Example: Google Translate uses semi-supervised learning to improve the quality of its translations. Initially, a small dataset of human-translated sentence pairs (labeled data) is used to train the model. This is combined with a large amount of monolingual text in different languages (unlabeled data) to further refine the language model. By leveraging the patterns and structures found in the unlabeled text, the system can learn to improve translations for languages with limited labeled datasets.

- Transfer Learning: Transfer learning involves taking an existing model trained on one task and adapting it for a different but related task. This is useful when there is limited data for the new task but ample data for a similar task.

Example: Google Health’s AI system for breast cancer detection leveraged transfer learning by starting with a pre-trained model built on non-medical image data and fine-tuning it with a smaller dataset of annotated mammograms. This approach allowed the system to identify subtle patterns in medical images with high accuracy, despite the relatively limited availability of labeled medical data.

- Active Learning: In active learning, the model actively selects the most informative data for training to optimize learning with minimal data acquisition. This approach is ideal when gathering new data is costly or time-consuming. The goal is to maximize predictive accuracy with the minimum amount of data.

Example: Pfizer might train a machine learning model on a small set of known chemical compounds labeled as effective or ineffective for a specific disease. The active learning algorithm then identifies the most uncertain or informative compounds from a large pool of unlabeled candidates. These selected compounds are tested in the lab, and the results are fed back into the model to improve its predictions iteratively.

- Reinforcement Learning: In reinforcement learning, an agent interacts with its environment, taking actions and receiving feedback to optimize its performance. The agent learns to achieve a goal by maximizing cumulative rewards. This approach is used in applications like autonomous vehicles, where the system must navigate and make decisions based on real-time data, and in game playing, where the agent learns strategies to win games.

Example: Waymo, a self-driving car company, uses reinforcement learning to train its autonomous vehicles to navigate roads safely and efficiently. The vehicle (agent) interacts with its environment (real-world or simulated driving conditions) by taking actions such as steering, accelerating, or braking.

The system receives feedback in the form of rewards or penalties:

- Rewards for successful actions (e.g., maintaining a safe distance from other vehicles, following traffic rules).

- Penalties for undesirable outcomes (e.g., near misses, collisions, or breaking traffic laws).

Over time, the reinforcement learning model learns to optimize its behavior by maximizing cumulative rewards. This enables the vehicle to make complex real-time decisions, such as merging into traffic, navigating intersections, and handling unexpected obstacles, all while ensuring passenger safety and comfort.
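The reward-driven loop described above can be sketched with tabular Q-learning, one common reinforcement learning method. This is a toy illustration and not a description of how any self-driving system works: the five-position road, the reward of 1 for reaching the goal, and the parameter values are all assumptions chosen to keep the example small, and the names `train` and `greedy_policy` are hypothetical.

```python
import random

N_STATES = 5          # positions 0..4 on a tiny one-dimensional road
GOAL = N_STATES - 1   # reaching the last position ends the episode
ACTIONS = (-1, +1)    # step left or step right


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error and return the Q-table."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}

    def best_actions(state):
        top = max(q[(state, a)] for a in ACTIONS)
        return [a for a in ACTIONS if q[(state, a)] == top]

    for _ in range(episodes):
        state = 0
        while state != GOAL:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)               # explore: try something random
            else:
                action = rng.choice(best_actions(state))   # exploit: use what was learned
            next_state = min(max(state + action, 0), GOAL)  # walls at both ends
            reward = 1.0 if next_state == GOAL else 0.0
            future = max(q[(next_state, a)] for a in ACTIONS)
            # Nudge the estimate toward reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """For each non-goal position, pick the action with the highest learned value."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]


policy = greedy_policy(train())
```

After training, the learned policy should choose to move right (`+1`) from every position, because that is the quickest way to collect the reward. The agent was never told this rule; it emerged from repeated feedback.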

Deep Learning

Deep learning is a subset of ML that uses artificial neural networks with many layers (hence “deep”) to process large amounts of data and learn complex patterns. These networks are designed to mimic how the human brain works, with layers of interconnected nodes (neurons) that process information. Deep learning can perform tasks like image recognition, speech recognition, and natural language processing.

- Image Recognition: Deep learning systems can identify objects, people, places, and other elements in images. For example, Facebook’s automatic friend tagging uses deep learning to suggest tags for people in photos by recognizing their faces.

- Speech Recognition: Systems like Google Voice, Siri, and Alexa use deep learning to understand and respond to spoken commands. They convert spoken language into text and process the information to generate responses, enabling tasks like voice search, setting reminders, and controlling smart home devices.

Natural Language Processing (NLP)

NLP combines AI and linguistics to enable computers to understand and generate human language. NLP systems can perform tasks such as automatic language translation, information retrieval, text summarization, question answering, and creating chatbots. For example, Google Translate uses NLP to translate text between languages, and chatbots use NLP to interact with users and answer their questions. A major challenge in NLP is the lack of resources and tools for many low-resource languages spoken by millions of people, while most resources are focused on high-resource languages like English and Mandarin.

For example, ChatGPT uses NLP to analyze and generate human-like responses to user queries, making it effective for tasks like customer support and content creation. It processes input text, identifies intent, and crafts relevant answers, showcasing the power of conversational AI.
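The step of identifying intent from input text can be illustrated with a deliberately simple keyword-overlap sketch. This is far cruder than the large language models behind tools like ChatGPT, which do not work this way. The intents, example phrases, and function names below are all hypothetical and exist only to show the basic pipeline of splitting text into words and matching it against known categories.

```python
import re
from collections import Counter

# Hypothetical intents, each described by a few typical words.
INTENT_EXAMPLES = {
    "order_status": "where is my order package delivery tracking",
    "refund": "refund money back return item",
    "greeting": "hello hi hey good morning",
}


def tokenize(text):
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())


def detect_intent(message):
    """Score each intent by how many of its typical words appear in the message."""
    words = Counter(tokenize(message))
    scores = {
        intent: sum(words[w] for w in set(tokenize(example)))
        for intent, example in INTENT_EXAMPLES.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


first = detect_intent("Hi, where is my package?")
second = detect_intent("I want my money back")
third = detect_intent("Tell me a joke")
```

The first message matches four order-status words and only one greeting word, so it is labeled `order_status`; the second is labeled `refund`; the third matches nothing and falls back to `unknown`. Modern systems replace this word counting with learned representations of meaning, but the flow of text in and intent out is the same.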

Robotics

AI has been closely connected with robotics since its early days. The goal is not only to build robots that can act like humans but also to think like them. Robotics involves the intelligent connection of perception to action. Modern robots, integrated with AI, can recognize, reason, and act based on sensor inputs. These intelligent robots can perform tasks such as navigating spaces, avoiding obstacles, and manipulating objects. For example, robots in manufacturing can assemble products with precision, and autonomous drones can navigate and deliver packages. Future developments aim to create robots that can operate in a wide range of challenging environments and assist in various tasks, potentially even serving as companions or aides for humans.

With the integration of AI, robots are no longer just machines that perform manual tasks. They are now equipped with sophisticated software that allows them to make decisions and take actions based on their surroundings. For instance, self-driving cars use a combination of sensors, cameras, and AI algorithms to navigate roads and avoid obstacles. AI-powered robots can also learn from their experiences, improving their performance over time.
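The connection of perception to action is often described as a sense, decide, act loop. The sketch below shows that loop for an imaginary robot on a two-lane track, under strong simplifying assumptions: the track layout is invented, the robot only senses the single cell ahead, its only rule is to switch lanes when blocked, and both lanes are never blocked at the same point. All function names are hypothetical.

```python
def sense(track, lane, position):
    """Perception: report whether the cell directly ahead is blocked."""
    return track[lane][position + 1] == "#"


def decide(obstacle_ahead):
    """Reasoning: a single rule that maps the sensor reading to an action."""
    return "switch_lane" if obstacle_ahead else "forward"


def act(action, lane, position):
    """Action: change lane in place, or advance one cell in the current lane."""
    if action == "switch_lane":
        return 1 - lane, position
    return lane, position + 1


def drive(track, max_steps=50):
    """Repeat sense, decide, act until the end of the track (or the step limit)."""
    lane, position = 0, 0
    trace = [(lane, position)]
    for _ in range(max_steps):
        if position == len(track[0]) - 1:
            break
        action = decide(sense(track, lane, position))
        lane, position = act(action, lane, position)
        trace.append((lane, position))
    return trace


# Invented track: "." is free road and "#" is an obstacle.
TRACK = [
    "..#..",
    "....#",
]

trace = drive(TRACK)
```

The robot starts in lane 0, swerves into lane 1 to avoid the first obstacle, then returns to lane 0 to avoid the second. Real robots replace each of the three functions with far richer components, such as camera and lidar processing for sensing and learned models for deciding.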

Key Terms

| Category | Term | Definition |
|---|---|---|
| Core Concepts | Artificial Intelligence (AI) | The field of computer science focused on creating systems capable of performing tasks that would require intelligence if done by humans, such as problem-solving, decision-making, and language understanding. |
| | Algorithms | A well-defined set of step-by-step rules or instructions that a computer follows to process input data and produce an output or solution to a specific problem. |
| | Machine Learning (ML) | A branch of AI that enables systems to improve their performance on tasks by learning patterns and relationships from data rather than being explicitly programmed with fixed rules. |
| | Deep Learning (DL) | A specialized subset of machine learning that uses multi-layered artificial neural networks to automatically learn hierarchical representations of data, allowing for the detection of highly complex patterns. |
| | Natural Language Processing (NLP) | The field of AI that combines linguistics and computer science to enable machines to understand, interpret, and generate human language in a meaningful and useful way. |
| | Robotics | The integration of AI with physical machines designed to perceive their environment, process information, and take actions, enabling them to perform tasks autonomously or semi-autonomously. |
| Types of Machine Learning | Supervised Learning | A machine learning approach where models are trained using labeled datasets that pair inputs with correct outputs, enabling the system to make accurate predictions on new data. |
| | Unsupervised Learning | A machine learning approach that analyzes unlabeled datasets to discover hidden structures, patterns, or groupings within the data without explicit outcome labels. |
| | Reinforcement Learning | A machine learning paradigm where an agent learns by interacting with its environment, receiving feedback in the form of rewards or penalties, and optimizing actions to maximize long-term success. |
| Types of AI (by Behavior) | Reactive Machines | The simplest form of AI, designed to respond to immediate inputs or stimuli without memory of past experiences or the ability to adapt over time. |
| | Limited Memory | AI systems that can make decisions by learning from historical data and recent experiences, but with memory that is temporary and not retained for long-term use. |
| | Theory of Mind (ToM) | A projected stage of AI development in which machines would be able to recognize, understand, and respond to human emotions, beliefs, and intentions, enabling more sophisticated social interactions. |
| | Self-Aware AI | A hypothetical stage of AI in which systems would achieve consciousness, self-recognition, and awareness of their internal states, allowing for autonomous decision-making at a human-like or higher level. |
| Types of AI (by Technology) | Artificial Narrow Intelligence (ANI) | AI systems designed and trained for a specific, limited task, demonstrating competence within that domain but unable to generalize beyond it. |
| | Artificial General Intelligence (AGI) | A theoretical form of AI that would possess human-like intelligence, enabling it to understand, learn, and apply knowledge flexibly across a wide variety of tasks and contexts. |
| | Artificial Superintelligence (ASI) | A speculative form of AI that would surpass human intelligence in all areas, including reasoning, creativity, problem-solving, and emotional intelligence, with profound societal implications. |

Key Takeaways

History and Evolution of AI:

- AI research began with Alan Turing’s question of machine intelligence

- Key milestones: Turing test (1950), AI term coined (1955), Logic Theorist development

- Evolution from rule-based systems to machine learning to deep learning

- Current focus on neural networks and complex pattern recognition

Understanding AI (What is AI?):

- Technological Perspective:
  - Focus on computational problem-solving
  - Emphasis on data processing and goal-driven actions
  - Flexibility in handling uncertain information

- Psychological Perspective:
  - Emulation of human cognitive tasks
  - Focus on perception, memory, reasoning, and learning
  - Applications in object recognition and decision-making

- Cognitive vs. Behavioral Perspective:
  - Four approaches: Acting/Thinking Humanly/Rationally
  - Balance between human-like behavior and rational decision-making
  - Recognition of human cognitive limitations

How AI Works:

- Core focus on data analysis and pattern recognition

- Learning through algorithmic processing

- Use of labeled and unlabeled datasets

- Continuous adaptation and improvement through experience

Think Deeper:

- How does the progression from reactive machines to self-aware AI reflect our understanding of human intelligence?

- What are the implications of developing AI systems with emotional understanding capabilities?

- How might the evolution from ANI to ASI impact society and human-machine interaction?