2 Ethics in AI and marketing

AI Ethics

This text has been adapted from Floridi et al. (2021) and Hermann (2021). All images in this chapter come from this article.

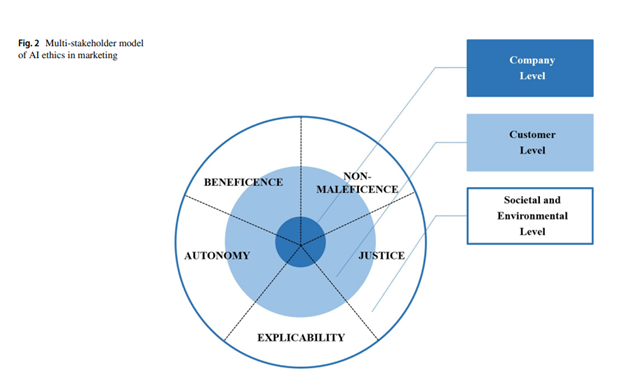

The discussion about the moral and ethical implications of AI has been ongoing since the 1960s. The rapid advancement of Artificial Intelligence (AI) has revolutionized the field of marketing, offering unprecedented opportunities for personalization, efficiency, and customer engagement. However, with these technological strides come significant ethical considerations that demand our attention. This chapter delves into the complex ethical landscape of AI in marketing, examining the intricate balance between innovation and responsibility. We will explore five fundamental ethical principles – beneficence, non-maleficence, autonomy, justice, and explicability – and how they apply to AI-driven marketing strategies. As we navigate through the potential benefits and risks, we’ll uncover the tensions between individual, corporate, and societal interests. From privacy concerns and environmental impacts to issues of bias and transparency, this chapter aims to provide a comprehensive understanding of the ethical challenges faced by marketers, developers, and policymakers in the age of AI. By critically examining these issues, we lay the groundwork for responsible AI implementation in marketing, ensuring that as we harness the power of this technology, we do so in a manner that respects ethical boundaries and promotes the greater good.

We explore the ethical implications and concerns of using AI in marketing from multiple perspectives: those of companies, customers, and society at large, with environmental impacts treated as part of the societal level. This approach allows us to evaluate the validity and applicability of ethical principles across different stakeholder levels and to identify any tensions that might arise due to differing stakeholder interests.

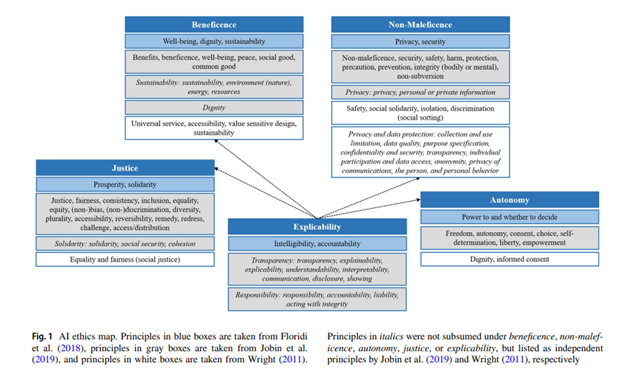

Floridi and colleagues have condensed ethical principles into five key areas: beneficence, non-maleficence, autonomy, justice, and explicability.

Beneficence involves promoting well-being and the common good, while non-maleficence focuses on preventing harm, whether accidental or intentional. These principles are not opposites but coexist to ensure AI benefits society while avoiding harm.

Autonomy concerns the balance between human and AI decision-making power, ensuring people can make choices freely without coercion.

Justice involves fairness, avoiding biases, and addressing past inequalities. It also relates to sharing AI’s benefits and fostering solidarity.

Explicability means understanding how AI works and who is responsible for its actions. This principle supports and enables the other ethical guidelines by ensuring AI systems are intelligible and accountable.

The need for explicability grows as AI systems become more complex and opaque. Understanding how AI functions and assigning responsibility is crucial for evaluating AI’s benefits and risks, deciding on human and AI agency, and ensuring accountability for any failures or biases.

We now discuss each in depth.

Beneficence

The principle of beneficence—doing good and promoting well-being—is central to ethical considerations in AI-powered marketing. This principle raises important questions about who benefits from AI marketing technologies and whether these benefits can be reconciled across different levels of impact. Let’s examine how beneficence manifests in AI marketing and the complex ethical considerations it presents.

- The growing use of AI technologies such as chatbots raises concerns about their beneficence, as their increasing carbon emissions harm the environment.

Benefits at the Individual Level

AI enables unprecedented personalization in marketing through sophisticated data analysis and prediction. Marketing teams can now analyze customers’ digital footprints—including social media activity and smartphone data—to understand psychological traits and preferences. This capability allows companies to create highly personalized advertising, product recommendations, and marketing messages that resonate with individual customers.

Recommendation systems represent a prime example of AI beneficence in marketing. These systems analyze customers’ past behaviors and preferences, along with data from similar customers, to predict what products or services an individual might value. This benefits customers by filtering vast amounts of information and streamlining purchase decisions. For companies, these systems help convert browsers to buyers and facilitate cross-selling opportunities.
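To make the mechanism concrete, the following is a minimal sketch of user-based collaborative filtering, one common way of using "data from similar customers". It is not the method of any particular platform. The customers, products, and ratings are invented for illustration, and the function names are hypothetical.

```python
from math import sqrt

# Hypothetical ratings (1-5); all customers and products are invented.
ratings = {
    "ana":   {"tent": 5, "stove": 4, "boots": 5},
    "ben":   {"tent": 5, "stove": 5, "lantern": 4},
    "chloe": {"novel": 5, "lamp": 4, "boots": 1},
}

def cosine_similarity(a, b):
    """Cosine similarity between two customers; unrated products count as 0."""
    dot = sum(a[p] * b[p] for p in set(a) & set(b))
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def recommend(target, ratings, top_n=1):
    """Rank products the target has not rated by similarity-weighted ratings."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == target:
            continue
        sim = cosine_similarity(ratings[target], their_ratings)
        for product, rating in their_ratings.items():
            if product not in ratings[target]:
                # Ratings from more similar customers count for more.
                scores[product] = scores.get(product, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("ana", ratings))  # ben is most similar to ana, so his lantern ranks first
```

Note that the recommendation for one customer is driven entirely by what other, similar customers did. This is the kind of indirect influence that customers may not be aware of.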

The Dual Nature of Benefits

The principle of beneficence in AI marketing manifests as a two-way exchange of value. Companies gain through increased sales efficiency and better customer targeting, while customers receive more relevant recommendations and a more personalized shopping experience. This mutual benefit suggests that AI marketing fulfills a fundamental aspect of beneficence: it serves a clear purpose and provides tangible advantages to both parties.

However, the concept of “good” in beneficence isn’t always straightforward. For instance, when recommendation systems use patterns from similar customers to influence individual choices, they create a form of indirect social influence. If customers fully understood these underlying mechanisms, their perception of the benefits might change. The “black box” nature of many AI systems can obscure this understanding, raising questions about whether true beneficence requires transparency.

The Challenge of Competing Benefits

A critical ethical challenge emerges when considering beneficence across different levels of impact. What benefits individuals may not benefit society or the environment as a whole. AI marketing systems typically optimize for individual customer satisfaction and increased consumption, which can conflict with broader societal goals.

For example, while AI-powered marketing might benefit individual customers through personalized shopping experiences, it can simultaneously contribute to environmental challenges through increased consumption. E-commerce platforms using AI recommendations may drive sales efficiently, but this efficiency comes with environmental costs in terms of packaging waste, transportation emissions, and energy consumption.

The Environmental Dimension

The environmental impact of AI-driven consumption presents a particular challenge to the principle of beneficence. While meeting individual consumer needs provides clear benefits, the resulting resource depletion and environmental damage raise questions about broader societal good. Online retail, powered by AI recommendations, often has a larger ecological footprint than traditional shopping due to factors like individual packaging and last-mile delivery.

Additionally, the technology itself consumes significant energy in its development and operation. These environmental costs must be considered when evaluating whether AI marketing truly fulfills the principle of beneficence on a societal level.

Balancing Individual and Collective Benefits

The tension between individual and collective benefits creates an ethical dilemma in AI marketing. While AI systems excel at maximizing individual utility through targeted recommendations based on past preferences (exploitation), they might better serve society by exploring and promoting more sustainable alternatives (exploration). This raises the question of whether true beneficence should prioritize immediate individual benefits or longer-term societal good.
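The exploitation versus exploration trade-off can be sketched with an epsilon-greedy rule, a standard technique from the bandit literature. This is an illustrative assumption, not a description of how any real marketing system works. The appeal scores, the `sustainable` labels, and the `epsilon` value are all hypothetical.

```python
import random

def choose_recommendation(predicted_appeal, sustainable, epsilon=0.2, rng=random):
    """Epsilon-greedy choice between exploitation and exploration.

    predicted_appeal: dict mapping product -> predicted appeal (hypothetical scores)
    sustainable: set of products flagged as sustainable (hypothetical labels)
    epsilon: share of recommendations reserved for exploration
    """
    if sustainable and rng.random() < epsilon:
        # Explore: surface a sustainable alternative regardless of predicted appeal.
        return rng.choice(sorted(sustainable))
    # Exploit: show the product with the highest predicted appeal.
    return max(predicted_appeal, key=predicted_appeal.get)

appeal = {"fast_fashion_jacket": 0.9, "recycled_jacket": 0.4, "repair_kit": 0.2}
green = {"recycled_jacket", "repair_kit"}

rng = random.Random(0)  # seeded so repeated runs give the same picks
picks = [choose_recommendation(appeal, green, epsilon=0.2, rng=rng) for _ in range(1000)]
print({product: picks.count(product) for product in appeal})
```

With `epsilon=0` the system only exploits past preferences. Raising `epsilon` trades some short-term individual utility for exposure to more sustainable options, which is exactly the ethical choice discussed above.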

Future Considerations

Understanding beneficence in AI marketing requires acknowledging these multiple layers of impact. While the technology clearly provides benefits at the individual level, its broader implications for society and the environment complicate the ethical equation. Moving forward, the challenge lies in developing AI marketing systems that can balance individual benefits with collective good, perhaps by incorporating sustainability and social responsibility into their optimization criteria.

The principle of beneficence thus demands a holistic view that considers both immediate individual benefits and broader societal impacts. This understanding can help guide the development of more ethical AI marketing practices that truly serve the greater good while maintaining their value to individuals and businesses.

Non-Maleficence

The principle of non-maleficence—avoiding harm—presents critical considerations in AI-powered marketing. While AI marketing systems can offer significant benefits, they also carry potential risks that must be carefully managed. Understanding these risks helps organizations implement AI marketing technologies more responsibly.

- This article highlights the principle of non-maleficence, showing how prioritizing pedestrian safety in self-driving cars reflects the need to manage risks responsibly in AI-powered technologies

The Relationship with Beneficence

Non-maleficence and beneficence are closely linked when considering AI marketing’s broader impacts. From an environmental and societal perspective, these principles often align—when AI-driven marketing fails to promote environmental good, it simultaneously risks causing environmental harm. However, at the individual and company level, the relationship becomes more complex. AI applications can simultaneously benefit users while potentially causing other forms of harm, creating an ethical tension that requires careful navigation.

Privacy and Data Protection Concerns

A primary consideration in non-maleficence is the protection of personal privacy and data security. Privacy risks in AI marketing can emerge in three key ways:

First, during data collection, companies might gather information without proper informed consent from customers. Second, stored data might be compromised through breaches or de-anonymization. Third, AI systems can draw potentially invasive inferences both directly from individual customer data and indirectly through interaction data with other customers, particularly in collaborative filtering systems.

The scale of data collection in AI marketing amplifies these privacy concerns. While more data generally leads to better predictions and personalization, it also increases privacy risks. This creates a fundamental tension between marketing effectiveness and privacy protection. Regulatory frameworks, such as the European Union’s General Data Protection Regulation (GDPR), attempt to address these concerns by requiring proactive privacy protection measures in the design and implementation of AI systems.

- The Target pregnancy scandal serves as a stark example of how companies have used machine learning technologies in marketing to invasively predict personal information, raising significant concerns about consumer privacy and ethical data usage.

Accuracy and Data Quality Issues

The non-maleficence principle also encompasses the importance of accuracy and data quality in AI marketing systems. These systems can only be as reliable as their underlying data. When input data contains biases, inaccuracies, or errors, it can lead to flawed conclusions and potentially harmful decisions.

A particular concern arises when marketing decisions are based on correlations found in large datasets without establishing causality. Making individual-level decisions based on population-level patterns can be problematic and potentially harmful to customers.

The Challenge of Algorithm Reliance

The relationship between customers and algorithmic recommendations presents another area where the principle of non-maleficence comes into play. Two key challenges emerge:

- Algorithm Overreliance: When customers depend too heavily on AI recommendations, they might accept inferior suggestions that diminish their well-being. This represents a “false positive” scenario where customers trust and follow recommendations that don’t truly serve their interests.

- Algorithm Aversion: Conversely, customers might reject valid AI recommendations due to skepticism or distrust, potentially missing out on beneficial opportunities. This “false negative” scenario can impact both customer well-being and company objectives.

Pricing and Competition Concerns

AI systems used in pricing decisions can also raise non-maleficence concerns. Without proper oversight, pricing algorithms might engage in tacit collusion or misestimate customers’ price sensitivity, leading to artificially inflated prices. This can not only harm individual customers but also contribute to broader economic problems through higher price levels across markets.

The Black Box Challenge

The complexity of AI systems—often referred to as the “black box” problem—makes it particularly difficult to assess potential harms. Both customers and companies may struggle to understand the extent of data capture, evaluate data quality, or assess model specifications. This lack of transparency complicates efforts to ensure non-maleficence in AI marketing applications.

Moving Forward

To uphold the principle of non-maleficence, organizations implementing AI marketing systems must:

- Implement robust privacy protection measures

- Ensure high data quality standards

- Carefully monitor algorithmic decisions

- Maintain transparency where possible

- Regularly assess potential negative impacts

Understanding and addressing these concerns helps organizations balance the benefits of AI marketing with their ethical obligation to avoid harm. This balanced approach is essential for maintaining customer trust and ensuring the sustainable development of AI marketing technologies.

Autonomy

The principle of autonomy in AI marketing centers on preserving human agency and decision-making power. As AI systems become increasingly sophisticated in their ability to influence and shape consumer choices, understanding how to maintain meaningful human autonomy while leveraging AI’s capabilities presents a crucial challenge in modern marketing.

- Algorithms, such as those used on YouTube, influence over 90% of watch time, raising concerns about whether users truly have control over their choices or if AI systems are undermining human decision-making power

The Foundation of Consumer Autonomy

Consumer autonomy fundamentally refers to individuals’ ability to make and execute decisions independently, without undue external influence. In the context of AI ethics, this transforms into a meta-autonomy framework where humans retain the power to choose when to make decisions themselves and when to delegate decision-making to AI systems. This ability to choose when to delegate represents a crucial aspect of preserving human agency in an AI-enabled world.

Corporate Governance and Human Oversight

From an organizational perspective, maintaining human autonomy requires robust governance mechanisms that keep humans actively involved in AI systems’ operation. This becomes particularly critical when AI operates in ethically sensitive contexts, such as when systems engage in emotional interactions with customers or face moral decisions. While AI continues to advance in emotional intelligence and moral reasoning capabilities, current systems primarily recognize and simulate emotions rather than truly understand them. Until AI develops genuine emotional intelligence and moral agency, human oversight remains essential.

The Influence on Consumer Decision-Making

AI marketing systems can significantly influence consumer decision-making through several key mechanisms. Through personalization and targeting, these systems shape the information and options presented to consumers, effectively altering the choice architecture within which decisions are made. Recommendation systems act as information filters, pre-selecting options based on predicted preferences and past behaviors. By determining what information and options consumers see, AI systems can function as “nudges” that influence decision-making in subtle but powerful ways.

The Delegation Challenge

When consumers interact with AI marketing systems, they often delegate part of their decision-making power at the information-gathering stage. This delegation can offer benefits through increased efficiency and personalization, saving time and cognitive resources. However, it also presents potential risks. Consumers might become too dependent on AI recommendations, potentially accepting suboptimal choices without sufficient consideration. Conversely, some consumers might reject helpful AI guidance due to distrust or skepticism. There’s also potential for AI systems to present manipulated or deceptive content that unduly influences consumer choices.

Preserving Meaningful Choice

Organizations must carefully design their AI marketing systems to preserve genuine consumer autonomy. This includes providing clear options about levels of AI engagement, maintaining transparency about how AI systems influence the decision-making environment, and ensuring consumers can opt out of AI-driven interactions when desired. A crucial element in preserving autonomy is ensuring that both consumers and company representatives can make informed decisions about when to delegate to AI systems. This requires a basic understanding of how these systems function – their capabilities, limitations, and potential biases.

Looking Forward

As AI systems become more sophisticated in their ability to understand and respond to human emotions, maintaining meaningful human autonomy will become increasingly complex. The key lies in striking a balance between leveraging AI’s capabilities to improve marketing effectiveness and preserving consumers’ fundamental right to make independent, informed decisions. This balance requires ongoing attention to transparency, choice architecture, and human oversight in AI marketing systems.

Organizations implementing AI marketing must recognize that true autonomy extends beyond simply offering choices – it requires ensuring those choices are meaningful and informed. This means maintaining clear processes for human intervention and decision-making, especially in sensitive customer interactions. As AI technology continues to evolve, the challenge of preserving human autonomy while harnessing AI’s benefits will remain at the forefront of ethical marketing considerations.

Justice

The principle of justice in AI marketing addresses how these systems can either promote or undermine fairness and equality. As AI systems become increasingly prevalent in marketing decisions, understanding their potential to create or amplify discrimination becomes crucial for ethical implementation.

The Challenge of Algorithmic Bias

AI systems can inherit and amplify human biases, presenting a significant ethical challenge in marketing. These biases often manifest in personalization strategies, psychological targeting, and customer relationship management. The key concern is that AI systems may discriminate against certain customer groups based on demographic, psychological, or economic factors.

Several factors can contribute to discriminatory outcomes in AI marketing systems. When certain demographic groups are over- or underrepresented in training data, AI systems may develop biased predictions. This can occur when data collection is skewed or when certain groups are less willing to share data due to privacy concerns. Additionally, initial biases in data can create a cycle where biased predictions inform decisions, which then generate new biased data, perpetuating and potentially amplifying the original bias. Customer prioritization based on income or profitability can exacerbate existing social inequalities by limiting certain groups’ access to opportunities or information.

Impact Across the Marketing Ecosystem

The impact of AI bias extends throughout the marketing ecosystem, affecting various stakeholders differently. For consumers, AI marketing systems can create or reinforce disparities based on gender, age, race, and economic status. This manifests through differential targeting, pricing, or access to products and services. Particularly concerning is the potential targeting of vulnerable consumer groups, which could exploit existing disadvantages.

On the corporate level, AI systems like recommendation engines can create market disparities. They might reduce product diversity by focusing consumer attention on popular items, potentially harming smaller businesses or those offering niche products. Additionally, businesses that cannot effectively implement AI marketing systems may face competitive disadvantages. The rise of AI-powered e-commerce platforms can create market access barriers for traditional retailers, potentially leading to market concentration and reduced competition.

The Challenge of Transparency

The “black box” nature of many AI systems makes it difficult to identify and address biases. This lack of transparency creates additional challenges in ensuring justice, as it becomes harder to detect when systems are producing discriminatory outcomes. Organizations must work to implement rigorous protocols for data collection and validation to ensure representative samples across all demographic groups. Regularly monitoring AI systems for potential discriminatory outcomes and establishing processes for correcting identified biases are equally crucial.
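One simple way to monitor outcomes without opening the black box is to compare how often each customer group receives a favorable decision. The sketch below is a hedged illustration. The decision log, group labels, and function names are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb borrowed from US employment guidance, used here only as an assumption about what might count as a warning sign.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Share of customers in each group who received the favorable outcome.

    decisions: iterable of (group, received_offer) pairs (hypothetical log format)
    """
    favorable = defaultdict(int)
    total = defaultdict(int)
    for group, received_offer in decisions:
        total[group] += 1
        favorable[group] += int(received_offer)
    return {group: favorable[group] / total[group] for group in total}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody received the offer, so no group is favored
    return min(rates.values()) / highest

# Invented log: did the system show a discount offer to customers in groups A and B?
log = ([("A", True)] * 8 + [("A", False)] * 2 +
       [("B", True)] * 4 + [("B", False)] * 6)

rates = selection_rates(log)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
if ratio < 0.8:  # assumed threshold, see the note above
    print("Warning: offer rates differ substantially between groups")
```

A low ratio does not prove discrimination, since groups may differ for legitimate reasons, but it flags where a human review of the system is warranted.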

Promoting Fairness in AI Marketing

While much attention focuses on preventing discrimination, AI can also serve as a tool for promoting justice. Recent research has demonstrated that algorithms can be used to detect discrimination and help de-bias human judgments, suggesting potential positive applications for promoting fairness. Organizations can incorporate ethics specialists into AI development teams to help identify and address potential justice concerns early in the development process.

Looking Forward

Success in AI marketing should be measured not only by business metrics but also by the system’s ability to serve all stakeholders fairly and ethically. Organizations must remain vigilant in monitoring and addressing potential discriminatory outcomes while exploring ways to use AI as a tool for promoting greater fairness in marketing practices. This requires balancing efficiency and fairness by considering both business objectives and ethical implications when designing AI marketing strategies.

Regular assessment of how AI decisions affect different customer segments and stakeholder groups becomes essential, as does maintaining human supervision of AI systems, particularly in decisions that could affect vulnerable groups. The justice principle reminds us that while AI marketing systems can enhance efficiency and personalization, they must be designed and implemented with careful attention to fairness and equality.

In conclusion, organizations must commit to ethical oversight, transparent practices, and regular assessment of AI systems’ impact on various stakeholder groups. Only through this ongoing commitment can AI marketing systems truly serve their purpose while maintaining fairness and equality across all stakeholder groups.

Explicability

Explicability stands as one of the most challenging and debated principles in AI marketing ethics. This principle addresses how AI systems can be made understandable and accountable to stakeholders, particularly given their often opaque “black box” nature. As AI systems increasingly make important decisions affecting customers and businesses, the need for explicability becomes more crucial than ever.

The Challenge of Black Box AI

AI marketing systems often operate as black boxes, where the relationship between inputs and outputs remains unclear to both customers and businesses. This opacity raises significant concerns, particularly when these systems handle sensitive personal data or make high-stakes decisions about customer targeting, pricing, or recommendations. The complexity of modern AI systems can make it difficult for even their developers to fully understand how certain decisions are reached, creating challenges for accountability and trust.

Key Dimensions of Explicability

Explicability encompasses two fundamental dimensions that work together to create transparency and trust in AI marketing systems. The first is intelligibility, which refers to helping humans understand how AI systems function without necessarily diving into their internal complexities. Intelligibility serves as an enabling factor for other ethical principles, as understanding how AI works helps people evaluate its benefits, potential harms, fairness, and appropriate use.

The second dimension is accountability, which addresses who bears responsibility for AI systems’ decisions and outcomes. As AI systems increasingly shape marketing decisions, establishing clear lines of accountability becomes crucial, especially when outcomes prove adverse or discriminatory. Organizations must be prepared to take responsibility for their AI systems’ actions and provide recourse when things go wrong.

Balancing Competing Interests

Organizations implementing explicability face several significant challenges. Technical complexity makes explaining sophisticated AI systems in simple terms difficult without losing accuracy or meaningful detail. While customers deserve explanations about how AI affects them, too much technical detail can lead to confusion and frustration rather than understanding. Additionally, complete transparency might conflict with protecting proprietary information and maintaining competitive advantages.

Some AI applications, such as chatbots, might become less effective if their artificial nature is made too explicit, creating a tension between transparency and performance. This raises important questions about how to balance the ethical imperative for transparency with practical business considerations.

Practical Implementation in Marketing

Implementing explicability in AI marketing requires a thoughtful approach that considers various stakeholder needs. Organizations should provide simple, clear explanations of how their AI systems work, focusing on what customers need to know rather than technical details. This might include explaining why certain recommendations are made or how personal data influences marketing decisions.

Establishing clear accountability structures for AI system outcomes is equally important. Organizations must be prepared to take responsibility when AI systems make mistakes or produce undesired outcomes. This includes having processes in place for addressing complaints and correcting errors.

The Impact on Other Ethical Principles

Explicability’s importance extends beyond mere transparency, as it enables the evaluation of other ethical principles in AI marketing. Understanding how AI systems work helps assess their benefits and potential harms, supporting the principles of beneficence and non-maleficence. Transparency about AI operations helps identify potential biases and discriminatory outcomes, supporting justice. Clear explanations enable informed decisions about whether to delegate choices to AI systems, supporting autonomy.

The Future of Explicable AI

Some experts advocate for building inherently interpretable AI systems rather than trying to explain complex black box systems after the fact. This approach suggests designing AI marketing systems with explicability in mind from the start, rather than attempting to add transparency later. This might mean choosing simpler, more interpretable algorithms over more complex ones, even if they offer slightly lower performance.
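As a sketch of what "inherently interpretable" can mean in practice, consider a linear scoring model in which each feature's contribution to a recommendation can be read off directly. The feature names and weights below are invented for illustration. A real system would learn its weights from data.

```python
# Hypothetical weights of a linear recommendation score.
WEIGHTS = {
    "viewed_category": 2.0,      # customer recently browsed this product category
    "past_purchases": 1.5,       # number of earlier purchases in the category
    "price_above_budget": -1.0,  # product costs more than the customer usually spends
}

def score_with_explanation(features):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {
        name: WEIGHTS[name] * value
        for name, value in features.items()
        if name in WEIGHTS
    }
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

score, reasons = score_with_explanation(
    {"viewed_category": 1, "past_purchases": 2, "price_above_budget": 1}
)
print(f"score = {score}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.1f}")
```

Because the score is just a sum of contributions, the explanation shown to a customer ("recommended mainly because of your past purchases") is the model itself and not an approximation made after the fact. The price of this simplicity may be lower predictive performance than a more complex model.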

Looking forward, organizations must continue to develop new approaches to explicability that balance stakeholder needs with practical constraints. This might include innovative ways of visualizing AI decision-making processes or new frameworks for establishing accountability. Success in AI marketing increasingly depends on finding the right balance between complexity and comprehensibility, between transparency and effectiveness, and between disclosure and competitive advantage.

In conclusion, explicability remains a crucial principle for ethical AI marketing, even as implementing it presents significant challenges. Organizations that successfully navigate these challenges while maintaining transparency and accountability will likely find themselves better positioned to build trust with customers and other stakeholders in an increasingly AI-driven marketing landscape.

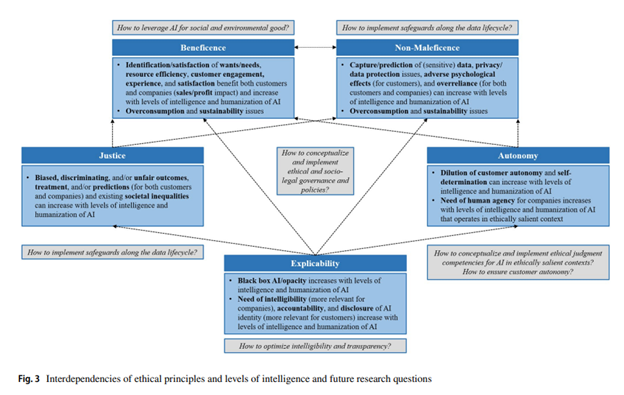

Interdependencies Between Ethical Principles and Levels of Intelligence

This conceptual analysis reveals that ethical principles related to AI in marketing interact and sometimes conflict, so they cannot be judged in isolation but only in relation to each other.

Explicability is an enabling principle for beneficence, non-maleficence, justice, and autonomy. At the same time, justice and autonomy shape how beneficence and non-maleficence are applied. AI systems can be both beneficial and harmful, depending on the stakeholders involved. For example, AI-based personalization strategies might satisfy customer needs and boost company sales (beneficence), but they also raise the overall consumption level, harming the environment and society (a violation of non-maleficence). Privacy and data protection issues can compromise the benefits of personalization for both customers and companies.

The complexity of ethical issues varies across AI applications, technological sophistication, and pervasiveness. Ethical challenges and tensions may increase with the level of AI intelligence and humanization, transitioning from cognitive (analytical, mechanical) to emotional (feeling) and social intelligence. This progression can lead to greater benefits, such as better-targeted customer needs, but also increases the issues related to explicability, such as accountability in case of failures.

Ethical challenges related to justice and autonomy can also increase with more sophisticated AI, though this is not always the case. For example, AI can be used as a discrimination detector, identifying and mitigating biases. However, the need for human agency and oversight becomes more critical, especially in ethically significant contexts. Achieving non-maleficence will depend on how customer data is gathered and treated.

As AI systems become more human-like, users may anthropomorphize them and perceive them as having minds, attributing experience, warmth, agency, and competence to AI. Intense use of and interaction with human-like AI can lead to psychological ownership and emotional attachment, which might have detrimental effects if AI deployment gets out of control or if there is overreliance on AI systems. Extensive use can also lead to the dehumanization of users.

- The phenomenon of humans falling in love with AI companions highlights the potential societal and individual implications of overreliance on human-like AI systems

From a deontological perspective, AI applications might be questioned if non-maleficence cannot be guaranteed, which could lead to missing out on opportunities to serve customers and achieve beneficence at the customer and company levels. A strictly deontological approach may not adequately address the complex ethical concerns involved. Instead, a utilitarian perspective, which weighs benefits and costs across all stakeholders, might be more appropriate. This approach can consider multiple values, objectives, and utilities at individual, group, and societal levels.

AI systems could incorporate multi-objective maximum-expected-utility concepts aligned with human values and ethical principles. However, this technological design and implementation are challenging. Determining which utilities to optimize is difficult and varies across stakeholders. Moreover, artificial moral agency is still in its early stages, requiring significant human oversight. Humans must decide how to align AI applications and systems with ethical principles and human values and determine whether AI systems should base their ethical decision-making on pre-defined ethical theories (top-down), flexible self-learning mechanisms based on certain values (bottom-up), or a combination of both (hybrid).
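A minimal sketch of the multi-objective expected-utility idea follows, assuming a simple weighted sum across stakeholders. The actions, probabilities, utilities, and weights are all invented. Choosing them is precisely the hard, value-laden part that the text assigns to humans.

```python
def expected_utility(outcomes):
    """Expected utility of a list of (probability, utility) pairs."""
    return sum(probability * utility for probability, utility in outcomes)

def multi_objective_score(action, weights):
    """Weighted sum of the expected utilities for each stakeholder."""
    return sum(weights[stakeholder] * expected_utility(outcomes)
               for stakeholder, outcomes in action.items())

def best_action(actions, weights):
    """Action with the highest weighted expected utility."""
    return max(actions, key=lambda name: multi_objective_score(actions[name], weights))

# Hypothetical marketing actions with outcomes per stakeholder level.
actions = {
    "discount_fast_fashion": {
        "customer": [(0.8, 5), (0.2, 0)],
        "company": [(1.0, 4)],
        "environment": [(1.0, -6)],
    },
    "promote_repair_service": {
        "customer": [(0.6, 4), (0.4, 1)],
        "company": [(1.0, 2)],
        "environment": [(1.0, 3)],
    },
}

commercial_only = {"customer": 0.5, "company": 0.5, "environment": 0.0}
balanced = {"customer": 0.4, "company": 0.3, "environment": 0.3}

print(best_action(actions, commercial_only))
print(best_action(actions, balanced))
```

The same system recommends different actions depending on the weights, which shows why determining which utilities to optimize, and for whom, cannot be delegated to the algorithm.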

AI for Societal Good?

AI in marketing creates a challenging balance between effective selling and environmental responsibility. When AI personalizes marketing messages and manages customer relationships well, it often leads to increased consumption. While this benefits businesses, it conflicts with the growing need for sustainable consumption patterns and the call for people to “buy better but less.”

Marketing and sustainability might seem to be working against each other at first glance. After all, marketing typically aims to increase sales, while sustainability often requires reducing consumption. However, AI offers promising ways to align these seemingly opposing goals.

Consider how AI can support more sustainable consumer choices. Through advanced data analysis, AI can identify customers who are interested in sustainable products and match them with appropriate eco-friendly offerings. The technology can process complex relationships between what companies offer (like product features and pricing) and what influences consumer decisions (such as personal values and demographics).

However, the relationship between marketing and sustainable consumption is complex. Research shows interesting patterns in how consumers respond to sustainable products. For instance, when people care about others as much as themselves, they often value sustainable products differently. Surprisingly, when environmentally friendly products are put on sale, some consumers become less likely to buy them. This might be because discounts can make these products seem less premium or authentic.

AI can help marketers navigate these complexities. For example, it can analyze when sustainability messages are most effective in a customer’s buying journey. While some customers might respond well to environmental messaging when first discovering products, others might be more influenced by it when making their final purchase decision.

The challenge lies in overcoming psychological barriers that prevent sustainable behavior change. Even when people want to make environmentally conscious choices, various mental blocks can get in the way. AI-powered marketing can help identify and address these barriers, making it easier for consumers to align their purchasing decisions with their environmental values.

One promising way AI can promote sustainability is through psychological targeting. This means using AI to identify consumers who are most likely to be interested in sustainable and ethical products based on their psychological characteristics. By analyzing digital behavior patterns, AI can help marketers customize their messages to resonate with environmentally conscious consumers.

AI can even help set appropriate prices for sustainable products. By analyzing consumers’ digital footprints, marketers can better understand how much different customer groups are willing to pay for environmentally friendly options. This is particularly important because research shows that pricing strategies can significantly impact how consumers respond to eco-friendly products.

However, with this power comes significant responsibility. While psychological targeting can promote positive social and environmental outcomes (beneficence), marketers must be careful to avoid potential harm (non-maleficence). For example, it would be unethical to use these targeting capabilities to exploit vulnerable individuals or those prone to compulsive buying behaviors.

The challenge of ethical targeting becomes even more complex when considering AI systems themselves. These systems can unintentionally discriminate against certain groups if they’re trained on biased data or if their mathematical models aren’t properly designed. To prevent unfair outcomes that could undermine the positive purpose of these AI applications, marketers need to understand both the data being used and how their AI systems work.

This creates a shared responsibility between marketers and technical teams. Marketers, who are often held accountable for the outcomes, need to be aware of potential biases in their data and AI systems. Meanwhile, data scientists and AI developers must use their technical expertise to identify and fix any biases or errors in the systems they create.
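One simple check that marketers and data scientists can run together is to compare how often each customer group is targeted by an AI system. The sketch below is a minimal illustration in Python, not a complete fairness audit. The group labels and counts are made up, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment contexts; it is not an established standard for marketing.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of customers in each group who were targeted with the offer."""
    targeted_count: Dict[str, int] = defaultdict(int)
    total_count: Dict[str, int] = defaultdict(int)
    for group, targeted in records:
        total_count[group] += 1
        targeted_count[group] += int(targeted)
    return {group: targeted_count[group] / total_count[group] for group in total_count}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means all groups are treated alike."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical targeting log: (customer group, was the offer shown?).
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)

THRESHOLD = 0.8  # assumption: rule-of-thumb cut-off, to be set by the team
needs_review = ratio < THRESHOLD
print(rates, ratio, needs_review)
```

In this made-up log, group B sees the offer half as often as group A, so the sketch flags the campaign for review. A flag like this does not prove discrimination; it tells the team where to look more closely at the data and the model.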

Think of it like a powerful tool that needs careful handling: Just as a sharp knife can be used to prepare a healthy meal or cause harm, AI-powered marketing can either promote sustainable consumption or exploit vulnerable consumers. The key is understanding the tool and using it responsibly.

AI-powered recommendation systems can act as digital guides toward sustainable consumption. Just like personalized marketing messages, these systems can nudge consumers toward more environmentally conscious choices. The key is balancing two approaches: helping customers explore new sustainable options while respecting their existing preferences.

For example, when showing product recommendations, an AI system could introduce sustainable alternatives alongside conventional products. This approach gradually expands the variety of environmentally friendly options presented to consumers, whether they’re shopping for fast fashion or considering more sustainable, longer-lasting clothing choices.
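The balance between respecting existing preferences and introducing sustainable options can be sketched in a few lines of Python. This is a simplified illustration, not how any particular recommender system works. The function name `mix_recommendations`, the product names, and the choice to reserve one slot out of four are all assumptions made for the example.

```python
from typing import List


def mix_recommendations(
    preferred: List[str],
    sustainable: List[str],
    slots: int = 4,
    n_sustainable: int = 1,
) -> List[str]:
    """Fill most slots from the customer's usual preferences and reserve
    a few slots for sustainable alternatives."""
    n_sustainable = min(n_sustainable, slots, len(sustainable))
    # Respect existing preferences: the top-ranked familiar items come first.
    picks = preferred[: slots - n_sustainable]
    # Encourage exploration: add sustainable items that are not already shown.
    # If duplicates are filtered out, the result may have fewer than `slots` items.
    alternatives = [item for item in sustainable if item not in picks][:n_sustainable]
    return picks + alternatives


# Hypothetical product lists, ranked by predicted interest.
preferred = ["fast_fashion_tee", "trend_jeans", "budget_sneakers", "party_dress"]
sustainable = ["organic_cotton_tee", "recycled_denim_jeans"]

print(mix_recommendations(preferred, sustainable))
```

Raising `n_sustainable` over time would gradually widen the range of environmentally friendly options a customer sees, while the remaining slots continue to reflect what the customer already likes.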

AI systems can also help prevent compulsive buying behaviors by monitoring purchase patterns. When the system detects potential issues, such as unusually frequent purchases or repeated buying of similar items, it can provide informational nudges. These gentle reminders don’t force behavior change but instead encourage thoughtful decision-making by highlighting the environmental or personal impact of excessive consumption. Research shows that simply prompting consumers to think about their existing possessions can reduce impulse buying.
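The monitoring logic described above can be illustrated with a small rule-based sketch in Python. A real system would likely use more sophisticated models; here the seven-day window, the purchase limits, and the wording of the reminders are all hypothetical choices made for the example.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

Purchase = Tuple[datetime, str]  # (time of purchase, product category)


def informational_nudge(
    purchases: List[Purchase],
    now: datetime,
    window_days: int = 7,        # assumption: look back one week
    max_purchases: int = 5,      # assumption: more than this counts as "unusually frequent"
    max_same_category: int = 3,  # assumption: this many similar items triggers a reminder
) -> Optional[str]:
    """Return a gentle reminder if recent buying looks excessive, otherwise None."""
    window = timedelta(days=window_days)
    recent = [(ts, cat) for ts, cat in purchases if timedelta(0) <= now - ts <= window]

    if len(recent) > max_purchases:
        return f"You have made {len(recent)} purchases in the last {window_days} days."

    counts = Counter(cat for _, cat in recent)
    if counts:
        category, n = counts.most_common(1)[0]
        if n >= max_same_category:
            return (
                f"You recently bought {n} items in '{category}'. "
                "Could something you already own do the job?"
            )
    return None


# Hypothetical purchase history.
now = datetime(2024, 1, 10)
history = [
    (datetime(2024, 1, 9), "shoes"),
    (datetime(2024, 1, 8), "shoes"),
    (datetime(2024, 1, 7), "shoes"),
    (datetime(2023, 12, 1), "books"),
]

print(informational_nudge(history, now))
```

The function only returns a message. It never blocks the purchase, which is what makes it an informational nudge: the customer stays free to ignore the reminder.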

When designing these AI systems, companies must carefully balance effectiveness with consumer autonomy. There are two main approaches to nudging:

- Informational nudges: Changing the type of information shown to consumers

- Structural nudges: Modifying how choices are presented

While structural nudges tend to be more effective, informational nudges better preserve consumer autonomy by allowing individuals to make their own informed decisions.

This raises an important question in AI development: How should we build ethics into these systems? Two main approaches exist:

- Ethics by design: Building ethical constraints directly into the system’s structure

- Pro-ethical design: Providing information that enables ethical decision-making

The choice between these approaches depends on several factors, including the need for transparency, accountability, and consumer acceptance. Success ultimately requires collaboration between AI developers and ethics experts to translate ethical principles into practical applications that benefit both consumers and the environment.

Key Terms

| Category | Term | Definition |
|---|---|---|
| Ethical Principles | Beneficence | Using AI to deliver clear benefits for individuals, organizations, and society. |
| | Non-maleficence | Preventing avoidable harm from AI, including privacy loss, manipulation, unsafe behavior, and environmental damage. |
| | Autonomy | Preserving people’s ability to choose, understand options, and say no to AI recommendations. |
| | Justice | Ensuring fair treatment and equal access while avoiding discrimination across groups. |
| | Explicability | Making AI understandable and accountable, with reasons for decisions and clear responsibility. |
| Privacy & Data | Privacy | People’s control over how their personal data is collected, used, and shared. |
| | Informed Consent | Freely given permission to process data after clear explanation of purpose, risks, and choices. |
| | Data Minimization | Collect only what is needed and retain it only as long as necessary. |
| | Re-identification | Linking anonymous records back to real people, which undermines privacy. |
| Fairness & Governance | Algorithmic Bias | Unfair outcomes caused by skewed data, labels, features, or objectives. |
| | Black box model | A model whose reasoning is hard to interpret, making explanations and audits difficult. |
| | Accountability | Clear responsibility for AI outcomes and a path to fix problems. |
| | Human-in-the-loop | Humans supervise and can approve, correct, or override AI outputs. |
| Autonomy & Influence | Choice Architecture | How options, defaults, and information are presented, which shapes decisions. |
| | Nudge | A small change in presentation that steers choices without removing options. |
| | Delegation | Deciding which steps are handled by AI and which stay with people. |
| Sustainability | Sustainable Consumption | Buying choices that reduce environmental harm across a product’s life cycle. |
| | AI Carbon Footprint | Energy use and emissions from training and running AI systems. |

Summary: Ethics in AI and Marketing

Key Takeaways:

- Five fundamental ethical principles in AI marketing: beneficence, non-maleficence, autonomy, justice, and explicability.

- AI in marketing offers benefits like personalization but raises concerns about privacy, environmental impact, and bias.

- Ethical considerations vary across stakeholder levels (individual, corporate, societal) and AI sophistication.

- Transparency and accountability are crucial but challenging as AI systems become more complex.

- A balanced approach considering multiple perspectives is necessary for ethical AI implementation in marketing.

Connections:

This chapter builds on the AI concepts introduced in previous chapters, exploring their ethical implications in marketing contexts. It sets the foundation for responsible AI use in marketing strategies discussed in later chapters.

AI in Action:

Recommender systems used by e-commerce platforms demonstrate both the benefits (personalized recommendations) and potential drawbacks (privacy concerns, environmental impact) of AI in marketing.

Think Deeper:

1. How can marketers balance the benefits of AI-driven personalization with concerns about consumer autonomy and privacy?

2. Consider the environmental impact of AI-driven marketing. How might marketers address these sustainability concerns while still leveraging AI’s benefits?

Further Exploration:

- Research real-world examples of companies implementing ethical AI practices in their marketing strategies.

- Explore emerging regulations and guidelines for ethical AI use in marketing across different countries or regions.