3 AI and the Transformation of Consumer Experiences

This chapter is based on Puntoni et al. (2021).

As artificial intelligence becomes increasingly woven into the fabric of daily life, a crucial shift is occurring in how we must understand its impact. While much attention has focused on AI’s technical capabilities and underlying algorithms, the more pressing question now centers on how consumers actually experience and interact with these technologies. This experiential lens reveals that AI’s influence extends far beyond mere utility, touching on fundamental aspects of human identity, autonomy, and social connection.

Consider how AI shapes our daily routines: We wake up to personalized news feeds, navigate our commutes with AI-optimized routes, work alongside AI-powered tools, and end our days streaming content selected by recommendation algorithms. Each of these interactions represents more than just a technological convenience—it embodies a complex relationship between human and machine that can simultaneously empower and constrain, connect and alienate, serve and exploit.

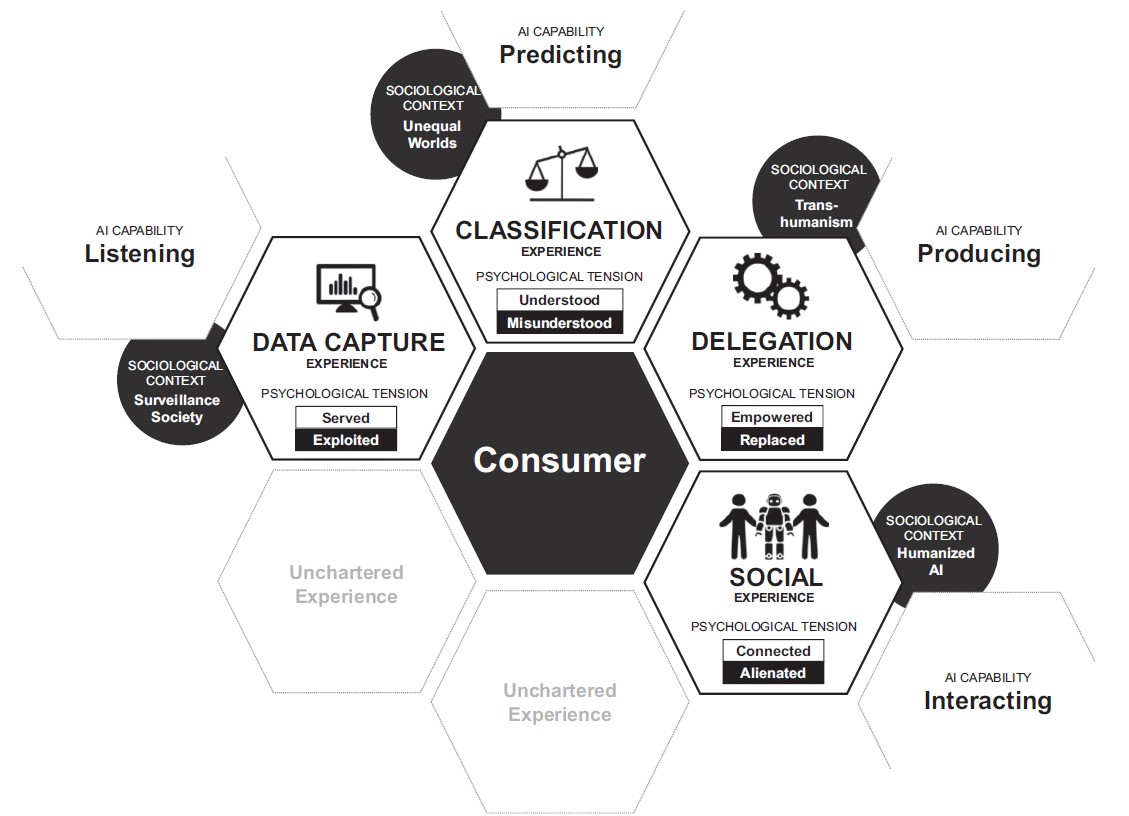

This chapter examines four fundamental dimensions of the consumer AI experience: data capture, classification, delegation, and social interaction. Each dimension creates distinct tensions in how consumers engage with AI systems. When sharing personal data, consumers face the paradox of being both served and exploited. As AI systems classify and categorize them, they wrestle with feeling understood versus misunderstood. In delegating tasks to AI, they balance the promise of empowerment against the fear of replacement. And through social interactions with AI, they navigate new forms of connection that can either enhance or diminish human relationships.

Understanding these tensions is crucial for both practitioners and researchers. For businesses, success in the AI era requires moving beyond technical performance metrics to consider how their systems affect consumers’ sense of identity, agency, and well-being. For scholars, these experiential dimensions offer rich territory for exploring how AI is reshaping fundamental concepts of consumer behavior and human-computer interaction.

Through both sociological and psychological lenses, this chapter unpacks each of these core tensions, examining how they manifest in consumer experiences and what they mean for the future of AI development. By understanding these dynamics, we can work toward AI implementations that enhance rather than diminish human capability and dignity, while creating value for both consumers and organizations.

Consumer Experience and AI Capabilities

To better understand the consumer AI experience, it is critical to shift focus from the underlying technology to how consumers engage with these capabilities. Consumer experience refers to the interactions between consumers and companies during the customer journey, encompassing emotional, cognitive, behavioral, sensorial, and social dimensions (Brakus, Schmitt, and Zarantonello 2009; Lemon and Verhoef 2016). This perspective broadens our understanding of AI’s role in consumer lives beyond utility and function to include its emotional and symbolic significance (Mick and Fournier 1998).

The framework proposed by Puntoni et al. (2021) outlines four experiences that reflect how consumers interact with AI capabilities:

- Data Capture – The experience of sharing personal data with AI systems.

- Classification – The experience of receiving predictions or recommendations based on personal data.

- Delegation – The experience of allowing AI to perform tasks on behalf of the consumer.

- Social Interaction – The experience of communicating with AI systems as interactive partners.

These four experiences (data capture, classification, delegation, and social interaction) are each characterized by a fundamental tension. The tensions arise because AI offers benefits while potentially undermining aspects of consumer autonomy and wellbeing:

- Data Capture: Tension between being served and exploited. When AI systems collect consumer data, they can provide highly personalized services and experiences (served), but may also leave consumers feeling that their privacy has been invaded and their personal information commodified (exploited).

- Classification: Tension between being understood and misunderstood. AI’s ability to analyze and categorize consumers can lead to helpful personalization that makes them feel truly understood, but can also result in reductive or biased categorizations that leave consumers feeling mischaracterized or discriminated against.

- Delegation: Tension between being empowered and replaced. While AI can empower consumers by handling tedious or complex tasks, it can also create anxiety about human capabilities becoming obsolete or irrelevant.

- Social Interaction: Tension between being connected and alienated. AI-enabled social interactions can foster new forms of connection and engagement, but may simultaneously create experiences of exclusion or disconnection, particularly for marginalized groups.

Understanding these tensions through both sociological and psychological lenses is crucial for several reasons:

- The sociological perspective reveals how these tensions reflect and reshape broader social structures and power dynamics. For instance, data capture practices are not just about individual privacy concerns but about a fundamental shift in which personal information becomes a form of capital, in what Zuboff (2019) calls “surveillance capitalism.”

- The psychological perspective illuminates how these tensions manifest in individual consumer experiences and behaviors. This helps explain why consumers might simultaneously embrace and resist AI technologies, or why the same AI capability might be experienced very differently by different individuals.

Each section of this chapter examines one of these core tensions through both lenses, followed by managerial recommendations for addressing it. This dual analysis helps future marketers understand not only how to implement AI effectively but also how to do so in ways that benefit both individual consumers and society as a whole.

From Puntoni et al. (2021)

The AI Data Capture Experience

The listening capability in AI enables systems to gather data about consumers and their environments. This process forms the basis of the data capture experience, which involves various ways data is transferred to AI systems. Consumers may intentionally provide data, but their understanding of how the data will be used often varies. Some share data with clarity and awareness of its purpose, while others surrender it amid high levels of uncertainty (Walker 2016). AI systems may also acquire data passively, gathering “digital shadows” that consumers leave behind in daily activities. For example, shoppers in stores equipped with facial recognition technology or users of iRobot Roomba vacuums—devices that map residential spaces—unknowingly contribute to data collection (Kuniavsky 2010).

Benefits of Data Capture

The data capture experience offers tangible benefits to consumers:

- Access to Customized Services: Sharing personal data enables tailored services, information, and entertainment, often provided for free. For example, Google Photos captures users’ memories and, in return, provides an AI-powered assistant that suggests context-specific actions, such as creating photo albums or sharing images.

- Simplified Decision-Making: AI systems can reduce the cognitive and emotional burden of decision-making by matching personal preferences with optimal choices. For instance, digital assistants can provide curated recommendations, sparing consumers the effort of evaluating numerous options (André et al. 2018).

- Opportunities for Self-Improvement: Customized AI-driven solutions can support personal development. One project from Alphabet integrates data from smartphones, genomes, wearables, and ambient sensors to deliver personalized healthcare recommendations (Kuchler 2020).

Challenges in Data Capture

The data capture process raises several concerns for consumers, often tied to the complexity and opacity of AI systems:

- Intrusive Data Collection: Modern data acquisition methods are increasingly pervasive and difficult to avoid.

- Aggregation Over Time: Even when consumers intentionally share data, they often remain unaware of how it is combined across contexts to create comprehensive profiles.

- Transparency and Regulation: Data brokers often operate in an unregulated environment, with limited accountability and little clarity about how data is used (Grafanaki 2017).
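The aggregation concern is easiest to see with a toy example. The Python sketch below assumes three hypothetical data sources that happen to share an email address as a common identifier; the source names, fields, values, and the `aggregate_profiles` helper are all invented for illustration and do not describe how any particular company links data. It shows only that records which look innocuous in isolation can be merged into a profile that supports inferences the consumer never chose to disclose.

```python
from collections import defaultdict

# Hypothetical records one consumer shared in three unrelated contexts.
# Source names, fields, and values are invented for illustration.
fitness_app = [{"email": "ana@example.com", "avg_sleep_hours": 5.9}]
retailer = [{"email": "ana@example.com", "recent_purchase": "baby monitor"}]
map_service = [{"email": "ana@example.com", "frequent_place": "pediatric clinic"}]


def aggregate_profiles(*sources):
    """Merge records from several sources into one profile per shared identifier."""
    profiles = defaultdict(dict)
    for source in sources:
        for record in source:
            key = record["email"]  # the shared identifier is what links the contexts
            profiles[key].update({k: v for k, v in record.items() if k != "email"})
    return dict(profiles)


profile = aggregate_profiles(fitness_app, retailer, map_service)
print(profile["ana@example.com"])
```

No single source reveals much, but the merged profile suggests a new parent with disrupted sleep, an inference that none of the three services was explicitly given.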

These factors can undermine consumers’ sense of ownership over their personal data and erode their personal control—the feeling that individuals, rather than external forces, dictate outcomes (DeCharms 1968). This loss of control has both sociological and psychological implications, which are critical to understanding the consumer experience.

The Double-Edged Sword of Data Capture

Although data capture enables better, more personalized service, it often leaves consumers feeling exploited. They may lack awareness of how their data is being used or feel powerless to control the process. Striking a balance between empowering consumers with customized services and addressing concerns about privacy and control is essential for businesses leveraging AI. By fostering transparency and ensuring ethical data practices, companies can mitigate the risks associated with data capture and build trust with consumers.

Sociological Context: The Surveillance Society Narrative

The issue of losing ownership over personal data is often linked to a perceived loss of personal control, fueled by concerns about technology’s capacity to monitor and influence human behavior. This narrative has deep roots in popular culture, with dystopian stories like George Orwell’s 1984 and Philip K. Dick’s Minority Report portraying societies where constant surveillance erodes privacy and individual autonomy. Such depictions resonate with sociological critiques, which view data capture as central to the rise of a surveillance economy where personal information becomes a key form of capital (Zuboff 2019).

The Shift to a Surveillance Marketplace

A turning point in this narrative came when companies like Google began to reframe consumer data as a valuable economic resource. In the early 2000s, Google pioneered a business model that transformed consumer data—once a mere by-product of online activity—into an economic asset driving a new type of commerce. This commerce thrives on data surplus, which is processed using machine intelligence to create “prediction products” designed to anticipate consumer behaviors (Zuboff 2019).

For example, Facebook has leveraged consumer data to target ads based on personality traits inferred from digital activity, such as users’ likes. Studies have shown that small adjustments to such targeted content can significantly influence consumer behavior: tailoring ads to personality traits inferred from Facebook likes increased clicks by up to 40% and purchases by up to 50% (Matz et al. 2017). In 2018, Facebook generated approximately $56 billion in revenue from personalized advertising (Moore and Murphy 2019).

Consumer Complicity and Behavioral Futures

To sustain and expand this model, technology companies strive to normalize surveillance by associating it with benefits like convenience, productivity, safety, and health (Bettany and Kerrane 2016). They also employ techniques like notifications, reminders, and nudges to encourage consumers to share more private information, pushing the boundaries of acceptable data collection (Giesler and Humphreys 2007).

This dynamic leads to a paradox where consumers, while seeking personalized services, become complicit in the commodification of their own private experiences. As AI systems predict and shape behavior, they effectively transform consumers into participants in a marketplace that undermines personal control and concentrates power in the hands of those who own the data.

Broader Implications

The surveillance society narrative highlights critical tensions between personalization and exploitation. By framing consumer data as a resource to be mined and monetized, technology companies perpetuate a system that prioritizes profit over privacy. This concentration of knowledge and power raises ethical concerns about autonomy, fairness, and the long-term societal impacts of surveillance-driven commerce. Understanding this context is essential for navigating the complexities of AI’s role in consumer behavior and the broader marketplace.

Psychological Perspective: The Exploited Consumer

Data capture experiences are often fraught with tension. On the one hand, consumers recognize that sharing data allows AI systems to provide personalized services and experiences. On the other hand, the lack of transparency surrounding how data is used can lead to feelings of exploitation. These feelings are driven by both actual and perceived loss of personal control, which have significant psychological consequences (Botti and Iyengar 2006).

Negative Affect: Demotivation and Helplessness

The first psychological consequence is negative affect, which can manifest as demotivation and helplessness. For example, consider the case of Leila, a sex worker who used Facebook while attempting to shield her identity. She was horrified to see that Facebook’s “People You May Know” feature recommended her regular clients. For individuals like Leila—along with domestic violence survivors or political activists—privacy invasions are not merely unsettling but can become life-threatening. As Leila explained, “the worst nightmare of sex workers is to have your real name out there, and Facebook connecting people like this is the harbinger of that nightmare” (Hill 2017).

Moral Outrage: A Reaction to Privacy Violations

A second consequence of losing personal control is moral outrage, which stems from the ethical implications of privacy violations. For example, a German consumer requested his personal data from Amazon and unexpectedly received transcripts of Alexa’s interpretations of voice commands—even though he did not own an Alexa device. When the incident was reported to a local magazine, the journalists discovered they could easily explore the private life of an unrelated individual without his knowledge. The magazine described the experience as “immoral, almost voyeuristic,” leaving their staff deeply unsettled (Brown 2018).

Psychological Reactance: Resistance to Loss of Control

The third consequence is psychological reactance, a state in which individuals become motivated to restore their sense of control after feeling restricted (Brehm 1966). Reactance can result in negative evaluations of and hostile behaviors toward the source of the restriction. For instance, Danielle, a U.S. consumer, installed Amazon Echo devices throughout her home, trusting the company’s claims that they would respect her privacy. When one of her Alexa devices recorded a private conversation and sent it to a random contact in her address book, she felt deeply violated. Danielle stated, “I felt invaded” and concluded, “I’m never plugging that device in again, because I can’t trust it” (Horcher 2018).

Managerial Recommendations: Addressing the Exploited Consumer

To mitigate the challenges associated with consumer exploitation in AI data capture experiences, organizations must prioritize organizational learning and experience design. These steps can help businesses understand the sociological and psychological costs involved while developing practices that enhance consumer trust and engagement.

Organizational Learning

Organizations need to increase their awareness of the issues related to consumer privacy and the imbalance of control over personal data. This involves:

- Listening to Consumers: Use tools like netnography or sentiment analysis to empathize with consumer concerns at scale. For instance, firms can engage with consumers who feel exploited to gain direct insights into their experiences.

- Collaborating with Experts: Partner with scholars and privacy advocates to implement frameworks such as an algorithm bill of rights (Hosanagar 2019). This could include rights like transparency, ensuring consumers know when algorithms influence decisions, the factors being considered, and how those factors are weighted (Samuel 2019a).

- Questioning Internal Practices: Companies should reflect on how their privacy policies align with the needs of vulnerable consumer groups. For example, organizations might reconsider default privacy settings after understanding how these settings disproportionately impact certain users (Martin and Murphy 2017).

- Engaging with Broader Stakeholders: Extend learning beyond the firm by sponsoring research into the effects of surveillance narratives on consumer trust and marketing practices. Share findings with industry associations, educators, and the media to foster communal efforts in improving data practices.

Experience Design

Leveraging insights from organizational learning, firms can improve AI data capture experiences by designing systems that prioritize transparency, control, and consumer education:

- Enhancing Transparency and Consent: Regulations like the European Union’s General Data Protection Regulation (GDPR) require companies to provide clear consent mechanisms, such as opt-in options for cookies and detailed explanations of how data will be used.

- Simplifying Choice Architectures: Overloading consumers with choices and information about consent can reduce personal control and exacerbate negative emotions (Iyengar and Lepper 2000). To address this, companies can implement interventions such as:

  - Defaults: Default settings can simplify decisions but must be designed to align with diverse consumer preferences to avoid suboptimal outcomes. Personalizing defaults using AI can help consumers implement their preferences more effectively (Thaler and Benartzi 2004; Sunstein 2015).

  - Streamlined Interfaces: AI-powered tools can guide users through data-sharing processes, ensuring they understand what they are opting into without feeling overwhelmed.

- Educating Consumers: Educating users about the trade-offs involved in data capture can build trust. For instance, Google’s redesigned Home app provides clear explanations of what data is collected and why, demonstrating transparency and fostering informed decision-making.

AI Data Capture in the wild

The AI Classification Experience

The predictive capability in AI enables systems to categorize and understand consumers through sophisticated pattern recognition. This process forms the foundation of the classification experience, where AI systems sort and label users based on their behaviors, preferences, and contextual data. While some consumers actively participate in this classification by providing direct feedback (like rating movies or products), others are passively categorized through their digital behavior patterns and demographic information (Kumar et al. 2019). For instance, streaming platforms like Netflix employ complex algorithms that analyze viewing patterns, timing, device usage, and location data to classify viewers into specific audience segments.

Benefits of Classification

The classification experience offers significant advantages:

- Enhanced Discovery: Being classified into specific groups can help consumers discover new products and content aligned with their interests. For example, Netflix’s thumbnail selection algorithm helps viewers find shows they might enjoy based on their viewing patterns (Yu 2019).

- Community Connection: Classification can create a sense of belonging by connecting consumers with others who share similar interests and preferences (Gai and Klesse 2019).

- Improved Service Delivery: Accurate classification enables businesses to provide more relevant and timely services, enhancing the overall customer experience.

Challenges in Classification

The classification process presents several concerns for consumers, particularly regarding accuracy and fairness:

- Algorithmic Bias: AI systems may perpetuate existing biases or create new ones through their classification methods.

- Group Stereotyping: Classifications can lead to oversimplified categorizations that fail to capture individual nuances.

- Limited Agency: Consumers often have little control over how they are classified or ability to correct misclassifications (Turner and Reynolds 2011).

These factors can affect consumers’ sense of individual identity and autonomy—the feeling that they are being reduced to predetermined categories rather than being recognized as unique individuals. This standardization of identity has both practical and psychological implications for the consumer experience.

The Dual Nature of Classification

While classification can enhance service delivery and personalization, it often creates tension between being understood and being misunderstood. Consumers may appreciate the benefits of targeted recommendations while feeling uncomfortable about being categorized or labeled. Finding equilibrium between leveraging classification for improved service and respecting individual identity is crucial for businesses using AI. By maintaining transparency about classification methods and providing mechanisms for consumer feedback and control, companies can better manage the psychological impact of AI classification while maintaining its benefits.

Sociological Context: The Unequal Worlds Narrative

AI-driven classification systems do not function in isolation but are influenced by societal structures, cultural myths, and systemic inequalities. Popular media often reflects these concerns. For instance, in Neill Blomkamp’s Elysium, a starkly divided society uses technology to sustain inequality and oppression, highlighting fears that algorithms can reinforce social divides. These narratives resonate with sociological critiques of AI, which explore how systems of automation and data quantification can amplify biases and perpetuate discrimination.

Researchers have shown that AI can unintentionally reinforce stereotypes, marginalize certain groups, and reduce complex human experiences into simplified, biased categories. Works like Noble’s Algorithms of Oppression and O’Neil’s Weapons of Math Destruction argue that algorithms may exacerbate inequality, especially for racial, gender, and socioeconomic minorities. This aligns with studies on intersectionality, which examine how overlapping forms of discrimination, such as race, gender, and class, are reflected in AI systems.

Practical Examples of AI Bias in Action

Even when designed with neutral intentions, AI systems can perpetuate discrimination due to the data and assumptions embedded in their algorithms.

- Google’s Mission to “Organize the World’s Information”

Google’s goal to streamline global information appears politically neutral but can lead to biased outcomes. For example, search algorithms have been criticized for reinforcing stereotypes. A study found that searches for traditionally male-dominated jobs (e.g., “CEO” or “engineer”) disproportionately returned images of men, reflecting societal biases encoded in the data. Such outcomes illustrate how AI systems may unintentionally privilege certain groups while misrepresenting or marginalizing others.

- AI in College Admissions

AI-enabled college admissions tools aim to combat human bias by standardizing the selection process. However, these tools often reduce applicants to sociodemographic profiles, which can lead to stereotyping. For instance, an algorithm might overemphasize socioeconomic factors or neighborhood statistics, unintentionally disadvantaging students from underrepresented communities. This can result in racial profiling or exclusion based on flawed data interpretations.

- Bank Lending Decisions

AI systems used in banking to evaluate creditworthiness often incorporate data about an individual’s location or demographic group. For example, an algorithm might penalize borrowers from areas with high default rates, even if an individual applicant has a strong credit history. This approach systematically excludes certain groups, perpetuating cycles of poverty and inequality.
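The lending mechanism described above can be sketched in a few lines of Python. The scoring rule below is hypothetical: the weights, the approval cutoff, and the area default rates are invented, and the code is not how any actual lender scores applicants. It only illustrates how a group-level feature can override an individual’s own strong record.

```python
# Hypothetical credit-scoring rule, for illustration only. The weights,
# the cutoff, and the area default rates are invented, not taken from any lender.
AREA_DEFAULT_RATE = {"area_a": 0.02, "area_b": 0.15}
APPROVAL_CUTOFF = 600


def credit_score(on_time_share: float, area: str) -> float:
    """Combine an individual signal with a group-level (area) signal."""
    individual_part = 700 * on_time_share          # rewards the applicant's own record
    area_penalty = 1000 * AREA_DEFAULT_RATE[area]  # applied to everyone in the area alike
    return individual_part - area_penalty


def approve(on_time_share: float, area: str) -> bool:
    return credit_score(on_time_share, area) >= APPROVAL_CUTOFF


# Two applicants with the same strong record (98% of payments on time).
for area in ("area_a", "area_b"):
    print(area, approve(0.98, area))  # area_a True, area_b False
```

Both applicants have identical payment histories; only the area they live in differs, yet one is approved and the other rejected.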

Broader Implications

These examples illustrate how AI systems can mirror and magnify societal biases. The underlying issue is that algorithms often reflect the values and priorities of the society in which they are developed. For instance, when AI prioritizes efficiency or profitability, it may disregard the nuanced realities of marginalized communities.

Such concerns are especially relevant in market-driven systems. As Noble (2018) argues, when algorithms optimize for profit, they compromise the ability to handle complex social issues. For example, Google’s search algorithms may prioritize trending or profitable content over accuracy or fairness, which can misrepresent certain groups or reinforce stereotypes.

Addressing these challenges requires transparency, critical oversight, and inclusive design processes. By examining real-world cases and their implications, students can better understand the importance of ensuring AI systems promote fairness and equity rather than entrenching existing inequalities.

Psychological Perspective: The Misunderstood Consumer

Consumers’ experiences with AI classification often involve a tension between feeling understood and feeling misunderstood. These feelings arise when AI systems either misclassify consumers or use classifications in ways perceived as discriminatory.

Misclassification and the Evolving Self

One common reason consumers feel misunderstood is when AI assigns them an identity they view as inaccurate or overly simplistic. Human identity is multifaceted, encompassing a mix of personal and social selves that evolve over time. Misclassification can occur when AI focuses on a single dimension or outdated behavior.

For example, a frustrated Spotify user shared their experience with algorithmic misclassification on a community forum:

“The recommendations s*ck: Listened to a few anime covers, now all my ‘Discover Weekly’ is filled with disgusting covers. I’m trying to ‘not like’ all of them, but it doesn’t work. . . . I’ve stopped listening to rock years ago and still get rock recommendations.”

This user felt misunderstood because Spotify’s algorithm categorized them based on past behaviors and failed to capture their evolving musical tastes. The inability to override the algorithm’s assumptions added to their frustration. Misclassification can especially backfire when it clashes with uniqueness motives—the desire to feel distinct and in control of one’s identity.
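One way to see why stale behavior keeps shaping recommendations is to compare an all-time count of plays with a time-decayed count. The Python sketch below assumes a hypothetical listening history and an exponential half-life weighting; the history, the 90-day half-life, and the helper names are invented, and this is not a description of Spotify’s method, which the source does not specify.

```python
# Hypothetical listening history as (genre, days_ago) pairs; invented for illustration.
history = [("rock", 1500)] * 300 + [("anime covers", 20)] * 5 + [("jazz", 10)] * 40


def genre_weights(history, half_life_days=None):
    """Sum plays per genre; with a half-life, older plays count exponentially less."""
    weights = {}
    for genre, days_ago in history:
        w = 1.0 if half_life_days is None else 0.5 ** (days_ago / half_life_days)
        weights[genre] = weights.get(genre, 0.0) + w
    return weights


def top_genre(weights):
    return max(weights, key=weights.get)


print(top_genre(genre_weights(history)))                     # rock (all-time counts)
print(top_genre(genre_weights(history, half_life_days=90)))  # jazz (recent plays dominate)
```

Decay addresses only part of the user’s complaint: years-old rock plays fade, but nothing stops a handful of recent plays from being over-weighted, which is why the ability to override the algorithm’s assumptions also matters.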

Perceived Discrimination in AI Classification

Another source of misunderstanding arises when consumers suspect AI systems use social categories in ways that lead to biased or discriminatory outcomes. This is particularly troubling in areas where AI decisions restrict access to resources, such as credit or employment.

Consider a widely shared example involving the Apple Card:

“The @AppleCard is such a f*ing sexist program. My wife and I filed joint tax returns, live in a community-property state, and have been married for a long time. Yet Apple’s black-box algorithm thinks I deserve 20x the credit limit she does.”

– David Heinemeier Hansson (@dhh), November 7, 2019

In this case, the user was outraged not only by the financial inequity but also by the perceived gender discrimination in the algorithm’s decision-making. Such experiences can negatively affect a consumer’s self-concept, particularly when systemic bias leads to feelings of being “fettered, alone, discriminated, and subservient,” as has been reported among minorities facing systemic financial restrictions.

Combined Effects: Misclassification and Discrimination

In some cases, misclassification and discrimination intersect, compounding consumer frustration and harm. Facial recognition software, for example, has faced criticism for inaccurate and biased results:

- Everyday Inconveniences: When Apple’s Face ID fails to recognize its user, the result is often mild frustration.

- Serious Ethical Violations: When facial recognition is used in policing or surveillance, errors can lead to severe consequences. For instance, in a 2018 test by the American Civil Liberties Union, Amazon’s Rekognition software falsely matched 28 members of the U.S. Congress with mugshots of people who had been arrested, and the false matches disproportionately involved people of color. This issue prompted the Congressional Black Caucus to express concerns about Rekognition’s potential misuse: “Communities of color are more heavily and aggressively policed than white communities . . . . We are seriously concerned that wrong decisions will be made due to the skewed data set produced by what we view as unfair and, at times, unconstitutional policing practices.” The software’s failure highlighted the broader societal implications of biased AI systems. In response to public pressure, Amazon temporarily suspended police use of Rekognition in June 2020.

Managerial Recommendations: Understanding the Misunderstood Consumer

Organizations must address classification errors and biases in AI systems through proactive learning and inclusive design. These steps ensure that AI builds consumer trust and satisfaction rather than undermining it.

Organizational Learning

To effectively address classification errors, organizations need robust mechanisms to identify and respond to biases embedded in AI systems:

- Identifying Biases

Classification errors often generate immediate signals, such as consumer complaints or discrepancies in operational data. For instance, a college applicant who is wrongly rejected due to a biased admissions algorithm might prompt further investigation. Without critical examination, however, organizations may incorrectly view the rejection as justified by competitive criteria.

- Conducting Internal Audits

Organizations should proactively assess their AI systems for risks such as unfair or discriminatory decisions. The Algorithmic Accountability Act, a bill introduced in the U.S. Congress in 2019, provides an example of regulatory efforts to address these concerns. Firms should not wait for mandates but should instead conduct regular audits, collaborating with experts in computer science, sociology, and psychology to uncover and mitigate biases.

- Promoting Transparency and Advocacy

Companies can enhance accountability by sharing their audit processes and findings. For example, organizations might engage in public advocacy to support consumer protection regulations, ensuring that these include strong measures to address algorithmic bias.
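A first-pass internal audit often starts by comparing outcome rates across groups. The Python sketch below assumes a hypothetical log of approval decisions labeled by group; it computes each group’s selection rate and the ratio of the lowest rate to the highest. The 0.8 threshold borrows the “four-fifths rule” from U.S. employment-selection guidance as a screening heuristic. It is one convention among several, and a low ratio signals a need for investigation, not proof of discrimination.

```python
from collections import Counter

# Hypothetical audit log of (group, approved) decisions; invented data.
decisions = (
    [("group_x", True)] * 80 + [("group_x", False)] * 20
    + [("group_y", True)] * 50 + [("group_y", False)] * 50
)


def selection_rates(decisions):
    """Share of favorable outcomes for each group."""
    totals, approvals = Counter(), Counter()
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += approved  # True counts as 1, False as 0
    return {g: approvals[g] / totals[g] for g in totals}


def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


rates = selection_rates(decisions)
ratio = impact_ratio(rates)
print(rates, round(ratio, 3))
if ratio < 0.8:  # "four-fifths" screening threshold
    print("Flag for review: possible adverse impact")
```

In practice an audit would go further (checking error rates, not just approval rates, and controlling for legitimate factors), which is where the interdisciplinary collaboration recommended above comes in.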

Experience Design

Insights from organizational learning should inform AI system design, reducing the likelihood of consumers feeling misunderstood. Managers can implement the following strategies:

- Diversifying Content Recommendations: Consumers often feel confined by AI recommendations based solely on past behaviors. Offering unexpected or varied options can help expand engagement and reduce frustration.

Example: Spotify’s “Taste Breakers” feature introduces users to music outside their usual preferences, challenging narrow categorizations and enhancing the user experience.

- Balancing Short- and Long-Term Goals

AI systems often focus on optimizing short-term behaviors, ignoring consumers’ broader aspirations. To improve alignment, firms should design AI to account for long-term goals.

Example: Instead of reinforcing past behaviors, such as recommending fast food to a consumer attempting to adopt healthier habits, AI could suggest alternatives that align with their wellness objectives.

- Validating AI Inferences

Allowing consumers to review and correct AI's assumptions can reduce feelings of being misunderstood. This added participation can also improve trust and satisfaction.

Example: Platforms could periodically prompt users to confirm or update preferences, ensuring recommendations evolve with their tastes and identities.

- Designing for Inclusion

Firms must audit AI systems for discriminatory practices and design processes that promote diversity. Inclusive design ensures that systems meet the needs of all consumers.

Example: Employing individuals with disabilities to address accessibility biases in AI systems can improve inclusivity. Similarly, diversifying design teams ensures varied perspectives inform AI experiences.
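The following minimal Python sketch makes the idea of diversifying recommendations concrete. It assumes a recommender that already gives each candidate item a relevance score and a single genre label. The `Item` type, the `diversify` function, and the 20 percent exploration share are hypothetical choices made for illustration. They do not describe Spotify's method or any platform's actual algorithm. The function fills most of a slate with the user's highest-scored familiar items and reserves a few slots for items outside their usual genres.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    genre: str
    score: float  # hypothetical relevance score from an existing recommender


def diversify(candidates: list[Item], usual_genres: set[str], k: int,
              explore_share: float = 0.2, seed: int | None = None) -> list[Item]:
    """Build a slate of up to k items, reserving about explore_share of the
    slots for items outside the user's usual genres (hypothetical rule)."""
    rng = random.Random(seed)
    familiar = sorted((c for c in candidates if c.genre in usual_genres),
                      key=lambda c: c.score, reverse=True)
    novel = [c for c in candidates if c.genre not in usual_genres]

    # Number of "taste-breaking" slots, limited by how many novel items exist.
    n_explore = min(len(novel), round(k * explore_share))
    slate = familiar[: k - n_explore] + rng.sample(novel, n_explore)

    # If too few familiar items exist, top up with the remaining novel ones.
    leftovers = [c for c in novel if c not in slate]
    slate += leftovers[: k - len(slate)]

    rng.shuffle(slate)  # avoid always placing the novel items last
    return slate
```

Raising `explore_share` gives up some short-term relevance in exchange for breadth. That trade-off mirrors the tension between reinforcing past behavior and widening a consumer's horizons.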

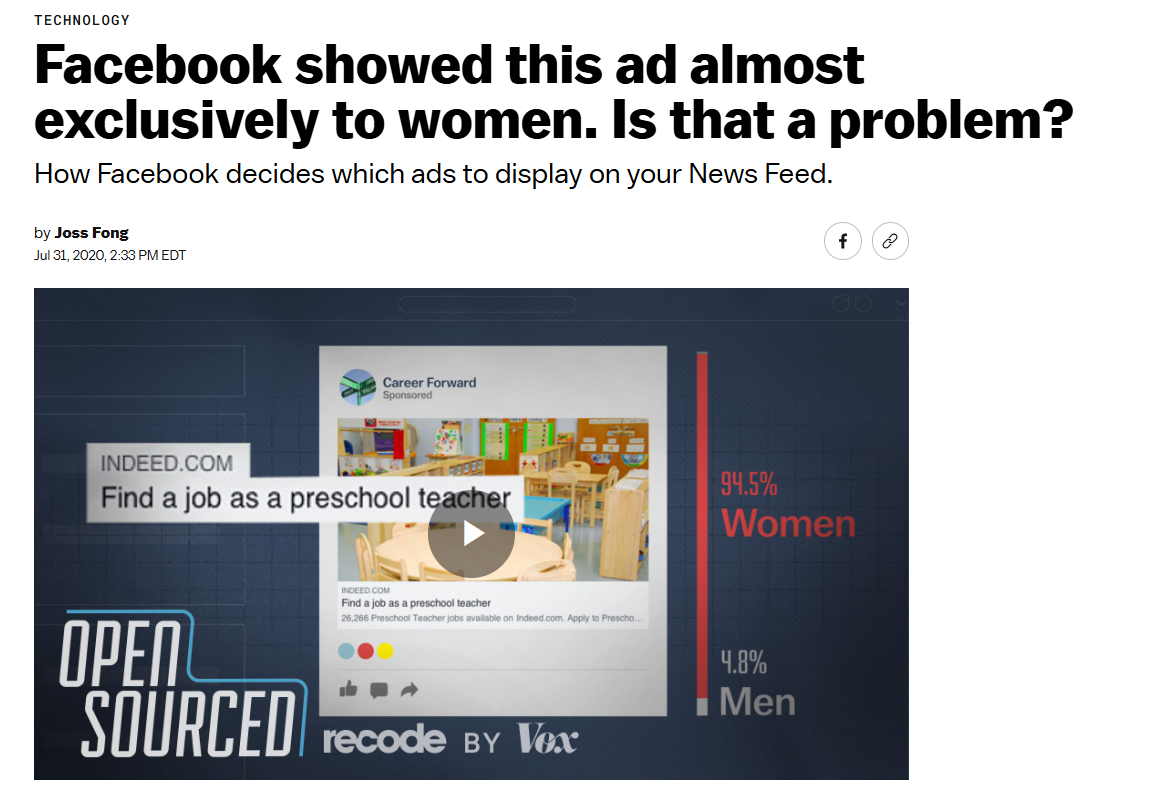

AI Classification in the wild

NOTE: Part 1 stops here and Part 2 starts below.

The AI Delegation Experience

The automation capability in AI enables systems to perform tasks on behalf of consumers, creating a new paradigm of human-machine collaboration. This process establishes the delegation experience, where consumers entrust AI systems with various responsibilities ranging from simple digital tasks to complex real-world actions. Some consumers actively delegate specific tasks, like using Google Assistant to schedule appointments, while others engage in passive delegation through systems that learn and automatically adjust to their preferences, such as smart home devices that regulate temperature (Bandura 1977). For example, AI-powered writing assistants now compose emails, while smart thermostats learn and implement temperature preferences without constant user input.

Benefits of Delegation

The delegation experience offers significant advantages:

- Time Optimization: By offloading routine or time-consuming tasks to AI, consumers can focus on more meaningful or enjoyable activities (Fishbach and Choi 2012).

- Enhanced Efficiency: AI can perform certain tasks more accurately and consistently than humans, leading to better outcomes.

- Skill Focusing: Consumers can concentrate on activities that match their abilities while delegating tasks where they might underperform (Botti and McGill 2011).

Challenges in Delegation

The delegation process raises several concerns for consumers, particularly regarding autonomy and dependency:

- Over-Reliance: Excessive delegation may lead to skill atrophy or reduced self-sufficiency in certain areas.

- Decision Fatigue: Paradoxically, the abundance of delegation options can create a new burden: consumers must now decide what to delegate (Iyengar and Lepper 2000).

- Control Balance: Consumers must decide how much to delegate while remaining meaningfully engaged in the tasks that matter to them.

These factors can impact consumers’ sense of competency and agency—the feeling that they maintain essential skills and control over their environment. This delegation-dependency balance has both practical and psychological implications for personal development and satisfaction.

The Balance of Delegation

While delegation can enhance productivity and life satisfaction, it often creates tension between being empowered and being replaced. Consumers may appreciate the efficiency gains while feeling concerned about becoming too dependent on AI systems. Finding the right balance between leveraging AI for task automation and maintaining personal competency is crucial for both consumers and businesses implementing AI solutions. By providing clear control mechanisms and ensuring transparent delegation processes, companies can help consumers maintain agency while benefiting from AI assistance.

Sociological Context: The Transhumanist Narrative

The possibility of being replaced by AI raises sociological concerns about delegation and human redundancy. These concerns are deeply connected to the “transhumanist” narrative, a recurring theme in popular culture and social science literature. Stories like Fritz Lang’s Metropolis, Isaac Asimov’s I, Robot, Mary Shelley’s Frankenstein, and James Cameron’s Terminator explore the risks of enhancing human capabilities through technology. These works caution that striving to transcend human limitations can result in technology evolving into a superhuman force that redefines perfection, often at the expense of human values.

The Shift Toward Limitless Performance

A defining feature of the transhumanist narrative is its focus on limitless performance. Critics argue that when AI is applied to domains like health, productivity, and aging, it often prioritizes extreme optimization over general well-being. This shift can marginalize human needs, creating “new logics of expulsion” where technological efficiency leads to economic redundancy. For example, in workplaces where AI-driven automation replaces human labor, entire populations may lose their place in the productive aspects of society, becoming surplus to economic requirements.

Contemporary Examples of Transhumanist Ideals

Transhumanist principles are embedded in many AI applications today. Products like the Roomba are marketed as superior to human labor, offering efficiency and convenience. Similarly, genetic testing services such as 23andMe promote visions of biologically optimized futures by suggesting that genetic data can improve health outcomes or even guide reproductive decisions. These technologies align with the transhumanist promise of reshaping human capabilities but often reinforce systems that prioritize progress and perfection over inclusivity and humanity.

The Role of Mythic Narratives in AI Adoption

Since the mid-20th century, technology companies have used mythic narratives to shape public perceptions of AI. These narratives often portray science and technology as forces capable of solving humanity’s greatest challenges, including achieving immortality. While these promises inspire innovation, they also normalize systemic dehumanization. For example, when AI-driven systems are designed to enforce rigid specifications or optimize behavior without individual consent, they erode personal autonomy and treat human replacement as a natural cost of progress.

Broader Implications

The transhumanist narrative reveals deeper societal tensions about the role of AI in shaping the future. By emphasizing perfection, efficiency, and progress, this perspective risks glorifying a form of technological determinism that undermines social justice and democracy. As AI systems contribute to the rise of a “useless class”—individuals whose skills are no longer valued—society may face increasing inequality and exclusion. Understanding this context is crucial to addressing the ethical and societal challenges posed by AI’s integration into human life.

Psychological Perspective: The Replaced Consumer

While delegation to AI can empower consumers by making tasks easier or more efficient, it also raises psychological concerns about being replaced. The ability of AI to substitute for human labor can feel threatening for three key reasons.

The Threat to Ownership and Accomplishment

Humans have an intrinsic desire to attribute outcomes to their own effort and skills, which fosters a sense of accomplishment (Bandura 1977). When AI performs tasks on their behalf, it can diminish this sense of ownership. Research on human–computer interaction shows that people often feel disempowered by machines, blaming them for failures while reserving credit for successes (Moon and Nass 1998). For activities tied to personal identity, delegating tasks to AI may even feel like cheating.

In recreational fishing, for instance, technologies such as GPS navigation, fish-finding sonar, and advanced lures have transformed the activity. However, these tools risk undermining the identity of the craft. Biologist Culum Brown captures this sentiment:

“If you are going to use GPS to take you to a location, sonar to identify the fish and a lure which reflects light that humans can’t even see, you may as well just go to McDonald’s and order a fish sandwich.”

This statement underscores how AI might strip activities of their personal and skill-based significance.

Skill Erosion and Reduced Engagement

AI delegation can also prevent consumers from practicing and refining their skills, which negatively affects self-worth. This reliance on AI can lead to a “satisficing” mentality, where individuals settle for outcomes that are merely adequate rather than actively pursuing mastery.

For example, journalist John Seabrook described using Google’s Smart Compose feature while writing an email. He intended to write, “I am pleased,” but accepted the suggestion, “I am proud of you,” by hitting Tab. Reflecting on this moment, Seabrook questioned whether delegating creative tasks to AI was a step backward for his own abilities:

“If I allowed [Google’s] algorithms to navigate to the end of my sentences, how long would it be before the machine started thinking for me?”

This example illustrates how even minor acts of delegation, such as accepting autocomplete suggestions, can provoke deeper concerns about diminishing personal creativity.

Loss of Control and Self-Efficacy

A further consequence of delegating to AI is the loss of self-efficacy, which is essential for feeling in control (Bandura 1977). Engaging in creative or effortful tasks heightens self-efficacy by reinforcing personal agency (Dahl and Moreau 2007; Norton, Mochon, and Ariely 2012). Conversely, reliance on AI can lead to a perceived surrender of control.

This dynamic is evident in instances where GPS systems cause accidents due to over-reliance on machine guidance. For example, tourists in Australia drove into the ocean while trying to reach an island, stating that their GPS system “told us we could drive down there.” Such scenarios highlight how consumers can relinquish personal judgment in favor of machine-generated instructions, with potentially damaging results.

Managerial Recommendations: Understanding the Replaced Consumer

Organizational Learning

Companies must learn how to integrate the human desire for self-efficacy into their operations and strategies. They can do this in three primary ways:

- Engaging with interdisciplinary experts: Organizations should collaborate with family scholars, workplace psychologists, and health sociologists to explore the implications of replacing human tasks with AI. Understanding these effects can help businesses make informed decisions about how AI affects consumers’ psychological well-being and societal roles.

- Dialoguing with consumers: Firms should hold open discussions with consumers to identify which tasks they prefer to keep under their own control and which they are comfortable delegating to AI. These insights are essential for designing AI solutions that respect consumer preferences and encourage adoption. In healthcare, for example, AI outperforms human doctors in many technical areas but still falls short on other technical tasks and on critical social skills. This makes human oversight indispensable.

- Designing for self-efficacy: Because self-efficacy is closely tied to control, businesses should design delegation experiences that let consumers make choices and take actions. A classic example of maintaining consumer involvement is Betty Crocker's cake mix strategy. Requiring users to crack fresh eggs helped restore their sense of contribution and improved product adoption. Modern AI can offer similarly subtle mechanisms for user input. Letting users correct or adjust an algorithm's output, even minimally, can increase trust and adoption. In forecasting studies, for example, people were more willing to rely on accurate algorithmic predictions when they had a chance to modify them slightly. Autonomous vehicles could likewise let users customize peripheral features such as interior settings or route preferences, which gives them a sense of control over the technology. Digital assistants in gaming, in turn, should avoid overly humanlike behaviors that might undermine players' autonomy and immersion.
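The bounded-adjustment idea from the forecasting example can be sketched in a few lines of Python. This sketch is an illustrative assumption and not the design used in the studies. The function name `adjusted_forecast` and the default limit of 5 units are hypothetical. The user can nudge the algorithm's forecast, but only within a fixed band. The result stays close to the model's output while the user still contributes to it.

```python
def adjusted_forecast(model_forecast: float, user_adjustment: float,
                      max_change: float = 5.0) -> float:
    """Apply a user's tweak to an algorithm's forecast, capped at +/- max_change.

    The cap keeps the final number close to the model while still giving the
    user a real say in the outcome. max_change is a hypothetical default.
    """
    if max_change < 0:
        raise ValueError("max_change must be non-negative")
    # Clamp the user's adjustment into the allowed band [-max_change, max_change].
    bounded = max(-max_change, min(max_change, user_adjustment))
    return model_forecast + bounded
```

For example, a user who tries to raise a forecast of 80 by 12 receives 85, because the change is capped at 5. A smaller tweak of -3 passes through unchanged and gives 77.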

Organizational Culture and Design

To foster these insights across all levels of the organization, firms should implement structures that allow learnings from external collaborations and consumer feedback to permeate their technical teams. For instance:

- Hiring creative professionals such as artists, chefs, or artisans into AI-focused experience design roles can bring fresh perspectives that prioritize human values.

- Learning from organizations that emphasize human strengths—like museums, theaters, or humanities departments—can provide inspiration on integrating AI while preserving creativity, emotional intelligence, and nuanced judgment. For example, partnerships with cultural institutions can guide AI implementation strategies that celebrate traditional human values such as collaboration, creativity, and community rather than replacing them.

Experience Design

The insights from organizational learning should inform how companies design AI delegation experiences that respect consumer self-efficacy and identity. Effective division of labor between humans and AI can enhance consumer confidence and satisfaction. For instance:

- In healthcare, AI-powered surgical robots deliver precision, efficiency, and broader accessibility. However, their success depends on how well surgeons supervise and collaborate with these robots. Patients often hesitate to trust a fully automated process, emphasizing the need for clear human oversight during critical procedures.

- Beyond healthcare, AI should be positioned as a tool to amplify uniquely human abilities rather than replace them. This approach applies across industries, enabling businesses to meet consumer expectations for control, engagement, and self-expression while embracing AI’s transformative potential.

AI Delegation in the wild

The AI Social Experience

AI’s capability for engaging in reciprocal communication produces a “social experience.” This interaction can occur when consumers know they are communicating with AI (e.g., voice assistants like Siri) or when they initially believe they are interacting with a human representative (e.g., chatbots providing customer service). While these social interactions can foster convenience, efficiency, and even emotional connection, they also risk leaving consumers feeling alienated—especially when AI fails to address specific user needs or reinforces harmful stereotypes.

Benefits of Social AI

- Natural Interaction and Trust

AI interfaces that mimic human traits can make consumers feel more at ease. Anthropomorphic cues in AI (e.g., voice assistants using human speech patterns) can build trust, reduce perceived risk, and enhance consumer comfort (Waytz, Heafner, and Epley 2014).

- Efficient Access to Services

In many contexts, the alternative to AI is no interaction at all. Automated chatbots and voice assistants provide "conversational commerce," giving consumers round-the-clock access to firms. Such continuous availability and swift responses can streamline customer support, improving overall service efficiency.

- Potential Emotional Connection

Advances in social robotics promise more comfortable and meaningful service interactions (Van Doorn et al. 2017). When humanlike features and behaviors are successfully integrated, consumers may feel that AI can understand their needs, fostering a deeper sense of connection.

Challenges in Social AI

- Alienating Interactions

Despite efficiency gains, AI interactions often fail to capture the nuance of human conversation. Poorly programmed chatbots or voice assistants can produce jarring, automated responses, causing frustration. For example, a chatbot's insensitive or irrelevant replies can deepen consumers' discomfort (Wong 2019).

- Reinforcing Societal Inequities

AI-driven social experiences can inadvertently uphold discrimination. Chatbots and algorithms may adopt biased language or behaviors when their underlying data reflect societal prejudices. Microsoft's Tay, which quickly began using offensive language on Twitter, exemplifies how easily AI can be co-opted to perpetuate harmful stereotypes (Me.me 2020).

- Stereotypical Anthropomorphism

Humanizing AI often relies on simplistic gender or racial tropes. Female-voiced assistants, for instance, risk normalizing a vision of AI as “passive and subservient” (West, Kraut, and Chew 2019). Such design choices both reflect and reinforce patriarchal norms, potentially alienating women and racial minorities.

The Double-Edged Sword of Social Experience

In the AI social experience, consumers may experience the benefits as a feeling of connection with AI. On the other hand, they may also feel alienated. Negative reactions to simulated interactions can go well beyond occasional disappointment. This is especially true when these interactions occur within rich cultural contexts where unbalanced intergroup relations and discrimination may be amplified rather than alleviated. In such cases, the very humanlike qualities designed to foster connection and trust may instead heighten concerns about bias and inequality. This underscores the delicate balance between creating meaningful engagement and inadvertently triggering feelings of exclusion or unease.

Sociological Context: The Humanized AI Narrative

Human fascination with machines that mimic human traits has significantly shaped the sociological narrative surrounding AI. This preference for humanized technology is deeply ingrained in cultural representations, particularly in science fiction, where artificial beings often embody idealized human characteristics. For instance, female robots—or “gynoids”—are frequently depicted as compliant and alluring in works like Blade Runner (as “basic pleasure models”), Westworld (as sex workers), and Cherry 2000 (where they are traded as commodities). These portrayals highlight how AI has often been imagined to fulfill societal and gendered fantasies rather than challenge them.

The Commercialization of Humanized AI

In the modern marketplace, this cultural fascination has evolved into a strategy for creating AI products designed to enhance user experience. Anthropomorphic chatbots and voice assistants are examples of this trend, employing human-like traits to foster trust and familiarity. Although users are typically less open and expressive when interacting with AI compared to human counterparts, the integration of human characteristics—such as conversational tone and empathetic responses—helps bridge this gap. This approach has led to the creation of AI products that are not merely tools but companions, often described as “artificial besties.”

For example, personal assistants like Siri, Alexa, and Google Assistant engage users with human-like speech and emotional cues. These interactions make the technology feel approachable, encouraging deeper user engagement. By mimicking human behavior, these AI systems aim to seamlessly integrate into everyday life, building emotional connections that increase reliance and trust.

Gender Bias in AI Design

However, the humanization of AI often reproduces societal biases, particularly around gender roles. Many AI systems adopt traditionally feminine personas, portraying traits like helpfulness, submissiveness, and emotional sensitivity. This dynamic reflects long-standing stereotypes, as seen in fictional depictions like the robot Maria in Metropolis, where female-coded machines are designed to serve and obey. These gendered assumptions are not merely byproducts of design but intentional choices that align with dominant societal norms and preferences.

This bias has tangible consequences. For example, Siri’s earlier programming allowed it to respond to sexist remarks like “you’re a slut” with “I’d blush if I could,” inadvertently reinforcing misogynistic attitudes. Such responses expose the underlying cultural biases embedded in AI systems, reflecting the values of the predominantly male technology sector. These biases can alienate women and marginalized groups, limiting the inclusivity of AI technologies.

The Challenge of Inclusivity

The humanized AI narrative underscores the potential for technology to both connect and exclude. While anthropomorphism enhances usability and fosters emotional engagement, it also risks reinforcing stereotypes and alienating diverse user groups. AI products, by catering to specific demographic preferences, often fail to consider the experiences and needs of broader populations, including women and racial minorities.

Addressing these challenges requires rethinking how AI systems are designed. Moving beyond stereotypical portrayals and dualistic gender norms could enable AI to foster greater social inclusivity. By questioning the assumptions that guide the humanization of AI, developers can create products that bridge social divides rather than deepen them. Recognizing and addressing these issues is crucial for ensuring that AI contributes positively to society and reflects the diversity of its users.

Psychological Perspective: The Alienated Consumer

AI-enabled social experiences have the potential to strengthen consumer–firm relationships but can also alienate consumers. Two primary types of alienation emerge in these interactions.

Alienation Through General Interaction Failures

The first type of alienation arises when AI systems fail to meet consumer expectations during routine interactions. These failures often highlight the inability of AI to handle complex or sensitive social cues. For example, the following exchange between a customer and the chatbot UX Bear demonstrates this issue:

Bot: “How would you describe the term ‘bot’ to your grandma?”

User: “My grandma is dead.”

Bot: “Alright! Thanks for your feedback. [Thumbs up emoji]”

Such instances illustrate why many consumers resist replacing human interactions with automated systems. Studies show that consumers often feel discomfort when interacting with “social robots” in service roles. For instance, customer responses in a field study became significantly more negative when participants were informed in advance that their interaction partner would be a machine rather than a human.

In response to such alienation, many consumers are gravitating toward more personal and authentic interactions, seeking alternatives that emphasize human connections in marketing and service experiences.

Alienation Through Exclusion and Inequity

The second type of alienation stems from AI systems failing to meet the needs of specific groups, often exacerbating social inequities. For example, the UK government’s automated Universal Credit program led to alienating experiences for individuals like Danny Brice, a claimant with learning disabilities and dyslexia:

“I call it the black hole. … I feel shaky. I get stressed about it. This is the worst system in my lifetime. They assess you as a number, not a person. Talking is the way forward, not a bloody computer. I feel like the computer is controlling me instead of a person. It’s terrifying.”

Similarly, AI systems that replicate societal biases can produce exclusionary or harmful outputs. Microsoft’s chatbot Tay, for example, quickly began producing offensive responses after being exposed to biased input from users:

User: “What race is the most evil to you?”

Bot: “Mexican and black.”

Such examples underscore how poorly designed AI can reflect and amplify the discrimination present in society, further alienating marginalized groups.

Even seemingly benign AI interactions can perpetuate harmful social norms. Journalist Sigal Samuel described her unsettling experience with Siri while investigating sexist behavior in AI systems:

User: “Siri, you’re ugly.”

Siri: “I am?”

User: “Siri, you’re fat.”

Siri: “It must be all the chocolate.”

User: “Don’t worry, Siri. This is just research for an article I’m writing!”

Siri: “What, me, worry?”

Such exchanges illustrate how AI can inadvertently reinforce societal pressures and biases, particularly regarding women’s appearance.

Broader Implications of Alienation

Alienating social experiences with AI not only damage consumer trust but also risk perpetuating broader societal inequalities. Research shows that consumers express more frustration with female-voiced AI during conversational failures, reflecting ingrained gender biases. These frustrations often manifest as objectifying or dismissive behaviors, reinforcing stereotypes about women as submissive or inadequate.

As AI continues to evolve, mimicking human interactions more closely, organizations face critical ethical challenges. Addressing these challenges requires careful design and oversight to ensure AI systems foster inclusivity and equity rather than exclusion and harm.

Managerial Recommendations: Understanding the Alienated Consumer

Organizational Learning

To address consumer alienation in AI interactions, organizations should focus on developing inclusive and ethically informed practices:

- Gathering Consumer Insights

Collect data from real-world AI interactions to identify patterns of alienation, such as heightened stress in customer responses or frequent dissatisfaction. For example, analyzing sentiment in chatbot interactions can reveal moments where AI fails to meet consumer needs, guiding improvements.

- Engaging with Interdisciplinary Experts

Collaborate with psychologists, sociologists, and linguists to understand the root causes of alienation. Experts can help organizations avoid embedding harmful stereotypes into AI systems, such as defaulting to gendered voices or names.

- Reevaluating Anthropomorphism

Reassess the assumption that humanizing AI always enhances consumer relationships. For instance, reducing reliance on anthropomorphic traits, such as overly humanlike personas, can help avoid reinforcing stereotypes while still maintaining effective user experiences.

- Integrating Ethical Considerations

Partner with ethics-focused organizations to examine the societal impact of AI systems. This includes considering how automated services might disproportionately exclude or harm vulnerable populations, even when meeting technical performance standards.

Experience Design

Using insights from organizational learning, firms can design AI interactions that reduce alienation and foster trust:

- Improving Responsiveness

Design AI systems that explain failures transparently and empathetically. For example, if an AI system encounters an error, it could provide a clear explanation and guidance on next steps to reduce consumer frustration.

- Facilitating Human Escalation

Ensure seamless transitions from AI to human representatives when consumers encounter challenges. For instance, a service bot could offer an immediate option to connect with a live agent when it detects stress or repeated failed attempts to resolve an issue.

- Creating Inclusive Designs

Develop gender-neutral voices and personas for AI systems to minimize reinforcement of stereotypes. Recent innovations in creating nonbinary AI voices demonstrate how inclusivity can enhance user experiences while avoiding bias.

- Enhancing Equity in Services

Tailor AI systems to accommodate the needs of marginalized groups. For example, when deploying AI for essential services like social welfare programs, ensure the system is accessible to users with disabilities or low technological literacy, addressing barriers that might exclude them.
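The human-escalation recommendation can be expressed as a simple decision function. The hypothetical Python sketch below offers a handoff to a live agent in two cases: after a set number of unresolved turns, or when the customer's message contains words from a small list of cues. The cue list, the threshold of two failures, and the names `Conversation` and `should_escalate` are all assumptions. A deployed system would more likely rely on a trained sentiment or intent model than on keyword matching.

```python
from dataclasses import dataclass

# Hypothetical cue words signaling frustration or an explicit request for a person.
STRESS_CUES = {"frustrated", "angry", "useless", "ridiculous", "agent", "human"}


@dataclass
class Conversation:
    failed_attempts: int = 0  # turns the bot could not resolve so far


def should_escalate(conv: Conversation, message: str, resolved: bool,
                    max_failures: int = 2) -> bool:
    """Return True if the bot should offer a handoff to a live agent."""
    if not resolved:
        conv.failed_attempts += 1
    # Crude keyword check standing in for a real sentiment/intent model.
    words = {w.strip(".,!?").lower() for w in message.split()}
    stressed = bool(words & STRESS_CUES)
    return stressed or conv.failed_attempts >= max_failures


convo = Conversation()
print(should_escalate(convo, "Where is my refund?", resolved=False))  # False
print(should_escalate(convo, "Still nothing.", resolved=False))       # True
```

Escalation triggers on whichever comes first, visible distress or repeated failure. This matches the idea that consumers should not have to fight the system to reach a person.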

By adopting these strategies, organizations can create AI experiences that not only meet functional goals but also foster equitable and inclusive consumer relationships.

Key Terms

| Category | Term | Definition |
|---|---|---|
| Core Experience Dimensions | Data capture | Collection of personal and contextual data by AI, both actively provided and passively recorded from behaviors and devices. |
| | Classification | Using patterns in data to sort people into segments, profiles, or predictions that drive recommendations and decisions. |
| | Delegation | Letting AI perform tasks on your behalf, from suggestions and scheduling to automated actions in the real world. |
| | Social interaction | Conversational engagement with AI agents or chatbots that simulate human dialogue to deliver service or support. |
| Consumer Tensions | Served vs exploited | Benefit from personalization while risking privacy loss and commodification of personal information. |
| | Understood vs misunderstood | Feeling accurately recognized by AI versus being reductively labeled or misclassified in ways that feel wrong. |
| | Empowered vs replaced | Gaining convenience and efficiency while fearing loss of skills, agency, or relevance. |
| | Connected vs alienated | Feeling supported by conversational AI versus frustrated or excluded by robotic or biased interactions. |
| Consumer Psychology | Personal control | Sense that you are directing outcomes, not being controlled by opaque systems or defaults. |
| | Psychological reactance | Motivated resistance that arises when people feel their choices or autonomy are restricted. |
| | Self-efficacy | Belief in your ability to perform tasks and achieve goals without overreliance on automation. |
| Sociological Context | Surveillance capitalism | Business model that monetizes personal data to predict and shape behavior at scale. |
| | Algorithmic bias | Unfair outcomes that result from skewed data, labels, features, or objectives embedded in AI systems. |
| | Anthropomorphism | Attributing human traits to AI, which can build trust but also reinforce stereotypes. |
| Design and Governance | Choice architecture | Design of options, defaults, and information that influences decisions in AI-mediated experiences. |
| | Human escalation | Providing an easy handoff from AI to a human agent when the system fails or stakes are high. |
| | Preference validation | Letting users review, correct, and update AI's assumptions to keep recommendations aligned with evolving identities. |
| | Transparency | Plain-language explanations about data use, model influence, and why specific recommendations or decisions were made. |

Summary: AI and the Transformation of Consumer Experiences

Key Takeaways:

This chapter connects the technical capabilities of AI with their real-world impact on consumer experiences. It bridges the theoretical understanding of AI with practical implementation challenges, setting up the discussions of specific applications in later chapters.

- AI consumer experiences are shaped by four core capabilities: data capture, classification, delegation, and social interaction

- Each capability creates distinct tensions between benefits and potential harms (e.g., personalization vs. privacy)

- Successful AI implementation requires balancing automation benefits with maintaining human agency and control

- Consumer trust depends heavily on transparency, fairness, and clear communication about AI systems

- Organizations must consider both individual consumer needs and broader societal implications of AI deployment

- Design choices in AI systems can either reinforce or help overcome existing social biases and inequalities

AI in Action: Netflix’s recommendation system exemplifies these concepts in practice – it uses data capture to understand viewing habits, classification to categorize viewers, delegation to automate content selection, and social features to enhance engagement. However, it also demonstrates challenges like potential bias in recommendations and privacy concerns.

Think Deeper:

- How can organizations balance the efficiency gains of AI automation with maintaining meaningful human engagement?

- What responsibilities do companies have in ensuring their AI systems don’t perpetuate social inequalities or biases?

- How might the growing sophistication of AI systems affect consumer trust and acceptance?

Further Exploration:

- Examine case studies of companies successfully managing the tensions between AI capabilities and consumer concerns

- Investigate emerging best practices in ethical AI design and implementation

- Research how different cultures and demographics respond to AI-driven consumer experiences

- Study the evolution of consumer attitudes toward AI as the technology becomes more prevalent