Chapter 4: Sensation and Perception

How We See

Learning Objectives

- Describe the basic anatomy of the visual system

- Describe how light waves enable vision

Anatomy of the Visual System

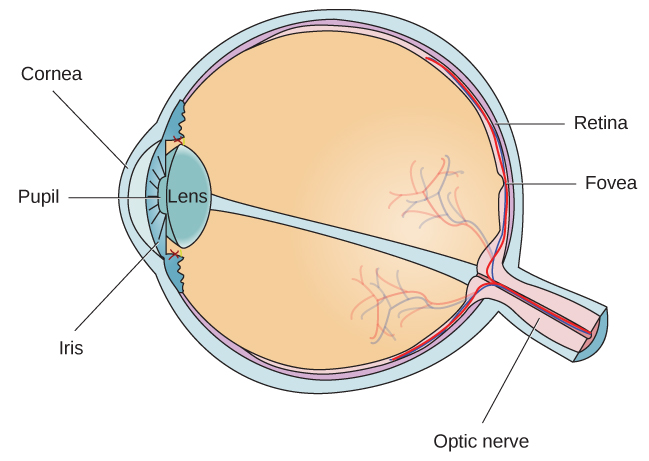

The eye is the major sensory organ involved in vision (Figure 1). Light waves are transmitted across the cornea and enter the eye through the pupil. The cornea is the transparent covering over the eye. It serves as a barrier between the inner eye and the outside world, and it is involved in focusing light waves that enter the eye. The pupil is the small opening in the eye through which light passes, and the size of the pupil can change as a function of light levels as well as emotional arousal. When light levels are low, the pupil will become dilated, or expanded, to allow more light to enter the eye. When light levels are high, the pupil will constrict, or become smaller, to reduce the amount of light that enters the eye. The pupil’s size is controlled by muscles that are connected to the iris, which is the colored portion of the eye.

After passing through the pupil, light crosses the lens, a curved, transparent structure that serves to provide additional focus. The lens is attached to muscles that can change its shape to aid in focusing light that is reflected from near or far objects. In a normal-sighted individual, the lens will focus images perfectly on a small indentation in the back of the eye known as the fovea, which is part of the retina, the light-sensitive lining of the eye. The fovea contains densely packed photoreceptors, or light-detecting cells (Figure 2). The photoreceptors in the fovea are cones, a specialized type that works best in bright light conditions. Cones are very sensitive to fine detail and provide tremendous spatial resolution. They are also directly involved in our ability to perceive color.

While cones are concentrated in the fovea, where images tend to be focused, rods, another type of photoreceptor, are located throughout the remainder of the retina. Rods are specialized photoreceptors that work well in low light conditions, and while they lack the spatial resolution and color function of the cones, they are involved in our vision in dimly lit environments as well as in our perception of movement on the periphery of our visual field.

We have all experienced the different sensitivities of rods and cones when making the transition from a brightly lit environment to a dimly lit environment. Imagine going to see a blockbuster movie on a clear summer day. As you walk from the brightly lit lobby into the dark theater, you notice that you immediately have difficulty seeing much of anything. After a few minutes, you begin to adjust to the darkness and can see the interior of the theater. In the bright environment, your vision was dominated primarily by cone activity. As you move to the dark environment, rod activity dominates, but there is a delay in transitioning between the phases. If your rods do not transform light into nerve impulses as easily and efficiently as they should, you will have difficulty seeing in dim light, a condition known as night blindness.

Rods and cones are connected (via several interneurons) to retinal ganglion cells. Axons from the retinal ganglion cells converge and exit through the back of the eye to form the optic nerve, which carries visual information from the retina to the brain. Because there are no photoreceptors at the point where the optic nerve leaves the eye, there is a corresponding point in the visual field called the blind spot: even when light from a small object is focused on the blind spot, we do not see it. We are not consciously aware of our blind spots for two reasons. First, each eye gets a slightly different view of the visual field, so the blind spots do not overlap. Second, our visual system fills in the blind spot so that although we cannot respond to visual information that occurs in that portion of the visual field, we are also not aware that information is missing.

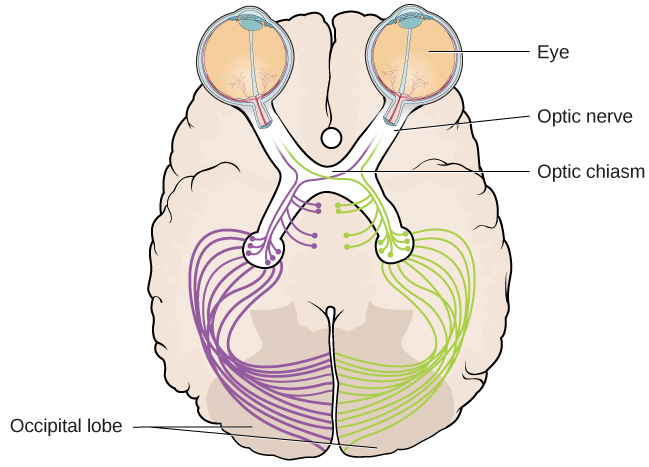

The optic nerves from the two eyes merge just below the brain at a point called the optic chiasm. As Figure 3 shows, the optic chiasm is an X-shaped structure that sits just below the cerebral cortex at the front of the brain. At the point of the optic chiasm, information from the right visual field (which comes from both eyes) is sent to the left side of the brain, and information from the left visual field is sent to the right side of the brain.

Once inside the brain, visual information is sent via a number of structures to the occipital lobe at the back of the brain for processing. Visual information might be processed in parallel pathways, which can generally be described as the “what pathway” (the ventral pathway) and the “where/how pathway” (the dorsal pathway). The “what pathway” is involved in object recognition and identification, while the “where/how pathway” is involved with location in space and how one might interact with a particular visual stimulus (Milner & Goodale, 2008; Ungerleider & Haxby, 1994). For example, when you see a ball rolling down the street, the “what pathway” identifies what the object is, and the “where/how pathway” identifies its location or movement in space.

Amplitude and Wavelength

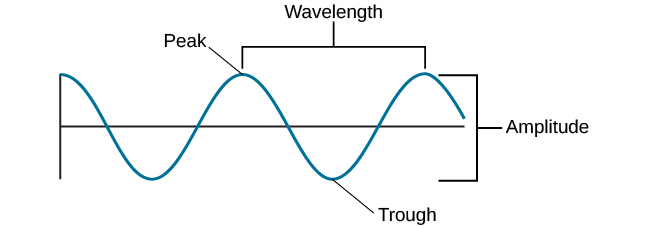

As mentioned above, light enters your eyes as a wave. It is important to understand some basic properties of waves to see how they impact what we see. Two physical characteristics of a wave are amplitude and wavelength (Figure 5). The amplitude of a wave is its height, measured as the distance from the center line to the highest point on the wave (peak or crest) or to the lowest point on the wave (trough). Wavelength refers to the length of a wave from one peak to the next.

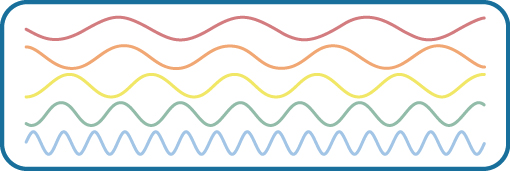

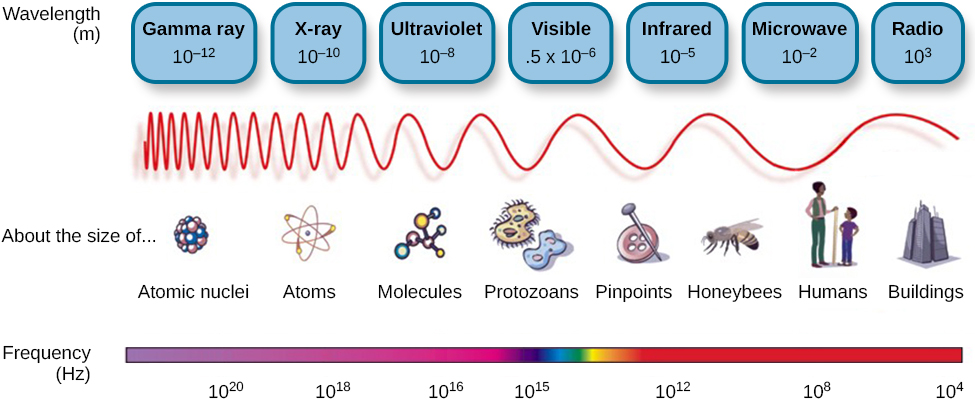

Wavelength is inversely related to the frequency of a given wave form. Frequency refers to the number of waves that pass a given point in a given time period and is often expressed in terms of hertz (Hz), or cycles per second. Longer wavelengths will have lower frequencies, and shorter wavelengths will have higher frequencies (Figure 6).
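The link between wavelength and frequency can be made concrete with the standard wave relation, frequency = speed / wavelength. The short Python sketch below is only an illustration: the function name `frequency_hz` and the choice of the speed of light in a vacuum as the default wave speed are assumptions introduced here, not part of the chapter.

```python
# Speed of light in a vacuum, in meters per second (assumed default wave speed).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def frequency_hz(wavelength_m, speed_m_per_s=SPEED_OF_LIGHT_M_PER_S):
    """Return wave frequency in hertz, using frequency = speed / wavelength."""
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return speed_m_per_s / wavelength_m


# Two waves traveling at the same speed: one is half as long as the other.
long_wave = frequency_hz(2.0)
short_wave = frequency_hz(1.0)

# Halving the wavelength doubles the frequency.
print(short_wave / long_wave)  # 2.0
```

Because the speed is the same for both waves, the shorter wave must complete twice as many cycles per second, which is the pattern described above.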

Light Waves

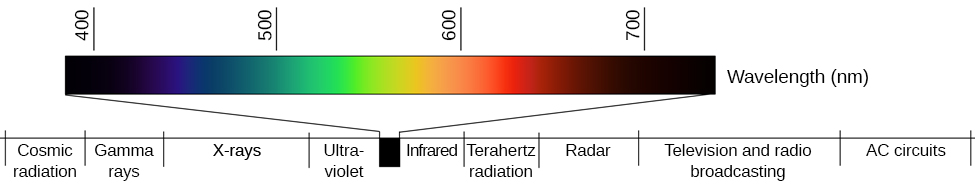

The visible spectrum is the portion of the larger electromagnetic spectrum that we can see. As Figure 7 shows, the electromagnetic spectrum encompasses all of the electromagnetic radiation that occurs in our environment and includes gamma rays, x-rays, ultraviolet light, visible light, infrared light, microwaves, and radio waves. The visible spectrum in humans is associated with wavelengths that range from 380 to 740 nm—a very small distance, since a nanometer (nm) is one billionth of a meter. Other species can detect other portions of the electromagnetic spectrum. For instance, honeybees can see light in the ultraviolet range (Wakakuwa, Stavenga, & Arikawa, 2007), and some snakes can detect infrared radiation in addition to more traditional visual light cues (Chen, Deng, Brauth, Ding, & Tang, 2012; Hartline, Kass, & Loop, 1978).
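To get a feel for these numbers, the following Python sketch checks whether a wavelength falls inside the 380 to 740 nm range given above and converts nanometers to meters. The helper names `is_visible_to_humans` and `nm_to_meters` are hypothetical, and the range limits are treated as sharp cutoffs purely for illustration.

```python
NANOMETERS_PER_METER = 1_000_000_000  # one nanometer is one billionth of a meter

# Limits of the human visible spectrum as stated in the text, in nanometers.
VISIBLE_MIN_NM = 380
VISIBLE_MAX_NM = 740


def is_visible_to_humans(wavelength_nm):
    """Return True if the wavelength falls inside the 380-740 nm range."""
    return VISIBLE_MIN_NM <= wavelength_nm <= VISIBLE_MAX_NM


def nm_to_meters(wavelength_nm):
    """Convert a wavelength from nanometers to meters."""
    return wavelength_nm / NANOMETERS_PER_METER


print(is_visible_to_humans(550))  # True
print(is_visible_to_humans(300))  # False (shorter than the visible range)
print(nm_to_meters(740))          # 7.4e-07
```

Even the longest visible wavelength is less than a millionth of a meter.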

In humans, light wavelength is associated with perception of color (Figure 8). Within the visible spectrum, our experience of red is associated with longer wavelengths, greens are intermediate, and blues and violets are shorter in wavelength. (An easy way to remember this is the mnemonic ROYGBIV: red, orange, yellow, green, blue, indigo, violet.) The amplitude of light waves is associated with our experience of brightness or intensity of color, with larger amplitudes appearing brighter.

Glossary

cornea: transparent covering over the eye

pupil: small opening in the eye through which light passes

iris: colored portion of the eye

lens: curved, transparent structure that provides additional focus for light entering the eye

fovea: small indentation in the retina that contains cones

retina: light-sensitive lining of the eye

photoreceptor: light-detecting cell

cone: specialized photoreceptor that works best in bright light conditions and detects color

rod: specialized photoreceptor that works well in low light conditions

optic nerve: carries visual information from the retina to the brain

blind spot: point where we cannot respond to visual information in that portion of the visual field

optic chiasm: X-shaped structure that sits just below the brain’s ventral surface; represents the merging of the optic nerves from the two eyes and the separation of information from the two sides of the visual field to the opposite side of the brain

amplitude: height of a wave

peak: (also, crest) highest point of a wave

trough: lowest point of a wave

wavelength: length of a wave from one peak to the next peak

frequency: number of waves that pass a given point in a given time period

hertz (Hz): cycles per second; measure of frequency

visible spectrum: portion of the electromagnetic spectrum that we can see

electromagnetic spectrum: all the electromagnetic radiation that occurs in our environment