Chapter 3: Demystifying Artificial Intelligence: Your Guide to the Synthetic Brain

This chapter takes on a very difficult challenge: I want non-technical readers to be able to understand the technical concepts behind modern artificial intelligence (AI). Early AI systems were built on rigid, rule-based programming, but today’s AI relies on neural networks that actually learn. In these systems, the software creates a “learning entity” whose capabilities emerge through a training process rather than being hand-coded by programmers. This learning loosely mimics the way humans acquire skills and knowledge. Both human brains and AI systems process information as signals, but the similarity is limited: the human brain uses electrochemical signals carried through biological neural networks, while AI systems use digital electronic signals passed through artificial, neuron-like connections. And unlike the organic human brain, an AI system has no physical brain of its own; it is a software program running on computer hardware. By modeling aspects of how the human brain learns, researchers have developed AI systems capable of complex functions such as pattern recognition, decision making, and natural language processing. Still, current AI remains much simpler than the human brain and cannot match its general intelligence. My goal is to explain key AI concepts simply enough that readers without a technical background can grasp how these synthetic minds learn and operate.

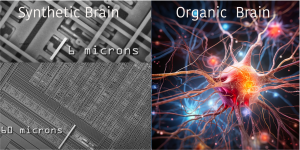

Synthetic AI Brain Vs. Our Human Organic Brain

In the pages that follow, you’ll encounter the terms “Synthetic Brain” to describe artificial intelligence and “Organic Brain” to depict the human mind. These phrases are employed to create a vivid conceptual boundary between the machines we’ve crafted and the biological reality we inhabit.

While they make for compelling and clear delineation, it’s crucial to note that these terms are not equivalent in function or ethical implication. Artificial intelligence, as of this writing, lacks the emotional depth, self-awareness, and moral value that characterize the human brain. On the other hand, using “Organic Brain” does not imply inherent superiority over artificial systems. These terms are simplifications, vehicles for narrative and analysis rather than precise scientific labels. Please bear this nuanced context in mind as you delve into the transformative world of AI in education.

Understanding AI Through the Lens of a Self-Driving Car

Now that we have introduced the idea of a synthetic brain, a real-world example will help illustrate how it functions and what it can do. Imagine embarking on an ambitious project: designing a car that can navigate safely and effectively through diverse terrains across the United States. This isn’t just any car; it embodies the pinnacle of AI technology. This car has three foundational technical capabilities:

Technical Capabilities

- Vision – Provided by sensors that act as the car’s eyes, constantly scanning the environment.

- Processing Unit – The car’s computing center, which receives and stores information and, most importantly, interprets or processes it based on both historical knowledge and what it receives from the sensors.

- Control Mechanism – The system that takes instructions from the processing unit and maneuvers the car by controlling the steering wheel, accelerator, and brakes.

Traditional Approach vs. The Neural Net Approach

In the traditional approach, programmers laboriously write code by hand. The process is prone to errors, especially when road conditions change unexpectedly, and it requires constant updates. It involves:

- Receiving and interpreting sensor data.

- Utilizing the processing unit to decipher this data and formulate appropriate driving instructions.

- Executing these instructions physically through the control mechanism to steer the car.

However, this method has drawbacks, chief among them the extensive and continual human intervention needed to handle changing road conditions, detours, and other surprises.

Enter the revolutionary Neural Net approach, an AI technique that simplifies this process remarkably. Instead of relying on hand-written instructions, the car uses a synthetic brain made of multi-layered neural networks that handles its vision, its decision making, and its physical actions as one seamless system. This approach loosely mirrors human learning: by processing millions of examples of how proficient human drivers behave, the network learns to infer an appropriate response to the situations it encounters.
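To make the contrast concrete, here is a deliberately tiny sketch in Python. It is not a neural network and not how any real self-driving system works; it only shows the shift from a rule a programmer writes by hand to a rule the computer derives from examples. The braking distances and the function names `hand_coded_should_brake` and `learn_brake_threshold` are hypothetical.

```python
def hand_coded_should_brake(distance_m):
    """Traditional approach: a programmer chooses the braking distance by hand."""
    return distance_m < 10.0


def learn_brake_threshold(examples):
    """Learned approach: pick the braking distance that best agrees with
    recorded (distance_to_obstacle_m, human_braked) examples."""
    candidates = sorted(distance for distance, _ in examples)

    def agreements(threshold):
        # Count how many recorded human decisions the rule
        # "brake if closer than threshold" would have reproduced.
        return sum((distance < threshold) == braked for distance, braked in examples)

    return max(candidates, key=agreements)


# Hypothetical recordings of when skilled human drivers braked.
recorded = [(4.0, True), (7.0, True), (12.0, True),
            (15.0, False), (20.0, False), (30.0, False)]
learned = learn_brake_threshold(recorded)
print(learned)                        # the distance the examples point to
print(hand_coded_should_brake(12.0))  # the hand-written rule does not brake here
print(12.0 < learned)                 # the learned rule brakes, as the humans did
```

If drivers start braking earlier, for instance on icy roads, the learned rule can be updated by collecting new examples rather than by rewriting the code.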

Unveiling the Concepts of AI

Artificial Multi-Layered Brain

Imagine the car’s central computer as a well-seasoned driver’s brain, rich with layers of knowledge and understanding. Just as different regions of the human brain work in harmony to make sense of complex situations, the car uses its multi-layered synthetic brain to process data from its surroundings, decide on the safest and most efficient paths, and even learn from its experiences, much like a human learning to become a skilled driver over time.

Learning and Inference

Picture a young driver learning to drive: first by observing and learning from others, and then by practicing and applying those lessons in real scenarios. The AI in the car works the same way: it learns by processing vast amounts of data on safe driving practices and then uses this knowledge to infer the best actions to take in real time while on the road.

Multi-Model Architecture

Consider the scenario where a team of expert drivers combines their knowledge to control a car, each bringing a unique skill set to the table to navigate complex routes seamlessly. The multi-model architecture in AI works similarly, harmonizing various specialized “units” or models, each responsible for understanding different aspects such as road signs, obstacle detection, and traffic flow, functioning in unison to drive the car safely and efficiently.

Back-Propagation

Imagine a driver recalling a mistake they made during a drive, analyzing what went wrong, and making sure not to repeat it. In a similar vein, back-propagation is the algorithm that lets an AI system learn from its errors during training: it measures how far the system’s output was from the correct answer, traces that error backward through the network to find which internal parameters (weights) contributed to it, and adjusts those parameters so the system makes more accurate decisions in the future.
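For readers who want to peek under the hood, here is a minimal sketch of back-propagation in Python. It assumes the smallest possible "network": one input, one hidden neuron, and one output, with no activation function. The training data (the rule "multiply by 6"), the function name `train_two_weights`, and the learning-rate and epoch settings are hypothetical choices for illustration. The forward pass computes an answer; the backward pass uses the chain rule to work out how much each weight contributed to the error, starting at the output and moving back toward the input.

```python
def train_two_weights(xs, targets, w1=0.5, w2=1.0, lr=0.01, epochs=500):
    """Train a tiny chain: input -> hidden neuron (weight w1) -> output (weight w2)."""
    n = len(xs)
    for _ in range(epochs):
        grad_w1 = 0.0
        grad_w2 = 0.0
        for x, target in zip(xs, targets):
            # Forward pass: compute the network's answer.
            hidden = w1 * x
            output = w2 * hidden
            # Backward pass: apply the chain rule, starting at the output.
            d_output = 2 * (output - target)  # slope of squared error w.r.t. output
            grad_w2 += d_output * hidden      # how much w2 contributed to the error
            d_hidden = d_output * w2          # error sent back to the hidden neuron
            grad_w1 += d_hidden * x           # how much w1 contributed to the error
        # Nudge each weight against its average gradient.
        w1 -= lr * grad_w1 / n
        w2 -= lr * grad_w2 / n
    return w1, w2


# Hypothetical training data: the hidden rule is "multiply by 6".
w1, w2 = train_two_weights([1.0, 2.0, 3.0], [6.0, 12.0, 18.0])
print(round(w1 * w2, 3))  # the combined weight ends up very close to 6
```

Neither weight is told the answer directly; each one is corrected only by the share of the error traced back to it, which is the essence of back-propagation in much larger networks too.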

Transfer Learning

Visualize a scenario where our car could instantly acquire the combined experience of thousands of drivers, gaining knowledge from a vast array of unique driving scenarios. Transfer learning makes something like this possible: knowledge that an AI model has already gained, for example from other vehicles’ driving data or from a related task, becomes the starting point for the car’s own system, so it does not have to learn everything from scratch. The result is a richer knowledge base and a better ability to handle a wide variety of driving situations, much like gathering wisdom from a community of seasoned drivers.

One More Metaphor: A High-Tech Kitchen

In case the self-driving metaphor was confusing, I will provide one more:

A high-tech kitchen.

Section 1: Setting the Physical Foundation

The Kitchen Blueprint (Multi-Layered Architecture)

Imagine the kitchen layout as akin to both a human brain and a neural network in AI, structured in layers that make complex functions possible. Picture the stations as layers of neurons, interconnected and working in sequence to produce a result. Each meticulously designed station supports a harmonious flow of ingredients, much as information flows through a neural network, from the raw inputs to the exquisite output presented in a dish.
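In code, the "stations" of this kitchen are layers, and passing a dish from station to station is called a forward pass. The sketch below is a simplified illustration in plain Python under stated assumptions: the weights and biases are made-up numbers rather than learned ones, the function names `dense_layer` and `forward` are hypothetical, and every layer, including the last, uses the ReLU activation (which turns negative values into zero).

```python
def dense_layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of ALL inputs,
    adds its bias, then applies ReLU (negative results become 0)."""
    return [
        max(0.0, sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass data through each layer in order, like a dish moving between stations."""
    for weights, biases in layers:
        inputs = dense_layer(inputs, weights, biases)
    return inputs


# Hypothetical, hand-picked numbers (a trained network would learn these).
example_layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # layer 1: 2 inputs -> 2 neurons
    ([[1.0, 1.0]], [0.0]),                    # layer 2: 2 inputs -> 1 output
]
print(forward([2.0, 1.0], example_layers))
```

Each layer only sees the output of the layer before it, just as each kitchen station works on what the previous station hands over.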

The Chef’s Toolbox (Activation Functions)

The chef’s toolbox holds specific tools that represent activation functions in AI. These tools define how the ingredients (data points) are transformed at each stage. They act as gatekeepers that control the flow, much as synaptic transmission regulates signals in our brains: in the kitchen they orchestrate a dance of flavors, and in an AI system they decide how much of each signal passes on to the next layer.
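The chef’s "tools" are ordinary mathematical functions. This Python sketch shows two common ones, ReLU (Rectified Linear Unit) and sigmoid, applied to a few sample values chosen for illustration. ReLU passes positive signals through unchanged and blocks negative ones; sigmoid squashes any number into the range between 0 and 1.

```python
import math


def relu(x):
    """ReLU: keep positive values, replace negative values with 0."""
    return max(0.0, x)


def sigmoid(x):
    """Sigmoid: squash any number into the range (0, 1).

    The two branches compute the same formula but avoid overflow
    in math.exp for very large positive or negative inputs.
    """
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


for signal in [-3.0, 0.0, 2.0]:
    print(signal, relu(signal), round(sigmoid(signal), 3))
```

ReLU acts like a strict gatekeeper (all or nothing for negative signals), while sigmoid acts more like a dimmer switch, which is one reason different networks favor different activation functions.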

Quality Ingredients (Data Augmentation)

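Quality control begins with choosing diverse and rich ingredients, and data augmentation plays a similar role in AI. Just as a chef sharpens skills by practicing varied cutting techniques on the same vegetable, data augmentation creates varied versions of existing training examples (an image flipped, rotated, or brightened, for instance), offering different perspectives that enhance the AI’s learning and predictive capabilities.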
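Here is a minimal sketch of data augmentation in Python, assuming a tiny grayscale "image" stored as a grid of brightness values from 0 to 255. The function names and the specific transformations chosen here (a mirror flip and a brightness change) are illustrative; real image pipelines usually apply many more variations, often at random.

```python
def flip_horizontal(image):
    """Mirror the image left to right (a cat facing the other way)."""
    return [list(reversed(row)) for row in image]


def adjust_brightness(image, delta):
    """Brighten (positive delta) or darken (negative delta), staying within 0..255."""
    return [[min(255, max(0, pixel + delta)) for pixel in row] for row in image]


def augment(image):
    """Return the original plus several modified copies of the same example."""
    flipped = flip_horizontal(image)
    return [
        image,
        flipped,
        adjust_brightness(image, 40),
        adjust_brightness(flipped, -40),
    ]


tiny_image = [[10, 200], [30, 250]]
for version in augment(tiny_image):
    print(version)
```

One real example becomes four training examples, each showing the same subject from a slightly different "perspective."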

Section 2: The Functional Dynamics

Secret Recipes (Deep Learning)

Delve deeper into the kitchen’s sanctum to find secret recipes, representing deep learning algorithms. These recipes, carefully developed over generations, guide the chef in creating dishes with complexity and depth, akin to deep neural networks identifying intricate patterns from layers of data, continuously evolving to create outputs with unprecedented nuances.

The Chef and Apprentice Dance (Training and Inference)

In this vibrant kitchen, there’s a dynamic duo: the seasoned chef and the keen apprentice. On training day, the chef guides the apprentice through intricate recipes, mirroring the training phase in AI. This sets the stage for inference, in which the apprentice, now adept, independently crafts dishes by applying learned knowledge, just as an AI uses its learned parameters to make predictions or decisions.
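The split between training and inference shows up even in a very simple learner. The sketch below uses a nearest-centroid classifier, chosen here only because it fits in a few lines; it is not a neural network. Training computes the average example for each label, and inference labels a new, unseen example by finding the closest average. The dish scores, labels, and the function names `train_centroids` and `infer_label` are hypothetical.

```python
from math import dist


def train_centroids(examples):
    """Training: learn one average point (a 'centroid') per label."""
    sums, counts = {}, {}
    for features, label in examples:
        running = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            running[i] += value
        counts[label] = counts.get(label, 0) + 1
    # The learned "parameters" are simply these averages.
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def infer_label(model, features):
    """Inference: give a new, unseen example the label of the closest centroid."""
    return min(model, key=lambda label: dist(model[label], features))


# Hypothetical training examples: (saltiness, sweetness) scores out of 10.
dishes = [((8.0, 1.0), "savory"), ((7.0, 2.0), "savory"),
          ((1.0, 9.0), "dessert"), ((2.0, 8.0), "dessert")]
model = train_centroids(dishes)         # training phase
print(infer_label(model, (6.0, 3.0)))   # inference on a dish never seen before
```

Training happens once (or occasionally); inference reuses the stored result many times, which is why the two phases are usually discussed separately.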

The Constant Evolution (Backpropagation Algorithm)

As in any culinary journey, not all paths lead to perfection. When a dish misses the mark, the chef looks back, tracing the steps to find and correct the errors. This is a vivid portrayal of the backpropagation algorithm, in which the system learns from its mistakes and adjusts itself for better outcomes in subsequent attempts, fostering a culture of continuous learning and improvement.

Building on Classics (Transfer Learning)

Our chef respects the classics, yet is not afraid to innovate. By starting with a classic recipe and tweaking elements, the chef saves time while bringing novelty to the menu, akin to transfer learning in AI, where pre-existing models form the base for developing newer, tailored solutions, encouraging a blend of efficiency and creativity.
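Here is a minimal sketch of the idea in Python. The "pretrained" layer and its weights (`PRETRAINED_WEIGHTS`) are hypothetical stand-ins for a model trained earlier on a different task; they are frozen, meaning they are reused but never changed. Only a small new output layer (the "head") is trained for the new task, which is why transfer learning can save time and computation. The training examples and the function names are also made up for illustration.

```python
# Hypothetical weights "learned earlier" on a different task; reused as-is (frozen).
PRETRAINED_WEIGHTS = [[0.5, -0.2], [0.1, 0.8]]


def pretrained_features(x):
    """Frozen layer: weighted sums followed by ReLU. Never updated below."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_WEIGHTS]


def train_new_head(examples, lr=0.05, epochs=2000):
    """Train only the small new output layer on top of the frozen features."""
    head = [0.0, 0.0]
    for _ in range(epochs):
        for x, target in examples:
            features = pretrained_features(x)
            prediction = sum(h * f for h, f in zip(head, features))
            error = prediction - target
            # Gradient step for squared error (the constant factor 2 is folded
            # into the learning rate), applied to the head weights only.
            head = [h - lr * error * f for h, f in zip(head, features)]
    return head


# Made-up examples for the new task.
new_task = [([1.0, 0.0], 1.1), ([0.0, 1.0], 0.8), ([1.0, 1.0], 1.5), ([2.0, 1.0], 2.6)]
print(train_new_head(new_task))
```

Like the chef starting from a classic recipe, the new model inherits the frozen layer’s "know-how" and only has to learn the small part that is specific to the new dish.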

Masterful Communication (Natural Language Processing)

The chef, a master communicator, expertly reads gestures and expressions to understand and respond to diners’ preferences. This epitomizes Natural Language Processing in AI, which interprets and generates human language, fostering seamless interactions and delivering personalized experiences.
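To give a feel for one small NLP task, sentiment analysis, here is a deliberately old-fashioned sketch in Python: it splits text into words and counts matches against two tiny, hypothetical word lists. Modern NLP systems learn these associations from enormous amounts of text instead of using hand-made lists, so treat this only as an illustration of turning language into something a computer can score. The names `POSITIVE`, `NEGATIVE`, `tokenize`, and `sentiment` are illustrative.

```python
import re

# Hypothetical mini word lists; real systems learn these associations from data.
POSITIVE = {"delicious", "great", "love", "fresh", "wonderful"}
NEGATIVE = {"bland", "cold", "terrible", "slow", "burnt"}


def tokenize(text):
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Score a review: +1 per positive word, -1 per negative word."""
    words = tokenize(text)
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The soup was delicious and the bread was fresh!"))
print(sentiment("Service was slow and the fries were cold."))
```

A fixed word list cannot handle sarcasm or phrases like "not bad," which is exactly the kind of nuance learned language models are better at capturing.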

Ethical Kitchen (Ethical Guardrails)

At the heart of this kitchen lies a firm commitment to ethical practices, a representation of the ethical guardrails in AI. Just as the chef promises transparency and quality, AI is designed with principles intended to ensure safety, privacy, and adherence to ethical norms, with the goal of a trustworthy and secure environment.

The Human Brain as the Blueprint for AI Design

The fusion of our understanding of the human brain with technological advancements has paved the way for the incredible phenomenon that is artificial intelligence (AI). This synergy rests on a deep study of the human brain, a masterful tapestry woven with an estimated 100 trillion synapses, the junctions through which neurons (the fundamental units of the brain and nervous system) communicate.

Drawing inspiration from this, AI developers ingeniously crafted synthetic neural networks that mirror this magnificent orchestration, to an extent. At the heart of these networks lie “weights,” inspired by the role of synapses in the brain. Each weight governs the strength of a connection between two artificial neurons, creating communication channels akin to the synapses in our brains, albeit on a much smaller scale. Yet even at this limited scale, AI networks have delivered staggering results, a powerful testament to the design principles borrowed from the neural structures of the human brain.
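One way to see what "weights" means, and how a network’s scale is measured, is to count them. In a fully connected network, every neuron in one layer connects to every neuron in the next, and each connection carries one weight; each neuron usually also has one extra adjustable number called a bias. The Python sketch below counts these for a hypothetical small network with 784 inputs (the number of pixels in a 28 by 28 image), 128 hidden neurons, and 10 outputs. The function name `count_parameters` is illustrative.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists how many neurons each layer has, from input to output.
    """
    total = 0
    for inputs, outputs in zip(layer_sizes, layer_sizes[1:]):
        total += inputs * outputs  # one weight per connection
        total += outputs           # one bias per receiving neuron
    return total


print(count_parameters([784, 128, 10]))  # 101770
```

For comparison, 101,770 adjustable numbers is tiny next to the estimated 100 trillion synapses mentioned above, though a weight and a synapse are only loosely comparable.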

Adding another layer of complexity, many neural networks organize their neurons and data in multi-dimensional grids (an image, for example, is processed as a grid of height, width, and color channels), loosely echoing the brain’s three-dimensional arrangement of neurons. This framework supports a dense, multifaceted interplay of data and learning paths, creating a learning powerhouse that can adapt and grow in sophistication as it is trained.

AI doesn’t just leverage hardware advancements such as Nvidia’s GPUs; it combines them with software architectures that echo the brain’s neural and synaptic structures without fully replicating them. This combination has produced a dynamic and continuously evolving tool, a harmonious blend of biology’s marvels with the frontier spirit of technology. The result is a rich, interconnected network, less potent than the human brain yet remarkably capable, functioning as a vibrant ecosystem that breathes life into AI education, replete with possibilities that stretch as far as the mind can reach.

Mystery and Magical Results: Not Fully Understood?

In a twist that evokes shades of both awe and existential wonder, even the most brilliant minds behind AI technology can’t fully decipher what transpires within the layers of a neural network once it’s trained. Unlike traditional programming, where each line of code is a set instruction crafted by human hands, neural networks learn from data and make decisions through interconnected nodes, weighted through experience. This process, fascinatingly opaque, is often described as a “black box.” While the inputs and outputs are observable, the precise internal computations remain enigmatic. It’s akin to knowing the ingredients of a complex potion and seeing its effects, but not fully understanding the alchemy that transpires in the cauldron. Thus, the synthetic brain exists in a realm of intelligent mystery, compelling in its capabilities but equally intriguing in its inscrutability.

The “AI Transformer”—A key element of the Synthetic Brain.

In the hidden vaults of AI architecture, the “transformer” stands as a revolutionary blueprint, fundamentally altering how machines understand and generate human language. Devised originally for tasks like translating languages, its design sidesteps the sequential processing of its predecessors, capturing instead the nuances of context across a sentence or paragraph in one fell swoop. Think of it as an orchestral conductor, absorbing individual notes from multiple instruments and emitting a harmonious melody that captures the essence of the original score. In simpler terms, transformers have an uncanny ability to consider the ‘big picture’ of a data input, embracing not just individual elements but their intricate interrelations. This sophisticated architecture is the engine behind many of today’s most advanced AI models, its unseen neurons and synapses working tirelessly to make sense of the words you read and type.
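The heart of the transformer is a calculation called attention, which lets every word in a passage look at every other word at once. The Python sketch below is a heavily simplified version of scaled dot-product self-attention. Real transformers first turn each word vector into separate query, key, and value vectors using learned weights, and they run many attention "heads" in parallel; here, as a simplifying assumption, the raw vectors play all three roles. The three two-number "word" vectors and the function names are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified self-attention: each output is a weighted blend of ALL inputs.

    Assumption: queries, keys, and values are the input vectors themselves
    (real transformers compute them with learned weight matrices).
    """
    d = len(vectors[0])
    outputs, attention = [], []
    for query in vectors:
        # How relevant is every word (key) to this word (query)?
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # Blend all words' vectors (values) according to those weights.
        blended = [sum(w * value[i] for w, value in zip(weights, vectors)) for i in range(d)]
        outputs.append(blended)
        attention.append(weights)
    return outputs, attention


words = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # hypothetical word vectors
outputs, attention = self_attention(words)
for row in attention:
    print([round(w, 2) for w in row])
```

Because the first and third words have identical vectors, they attend more strongly to each other than to the second word. No word’s result depends on finishing the previous word first, which is what lets transformers process a whole passage in parallel.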

Before we go on, I want to give you one more chance to absorb the details of each element of AI by looking inside the synthetic brain.

10 Key Elements That Make Up the Architecture, Function, and Creation of the AI Synthetic Brain

- Multi-Layered Architecture: At its core, the synthetic brain uses neural networks, which consist of interconnected nodes or “neurons.” These neurons are organized in layers (input, hidden, and output) to process data in a nonlinear manner. In this context, “nonlinear” means the network’s output is not simply proportional to its input: because each neuron passes its result through an activation function, stacking layers lets the network capture complex relationships that go beyond any simple straight-line rule connecting input to output.

- Deep Learning Paradigm: The term “deep” in deep learning refers to the multiple layers that neural networks possess. The greater the depth, the more complex the data patterns the AI can identify and understand.

- Think of the “deep” in deep learning as akin to multiple levels of a library. Each level, or layer, of a neural network sorts through information, much like each floor of a library houses different types of books. The more floors you have, the more topics you can explore, enabling the AI to understand intricate and nuanced details in the data it processes.

- Training and Inference: The two primary phases in the life cycle of AI are training and inference. During training, the synthetic brain learns from examples, typically labeled data (examples paired with the correct answer) or data that has been otherwise refined. Inference is the process of using what the model learned to make predictions about new information: the trained model is applied to a new data point, and its learned parameters determine the prediction.

- Think of training and inference in AI like teaching a dog new tricks and then watching it perform. During the “training” phase, the synthetic brain learns specific skills from examples, much like how a dog learns to sit or shake when shown how. “Inference” is like letting the dog out into the yard and watching it use those learned tricks in new situations—sitting when a guest arrives, for example. The AI uses what it learned during training to make decisions when faced with new, unknown scenarios.

- Backpropagation Algorithm: This is the backbone of training neural networks. Errors are calculated at the output and then propagated back through the network, and the weights on the connections between neurons are adjusted to improve the model’s accuracy.

- Think of the Backpropagation Algorithm as a coach reviewing game footage with a sports team. After each play, the coach identifies mistakes and what led to them, then “rewinds” back to tell each player how to adjust their actions for a better outcome next time. Similarly, in AI, this algorithm spots the errors at the output end, then works backward through the synthetic brain to fine-tune each ‘player’ or neuron, aiming for a more accurate performance in the next round.

- Activation Functions: These are mathematical functions that determine whether, and how strongly, a neuron passes its signal on to the next layer. Common activation functions include ReLU (Rectified Linear Unit) and Sigmoid. Imagine activation functions as talent scouts at an audition. They decide who gets to go on stage (be “activated”) based on how well the performer meets specific criteria. Just as scouts have different tastes, with some preferring comedy acts and others dramatic monologues, activation functions like ReLU and Sigmoid have their own ‘preferences’ for letting neurons pass along information.

- Transfer Learning: Synthetic brains often use previously trained models or parts of models to solve a new but related problem. This saves computational power and time. Think of transfer learning as a chef using a tried-and-true sauce recipe to spice up a completely new dish. The chef doesn’t have to start from scratch, making the cooking process quicker and less wasteful. Similarly, synthetic brains use pieces of pre-existing knowledge to solve new problems more efficiently.

- Data Augmentation: To improve performance and generalization (the ability to handle examples they have never seen), synthetic brains slightly modify their training data to create extra variations. For example, if the AI is learning to identify a cat, it might train on copies of the same cat photos that have been flipped, rotated, or shown in different lighting. Imagine a teacher using different props to teach the concept of a circle: a hula hoop, a pizza, a basketball. Each offers a slightly different perspective but still reinforces the core idea. In a similar fashion, synthetic brains use varied versions of the same data, like a cat seen from different angles, to better understand and recognize what they’re learning.

- Natural Language Processing (NLP): For AI to understand and generate human language, it employs NLP, a field that blends linguistics and computer science. This allows for chat functionalities, sentiment analysis, and language translation. Imagine NLP as the synthetic brain’s passport to the world of human conversation and emotion. It’s like giving the AI a toolbox filled with language skills, so it can do things like answer your questions in a chat, gauge the mood of a piece of writing, or switch between languages as easily as a seasoned traveler.

- Ethical and Security Guardrails: Synthetic brains are designed with certain ethical limits and are bound by protocols to ensure data privacy and safety. The systems that host them commonly use security features such as encryption and multi-factor authentication. Companies like OpenAI incorporate sophisticated keyword and context detection algorithms into synthetic brains such as ChatGPT to preemptively identify and block queries that could lead to the dissemination of harmful or illegal content, such as malicious hacking code or instructions for creating dangerous substances. These algorithms are part of a broader strategy that aims to make the AI safer and more aligned with human values.

- Interdisciplinary Creation: The development of a synthetic brain isn’t just the work of computer scientists. It involves multidisciplinary teams, including psychologists to understand human behavior, linguists for language processing, and ethicists to ponder moral implications. In this intricate dance of disciplines, it’s astonishing to witness how AI research becomes a crucible where psychology, neuroscience, ethics, and philosophy not only intersect but evolve, forging new paths of understanding in each field.

By understanding these foundational elements, one gains not just a basic literacy in AI technology but a deeper appreciation for the immense complexity and potential of the synthetic brain.