11

Chapter Outline

- Conceptual definitions (17 minute read)

- Operational definitions (36 minute read)

- Measurement quality (21 minute read)

- Ethical and social justice considerations (15 minute read)

Content warning: examples in this chapter contain references to ethnocentrism, toxic masculinity, racism in science, drug use, mental health and depression, psychiatric inpatient care, poverty and basic needs insecurity, pregnancy, and racism and sexism in the workplace and higher education.

11.1 Conceptual definitions

Learning Objectives

Learners will be able to…

- Define measurement and conceptualization

- Apply Kaplan’s three categories to determine the complexity of measuring a given variable

- Identify the role previous research and theory play in defining concepts

- Distinguish between unidimensional and multidimensional concepts

- Critically apply reification to how you conceptualize the key variables in your research project

In social science, when we use the term measurement, we mean the process by which we describe and ascribe meaning to the key facts, concepts, or other phenomena that we are investigating. At its core, measurement is about defining one’s terms in as clear and precise a way as possible. Of course, measurement in social science isn’t quite as simple as using a measuring cup or spoon, but there are some basic tenets on which most social scientists agree when it comes to measurement. We’ll explore those, as well as some of the ways that measurement might vary depending on your unique approach to the study of your topic.

An important point here is that measurement does not require any particular instruments or procedures. What it does require is a systematic procedure for assigning scores, meanings, and descriptions to individuals or objects so that those scores represent the characteristic of interest. You can measure phenomena in many different ways, but you must be sure that how you choose to measure gives you information and data that lets you answer your research question. If you’re looking for information about a person’s income, but your main points of measurement have to do with the money they have in the bank, you’re not really going to find the information you’re looking for!

The question of what social scientists measure can be answered by asking yourself what social scientists study. Think about the topics you’ve learned about in other classes you’ve taken or the topics you’ve considered investigating yourself. Let’s consider Melissa Milkie and Catharine Warner’s study (2011)[1] of first graders’ mental health. In order to conduct that study, Milkie and Warner needed to have some idea about how they were going to measure mental health. What does mental health mean, exactly? And how do we know when we’re observing someone whose mental health is good and when we see someone whose mental health is compromised? Understanding how measurement works in research methods helps us answer these sorts of questions.

As you might have guessed, social scientists will measure just about anything that they have an interest in investigating. For example, those who are interested in learning something about the correlation between social class and levels of happiness must develop some way to measure both social class and happiness. Those who wish to understand how well immigrants cope in their new locations must measure immigrant status and coping. Those who wish to understand how a person’s gender shapes their workplace experiences must measure gender and workplace experiences (and get more specific about which experiences are under examination). You get the idea. Social scientists can and do measure just about anything you can imagine observing or wanting to study. Of course, some things are easier to observe or measure than others.

Observing your variables

In 1964, philosopher Abraham Kaplan (1964)[2] wrote The Conduct of Inquiry, which has since become a classic work in research methodology (Babbie, 2010).[3] In his text, Kaplan describes different categories of things that behavioral scientists observe. One of those categories, which Kaplan called “observational terms,” is probably the simplest to measure in social science. Observational terms are the sorts of things that we can see with the naked eye simply by looking at them. Kaplan roughly defines them as conditions that are easy to identify and verify through direct observation. If, for example, we wanted to know how the conditions of playgrounds differ across different neighborhoods, we could directly observe the variety, amount, and condition of equipment at various playgrounds.

Indirect observables, on the other hand, are less straightforward to assess. In Kaplan’s framework, they are subtle, complex conditions that we must use existing knowledge and intuition to define. If we conducted a study for which we wished to know a person’s income, we’d probably have to ask them their income, perhaps in an interview or a survey. Thus, we have observed income, even if it has only been observed indirectly. Birthplace might be another indirect observable. We can ask study participants where they were born, but chances are good we won’t have directly observed any of those people being born in the locations they report.

Sometimes the measures that we are interested in are more complex and more abstract than observational terms or indirect observables. Think about some of the concepts you’ve learned about in other classes—for example, ethnocentrism. What is ethnocentrism? Well, from completing an earlier class you might know that it has something to do with the way a person judges another’s culture. But how would you measure it? Here’s another construct: bureaucracy. We know this term has something to do with organizations and how they operate but measuring such a construct is trickier than measuring something like a person’s income. The theoretical concepts of ethnocentrism and bureaucracy represent ideas whose meanings we have come to agree on. Though we may not be able to observe these abstractions directly, we can observe their components.

Kaplan referred to these more abstract things that behavioral scientists measure as constructs. Constructs are “not observational either directly or indirectly” (Kaplan, 1964, p. 55),[4] but they can be defined based on observables. For example, the construct of bureaucracy could be measured by counting the number of supervisors that need to approve teacher reimbursements of routine personal spending on their classrooms. The greater the number of administrators that must sign off on routine matters, the greater the degree of bureaucracy. Similarly, we might be able to ask a person the degree to which they trust people from different cultures around the world and then assess the ethnocentrism inherent in their answers. We can measure constructs like bureaucracy and ethnocentrism by defining them in terms of what we can observe.[5]
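To make the idea of defining a construct through observables concrete, here is a minimal Python sketch of the bureaucracy example. The school names and approver lists are invented for illustration; only the counting rule (more required approvers indicates more bureaucracy) comes from the example above.

```python
# Hypothetical data: who must approve a routine teacher reimbursement
# at each school. These names and lists are invented for illustration.
approvers_by_school = {
    "School A": ["principal"],
    "School B": ["department chair", "principal", "district finance officer"],
}


def bureaucracy_score(approvers):
    """Count the required approvers; a higher count indicates more bureaucracy."""
    return len(approvers)


# Score every school using the same observable indicator.
scores = {
    school: bureaucracy_score(approvers)
    for school, approvers in approvers_by_school.items()
}

# The school with the highest count is treated as the most bureaucratic.
most_bureaucratic = max(scores, key=scores.get)

print(scores)
print(most_bureaucratic)
```

A single count is a deliberately simple indicator. A fuller measure of bureaucracy would probably combine several observables, but the logic of defining a construct in terms of what we can observe stays the same.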

The idea of coming up with your own measurement tool might sound pretty intimidating at this point. The good news is that if you find something in the literature that works for you, you can use it (with proper attribution, of course). If there are only pieces of it that you like, you can reuse those pieces (with proper attribution and describing/justifying any changes). You don’t always have to start from scratch! Indeed, I would encourage you not to start from scratch.

Exercises

Look at the variables in your research question.

- Classify them as observational terms (direct observables), indirect observables, or constructs.

- Do you think measuring them will be easy or hard?

- What are your first thoughts about how to measure each variable? No wrong answers here, just write down a thought about each variable.

Measurement starts with conceptualization

In order to measure the concepts in your research question, we first have to understand what we think about them. As an aside, the word concept has come up quite a bit, and it is important to be sure we have a shared understanding of that term. A concept is the notion or image that we conjure up when we think of some cluster of related observations or ideas. For example, masculinity is a concept. What do you think of when you hear that word? Presumably, you imagine some set of behaviors and perhaps even a particular style of self-presentation. Of course, we can’t necessarily assume that everyone conjures up the same set of ideas or images when they hear the word masculinity. While there are many possible ways to define the term and some may be more common or have more support than others, there is no universal definition of masculinity. What counts as masculine may shift over time, from culture to culture, and even from individual to individual (Kimmel, 2008). This is why defining our concepts is so important.

Not all researchers clearly explain their theoretical or conceptual framework for their study, but they should! Without understanding how a researcher has defined their key concepts, it would be nearly impossible to understand the meaning of that researcher’s findings and conclusions. Back in Chapter 7, you developed a theoretical framework for your study based on a survey of the theoretical literature in your topic area. If you haven’t done that yet, consider flipping back to that section to familiarize yourself with some of the techniques for finding and using theories relevant to your research question. Continuing with our example on masculinity, we would need to survey the literature on theories of masculinity. After a few queries on masculinity, I found a wonderful article by Wong (2010)[6] that reviewed eight years of the journal Psychology of Men & Masculinity and analyzed how often different theories of masculinity were used. Not only can I get a sense of which theories are more accepted and which are more marginal in the social science on masculinity, I am able to identify a range of options from which I can find the theory or theories that will inform my project.

Exercises

Identify a specific theory (or more than one theory) and how it helps you understand…

- Your independent variable(s).

- Your dependent variable(s).

- The relationship between your independent and dependent variables.

Rather than completing this exercise from scratch, build from your theoretical or conceptual framework developed in previous chapters.

In quantitative methods, conceptualization involves writing out clear, concise definitions for our key concepts. These are the kind of definitions you are used to, like the ones in a dictionary. A conceptual definition involves defining a concept in terms of other concepts, usually by making reference to how other social scientists and theorists have defined those concepts in the past. Of course, new conceptual definitions are created all the time because our conceptual understanding of the world is always evolving.

Conceptualization is deceptively challenging—spelling out exactly what the concepts in your research question mean to you. Following along with our example, think about what comes to mind when you read the term masculinity. How do you know masculinity when you see it? Does it have something to do with men or with social norms? If so, perhaps we could define masculinity as the social norms that men are expected to follow. That seems like a reasonable start, and at this early stage of conceptualization, brainstorming about the images conjured up by concepts and playing around with possible definitions is appropriate. Doing so can also be used as a tool to explore your own personal biases and assumptions–something that can help you limit their influence on your work in ways that could corrupt your findings down the line. However, this reflective engagement is just the first step. At this point, you should be moving beyond brainstorming for your key variables because you have read a good amount of research about them.

In addition, we should consult previous research and theory to understand the definitions that other scholars have already given for the concepts we are interested in. This doesn’t mean we must use their definitions, but understanding how concepts have been defined in the past will help us to compare our conceptualizations with how other scholars define and relate concepts. Understanding prior definitions of our key concepts will also help us decide whether we plan to challenge those conceptualizations or rely on them for our own work. Finally, working on conceptualization is likely to help in the process of refining your research question to one that is specific and clear in what it asks. Conceptualization and operationalization (next section) are where “the rubber meets the road,” so to speak, and you have to specify what you mean by the question you are asking. As your conceptualization deepens, you will often find that your research question becomes more specific and clear.

If we turn to the literature on masculinity, we will surely come across work by Michael Kimmel, one of the preeminent masculinity scholars in the United States. After consulting Kimmel’s prior work (2000; 2008),[7] we might tweak our initial definition of masculinity. Rather than defining masculinity as “the social norms that men are expected to follow,” perhaps instead we’ll define it as “the social roles, behaviors, and meanings prescribed for men in any given society at any one time” (Kimmel & Aronson, 2004, p. 503).[8] Our revised definition is more precise and complex because it goes beyond addressing one aspect of men’s lives (norms), and addresses three aspects: roles, behaviors, and meanings. It also implies that roles, behaviors, and meanings may vary across societies and over time. Using definitions developed by theorists and scholars is a good idea, though you may find that you want to define things your own way.

As you can see, conceptualization isn’t as simple as applying any random definition that we come up with to a term. For example, note the difference between the research-based definition of masculinity and a basic dictionary definition: “the quality or nature of the male sex: the quality, state, or degree of being masculine or manly.”

Defining our terms may involve some brainstorming at the very beginning. But conceptualization must go beyond that, to engage with or critique existing definitions and conceptualizations in the literature. Once we’ve brainstormed about the images associated with a particular word, we should also consult prior work to understand how others define the term in question. After we’ve identified a clear definition that we’re happy with, we should make sure that every term used in our definition will make sense to others. Are there terms used within our definition that also need to be defined? If so, our conceptualization is not yet complete. Our definition includes the concept of “social roles,” so we should have a definition for what those mean and become familiar with role theory to help us with our conceptualization. If we don’t know what roles are, how can we study them?

Let’s say we do all of that. We have a clear definition of the term masculinity with reference to previous literature and we also have a good understanding of the terms in our conceptual definition…then we’re done, right? Not so fast. You’ve likely met more than one man in your life, and you’ve probably noticed that they are not the same, even if they live in the same society during the same historical time period. This could mean there are dimensions of masculinity. In terms of social scientific measurement, concepts can be said to have multiple dimensions when there are multiple elements that make up a single concept. With respect to the term masculinity, dimensions could be based on gender identity, gender performance, sexual orientation, etc. In any of these cases, the concept of masculinity would be considered to have multiple dimensions.

While you do not need to spell out every possible dimension of the concepts you wish to measure, it is important to identify whether your concepts are unidimensional (and therefore relatively easy to define and measure) or multidimensional (and therefore require multi-part definitions and measures). In this way, how you conceptualize your variables determines how you will measure them in your study. Unidimensional concepts are those that are expected to have a single underlying dimension. These concepts can be measured using a single measure or test. Examples include simple concepts such as a person’s weight, time spent sleeping, and so forth.

One frustrating thing is that there is no clear demarcation between concepts that are inherently unidimensional or multidimensional. Even something as simple as age could be broken down into multiple dimensions including mental age and chronological age, so where does conceptualization stop? How far down the dimensional rabbit hole do we have to go? Researchers should consider two things. First, how important is this variable in your study? If age is not important in your study (maybe it is a control variable), it seems like a waste of time to do a lot of work drawing from developmental theory to conceptualize this variable. A unidimensional measure from zero to dead is all the detail we need. On the other hand, if we were measuring the impact of age on masculinity, conceptualizing our independent variable (age) as multidimensional may provide a richer understanding of its impact on masculinity. Second, your conceptualization will lead directly to your operationalization of the variable, and once your operationalization is complete, make sure someone reading your study could follow how your conceptual definitions informed the measures you chose for your variables.

Exercises

Write a conceptual definition for your independent and dependent variables.

- Cite and attribute definitions to other scholars, if you use their words.

- Describe how your definitions are informed by your theoretical framework.

- Place your definition in conversation with other theories and conceptual definitions commonly used in the literature.

- Are there multiple dimensions of your variables?

- Are any of these dimensions important for you to measure?

Do researchers actually know what we’re talking about?

Conceptualization proceeds differently in qualitative research compared to quantitative research. Since qualitative researchers are interested in the understandings and experiences of their participants, it is less important for them to find one fixed definition for a concept before starting to interview or interact with participants. The researcher’s job is to accurately and completely represent how their participants understand a concept, not to test their own definition of that concept.

If you were conducting qualitative research on masculinity, you would likely consult previous literature like Kimmel’s work mentioned above. From your literature review, you may come up with a working definition for the terms you plan to use in your study, which can change over the course of the investigation. However, the definition that matters is the definition that your participants share during data collection. A working definition is merely a place to start, and researchers should take care not to think it is the only or best definition out there.

In qualitative inquiry, your participants are the experts on the concepts that arise during the study. Your job as the researcher is to accurately and reliably collect and interpret their understanding of the concepts they describe while answering your questions. Your conceptualization is likely to change over the course of qualitative inquiry, as you learn more information from your participants. Indeed, getting participants to comment on, extend, or challenge the definitions and understandings of other participants is a hallmark of qualitative research. This is the opposite of quantitative research, in which definitions must be completely set in stone before the inquiry can begin.

The contrast between qualitative and quantitative conceptualization is instructive for understanding how quantitative methods (and positivist research in general) privilege the knowledge of the researcher over the knowledge of study participants and community members. Positivism holds that the researcher is the “expert,” and can define concepts based on their expert knowledge of the scientific literature. This knowledge is in contrast to the lived experience that participants possess from experiencing the topic under examination day-in, day-out. For this reason, it would be wise to remind ourselves not to take our definitions too seriously and be critical about the limitations of our knowledge.

Conceptualization must be open to revisions, even radical revisions, as scientific knowledge progresses. While I’ve suggested consulting prior scholarly definitions of our concepts, we should not assume that prior, scholarly definitions are more real than the definitions we create. Likewise, we should not think that our own made-up definitions are any more real than any other definition. It would also be wrong to assume that just because definitions exist for some concept that the concept itself exists beyond some abstract idea in our heads. Building on the paradigmatic ideas behind interpretivism and the critical paradigm, researchers call the assumption that our abstract concepts exist in some concrete, tangible way reification. Thinking critically about reification means exploring the power dynamics behind how we can create reality by how we define it.

Let’s return to our example of masculinity. Think about how our notions of masculinity have developed over the past few decades, and how different and yet so similar they are to patriarchal definitions throughout history. Conceptual definitions become more or less popular based on the power arrangements inside of social science and the broader world. Western knowledge systems are privileged, while others are viewed as unscientific and marginal. The historical domination of social science by white men from WEIRD (Western, educated, industrialized, rich, and democratic) countries meant that definitions of masculinity were imbued with their cultural biases and were designed explicitly and implicitly to preserve their power. This has inspired movements for cognitive justice as we seek to use social science to achieve global development.

Key Takeaways

- Measurement is the process by which we describe and ascribe meaning to the key facts, concepts, or other phenomena that we are investigating.

- Kaplan identified three categories of things that social scientists measure: observational terms, indirect observables, and constructs.

- Some concepts have multiple elements or dimensions.

- Researchers often use measures previously developed and studied by other researchers.

- Conceptualization is a process that involves coming up with clear, concise definitions.

- Conceptual definitions are based on the theoretical framework you are using for your study (and the paradigmatic assumptions underlying those theories).

- Whether your conceptual definitions come from your own ideas or the literature, you should be able to situate them in terms of other commonly used conceptual definitions.

- Researchers should acknowledge the limited explanatory power of their definitions for concepts and how oppression can shape what explanations are considered true or scientific.

Exercises

Think historically about the variables in your research question.

- How has our conceptual definition of your topic changed over time?

- What scholars or social forces were responsible for this change?

Take a critical look at your conceptual definitions.

11.2 Operational definitions

Learning Objectives

Learners will be able to…

- Define and give an example of indicators and attributes for a variable

- Apply the three components of an operational definition to a variable

- Distinguish between levels of measurement for a variable and how those differences relate to measurement

- Describe the purpose of composite measures like scales and indices

Conceptual definitions are like dictionary definitions. They tell you what a concept means by defining it using other concepts. In this section we will move from the abstract realm (theory) to the real world (measurement). Operationalization is the process by which researchers spell out precisely how a concept will be measured in their study. It involves identifying the specific research procedures we will use to gather data about our concepts. If conceptually defining your terms means looking at theory, how do you operationally define your terms? By looking for indicators of when your variable is present or not, more or less intense, and so forth. Operationalization is probably the most challenging part of quantitative research, but once it’s done, the design and implementation of your study will be straightforward.

Indicators

Operationalization works by identifying specific indicators that will be taken to represent the ideas we are interested in studying. If we are interested in studying masculinity, then the indicators for that concept might include some of the social roles prescribed to men in society such as breadwinning or fatherhood. Being a breadwinner or a father might therefore be considered indicators of a person’s masculinity. The extent to which a man fulfills either, or both, of these roles might be understood as clues (or indicators) about the extent to which he is viewed as masculine.

Let’s look at another example of indicators. Each day, Gallup researchers poll 1,000 randomly selected Americans to ask them about their well-being. To measure well-being, Gallup asks these people to respond to questions covering six broad areas: physical health, emotional health, work environment, life evaluation, healthy behaviors, and access to basic necessities. Gallup uses these six factors as indicators of the concept that they are really interested in, which is well-being.

Identifying indicators can be even simpler than the examples described thus far. Political party affiliation is another relatively easy concept for which to identify indicators. If you asked a person what party they voted for in the last national election (or gained access to their voting records), you would get a good indication of their party affiliation. Of course, some voters split tickets between multiple parties when they vote and others swing from party to party each election, so our indicator is not perfect. Indeed, if our study were about political identity as a key concept, operationalizing it solely in terms of who they voted for in the previous election leaves out a lot of information about identity that is relevant to that concept. Nevertheless, it’s a pretty good indicator of political party affiliation.

Choosing indicators is not an arbitrary process. As described earlier, utilizing prior theoretical and empirical work in your area of interest is a great way to identify indicators in a scholarly manner. And your conceptual definitions will point you in the direction of relevant indicators. Empirical work will give you some very specific examples of how the important concepts in an area have been measured in the past and what sorts of indicators have been used. Often, it makes sense to use the same indicators as previous researchers; however, you may find that some previous measures have potential weaknesses that your own study will improve upon.

All of the examples in this chapter have dealt with questions you might ask a research participant on a survey or in a quantitative interview. If you plan to collect data from other sources, such as through direct observation or the analysis of available records, think practically about what the design of your study might look like and how you can collect data on various indicators feasibly. If your study asks about whether the participant regularly changes the oil in their car, you will likely not observe them directly doing so. Instead, you will likely need to rely on a survey question that asks them the frequency with which they change their oil or ask to see their car maintenance records.

Exercises

- What indicators are commonly used to measure the variables in your research question?

- How can you feasibly collect data on these indicators?

- Are you planning to collect your own data using a questionnaire or interview? Or are you planning to analyze available data like client files or raw data shared from another researcher’s project?

Remember, you need raw data. Your research project cannot rely solely on the results reported by other researchers or the arguments you read in the literature. A literature review is only the first part of a research project, and your review of the literature should inform the indicators you end up choosing when you measure the variables in your research question.

Unlike conceptual definitions, which contain other concepts, an operational definition consists of the following components: (1) the variable being measured and its attributes, (2) the measure you will use, and (3) how you plan to interpret the data collected from that measure to draw conclusions about the variable you are measuring.
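One way to keep the three components in view is to write them down as a simple record. The Python sketch below is only an illustration: the class name, field names, and the attribute list for party affiliation are assumptions made for this example, not a standard format.

```python
from dataclasses import dataclass


@dataclass
class OperationalDefinition:
    """Hypothetical record holding the three components of an operational definition."""
    variable: str          # component 1: the variable being measured
    attributes: list       # component 1: the attributes of that variable
    measure: str           # component 2: the measure used to gather data
    interpretation: str    # component 3: how the data will be interpreted


def is_complete(definition):
    """Return True only if every component has been filled in."""
    return all([
        definition.variable,
        definition.attributes,
        definition.measure,
        definition.interpretation,
    ])


# Illustrative example based on the party affiliation indicator discussed earlier.
# The attribute labels are placeholders, not real survey categories.
party_affiliation = OperationalDefinition(
    variable="political party affiliation",
    attributes=["Party A", "Party B", "other party", "did not vote"],
    measure="Survey question: which party did you vote for in the last national election?",
    interpretation="The party a respondent reports voting for is treated as their affiliation.",
)

print(is_complete(party_affiliation))
```

Leaving any field empty makes `is_complete` return `False`, which mirrors the point that an operational definition is not finished until all three components are specified.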

Step 1: Specifying variables and attributes

The first component, the variable, should be the easiest part. At this point in quantitative research, you should have a research question that has at least one independent and at least one dependent variable. Remember that variables must be able to vary. For example, the United States is not a variable. Country of residence is a variable, as is patriotism. Similarly, if your sample only includes men, gender is a constant in your study, not a variable. A constant is a characteristic that does not change in your study.

When social scientists measure concepts, they sometimes use the language of variables and attributes. A variable refers to a quality or quantity that varies across people or situations. Attributes are the characteristics that make up a variable. For example, the variable hair color would contain attributes like blonde, brown, black, red, gray, etc. A variable’s attributes determine its level of measurement. There are four possible levels of measurement: nominal, ordinal, interval, and ratio. The first two levels of measurement are categorical, meaning their attributes are categories rather than numbers. The latter two levels of measurement are continuous, meaning their attributes are numbers.

Levels of measurement

Hair color is an example of a nominal level of measurement. Nominal measures are categorical, and those categories cannot be mathematically ranked. As a brown-haired person (with some gray), I can’t say for sure that brown-haired people are better than blonde-haired people. As with all nominal levels of measurement, there is no ranking order between hair colors; they are simply different. That is what constitutes a nominal level–gender and race are also measured at the nominal level.

What attributes are contained in the variable hair color? While blonde, brown, black, and red are common colors, some people may not fit into these categories if we only list these attributes. My wife, who currently has purple hair, wouldn’t fit anywhere. This means that our attributes were not exhaustive. Exhaustiveness means that all possible attributes are listed. We may have to list a lot of colors before we can meet the criteria of exhaustiveness. Clearly, there is a point at which exhaustiveness has been reasonably met. If a person insists that their hair color is light burnt sienna, it is not your responsibility to list that as an option. Rather, that person would reasonably be described as brown-haired. Perhaps listing a category for other color would suffice to make our list of colors exhaustive.

What about a person who has multiple hair colors at the same time, such as red and black? They would fall into multiple attributes. This violates the rule of mutual exclusivity, in which a person cannot fall into two different attributes. Instead of listing all of the possible combinations of colors, perhaps you might include a multi-color attribute to describe people with more than one hair color.
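The two rules can be sketched as a small classification function in Python. This is a minimal illustration, assuming each respondent reports at least one hair color as a list of strings; the function name and the exact attribute labels are choices made for this example.

```python
# Attribute list for the variable "hair color". The last two entries are the
# catch-all categories suggested in the text.
ATTRIBUTES = ["blonde", "brown", "black", "red", "gray", "other color", "multi-color"]


def classify_hair_color(reported_colors):
    """Assign a respondent to exactly one attribute.

    reported_colors: list of color names the respondent reports
    (assumed to contain at least one entry).
    """
    # Mutual exclusivity: anyone with more than one color gets a single
    # "multi-color" attribute instead of falling into several attributes.
    if len(reported_colors) > 1:
        return "multi-color"

    color = reported_colors[0]

    # Exhaustiveness: any color not on the list still has a place to go.
    if color in ATTRIBUTES:
        return color
    return "other color"


print(classify_hair_color(["brown"]))
print(classify_hair_color(["purple"]))
print(classify_hair_color(["red", "black"]))
```

Because every respondent ends up in one and only one attribute, the list is both exhaustive and mutually exclusive for the purposes of this example.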

Making sure researchers provide mutually exclusive and exhaustive attributes is about making sure all people are represented in the data record. For many years, the attributes for gender were only male or female. Now, our understanding of gender has evolved to encompass more attributes that better reflect the diversity in the world. Children of parents from different races were often classified as one race or another, even if they identified with both cultures. The option for bi-racial or multi-racial on a survey not only more accurately reflects the racial diversity in the real world but validates and acknowledges people who identify in that manner. If we did not measure race in this way, we would leave empty the data record for people who identify as biracial or multiracial, impairing our search for truth.

Unlike nominal-level measures, attributes at the ordinal level can be rank ordered. For example, someone’s degree of satisfaction in their romantic relationship can be ordered by rank. That is, you could say you are not at all satisfied, a little satisfied, moderately satisfied, or highly satisfied. Note that even though these have a rank order to them (not at all satisfied is certainly worse than highly satisfied), we cannot calculate a mathematical distance between those attributes. We can simply say that one attribute of an ordinal-level variable is more or less than another attribute.

This can get a little confusing when using rating scales. If you have ever taken a customer satisfaction survey or completed a course evaluation for school, you are familiar with rating scales. “On a scale of 1-5, with 1 being the lowest and 5 being the highest, how likely are you to recommend our company to other people?” That surely sounds familiar. Rating scales use numbers, but only as a shorthand, to indicate what attribute (highly likely, somewhat likely, etc.) the person feels describes them best. You wouldn’t say you are “2” likely to recommend the company, but you would say you are not very likely to recommend the company. Ordinal-level attributes must also be exhaustive and mutually exclusive, as with nominal-level variables.

At the interval level, attributes must also be exhaustive and mutually exclusive and there is equal distance between attributes. Interval measures are also continuous, meaning their attributes are numbers, rather than categories. IQ scores are interval level, as are temperatures in Fahrenheit and Celsius. Their defining characteristic is that we can say how much more or less one attribute differs from another. We cannot, however, say with certainty what the ratio of one attribute is in comparison to another. For example, it would not make sense to say that a person with an IQ score of 140 has twice the IQ of a person with a score of 70, or that 20 degrees is twice as hot as 10 degrees. However, the difference between IQ scores of 80 and 100 is the same as the difference between IQ scores of 120 and 140 (and the difference between a temperature of 20 and 10 is the same as the difference between 35 and 25).
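A short calculation may make the point about ratios concrete. The sketch below converts the same two temperatures from Celsius to Fahrenheit using the standard conversion formula; the function name `c_to_f` is just a label chosen for this example.

```python
def c_to_f(celsius):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32

# The "ratio" of the same two temperatures depends on the unit chosen,
# because neither scale has a true zero point.
ratio_in_celsius = 20 / 10
ratio_in_fahrenheit = c_to_f(20) / c_to_f(10)

# Differences, however, stay consistent within a scale.
diff_low = c_to_f(20) - c_to_f(10)
diff_high = c_to_f(35) - c_to_f(25)

print(ratio_in_celsius, ratio_in_fahrenheit)
print(diff_low, diff_high)
```

Because the ratio changes when the unit changes, "twice as hot" is not a meaningful statement at the interval level, while "10 degrees warmer" is.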

While we cannot say that someone with an IQ of 140 is twice as intelligent as someone with an IQ of 70 because IQ is measured at the interval level, we can say that someone with six siblings has twice as many as someone with three because number of siblings is measured at the ratio level. Finally, at the ratio level, attributes are mutually exclusive and exhaustive, attributes can be rank ordered, the distance between attributes is equal, and attributes have a true zero point. Thus, with these variables, we can say what the ratio of one attribute is in comparison to another. Examples of ratio-level variables include age and years of education. We know that a person who is 12 years old is twice as old as someone who is 6 years old. Height measured in meters and weight measured in kilograms are good examples. So are counts of discrete objects or events such as the number of siblings one has or the number of questions a student answers correctly on an exam. The differences between each level of measurement are visualized in Table 11.1.

| | Nominal | Ordinal | Interval | Ratio |
|---|---|---|---|---|
| Exhaustive | X | X | X | X |
| Mutually exclusive | X | X | X | X |
| Rank-ordered | | X | X | X |
| Equal distance between attributes | | | X | X |
| True zero point | | | | X |

Levels of measurement=levels of specificity

We have spent time learning how to determine our data’s level of measurement. Now what? How could we use this information to help us as we measure concepts and develop measurement tools? First, the types of statistical tests that we are able to use generally depend on our data’s level of measurement. With nominal-level measurement, for example, the only available measure of central tendency is the mode. With ordinal-level measurement, the median or mode can be used as indicators of central tendency[9]. Interval and ratio-level measurement are typically considered the most desirable because they permit any indicator of central tendency to be computed (i.e., mean, median, or mode). Also, ratio-level measurement is the only level that allows meaningful statements about ratios of scores. The higher the level of measurement, the more complex the statistical tests we are able to conduct. This knowledge may help us decide what kind of data we need to gather, and how.
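To see how the level of measurement limits the available statistics, consider the sketch below. It uses Python's built-in `statistics` module; the three small datasets are made up for illustration, and the 1-to-4 coding of satisfaction is an assumption.

```python
from statistics import mean, median, mode

# Made-up responses from five participants.
hair_color = ["brown", "blonde", "brown", "black", "red"]  # nominal
satisfaction = [1, 2, 2, 3, 4]  # ordinal: 1 = not at all ... 4 = highly satisfied
siblings = [0, 1, 2, 2, 5]      # ratio

nominal_center = mode(hair_color)      # only the mode is meaningful
ordinal_center = median(satisfaction)  # the median (or mode) is meaningful
ratio_center = mean(siblings)          # mean, median, and mode are all meaningful

print(nominal_center, ordinal_center, ratio_center)
```

Nothing stops software from averaging the satisfaction codes, but the result would treat the gaps between categories as equal, which an ordinal measure does not guarantee.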

That said, we have to balance this knowledge with the understanding that sometimes, collecting data at a higher level of measurement could negatively impact our studies. For instance, sometimes providing answers in ranges may make prospective participants feel more comfortable responding to sensitive items. Imagine that you were interested in collecting information on topics such as income, number of sexual partners, number of times someone used illicit drugs, etc. You would have to think about the sensitivity of these items and determine if it would make more sense to collect some data at a lower level of measurement (e.g., asking if they are sexually active or not (nominal) versus their total number of sexual partners (ratio)).

Finally, sometimes when analyzing data, researchers find a need to change a variable’s level of measurement. For example, a few years ago, a student was interested in studying the relationship between mental health and life satisfaction. This student used a variety of measures. One item asked about the number of mental health symptoms, reported as the actual number. When analyzing data, my student examined the mental health symptom variable and noticed that she had two groups, those with no symptoms or one symptom and those with many symptoms. Instead of using the ratio-level data (actual number of mental health symptoms), she collapsed her cases into two categories, few and many. She decided to use this variable in her analyses. It is important to note that you can move data from a higher level of measurement to a lower level; however, you cannot move data from a lower level to a higher level, because detail that was never collected cannot be recovered.
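Collapsing a variable in this way is a simple recode. The sketch below is a hypothetical reconstruction of the idea, not the student's actual analysis: the data are invented, and the cutoff of one symptom is an assumption based on the description above.

```python
def collapse_symptoms(count, cutoff=1):
    """Recode a symptom count (ratio level) into 'few' or 'many'."""
    return "few" if count <= cutoff else "many"

symptom_counts = [0, 1, 7, 9, 0, 8]  # invented data
groups = [collapse_symptoms(c) for c in symptom_counts]

print(groups)
```

The recode only works in one direction. Given the label "many" alone, there is no way to tell whether the participant reported seven symptoms or nine.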

Exercises

- Check that the variables in your research question can vary…and that they are not constants or one of many potential attributes of a variable.

- Think about the attributes your variables have. Are they categorical or continuous? What level of measurement seems most appropriate?

Step 2: Specifying measures for each variable

Let’s pick a research question and walk through the process of operationalizing variables to see how specific we need to get. I’m going to hypothesize that students in a class who are unmotivated are less likely to be satisfied with instruction. Remember, this would be a direct relationship: as motivation decreases, satisfaction decreases. In this question, motivation is my independent variable (the cause) and satisfaction with instruction is my dependent variable (the effect). Now that we have identified our variables, their attributes, and levels of measurement, we move on to the second component: the measure itself.

So, how would you measure my key variables: motivation and satisfaction? What indicators would you look for? Some students might say that motivation could be measured by observing a participant’s body language. They may also say that a motivated person will often express feelings of engagement or energy. In addition, a satisfied person might be happy around instructors and often express gratitude. While these factors may indicate that the variables are present, they lack coherence. Unfortunately, what this “measure” is actually saying is that “I know motivation and satisfaction when I see them.” While you are likely a decent judge of motivation and satisfaction, you need to provide more information in a research study for how you plan to measure your variables. Your judgment is subjective, based on your own idiosyncratic experiences with motivation and satisfaction. Such judgments couldn’t be replicated by another researcher, and they can’t be made consistently for a large group of people. Operationalization requires that you come up with a specific and rigorous measure for seeing who is motivated or satisfied.

Finding a good measure for your variable depends on the kind of variable it is. Variables that are directly observable don’t come up very often in my students’ classroom projects, but they might include things like taking someone’s blood pressure, marking attendance or participation in a group, and so forth. To measure an indirectly observable variable like age, you would probably put a question on a survey that asked, “How old are you?” Measuring a variable like income might require some more thought, though. Are you interested in this person’s individual income or the income of their family unit? This might matter if your participant does not work or is dependent on other family members for income. Do you count income from social welfare programs? Are you interested in their income per month or per year? Even though indirect observables are relatively easy to measure, the measures you use must be clear in what they are asking, and operationalization is all about figuring out the specifics of what you want to know. For more complicated constructs, you will need compound measures (that use multiple indicators to measure a single variable).

How you plan to collect your data also influences how you will measure your variables. For researchers using secondary data like student records as a data source, you are limited by what information is in the data sources you can access. If your organization uses a given measurement for a learning outcome, that is the one you will use in your study. One of the benefits of collecting your own data is being able to select the measures you feel best exemplify your understanding of the topic.

Measuring unidimensional concepts

The previous section mentioned two important considerations: how complicated the variable is and how you plan to collect your data. With these in hand, we can use the level of measurement to further specify how you will measure your variables and consider specialized rating scales developed by social science researchers.

Measurement at each level

Nominal measures assess categorical variables. These measures are used for variables or indicators that have mutually exclusive attributes, but that cannot be rank-ordered. Nominal measures ask about the variable and provide names or labels for different attribute values like social work, counseling, and nursing for the variable profession. Nominal measures are relatively straightforward.

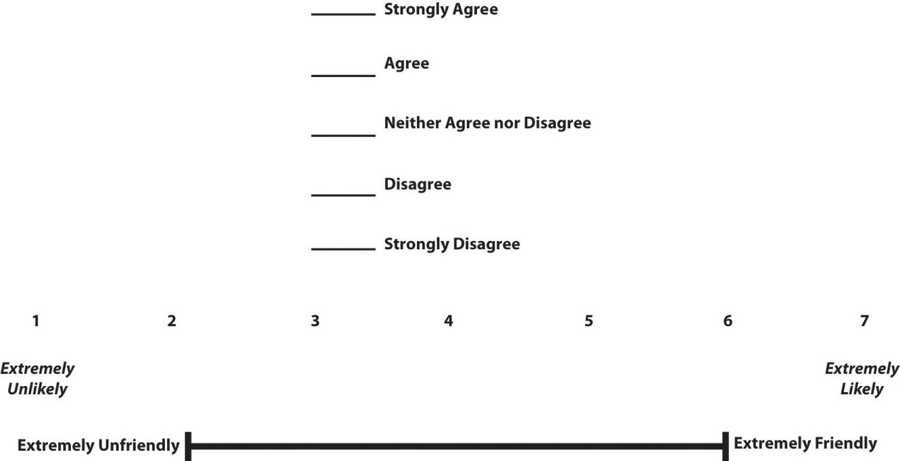

Ordinal measures often use a rating scale, an ordered set of responses that participants must choose from. Figure 11.1 shows several examples. The number of response options on a typical rating scale is usually five or seven, though it can range from three to 11. Five-point scales are best for unipolar scales where only one construct is tested, such as frequency (Never, Rarely, Sometimes, Often, Always). Seven-point scales are best for bipolar scales where there is a dichotomous spectrum, such as liking (Like very much, Like somewhat, Like slightly, Neither like nor dislike, Dislike slightly, Dislike somewhat, Dislike very much). Sometimes you want people to be forced to choose one way or another, so you might use a forced-choice scale with an even number of options (4, 6, or 8) that doesn’t offer a mid-point option. For bipolar questions, it is useful to offer an earlier question that branches respondents into an area of the scale; if asking about liking ice cream, first ask “Do you generally like or dislike ice cream?” Once the respondent chooses like or dislike, refine it by offering them relevant choices from the seven-point scale. Branching improves both reliability and validity (Krosnick & Berent, 1993).[10] Although you often see scales with numerical labels, it is best to only present verbal labels to the respondents but convert them to numerical values in the analyses. Avoid partial labels and lengthy or overly specific labels. In some cases, the verbal labels can be supplemented with (or even replaced by) meaningful graphics. The last rating scale shown in Figure 11.1 is a visual-analog scale, on which participants make a mark somewhere along the horizontal line to indicate the magnitude of their response.

Interval measures are those where the values measured are not only rank-ordered, but are also equidistant from adjacent attributes. An example is the temperature scale (in Fahrenheit or Celsius), where the difference between 30 and 40 degrees Fahrenheit is the same as that between 80 and 90 degrees Fahrenheit. Likewise, if you have a scale that asks respondents’ annual income using the following attributes (ranges): $0 to 10,000, $10,000 to 20,000, $20,000 to 30,000, and so forth, this is also an interval measure, because the midpoints of the ranges (i.e., $5,000, $15,000, $25,000, etc.) are equidistant from each other. The intelligence quotient (IQ) scale is also an interval measure, because the measure is designed such that the difference between IQ scores 100 and 110 is supposed to be the same as between 110 and 120 (although in all honesty, we do not really know whether that is truly the case). Interval measures allow us to examine “how much more” one attribute is when compared to another, which is not possible with nominal or ordinal measures. You may find researchers who argue that ordinal rating scales are actually interval measures so that we can use different statistical techniques for analyzing them. As we will discuss in the latter part of the chapter, this is debatable because there is no way to know whether the difference between a 3 and a 4 on a rating scale is the same as the difference between a 2 and a 3. Those numbers are just placeholders for categories.

Ratio measures are those that have all the qualities of nominal, ordinal, and interval scales, and in addition, also have a “true zero” point (where the value zero implies lack or non-availability of the underlying construct). Think about how to measure the number of people working in human resources at a social work agency. It could be one, several, or none (if the company contracts out for those services). Measuring interval and ratio data is relatively easy, as people either select or input a number for their answer. If you ask a person how many eggs they purchased last week, they can simply tell you they purchased a dozen eggs at the store, two at breakfast on Wednesday, or none at all.

Commonly used rating scales in questionnaires

The level of measurement will give you the basic information you need, but social scientists have developed specialized instruments for use in questionnaires, a common tool used in quantitative research. Although Likert scale is a term colloquially used to refer to almost any rating scale (e.g., a 0-to-10 life satisfaction scale), it has a much more precise meaning. In the 1930s, researcher Rensis Likert (pronounced LICK-ert) created a new approach for measuring people’s attitudes (Likert, 1932).[11] It involves presenting people with several statements—including both favorable and unfavorable statements—about some person, group, or idea. Respondents then express their agreement or disagreement with each statement on a 5-point scale: Strongly Agree, Agree, Neither Agree nor Disagree, Disagree, Strongly Disagree. Numbers are assigned to each response and then summed across all items to produce a score representing the attitude toward the person, group, or idea. For items that are phrased in an opposite direction (e.g., negatively worded statements instead of positively worded statements), reverse coding is used so that the numerical scoring of statements also runs in the opposite direction. The entire set of items came to be called a Likert scale, as indicated in Table 11.2 below.

Unless you are measuring people’s attitude toward something by assessing their level of agreement with several statements about it, it is best to avoid calling it a Likert scale. You are probably just using a rating scale. Likert scales allow for more granularity (more finely tuned response) than yes/no items, including whether respondents are neutral to the statement. Below is an example of how we might use a Likert scale to assess your attitudes about research as you work your way through this textbook.

| | Strongly agree | Agree | Neutral | Disagree | Strongly disagree |
|---|---|---|---|---|---|
| I like research more now than when I started reading this book. | | | | | |
| This textbook is easy to use. | | | | | |
| I feel confident about how well I understand levels of measurement. | | | | | |
| This textbook is helping me plan my research proposal. | | | | | |
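Scoring a Likert scale like this one comes down to assigning numbers, reverse coding, and summing. The sketch below makes several assumptions that are not part of the scale above: the 5-to-1 numeric coding, the short item keys, and a fifth, negatively worded item ("Research is boring") added only to demonstrate reverse coding.

```python
# Assumed numeric coding for the five response options.
SCORES = {
    "Strongly agree": 5,
    "Agree": 4,
    "Neutral": 3,
    "Disagree": 2,
    "Strongly disagree": 1,
}

def likert_score(responses, reverse_items=()):
    """Sum item scores, flipping items worded in the opposite direction."""
    total = 0
    for item, answer in responses.items():
        value = SCORES[answer]
        if item in reverse_items:
            value = 6 - value  # on a 5-point scale, 5 becomes 1, 4 becomes 2, ...
        total += value
    return total

# One hypothetical respondent.
responses = {
    "like_research_more": "Agree",
    "easy_to_use": "Strongly agree",
    "confident_levels": "Neutral",
    "helps_plan_proposal": "Agree",
    "research_is_boring": "Disagree",  # hypothetical negatively worded item
}

total = likert_score(responses, reverse_items={"research_is_boring"})
print(total)
```

With reverse coding applied, a higher total consistently means a more favorable attitude, no matter how an individual statement was worded.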

Semantic differential scales are composite (multi-item) scales in which respondents are asked to indicate their opinions or feelings toward a single statement using different pairs of adjectives framed as polar opposites. Whereas in the above Likert scale, the participant is asked how much they agree or disagree with a statement, in a semantic differential scale the participant is asked to indicate how they feel about a specific item. This makes the semantic differential scale an excellent technique for measuring people’s attitudes or feelings toward objects, events, or behaviors. Table 11.3 is an example of a semantic differential scale that was created to assess participants’ feelings about this textbook.

1) How would you rate your opinions toward this textbook?

| | Very much | Somewhat | Neither | Somewhat | Very much | |
|---|---|---|---|---|---|---|
| Boring | | | | | | Exciting |
| Useless | | | | | | Useful |
| Hard | | | | | | Easy |
| Irrelevant | | | | | | Applicable |

A Guttman scale is a composite scale, designed by Louis Guttman, that uses a series of items arranged in increasing order of intensity (least intense to most intense) of the concept. This type of scale allows us to understand the intensity of beliefs or feelings. Each item in the Guttman scale below has a weight (this is not indicated on the tool) which varies with the intensity of that item, and the weighted combination of each response is used as an aggregate measure of an observation.

Example Guttman Scale Items

- I often felt the material was not engaging Yes/No

- I was often thinking about other things in class Yes/No

- I was often working on other tasks during class Yes/No

- I will work to abolish research from the curriculum Yes/No

Notice how the items move from lower intensity to higher intensity. A researcher reviews the yes answers and creates a score for each participant.
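One simple way to turn the yes answers into a score is sketched below. It is a simplification: it counts yes answers with equal weight rather than applying the item weights mentioned above, since those weights are not given. The function names and the consistency check are illustrative assumptions.

```python
def guttman_score(answers):
    """Count the 'yes' answers; items are ordered least to most intense."""
    return sum(answers)

def fits_cumulative_pattern(answers):
    """True when every 'yes' comes before every 'no' in intensity order."""
    return answers == sorted(answers, reverse=True)

# True = Yes, False = No, in the same order as the four example items.
consistent = [True, True, False, False]
inconsistent = [False, True, False, True]

print(guttman_score(consistent), fits_cumulative_pattern(consistent))
print(guttman_score(inconsistent), fits_cumulative_pattern(inconsistent))
```

Both respondents score 2, but only the first follows the expected pattern of agreeing with the milder items before the more intense ones. A response pattern like the second may be worth a closer look before the score is interpreted.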

Composite measures: Scales and indices

Depending on your research design, your measure may be something you put on a survey or pre/post-test that you give to your participants. For a variable like age or income, one well-worded question may suffice. Unfortunately, most variables in the social world are not so simple. Motivation and satisfaction are multidimensional concepts. Relying on a single indicator like a question that asks “Yes or no, are you motivated?” does not encompass the complexity of motivation, including issues with mood, energy and happiness. There is no easy way to delineate between multidimensional and unidimensional concepts, as it’s all in how you think about your variable. Satisfaction could be validly measured using a unidimensional ordinal rating scale. However, if satisfaction were a key variable in our study, we would need a theoretical framework and conceptual definition for it. That means we’d probably have more indicators to ask about like timeliness, respect, sensitivity, and many others, and we would want our study to say something about what satisfaction truly means in terms of our other key variables. However, if satisfaction is not a key variable in your conceptual framework, it makes sense to operationalize it as a unidimensional concept.

For more complicated measures, researchers use scales and indices (sometimes called indexes) to measure their variables because they assess multiple indicators to develop a composite (or total) score. Composite scores provide a much greater understanding of concepts than a single item could. Although we won’t delve too deeply into the process of scale development, we will cover some important topics for you to understand how scales and indices developed by other researchers can be used in your project.

Although scales and indices differ in ways we will discuss later, they have several features in common.

- Both are ordinal measures of variables.

- Both can order the units of analysis in terms of specific variables.

- Both are composite measures.

Scales

The previous section discussed how to measure respondents’ responses to predesigned items or indicators belonging to an underlying construct. But how do we create the indicators themselves? The process of creating the indicators is called scaling. More formally, scaling is a branch of measurement that involves the construction of measures by associating qualitative judgments about unobservable constructs with quantitative, measurable metric units. Stevens (1946)[12] said, “Scaling is the assignment of objects to numbers according to a rule.” This process of measuring abstract concepts in concrete terms remains one of the most difficult tasks in empirical social science research.

The outcome of a scaling process is a scale, which is an empirical structure for measuring items or indicators of a given construct. Understand that multidimensional “scales”, as discussed in this section, are a little different from “rating scales” discussed in the previous section. A rating scale is used to capture the respondents’ reactions to a given item on a questionnaire. For example, an ordinally scaled item captures a value between “strongly disagree” and “strongly agree.” Attaching a rating scale to a statement or instrument is not scaling. Rather, scaling is the formal process of developing scale items, before rating scales can be attached to those items.

If creating your own scale sounds painful, don’t worry! For most multidimensional variables, you would likely be duplicating work that has already been done by other researchers. Specifically, this is a branch of science called psychometrics. You do not need to create a scale for motivation because scales such as the Intrinsic Motivation Inventory (IMI), General Causality Orientations Scale (GCOS), and the Sport Climate Questionnaire (SCQ) have been developed and refined over dozens of years to measure variables like motivation. As we will discuss in the next section, these scales have been shown to be reliable and valid. While you could create a new scale to measure motivation or satisfaction, a study with rigor would pilot test and refine that new scale over time to make sure it measures the concept accurately and consistently. This high level of rigor is often unachievable in student research projects because of the cost and time involved in pilot testing and validating, so using existing scales is recommended.

Unfortunately, there is no good one-stop-shop for psychometric scales. The Mental Measurements Yearbook provides a searchable database of measures for social science variables, though it is woefully incomplete and often does not contain the full documentation for scales in its database. You can access it from a university library’s list of databases. If you can’t find anything in there, your next stop should be the methods section of the articles in your literature review. The methods section of each article will detail how the researchers measured their variables, and often the results section is instructive for understanding more about measures. In a quantitative study, researchers may have used a scale to measure key variables and will provide a brief description of that scale, its name, and maybe a few example questions. If you need more information, look at the results section and tables discussing the scale to get a better idea of how the measure works. Looking beyond the articles in your literature review, searching Google Scholar using queries like “motivation scale” or “satisfaction scale” should also provide some relevant results. For example, searching for documentation for the Rosenberg Self-Esteem Scale (which we will discuss in the next section), I found this report from researchers investigating acceptance and commitment therapy which details this scale and many others used to assess mental health outcomes. If you find the name of the scale somewhere but cannot find the documentation (all questions and answers plus how to interpret the scale), a general web search with the name of the scale and “.pdf” may bring you to what you need. Or, to get professional help with finding information, always ask a librarian!

Unfortunately, these approaches do not guarantee that you will be able to view the scale itself or get information on how it is interpreted. Many scales cost money to use and may require training to properly administer. You may also find scales that are related to your variable but would need to be slightly modified to match your study’s needs. You could adapt a scale to fit your study; however, changing even small parts of a scale can influence its accuracy and consistency. While it is perfectly acceptable in student projects to adapt a scale without testing it first (time may not allow you to do so), pilot testing is always recommended for adapted scales, and researchers seeking to draw valid conclusions and publish their results must take this additional step.

Indices

An index is a composite score derived from aggregating measures of multiple concepts (called components) using a set of rules and formulas. It is different from a scale. Scales also aggregate measures; however, these measures examine different dimensions or the same dimension of a single construct. A well-known example of an index is the consumer price index (CPI), which is computed every month by the Bureau of Labor Statistics of the U.S. Department of Labor. The CPI is a measure of how much consumers have to pay for goods and services (in general) and is divided into eight major categories (food and beverages, housing, apparel, transportation, healthcare, recreation, education and communication, and “other goods and services”), which are further subdivided into more than 200 smaller items. Each month, government employees call all over the country to get the current prices of more than 80,000 items. Using a complicated weighting scheme that takes into account the location and probability of purchase for each item, analysts then combine these prices into an overall index score using a series of formulas and rules.

Another example of an index is the Duncan Socioeconomic Index (SEI). This index is used to quantify a person’s socioeconomic status (SES) and is a combination of three concepts: income, education, and occupation. Income is measured in dollars, education in years or degrees achieved, and occupation is classified into categories or levels by status. These very different measures are combined to create an overall SES index score. However, SES index measurement has generated a lot of controversy and disagreement among researchers and may not easily generalize from nation to nation. For a discussion of SES in Canada, check out Measures of Socio-Economic Status in Educational Research: The Canadian Context.

The process of creating an index is similar to that of a scale. First, conceptualize (define) the index and its constituent components. Though this appears simple, there may be a lot of disagreement on what components (concepts/constructs) should be included or excluded from an index. For instance, in the SES index, isn’t income correlated with education and occupation? And if so, should we include one component only or all three components? Reviewing the literature, using theories, and/or interviewing experts or key stakeholders may help resolve this issue. Second, operationalize and measure each component. For instance, how will you categorize occupations, particularly since some occupations may have changed with time (e.g., there were no Web developers before the Internet)? As we will see in step three below, researchers must create a rule or formula for calculating the index score. Again, this process may involve a lot of subjectivity, so validating the index score using existing or new data is important.
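As an illustration of what a rule or formula for an index might look like, the sketch below standardizes three components and averages them with equal weights. This is one common approach, not the formula behind the Duncan SEI or any published index: the data, the numeric occupation levels, and the equal weights are all assumptions.

```python
from statistics import mean, pstdev

def z_scores(values):
    """Put a component on a common scale (mean 0, standard deviation 1)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]

# Invented data for three people.
income = [20000, 40000, 60000]  # dollars
education = [10, 12, 20]        # years
occupation = [1, 2, 3]          # hypothetical status levels

weights = (1 / 3, 1 / 3, 1 / 3)  # equal weighting is an assumption
components = [z_scores(income), z_scores(education), z_scores(occupation)]

# Weighted sum of the standardized components for each person.
index = [
    sum(w * comp[i] for w, comp in zip(weights, components))
    for i in range(len(income))
]

print(index)
```

Standardizing first keeps income, which is measured in tens of thousands, from overwhelming education and occupation. Choosing different weights would produce different index scores, which is part of the subjectivity described above.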

Scale and index development are often taught in their own course in doctoral education, so it is unreasonable for you to expect to develop a consistently accurate measure within the span of a week or two. Using available indices and scales is recommended for this reason.

Differences between scales and indices

Though indices and scales yield a single numerical score or value representing a concept of interest, they are different in many ways. First, indices often comprise components that are very different from each other (e.g., income, education, and occupation in the SES index) and are measured in different ways. Conversely, scales typically involve a set of similar items that use the same rating scale (such as a five-point Likert scale about customer satisfaction).

Second, indices often combine objectively measurable values such as prices or income, while scales are designed to assess subjective or judgmental constructs such as attitude, prejudice, or self-esteem. Some argue that the sophistication of the scaling methodology makes scales different from indices, while others suggest that indexing methodology can be equally sophisticated. Nevertheless, indices and scales are both essential tools in social science research.

Scales and indices seem like clean, convenient ways to measure different phenomena in social science, but just like with a lot of research, we have to be mindful of the assumptions and biases underneath. What if a scale or an index was developed using only White women as research participants? Is it going to be useful for other groups? It very well might be, but when using a scale or index on a group for whom it hasn’t been tested, it will be very important to evaluate the validity and reliability of the instrument, which we address in the rest of the chapter.

Finally, it’s important to note that while scales and indices are often made up of nominal or ordinal variables, when we combine them into composite scores, we will treat those scores as interval/ratio variables.

Exercises

- Look back to your work from the previous section. Are your variables unidimensional or multidimensional?

- Describe the specific measures you will use (actual questions and response options you will use with participants) for each variable in your research question.

- If you are using a measure developed by another researcher but do not have all of the questions, response options, and instructions needed to implement it, put it on your to-do list to get them.

Step 3: How you will interpret your measures

The final stage of operationalization involves setting the rules for how the measure works and how the researcher should interpret the results. Sometimes, interpreting a measure can be incredibly easy. If you ask someone their age, you’ll probably interpret the results by noting the raw number (e.g., 22) someone provides and that it is lower or higher than other people’s ages. However, you could also recode that person into age categories (e.g., under 25, 20-29-years-old, generation Z, etc.). Even scales may be simple to interpret. If there is a scale of problem behaviors, one might simply add up the number of behaviors checked off–with a range from 1-5 indicating low risk of delinquent behavior, 6-10 indicating the student is moderate risk, etc. How you choose to interpret your measures should be guided by how they were designed, how you conceptualize your variables, the data sources you used, and your plan for analyzing your data statistically. Whatever measure you use, you need a set of rules for how to take any valid answer a respondent provides to your measure and interpret it in terms of the variable being measured.
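Interpretation rules like these can be written down explicitly. The sketch below follows the problem-behavior example, with cutoffs of 1-5 for low risk and 6-10 for moderate risk taken from the text. The handling of zero behaviors and of scores above 10 is an assumption added so that every valid answer has an interpretation.

```python
def interpret_risk(behaviors_checked):
    """Map a count of checked problem behaviors to a risk category."""
    if behaviors_checked < 0:
        raise ValueError("a count of behaviors cannot be negative")
    if behaviors_checked == 0:
        return "no behaviors reported"  # assumption: not specified in the text
    if behaviors_checked <= 5:
        return "low risk"
    if behaviors_checked <= 10:
        return "moderate risk"
    return "high risk"                  # assumption: not specified in the text

for count in (0, 3, 8, 12):
    print(count, interpret_risk(count))
```

Writing the rule this way makes gaps easy to spot. If a respondent could give an answer your rule does not cover, the operational definition is not finished.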

For more complicated measures like scales, refer to the information provided by the author for how to interpret the scale. If you can’t find enough information from the scale’s creator, look at how the results of that scale are reported in the results section of research articles.

One mistake I often see is students introducing another variable into their operational definition. This is incorrect. Your operational definition should mention only one variable: the variable being defined. While your study will certainly draw conclusions about the relationships between variables, that’s not what operationalization is. Operationalization specifies what instrument you will use to measure your variable and how you plan to interpret the data collected using that measure.

Operationalization is probably the trickiest component of basic research methods, so please don’t get frustrated if it takes a few drafts and a lot of feedback to get to a workable definition. At the time of this writing, the book’s original author was in the process of operationalizing the concept of “attitudes towards research methods.” Originally, he thought that he could gauge students’ attitudes toward research methods by looking at their end-of-semester course evaluations. As he became aware of the potential methodological issues with student course evaluations, he opted to use focus groups of students to measure their common beliefs about research. You may recall some of these opinions from Chapter 1, such as the common beliefs that research is boring, useless, and too difficult. After the focus group, he created a scale based on the opinions he gathered, and he plans to pilot test it with another group of students. After the pilot test, he expects that he will have to revise the scale again before he can implement the measure in a real research project.

Key Takeaways

- Operationalization involves spelling out precisely how a concept will be measured.

- Operational definitions must include the variable, the measure, and how you plan to interpret the measure.

- There are four different levels of measurement: nominal, ordinal, interval, and ratio (in increasing order of specificity).

- Scales and indices are common ways to collect information and involve using multiple indicators in measurement.

- A key difference between a scale and an index is that a scale contains multiple indicators for one concept, whereas an index examines multiple concepts (components).

- Using scales developed and refined by other researchers can improve the rigor of a quantitative study.

Exercises

Use the research question that you developed in the previous chapters and find a related scale or index that researchers have used. If you have trouble finding the exact phenomenon you want to study, get as close as you can.

- What is the level of measurement for each item on each tool? Take a second and think about why the tool’s creator decided to include these levels of measurement. Identify any levels of measurement you would change and why.

- If these tools don’t exist for what you are interested in studying, why do you think that is?

11.3 Measurement quality

Learning Objectives

Learners will be able to…

- Define and describe the types of validity and reliability

- Assess for systematic error

The previous sections provided insight into measuring concepts in social science research. We discussed the importance of identifying concepts and their corresponding indicators as a way to help us operationalize them. In essence, we now understand that when we think about our measurement process, we must be intentional and thoughtful in the choices that we make. This section is all about how to judge the quality of the measures you’ve chosen for the key variables in your research question.

–>Reliability and Validity: Really Important Sections<–

(If I could make it flash, I would)

Reliability

First, let’s say we’ve decided to measure alcoholism by asking people to respond to the following question: Have you ever had a problem with alcohol? If we measure alcoholism this way, then it is likely that anyone who identifies as an alcoholic would respond “yes.” This may seem like a good way to identify our group of interest, but think about how you and your peer group may respond to this question. Would participants respond differently after a wild night out, compared to any other night? Could an infrequent drinker’s current headache from last night’s glass of wine influence how they answer the question this morning? How would that same person respond to the question before consuming the wine? In each case, the same person might respond differently to the same question at different points, so it is possible that our measure of alcoholism has a reliability problem. Reliability in measurement is about consistency.

One common problem of reliability with social scientific measures is memory. If we ask research participants to recall some aspect of their own past behavior, we should try to make the recollection process as simple and straightforward for them as possible. Sticking with the topic of alcohol intake, if we ask respondents how much wine, beer, and liquor they’ve consumed each day over the course of the past 3 months, how likely are we to get accurate responses? Unless a person keeps a journal documenting their intake, there will very likely be some inaccuracies in their responses. On the other hand, we might get more accurate responses if we ask a participant how many drinks of any kind they have consumed in the past week.

Reliability can be an issue even when we’re not reliant on others to accurately report their behaviors. Perhaps a researcher is interested in observing how alcohol intake influences interactions in public locations. They may decide to conduct observations at a local pub by noting how many drinks patrons consume and how their behavior changes as their intake changes. What if the researcher has to use the restroom, and the patron next to them takes three shots of tequila during the brief period the researcher is away from their seat? The reliability of this researcher’s measure of alcohol intake depends on their ability to physically observe every instance of patrons consuming drinks. If they are unlikely to be able to observe every such instance, then perhaps their mechanism for measuring this concept is not reliable.

The following subsections describe the types of reliability that are important for you to know about, but keep in mind that you may see other approaches to judging reliability mentioned in the empirical literature.

Test-retest reliability

When researchers measure a construct that they assume to be consistent across time, then the scores they obtain should also be consistent across time. Test-retest reliability is the extent to which this is actually the case. For example, intelligence is generally thought to be consistent across time. A person who is highly intelligent today will be highly intelligent next week. This means that any good measure of intelligence should produce roughly the same scores for this individual next week as it does today. Clearly, a measure that produces highly inconsistent scores over time cannot be a very good measure of a construct that is supposed to be consistent (Whoops… pro-tip. Did you know the human race has been getting smarter over the past century[13]?).

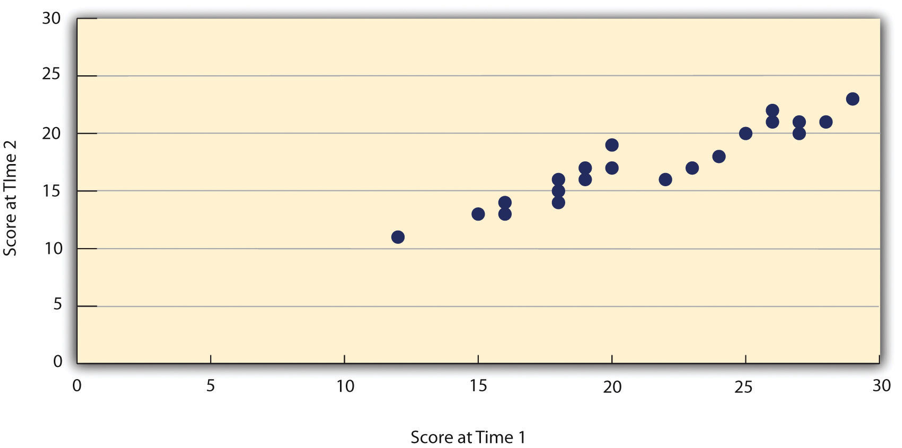

Assessing test-retest reliability requires using the measure on a group of people at one time and then using it again on the same group of people at a later time. Unlike an experiment, you aren’t giving participants an intervention but trying to establish a reliable baseline of the variable you are measuring. Once you have these two measurements, you then look at the correlation between the two sets of scores. This is typically done by graphing the data in a scatterplot and computing the correlation coefficient. Figure 11.2 shows the correlation between two sets of scores of several university students on the Rosenberg Self-Esteem Scale, administered two times, a week apart. The correlation coefficient for these data is +.95. In general, a test-retest correlation of +.80 or greater is considered to indicate good reliability.
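If you want to see the arithmetic behind a test-retest correlation, the following Python sketch computes a Pearson correlation coefficient by hand. The scores are made up for illustration (they are not the data shown in Figure 11.2), and the function and variable names are hypothetical.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with 2+ scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from each mean
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each administration
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when scores do not vary")
    return cross / sqrt(ss_x * ss_y)


# Made-up self-esteem scores for six students, measured one week apart.
time1 = [22, 25, 18, 29, 15, 27]
time2 = [23, 24, 19, 28, 17, 26]

r = pearson_r(time1, time2)
print(f"test-retest correlation: {r:.2f}")
print("meets the +.80 guideline" if r >= 0.80 else "below the +.80 guideline")
```

In practice you would usually let statistical software compute this for you, but the logic is the same: students who score high the first time should also score high the second time.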

Again, high test-retest correlations make sense when the construct being measured is assumed to be consistent over time, which is the case for intelligence, self-esteem, and the Big Five personality dimensions. But other constructs are not assumed to be stable over time. The very nature of mood, for example, is that it changes. So a measure of mood that produced a low test-retest correlation over a period of a month would not be a cause for concern.

Internal consistency

Another kind of reliability is internal consistency, which is the consistency of people’s responses across the items on a multiple-item measure. In general, all the items on such measures are supposed to reflect the same underlying construct, so people’s scores on those items should be correlated with each other. On the Rosenberg Self-Esteem Scale, people who agree that they are a person of worth should tend to agree that they have a number of good qualities. If people’s responses to the different items are not correlated with each other, then it would no longer make sense to claim that they are all measuring the same underlying construct. This is as true for behavioral and physiological measures as for self-report measures. For example, people might make a series of bets in a simulated game of roulette as a measure of their level of risk seeking. This measure would be internally consistent to the extent that individual participants’ bets were consistently high or low across trials. A statistic known as Cronbach’s alpha provides a way to measure how well the items of a scale are related to one another.
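As a rough illustration, Cronbach’s alpha can be computed from the variance of each item and the variance of respondents’ total scores. The sketch below uses the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The responses are made up and the function name is hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha; one row per respondent, one column per item."""
    k = len(responses[0])  # number of items on the scale
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer every item")
    # Sample variance of each item across respondents
    item_columns = list(zip(*responses))
    item_variance_sum = sum(variance(col) for col in item_columns)
    # Sample variance of each respondent's total score
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Made-up answers from five respondents to a three-item scale (1-4 agreement).
survey = [
    [4, 4, 3],
    [2, 1, 2],
    [3, 3, 3],
    [1, 2, 1],
    [4, 3, 4],
]

alpha = cronbach_alpha(survey)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Alpha rises toward 1 as respondents answer the items more consistently with one another. The scale’s author or the articles that use it will usually report what value they obtained.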

Interrater reliability

Many behavioral measures involve significant judgment on the part of an observer or a rater. Interrater reliability is the extent to which different observers are consistent in their judgments. For example, if you were interested in measuring university students’ social skills, you could make video recordings of them as they interacted with another student whom they are meeting for the first time. Then you could have two or more observers watch the videos and rate each student’s level of social skills. To the extent that each participant does, in fact, have some level of social skills that can be detected by an attentive observer, different observers’ ratings should be highly correlated with each other.
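When observers assign categories rather than numeric scores, agreement is often summarized with a statistic called Cohen’s kappa, which this chapter does not otherwise cover. It is included here only as one possible way to quantify interrater consistency. The sketch below compares two hypothetical observers’ ratings of ten students’ social skills; the data and names are made up.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Agreement between two raters, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set")
    n = len(rater_a)
    # Proportion of cases where the two raters chose the same category
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from how often each rater used each category
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when only one category is used")
    return (observed - expected) / (1 - expected)


# Two observers rate the same ten video recordings (made-up ratings).
observer_1 = ["high", "high", "medium", "low", "medium",
              "high", "low", "medium", "high", "low"]
observer_2 = ["high", "medium", "medium", "low", "medium",
              "high", "low", "low", "high", "low"]

kappa = cohens_kappa(observer_1, observer_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

A kappa of 1 means the observers agreed on every case, while a kappa near 0 means they agreed no more often than chance would predict. If your raters give numeric scores instead, a correlation coefficient like the one used for test-retest reliability is the more natural choice.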

Validity

Validity, another key element of assessing measurement quality, is the extent to which the scores from a measure represent the variable they are intended to. But how do researchers make this judgment? We have already considered one factor that they take into account—reliability. When a measure has good test-retest reliability and internal consistency, researchers should be more confident that the scores represent what they are supposed to. There has to be more to it, however, because a measure can be extremely reliable but have no validity whatsoever. For example, think about a math test of story problems designed to evaluate addition skill. If the story problems were written at the fifth grade reading level, but given to a first grade class, they would be reliable (students would consistently fail, all the time) but not valid (you wouldn’t get an accurate understanding of the student’s mathematical ability).

Discussions of validity usually divide it into several distinct “types.” But a good way to interpret these types is that they are other kinds of evidence—in addition to reliability—that should be taken into account when judging the validity of a measure.

Face validity

Face validity is the extent to which a measurement method appears “on its face” to measure the construct of interest. Most people would expect a self-esteem questionnaire to include items about whether they see themselves as a person of worth and whether they think they have good qualities. So a questionnaire that included these kinds of items would have good face validity. Although face validity can be assessed quantitatively—for example, by having a large sample of people rate a measure in terms of whether it appears to measure what it is intended to—it is usually assessed informally.

Face validity is at best a very weak kind of evidence that a measurement method is measuring what it is supposed to. One reason is that it is based on people’s intuitions about human behavior, which are frequently wrong. Math teachers might look at our test of story problems and see them as measuring addition skills, yet not realize the story problems are all written using language that is too complex for first grade students to grasp.

Content validity