3

Brenda Boyd

Penny Ralston-Berg

Abstract

This chapter explores the varying definitions of quality online course design in higher education from institutional, faculty, student, and instructional designer perspectives. Focusing on the design aspects of quality, the chapter describes three foundations of design practice: defining measurable learning objectives; designing for alignment between those objectives and the materials, activities, and assessments in an online course; and designing to encourage interaction among students, with the instructor, and with the course materials. This foundation can be built upon by adding media or technology, credentialing, or opportunities for students to learn by doing. Enhancements often require collaboration between faculty content experts and a diverse team of specialists, including instructional designers, technologists, and media specialists. These teams often use iterative methods to build, test, and revise designs before implementing enhancements in a live online course. Quality in the finished course can be measured through alignment mapping to confirm that all objectives are met, a course review to evaluate the design in its completed state, and the application of a set of quality standards to document evidence of quality.

Quality Measures of Great Instructional Design

Defining Quality

When exploring quality measures of instructional design, we must first recognize that definitions of quality online course design vary among key stakeholders in higher education. Students may define quality as a course that is designed well, has consistent structure and presentation, has clear navigation, and contains materials, activities, and assessments that align with course objectives (Hixon, Barczyk, Ralston-Berg, & Buckenmeyer, 2016). Students may also define quality as a course in which they have received an adequate amount of instruction from the instructor as well as detailed, meaningful feedback to accompany grades (Gaytan, 2015). Faculty may see quality in courses that support interaction with students (Gaytan, 2015), or in courses that show student success in terms of improvement of student work over time and the ability of students to apply what they have learned in a professional manner (Frazer, Sullivan, Weatherspoon, & Hussey, 2017). At an institutional level, quality can be associated with meeting the institutional mission and vision, completing institutional goals, and being good stewards of resources while optimizing functionality (Schindler, Puls-Elvidge, Welzant, & Crawford, 2015). Quality can also be measured in terms of whether institutions or programs meet the requirements set by the six regional accrediting bodies in the United States: New England Association of Schools and Colleges (NEASC); Middle States Commission on Higher Education (MSCHE); Southern Association of Colleges and Schools (SACS); Higher Learning Commission (HLC); Northwest Commission on Colleges and Universities (NWCCU); and the Western Association of Schools and Colleges (WASC) Senior College and University Commission (WSCUC) (Ryan, 2015). Institutions may also measure quality by the extent to which they help students learn and reach their potential (Schindler et al., 2015).

Instructional designers may focus on quality in terms of creating a positive student and faculty experience within a course or program that is aligned with its purpose, includes well-designed sequences, and is validated through evaluation (Piña, 2018). Courses and programs that are easy to navigate and structured so that students can meet measurable outcomes provide a positive experience for both the faculty teaching them and the students taking them. Designers also work to ensure that materials, activities, and assessments align with well-defined, measurable learning objectives. Given these varying perceptions of quality, it is necessary to identify consistent, specific standards by which quality is measured. Quality online course design is critical to online learning because it sets a solid foundation that the instructor can build upon throughout the life of the course.

Quality Standards

A seminal definition of quality related to instructional design practice is the creation of designs that are effective, efficient, and appealing (Merrill, Drake, Lacy, & Pratt, 1996) or effective, efficient, and engaging (Merrill, 2009). Effective means that objectives and desired outcomes are met; efficient means that designs are created within time, budget, and other constraints; and appealing refers to how well the instruction engages learners (Ralston-Berg & Lara, 2012). The concepts of effective, efficient, and appealing (or engaging) instruction are foundations of instructional design practice (Merrill, 2009; Merrill et al., 1996), and all three should be balanced in any design. Designers move these concepts from theory to practice with tools such as course maps to ensure the design is effective, project management software to remain efficient, and user experience testing to gauge engagement. These three quality indicators also guide the design of learning interventions by ensuring that any element added to the course is a proper curricular fit. For example, an interactive game may be engaging for students but not a good curricular fit if it does not align with the course learning objectives or takes too many resources to develop. These quality indicators are addressed within the design of a specific instructional course or product; in other words, quality is addressed at the course and activity levels.

There is also a need for quality to be measured at the program and institution levels, as a case from MarylandOnline illustrates. Initially, a measure was needed to assure institutions that the quality of a course at one Maryland institution was as good as that of a course at another, for the purpose of seat-sharing and reciprocal agreements. The Quality Matters (QM) Rubric concept was created to fulfill this need: to review the design of online courses to ensure they were of comparable quality. The initial version, known as the 2005–06 QM Rubric, was created through a U.S. Department of Education Fund for the Improvement of Postsecondary Education (FIPSE) grant awarded to MarylandOnline, a Maryland-based nonprofit consortium of community colleges and senior institutions (FIPSE, 2018). Agreeing on a shared definition of quality required the collective wisdom of those involved as well as extensive research on quality indicators.

Quality Rubrics

As the collective understanding of what works in online course design grows through research, the community continues to raise the bar to ensure all learners can be successful in online courses. Learners expect a quality education regardless of the modality in which a course is offered. Quality does not have to mean perfection, but it must be better than just “good enough”: QM sets its threshold for quality at about 85% rather than at 100%. The eight General Standards of the QM Rubric are: (1) Course Overview and Introduction; (2) Learning Objectives (Competencies); (3) Assessment and Measurement; (4) Instructional Materials; (5) Learning Activities and Learner Interaction; (6) Course Technology; (7) Learner Support; and (8) Accessibility and Usability. The QM Rubric also addresses the need for alignment of materials, activities, and assessments to measurable learning objectives. Today, the QM Rubric serves as an international standard for quality online course design, and the QM Certification Mark is an accepted indicator of quality online course design (Legon, 2015). The QM Rubric’s Specific Review Standards have also been applied to face-to-face courses. Since the creation of the initial QM Rubric, other course quality rubrics have been adapted, developed, and disseminated, such as the Rubric for Online Instruction from California State University, Chico (Piña & Bohn, 2015), the Illinois Online Network’s Quality Online Course Initiative (QOCI) Rubric (Illinois Online Network, 2012), and the Open SUNY Course Quality Review Rubric (OSCQR; Baldwin, Ching, & Hsu, 2018). Creators of these additional rubrics have framed quality in terms of quality versus high quality, markers of excellence, criteria for online programs, and criteria for blended learning, and have also addressed processes for design and delivery (Bauer & Barrett-Greenly, 2018).

Similar to the QM Rubric, other rubrics also include elements of course design, interaction, assessment, and learner support (Baldwin et al., 2018; Piña & Bohn, 2015). Rubrics are often created to define and describe the elements that online courses should include, giving institutions a baseline to build from. In many cases, institutional requirements or preferences (such as turnaround times for assignment feedback or email responses, or commitments to religious traditions at private institutions) are included in these rubrics and measured as part of course design review. Measuring quality and identifying indicators of quality have become priorities for many institutions in higher education.

Continuous Improvement

Rubrics provide a means not only to measure the quality of course design and/or delivery but also to provide data and insights for improvement or iterative design. The spirit of the QM Rubric is continuous improvement: working purposefully to keep improving online courses. This spirit is akin to the philosophy of the great coach Vince Lombardi, who said, “we are going to relentlessly chase perfection, knowing full well we will not catch it because nothing is perfect. But we are going to relentlessly chase it, because in the process we will catch excellence” (Carlson, 2004, p. 149). Courses will never be perfect, but we can be intentional about continuously reviewing and improving them in pursuit of quality and excellence. Some QM-subscribing institutions offer workshops that explain what the Specific Review Standards mean and how they apply to participants’ own courses, giving participants an opportunity to develop an improvement plan based on their findings. Another approach is to have faculty self-review their courses using the online tools QM provides. Some institutions conduct internal reviews of courses before submitting them for formal QM certification. This process can mimic the QM course review process and provides a framework for continuous improvement through incremental changes to the course. It is important to remember that online courses are fluid entities to be (re)designed, delivered, evaluated, and improved over time through an intentional and iterative process.

Foundations of Design Practice

What is great instructional design? Steve Jobs once said, “Design is a funny word. Some people think design means how it looks. But of course, if you dig deeper, it’s really how it works” (Wolf, 1996, question 39). The same can be applied to instructional design: How do courses work? How do learners reach desired outcomes? What design elements can be incorporated into courses to ensure objectives are achieved? There are many definitions of and models for instructional design. As previously mentioned, successful design is effective, efficient, and appealing (or engaging) to learners (Merrill, 2009; Merrill et al., 1996). Instructional design can also be seen as a systematic process “that is employed to develop education and training programs in a consistent and reliable fashion” (Reiser & Dempsey, 2007, p. 11). Heiser and Ralston-Berg (2019) describe instructional design in online distance education as an “iterative and systematic process of problem-solving to align learning theories, learner expectations, teaching pedagogy, educational technology, and user experience design with curriculum and course outcomes” (p. 282). The foundation of great design lies in analyzing the audience, needs, knowledge gaps, and situation to determine instructional goals and desired outcomes (Dick, Carey, & Carey, 2014). From there, effective, efficient, and engaging instructional programs can be designed and developed.

Measurable Objectives

What is it that we really want learners to know or be able to do at the completion of a course that they do not know or are not able to do at the start? The answer to this question helps determine measurable learning objectives (Acevedo, 2014). Measurable objectives are written so that what learners have achieved is observable through an assignment or an assessment. These objectives drive the course design. It is also important that objectives be presented in student-centered language, meaning that what is expected of students is clear to them and they can use the objectives to guide their study. Through the lens of design practice, objectives can also serve as “tiebreakers” during design and development. When weighing several possible solutions, designers refer to the objectives to be sure the option selected will be the most effective, efficient, and engaging way to meet the desired outcomes.

Alignment

Alignment of all materials, activities, assessments, and other course elements with learning objectives is essential for success. Reeves (2006) states:

In QM course reviews, these eight factors are examined through the alignment of objectives, materials, activities, assessments, and technology. Instructor and student roles are addressed by the overall course design, so that expectations and means of interaction are clear to both groups.

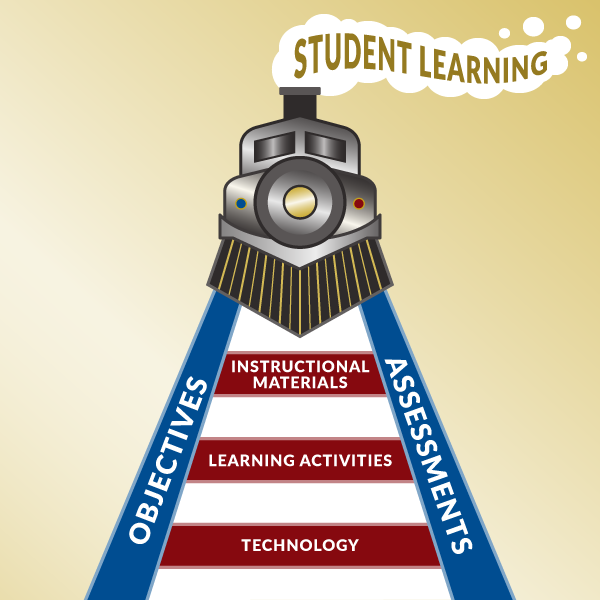

Six Specific Review Standards (SRSs) in the QM Rubric focus on the instructional design concept of alignment. Deviating somewhat from Reeves, these standards address measurable learning objectives (two SRSs), assessment, instructional materials, learning activities, and technologies. The SRSs are interconnected, together building a solid course design that helps learners reach the desired outcomes by the end of the course. In the train graphic (Figure 1), the track is laid with measurable course- and module-level learning objectives (2.1 and 2.2) and assessments (3.1). The supports for the track are the instructional materials (4.1), the learning activities (5.1), and the course technologies (6.1). These parts of the course are sequenced to help learners move from the objective to the assessment. Because the assessments (3.1) measure the learning objectives as written, the course design is solid. Without any one of the six alignment standards, the track is less stable (Boyd & Van Buren, 2020).

Figure 1. The importance of alignment

Of course, learning is rarely this linear and arranged; however, when it is arranged according to these instructional design principles, a guided pathway is created for learners that enables them to succeed. For the intended instructional outcomes and learning objectives to be achieved, alignment must be present in the course design.

Interaction

Fundamental to a quality online course is the design of opportunities for interaction that lead to meaningful learning experiences. Instructional designers draw on some or all of three frameworks to engage learners: Moore’s (1989) types of interaction; the types of presence in the community of inquiry framework (Garrison, Anderson, & Archer, 2010); and Gagné, Briggs, and Wager’s (1992) nine events of instruction. Together, these frameworks address how learners interact with instructors, content, and other learners (Moore, 1989); how communities develop through social, cognitive, and teaching presence (Garrison et al., 2010); and how to engage learners by employing the mental conditions for learning (Gagné et al., 1992). The three frameworks are not mutually exclusive; they can and must work together to support opportunities for interaction. Gaining learners’ attention and then giving them opportunities to interact with their peers, the instructor, and the content through social, cognitive, and teaching presence engages learners in experiences that motivate them to keep learning and to apply what they learn in their lives.

Enhancing the Design Foundation

In terms of design practice, measurable objectives, alignment, and interaction establish a foundation from which students will learn. A research-based quality rubric may also serve as a helpful guide for course design and development. However, none of these foundational elements requires multimedia, complex technologies, or advanced designs. In the spirit of continuous improvement, as courses are offered and feedback is received, course providers may choose to revise these foundationally sound courses. In doing so, they may enhance the course with additional media, technology, or activities that give learners additional or stronger means to meet the desired outcomes and objectives.

When planning course enhancements, it is also important to consider faculty needs. Are there activities or assessments in the course that require a disproportionately large amount of time to grade and to provide effective feedback? If so, technology might aid in the delivery, submission, or grading process. Faculty members who find themselves answering the same questions frequently may choose to clarify instructions. For faculty who spend an excessive amount of time explaining concepts and procedures, a video that presents the same information in a different way may better satisfy student needs. Adding grading rubrics can also clarify expectations, allow students to self-assess their work before submitting it for grading, and/or speed the grading process for faculty. It is important to periodically review where faculty and student time is spent and make adjustments that will improve course effectiveness and efficiency.

Media and Technology

In terms of gaining attention and providing opportunities for students to engage with content, the addition of media, games, simulation, or other technology can enhance a course.

Students may find objectives that require rote memorization of facts to be boring. Flashcards or simple games may help make the mundane task of memorizing facts more engaging. Contextual decision-making simulations allow students to make difficult decisions and experience the consequences of those decisions in a safe space while playing a specific role. Interaction with other players or characters while playing a role also allows students to practice professional communication and leadership techniques in the presence of stressors (Bauman & Ralston-Berg, 2015; Ralston-Berg & Lara, 2012).

Use of media provides access to distant, expensive, or dangerous situations that students may not be able to access on their own. For example, learners may view a tour of a distant location or the results of a dangerous chemical reaction. Video paired with communication tools gives students access to experts in the field whom they may not have had the opportunity to meet in person. It is important to remember that any technology or media added to enhance a course must meet an instructional need or help solve an instructional problem. New technologies and addition of media may seem exciting at first glance. However, if they are not clearly linked to learning objectives, they can easily become a distraction to students and a drain on time and resources.

To help prioritize and ensure a good fit for technology and media, consider where students have difficulty within the course, where they might benefit from professional practice of skills used on the job, or where they might benefit from ungraded practice (formative assessment) before graded activities. Identifying these areas helps focus time and resources where they will most benefit students and/or faculty. Such a prioritized list is also helpful when planning design and development work over time. Students may also provide insights into where media and technology could be added. When adding a new feature or enhancement to a course, ask students, “Where would you like to see more of this?” Their input can help prioritize where enhancements should be added next.

Credentialing

In the last few years, the movement toward competency-based higher education has highlighted the need to recognize skills in ways that let adults show what they are learning during the learning process, rather than waiting for a certificate, diploma, or degree to signify accomplishment (Grant, 2014). Using tools such as Credential Engine, learners can identify credentials that will set them apart when competing for jobs that require specialized skills, and can make informal learning visible. For example, learners who have completed coding camps or developed teamwork skills can display those accomplishments through digital credentials that can be shared publicly. Digital credentials, or badges, are not only a graphical representation of an accomplishment; they also include metadata describing what the credential means and what it took to earn it. Some credentials include links to evidence that supports the accomplishment. Collecting and displaying evidence-backed digital credentials shows potential employers what the learner knows and can do (Hickey, Andrews, & Chartrand, 2019). Adding credentials to social media career platforms such as LinkedIn lets learners share them, which in turn allows potential employers to explore a learner’s prior learning and gain insight into soft skills such as initiative and tenacity.

Learning by Doing

While digital credentials give learners a way to make their learning visible, active learning engages them in the learning process through performing targeted skills and tasks. Active learning is defined as “students doing things and thinking about what they are doing” (Bonwell & Eison, 1991, p. 3). Active learning can take many forms, from short, simple reflective exercises to lengthy, complex group activities (Bonwell & Sutherland, 1996). The two basic components of active learning are action and reflection. Learning objectives that require students to apply, analyze, synthesize, and then evaluate represent increasing levels of complexity (Bloom, 1956). These types of objectives often represent professional activities or practices students will perform on the job.

To adequately prepare students and to ensure they meet these types of learning objectives, students must demonstrate competency, including the ability to perform certain tasks in specific situations. Through situational practice and assessment, they learn by doing (Aleckson & Ralston-Berg, 2011). In terms of course enhancement, case studies or other activities that require students to analyze, plan, and discuss what they might do in a prepared case or situation may be revised into a more active simulation in which the situation changes as students progress through the activity. For critical thinking and decision-making, students may benefit from a situational simulation that requires them to adjust their strategy and approach based on the consequences of their previous decisions. A simulation allows students to become more emotionally invested in the experience and to practice professional activities such as decision-making in a safe space (Bauman & Ralston-Berg, 2015; Ralston-Berg & Lara, 2012). To close out an active learning experience, students benefit from an activity debriefing in which they reflect on their experience and discuss how well they performed, what they might have done differently, and how the experience relates to their future professional practice.

Collaboration

Active learning in the online classroom doesn’t happen on its own; collaboration is crucial to ensure that opportunities for active learning are built into the course from the start. Collaboration can be defined as “a phenomenon that occurs when: DIVERSE members of a working team are able to SHARE their expertise such that EMERGENT ideas and designs are created and no one member can claim OWNERSHIP” (Aleckson & Ralston-Berg, 2011). When professionals with diverse skills come together, share insights and expertise, and design solutions that none of them had in mind at the start of the process, the result is shared work. Online course design is generally where instructional designers add the most value to the online learner’s experience, and their impact can be seen in the various models used to develop effective online courses. The CHLOE 3 Report, discussing the impact of instructional design in online courses, states: “there seems to be growing acceptance that instructional design expertise can help to make online courses more effective” (Garrett, Legon, & Fredericksen, 2019). Faculty may not have the time, resources, or specific skills to turn their ideas for course enhancements into tangible solutions. Collaboration with instructional designers, media specialists, and other professionals can lead to quality enhancements and a meaningful learning experience for students (Aleckson & Ralston-Berg, 2011).

Iterative Methods

The key is to tie any enhancements or revisions to learning objectives and student data. Learning management systems provide many data points; however, it is imperative for the instructor to monitor learners’ progress and use that data to determine what is and is not working in the course and how it might be improved. Is the current course meeting the needs of students? Are students meeting the desired outcomes and achieving the defined learning objectives? These questions can be answered by treating the first runs of a course as pilots and gathering formative evaluation from learners with the intent of making improvements. Evidence from students is then used to continuously improve the course. More complex solutions may require additional steps in the design process, including formative evaluation strategies such as focus groups or end-user testing of initial concepts, interface designs, prototypes, and fully developed pilot offerings (Aleckson & Ralston-Berg, 2011). For example, in the creation of a series of online immersive Italian language courses, the design and development team created a one-lesson prototype, and test users were asked to evaluate the interface usability, presentation of content, learning sequence, and technical functionality of the lesson (Ralston-Berg & Xia, 2015). Based on user feedback, the original prototype was revised into a second prototype, which was tested with another group of test users. The third version of the lesson was then used as a model for the creation of lessons in all three courses. The two cycles of formative evaluation helped the team reach an effective, efficient, and engaging design.

Measurements of Quality

Standards and tools to measure quality can serve as an equalizer of sorts for course creators. Courses are measured against specific items within each standard or tool, not by the number of faculty, staff, or hours dedicated to creating the course. All courses can meet standards, regardless of circumstances, resources, and access to assistance.

Alignment Mapping

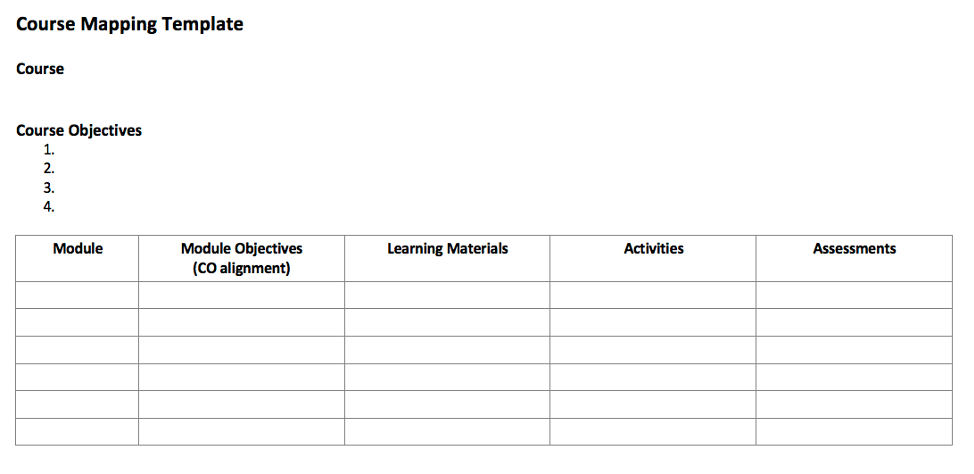

Instructional design that ensures alignment is fundamental to any course. This alignment is tracked in the course map (see Figure 2). The purpose of the course map is to make certain, in a bare-bones fashion, that all objectives will be measured and outline how the instructional materials and activities will scaffold learners to attain the objectives through aligned assessments. Course maps showing alignment within a course are used as part of the instructional design process in many contexts. Course maps may be used at the start of a new course design to plan the overall alignment in the course. They may also be used to map an existing course in preparation for revision—noting areas of the course which are out of alignment and prioritizing work to be completed to bring the course into alignment. A course map is also a valuable part of measuring the quality of a course.
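The bare-bones course map described above can be thought of as one row per objective, listing the materials, activities, and assessments aligned with it. The Python sketch below is illustrative only: the `ObjectiveRow` structure, the `check_course_map` function, and the sample objectives are hypothetical and do not reproduce any QM template or the map shown in Figure 2. It flags objectives that no assessment measures, as well as objectives that lack supporting materials or activities.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ObjectiveRow:
    """One row of a hypothetical bare-bones course map."""
    objective: str
    materials: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    assessments: list[str] = field(default_factory=list)


def check_course_map(rows: list[ObjectiveRow]) -> dict[str, list[str]]:
    """Return the alignment gaps found for each objective (only rows with gaps)."""
    gaps: dict[str, list[str]] = {}
    for row in rows:
        missing = []
        if not row.assessments:
            missing.append("no assessment measures this objective")
        if not row.materials:
            missing.append("no instructional materials support it")
        if not row.activities:
            missing.append("no learning activity practices it")
        if missing:
            gaps[row.objective] = missing
    return gaps


# Hypothetical module-level objectives (illustrative data only).
course_map = [
    ObjectiveRow("M1.1 Define alignment",
                 materials=["Reading 1"], activities=["Discussion 1"],
                 assessments=["Quiz 1"]),
    ObjectiveRow("M1.2 Write a measurable objective",
                 materials=["Video 1"]),
]

for objective, problems in check_course_map(course_map).items():
    print(objective, "->", "; ".join(problems))
```

Running the example flags only the second objective, which has a video but no activity or assessment yet; a designer revising the course would treat that row as work to prioritize. In practice a course map usually lives in a spreadsheet or mapping tool rather than in code; the sketch only makes the checking logic explicit.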

Figure 2. Example: Simple course map (Apodaca & Forsythe, 2017).

Course maps can also be more elaborate and interactive, allowing users to highlight items that are not aligned. For example, orphaned objectives not associated with any content, activities, or assessments in a course can be identified (see Figure 3). Likewise, content or activities that are not linked to any specific objectives can be highlighted. This analysis of alignment can be applied across courses or programs. Highlighting places where alignment is lacking aids course designers and providers in their journey of continuous improvement toward quality.
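Detecting orphans in both directions amounts to a set comparison over the links between course items and objectives. The sketch below assumes a hypothetical representation (objective IDs, item names, and `(item, objective)` link pairs); it is not how Coursetune or any other tool works internally, and only illustrates the logic of flagging orphaned objectives and unlinked content, course by course across a program.

```python
from __future__ import annotations


def find_orphans(objectives: set[str], items: set[str],
                 links: set[tuple[str, str]]) -> tuple[set[str], set[str]]:
    """Return (orphaned objectives, unlinked items) for one course.

    objectives: objective IDs defined for the course
    items: content, activities, and assessments in the course
    links: (item, objective) pairs meaning "this item supports this objective"
    """
    # Only count links that point at an objective actually defined in the course.
    valid_links = {(item, obj) for item, obj in links if obj in objectives}
    linked_objectives = {obj for _, obj in valid_links}
    linked_items = {item for item, _ in valid_links}
    return objectives - linked_objectives, items - linked_items


# Hypothetical program with two courses (illustrative data only).
program = {
    "Course A": {
        "objectives": {"O1", "O2", "O3"},
        "items": {"Reading 1", "Quiz 1", "Game 1"},
        "links": {("Reading 1", "O1"), ("Quiz 1", "O1"), ("Quiz 1", "O2")},
    },
    "Course B": {
        "objectives": {"O1", "O2"},
        "items": {"Case study", "Exam"},
        "links": {("Case study", "O1"), ("Exam", "O1"), ("Exam", "O2")},
    },
}

for course, data in program.items():
    orphaned, unlinked = find_orphans(data["objectives"], data["items"], data["links"])
    print(f"{course}: orphaned objectives {sorted(orphaned)}, "
          f"unlinked items {sorted(unlinked)}")
```

Here Course A is flagged twice: objective O3 has nothing supporting or measuring it, and Game 1 is not tied to any objective, which is the kind of engaging but unaligned element a review would question. Course B shows no gaps.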

Figure 3. Sample interactive course map. Screenshot from Coursetune c/o coursetune.com

Course Review

Examining this alignment is also one purpose of a course design review. A course review performed by peers provides an opportunity to get impartial feedback about a course based on specific standards or criteria. External reviews provide insight not only to the course’s designers but also to the reviewers, who might “idea shop” to see how others have made design decisions.

Application of Standards

Useful standards and tools related to the measurement of quality in instructional design and distance learning have been formulated by the International Board of Standards for Training, Performance and Instruction (IBSTPI), Quality Matters (QM), and the Online Learning Consortium (OLC). The IBSTPI standards focus on competencies that can be demonstrated by instructors, instructional designers, evaluators, training managers, and online learners (Klein, Spector, Grabowski, & de la Teja, 2004; Koszalka, Russ-Eft, & Reiser, 2013). The Higher Education Course Design Rubric by QM and the OSCQR Course Design Review Scorecard by OLC are comprehensive tools providing a large number of assessment items against which the features of online and blended/hybrid courses can be evaluated and improved (Online Learning Consortium, 2018; Quality Matters, 2018). The Association for Educational Communications and Technology (AECT) Instructional Design Standards for Distance Learning are intended to inform and provide guidance before, during, and after the design and development of online and blended/hybrid courses. They can be used in tandem with other tools to ensure that empirically sound principles of learning and instruction are built into courses designed for learning at a distance. A set of accompanying sample rubrics has also been developed for practical application of the standards (Piña, 2018).

When QM Standards are applied to a course design, the course representatives receive recommendations for improvement as well as evidence of innovative or particularly well-done design, supporting continuous improvement. Designers use QM Standards not only to review a course once it has been developed and taught a few times, but also as a guide for developing new online courses. Starting from the alignment framework in the QM Rubric, designers then incorporate additional known indicators of quality into the course design. Designing with the QM Rubric in mind helps ensure that designers and course providers have included all of the components of quality courses in their designs.

References

Acevedo, M. M. (2014). Collaborating with faculty to compose exemplary learning objectives. Internet Learning Journal, 3(1), 5–16.

Aleckson, J. D., & Ralston-Berg, P. (2011). MindMeld: Micro-collaboration between eLearning designers and instructor experts. Madison, WI: Atwood Publishers.

Apodaca, B., & Forsythe, K. (2017, September). Map your way to a quality course: Course mapping. Paper presented at the QM Connect Conference, Fort Worth, TX.

Baldwin, S., Ching, Y. H., & Hsu, Y. C. (2018). Online course design in higher education: A review of national and statewide evaluation instruments. TechTrends, 62(1), 46–57.

Bauer, S., & Barrett-Greenly, T. (2018). Checklists & rubrics. Retrieved from https://topkit.org/developing/checklists-rubrics/

Bauman, E. B., & Ralston-Berg, P. (2015). Virtual simulation. In J. C. Palaganas, J. C. Maxworthy, C. A. Epps, & M. E. Mancini (Eds.), Defining excellence in simulation programs (pp. 241–251). Philadelphia, PA: Wolters Kluwer.

Bloom, B. S. (1956). Taxonomy of educational objectives: Vol. 1. Cognitive domain (pp. 20–24). New York: McKay.

Bonwell, C. C., & Eison, J. A. (1991). Active learning: Creating excitement in the classroom (ASHE-ERIC Higher Education Report No. 1). Washington, DC: George Washington University (ED336049).

Bonwell, C. C., & Sutherland, T. E. (1996). The active learning continuum: Choosing activities to engage students in the classroom. New Directions for Teaching and Learning, 1996(67), 3–16.

Carlson, C. (2004). Game of my life: 25 stories of Packers football (p. 149).

Dick, W., Carey, L., & Carey, J. O. (2014). Systematic design of instruction (8th ed.). Boston, MA: Pearson.

FIPSE. (2018). Grant information. Retrieved from https://www.qualitymatters.org/qm-membership/faqs/fipse-grant-information

Fowlkes, J., & Boyd, B. (2015). Integrating alignment [PowerPoint slides]. Retrieved from https://www.slideshare.net/SBCTCProfessionalLearning/integrating-alignment-brenda

Frazer, C., Sullivan, D. H., Weatherspoon, D., & Hussey, L. (2017). Faculty perceptions of online teaching effectiveness and indicators of quality. Nursing Research and Practice, 2017, 1–6.

Gagné, R. M., Briggs, L. J., & Wager, W. W. (1992). Principles of instructional design (4th ed.). Fort Worth, TX: Harcourt Brace Jovanovich College Publishers.

Garrett, R., Legon, R., & Fredericksen, E. E. (2019). CHLOE 3 behind the numbers: The changing landscape of online education 2019. Retrieved from https://www.qualitymatters.org/qa-resources/resource-center/articles-resources/CHLOE-3-report-2019

Garrison, D. R., Anderson, T., & Archer, W. (2010). The first decade of the community of inquiry framework: A retrospective. The Internet and Higher Education, 13(1–2), 5–9.

Gaytan, J. (2015). Comparing faculty and student perceptions regarding factors that affect student retention in online education. American Journal of Distance Education, 29(1), 56–66.

Grant, S. L. (2014). What counts as learning: Open digital badges for new opportunities. Irvine, CA: Digital Media and Learning Research Hub.

Heiser, R., & Ralston-Berg, P. (2019). Active learning strategies for optimal learning. In M. G. Moore & W. C. Diehl (Eds.), Handbook of distance education (4th ed., pp. 281–294). New York: Routledge.

Hickey, D. T., Andrews, C. D., & Chartrand, G. T. (2019). Digital badges for capturing, recognizing, endorsing, and motivating broad forms of collaborative learning. In K. Lund, G. Niccolai, E. Lavoué, C. E. Hmelo-Silver, G. Gweon, & M. Baker (Eds.), A wide lens: Combining embodied, enactive, extended, and embedded learning in collaborative settings, 13th International Conference on Computer Supported Collaborative Learning (CSCL) 2019 (pp. 656–659). Lyon, France: International Society of the Learning Sciences.

Hixon, E., Barczyk, C., Ralston-Berg, P., & Buckenmeyer, J. (2016). Online course quality: What do nontraditional students value? Online Journal of Distance Learning Administration, 19(4). Retrieved from http://www.westga.edu/%7Edistance/ojdla/winter194/hixon_barczyk_ralston-berg_buckenmeyer194.html

Illinois Online Network. (2012). Rubric: Quality online course initiative. Retrieved from http://www.ion.uillinois.edu/initiatives/qoci/index.asp

Klein, J. D., Spector, J. M., Grabowski, B., & de la Teja, I. (2004). Instructor competencies: Standards for face-to-face, online, and blended settings. Greenwich, CT: IAP.

Koszalka, T. A., Russ-Eft, D. F., & Reiser, R. (2013). Instructional designer competencies: The standards. Greenwich, CT: IAP.

Legon, R. (2015). Measuring the impact of the Quality Matters Rubric™: A discussion of possibilities. American Journal of Distance Education, 29(3), 166–173.

Merrill, M. D. (2009). Finding e³ (effective, efficient, and engaging) instruction. Educational Technology, 49(3), 15–26.

Merrill, M. D., Drake, L., Lacy, M. J., & Pratt, J. (1996). Reclaiming instructional design. Educational Technology, 36(5), 5–7.

Moore, M. G. (1989). Editorial: Three types of interaction. American Journal of Distance Education, 3(2), 1–7. https://doi.org/10.1080/08923648909526659

Online Learning Consortium. (2018). OSCQR course design review scorecard. Retrieved from https://onlinelearningconsortium.org/consult/oscqr-course-design-review/

Piña, A. A. (2018). AECT instructional design standards for distance learning. TechTrends, 62(3), 305–307.

Piña, A. A., & Bohn, L. (2015). Integrating accreditation guidelines and quality scorecard for evaluating online programs. Distance Learning, 12(4), 1–6.

Quality Matters. (2018). Quality Matters program rubric—Higher education. Retrieved from https://www.qualitymatters.org/rubric

Ralston-Berg, P., & Lara, M. (2012). Fitting virtual reality and game-based learning into an existing curriculum. In E. Bauman (Ed.), Games and simulation for nursing education (pp. 127–145). New York: Springer.

Ralston-Berg, P., & Xia, J. (2015, November). Can students learn to speak Italian from an online, story-based tour of Italy? Design showcase presented at the Association for Educational Communications and Technology International Convention, Indianapolis, IN.

Reeves, T. C. (2006). How do you know they are learning? The importance of alignment in higher education. International Journal of Learning Technology, 2(4), 294–309.

Reiser, R. A., & Dempsey, J. V. (2007). Trends and issues in instructional design (2nd ed.). Upper Saddle River, NJ: Pearson Education, Inc.

Ryan, T. (2015). Quality assurance in higher education: A review of literature. Higher Learning Research Communications, 5(4). https://doi.org/10.18870/hlrc.v5i4.257

Schindler, L., Puls-Elvidge, S., Welzant, H., & Crawford, L. (2015). Definitions of quality in higher education: A synthesis of the literature. Higher Learning Research Communications, 5(3), 3–13.

Wolf, G. (1996). Steve Jobs: The next insanely great thing. Wired Magazine, 4, 67–76.