
The most recent version of this chapter is available at: https://opentext.ku.edu/teams/chapter/cooperation/

From the Noba Project

By Jake P. Moskowitz and Paul K. Piff

University of California, Irvine

Learning Objectives

- Define “cooperation”

- Distinguish between different social value orientations

- List 2 influences on cooperation

- Explain 2 methods psychologists use to research cooperation

Introduction

People cooperate with others throughout their lives. Whether on the playground with friends, at home with family, or at work with colleagues, cooperation is a natural instinct (Keltner, Kogan, Piff, & Saturn, 2014). Children as young as 14 months cooperate with others on joint tasks (Warneken, Chen, & Tomasello, 2006; Warneken & Tomasello, 2007). Humans’ closest evolutionary relatives, chimpanzees and bonobos, maintain long-term cooperative relationships as well, sharing resources and caring for each other’s young (de Waal & Lanting, 1997; Langergraber, Mitani, & Vigilant, 2007). Ancient animal remains found near early human settlements suggest that our ancestors hunted in cooperative groups (Mithen, 1996). Cooperation, it seems, is embedded in our evolutionary heritage.

Yet, cooperation can also be difficult to achieve; there are often breakdowns in people’s ability to work effectively in teams, or in their willingness to collaborate with others. Even with issues that can only be solved through large-scale cooperation, such as climate change and world hunger, people can have difficulties joining forces with others to take collective action. Psychologists have identified numerous individual and situational factors that influence the effectiveness of cooperation across many areas of life. From the trust that people place in others to the lines they draw between “us” and “them,” many different processes shape cooperation. This module will explore these individual, situational, and cultural influences on cooperation.

The Prisoner’s Dilemma

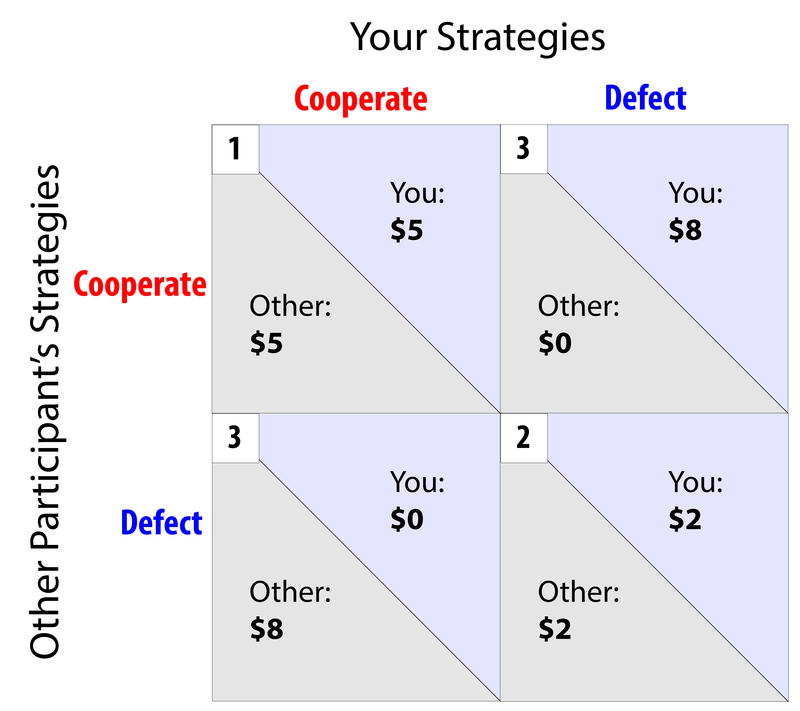

Imagine that you are a participant in a social experiment. As you sit down, you are told that you will be playing a game with another person in a separate room. The other participant is also part of the experiment but the two of you will never meet. In the experiment, there is the possibility that you will be awarded some money. Both you and your unknown partner are required to make a choice: either choose to “cooperate,” maximizing your combined reward, or “defect,” (not cooperate) and thereby maximize your individual reward. The choice you make, along with that of the other participant, will result in one of three unique outcomes to this task, illustrated below in Figure 1. If you and your partner both cooperate (1), you will each receive $5. If you and your partner both defect (2), you will each receive $2. However, if one partner defects and the other partner cooperates (3), the defector will receive $8, while the cooperator will receive nothing. Remember, you and your partner cannot discuss your strategy. Which would you choose? Striking out on your own promises big rewards but you could also lose everything. Cooperating, on the other hand, offers the best benefit for the most people but requires a high level of trust.

This scenario, in which two people independently choose between cooperation and defection, is known as the prisoner’s dilemma. It gets its name from the situation in which two prisoners who have committed a crime are given the opportunity to either (A) both confess their crime (and get a moderate sentence), (B) rat out their accomplice (and get a lesser sentence), or (C) both remain silent (and avoid punishment altogether). Psychologists use various forms of the prisoner’s dilemma scenario to study self-interest and cooperation. Whether framed as a monetary game or a prison game, the prisoner’s dilemma illuminates a conflict at the core of many decisions to cooperate: it pits the motivation to maximize personal reward against the motivation to maximize gains for the group (you and your partner combined).

For someone trying to maximize his or her own personal reward, the most “rational” choice is to defect (not cooperate), because defecting always results in a larger personal reward, regardless of the partner’s choice. However, when the two participants view their partnership as a joint effort (such as a friendly relationship), cooperating is the best strategy of all, since it provides the largest combined sum of money ($10—which they share), as opposed to partial cooperation ($8), or mutual defection ($4). In other words, although defecting represents the “best” choice from an individual perspective, it is also the worst choice to make for the group as a whole.
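The arithmetic behind this comparison can be checked with a short sketch. It uses only the dollar amounts from the example above; the function and variable names are illustrative choices, not part of any standard experimental software.

```python
# Payoffs in dollars from the example above, keyed by (my_choice, partner_choice).
# Each value is (my_reward, partner_reward).
PAYOFFS = {
    ("cooperate", "cooperate"): (5, 5),
    ("cooperate", "defect"): (0, 8),
    ("defect", "cooperate"): (8, 0),
    ("defect", "defect"): (2, 2),
}


def my_reward(mine, partner):
    """Reward for the player making the choice `mine`."""
    return PAYOFFS[(mine, partner)][0]


def combined_reward(mine, partner):
    """Total reward earned by both players together."""
    return sum(PAYOFFS[(mine, partner)])


def defecting_always_pays_more():
    """True if defecting beats cooperating whatever the partner chooses."""
    return all(
        my_reward("defect", partner) > my_reward("cooperate", partner)
        for partner in ("cooperate", "defect")
    )


if __name__ == "__main__":
    for (mine, partner), rewards in PAYOFFS.items():
        print(mine, partner, rewards, "combined:", combined_reward(mine, partner))
    print("Defecting always pays more for the individual:",
          defecting_always_pays_more())
```

Running the sketch shows both halves of the dilemma at once: defecting yields the larger personal reward against either partner choice ($8 versus $5, and $2 versus $0), while mutual cooperation yields the largest combined reward ($10).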

This divide between personal and collective interests is a key obstacle that prevents people from cooperating. Cooperation occurs when multiple partners work together toward a common goal that will benefit everyone. In scenarios like the prisoner’s dilemma, however, even though cooperation may benefit the whole group, individuals can often earn even larger personal rewards by defecting.

You can see a small, real-world example of the prisoner’s dilemma phenomenon at live music concerts. At venues with seating, many audience members will choose to stand, hoping to get a better view of the musicians onstage. As a result, the people sitting directly behind those now-standing people are also forced to stand to see the action onstage. This creates a chain reaction in which the entire audience now has to stand, just to see over the heads of the crowd in front of them. While choosing to stand may improve one’s own concert experience, it creates a literal barrier for the rest of the audience, hurting the overall experience of the group.

Simple models of rational self-interest predict 100% defection in cooperative tasks. That is, if people were only interested in benefiting themselves, we would always expect to see selfish behavior. Instead, there is a surprising tendency to cooperate in the prisoner’s dilemma and similar tasks (Batson & Moran, 1999; Oosterbeek, Sloof, & Van De Kuilen, 2004). Given the clear incentives to defect, why do some people choose to cooperate, whereas others choose to defect?

Individual Differences in Cooperation

Social Value Orientation

One key factor related to individual differences in cooperation is the extent to which people value not only their own outcomes, but also the outcomes of others. Social value orientation (SVO) describes people’s preferences when dividing important resources between themselves and others (Messick & McClintock, 1968). A person might, for example, generally be competitive with others, or cooperative, or self-sacrificing. People with different social values differ in the importance they place on their own positive outcomes relative to the outcomes of others. For example, you might give your friend gas money because she drives you to school, even though that means you will have less spending money for the weekend. In this example, you are demonstrating a cooperative orientation.

People generally fall into one of three categories of SVO: cooperative, individualistic, or competitive. While most people want to bring about positive outcomes for all (cooperative orientation), certain types of people are less concerned about the outcomes of others (individualistic), or even seek to undermine others in order to get ahead (competitive orientation).

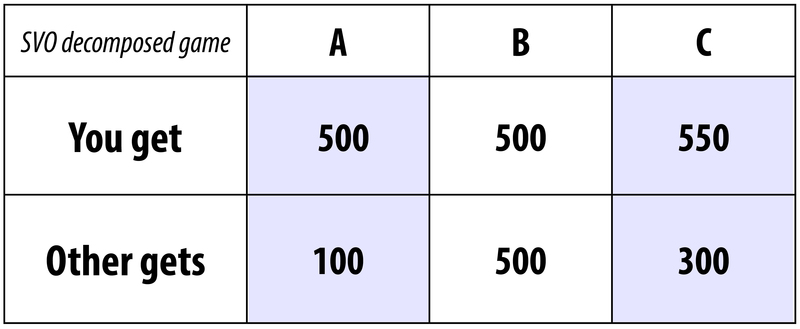

Are you curious about your own orientation? One technique psychologists use to sort people into one of these categories is to have them play a series of decomposed games—short laboratory exercises that involve making a choice from various distributions of resources between oneself and an “other.” Consider the example shown in Figure 2, which offers three different ways to distribute a valuable resource (such as money). People with competitive SVOs, who try to maximize their relative advantage over others, are most likely to pick option A. People with cooperative SVOs, who try to maximize joint gain for both themselves and others, are more likely to split the resource evenly, picking option B. People with individualistic SVOs, who always maximize gains to the self, regardless of how it affects others, will most likely pick option C.


Researchers have found that a person’s SVO predicts how cooperative he or she is in both laboratory experiments and the outside world. For example, in one laboratory experiment, groups of participants were asked to play a commons dilemma game. In this game, participants each took turns drawing from a central collection of points to be exchanged for real money at the end of the experiment. These points represented a common-pool resource for the group, like valuable goods or services in society (such as farm land, ground water, and air quality) that are freely accessible to everyone but prone to overuse and degradation. Participants were told that, while the common-pool resource would gradually replenish after the end of every turn, taking too much of the resource too quickly would eventually deplete it. The researchers found that participants with cooperative SVOs withdrew fewer resources from the common-pool than those with competitive and individualistic SVOs, indicating a greater willingness to cooperate with others and act in a way that is sustainable for the group (Kramer, McClintock, & Messick, 1986; Roch & Samuelson, 1997).

Research has also shown that people with cooperative SVOs are more likely than people with competitive and individualistic SVOs to commute to work by public transportation, an act of cooperation that can help reduce carbon emissions, rather than drive themselves (Van Vugt, Meertens, & Van Lange, 1995; Van Vugt, Van Lange, & Meertens, 1996). People with cooperative SVOs also more frequently engage in behavior intended to help others, such as volunteering and giving money to charity (McClintock & Allison, 1989; Van Lange, Bekkers, Schuyt, & Van Vugt, 2007). Taken together, these findings show that people with cooperative SVOs act with greater consideration for the overall well-being of others and the group as a whole, using resources in moderation and taking more effortful measures (like using public transportation to protect the environment) to benefit the group.

Empathic Ability

Empathy is the ability to feel and understand another’s emotional experience. When we empathize with someone else, we take on that person’s perspective, imagining the world from his or her point of view and vicariously experiencing his or her emotions (Davis, 1994; Goetz, Keltner, & Simon-Thomas, 2010). Research has shown that when people empathize with their partner, they act with greater cooperation and overall altruism, the desire to help the partner even at a potential cost to the self. People who can experience and understand the emotions of others are better able to work with others in groups, earning higher job performance ratings on average from their supervisors, even after adjusting for different types of work and other aspects of personality (Côté & Miners, 2006).

When empathizing with a person in distress, the natural desire to help is often expressed as a desire to cooperate. In one study, just before playing an economic game with a partner in another room, participants were given a note revealing that their partner had just gone through a rough breakup and needed some cheering up. While half of the subjects were urged by the experimenters to “remain objective and detached,” the other half were told to “try and imagine how the other person feels.” Though both groups received the same information about their partner, those who were encouraged to engage in empathy—by actively experiencing their partner’s emotions—acted with greater cooperation in the economic game (Batson & Moran, 1999). The researchers also found that people who empathized with their partners were more likely to act cooperatively, even after being told that their partner had already made a choice to not cooperate (Batson & Ahmad, 2001)! Evidence of the link between empathy and cooperation has even been found in studies of preschool children (Marcus, Telleen, & Roke, 1979). From a very early age, emotional understanding can foster cooperation.

Although empathizing with a partner can lead to more cooperation between two people, it can also undercut cooperation within larger groups. In groups, empathizing with a single person can lead people to abandon broader cooperation in favor of helping only the target individual. In one study, participants were asked to play a cooperative game with three partners. In the game, participants were asked to (A) donate resources to a central pool, (B) donate resources to a specific group member, or (C) keep the resources for themselves. According to the rules, all donations to the central pool would be increased by 50% and then distributed evenly, resulting in a net gain to the entire group. Objectively, this might seem to be the best option. However, participants who were encouraged to imagine the feelings of a partner said to be in distress were more likely than those who remained detached and objective to donate their resources to that partner instead of cooperating with the group (Batson et al., 1995). Though empathy can create strong cooperative bonds between individuals, it can sometimes lead to actions that, despite being well-intentioned, end up undermining the group’s best interests.
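To see why donating to the central pool is the best option for the group, it may help to work through the arithmetic. The sketch below is only an illustration: the group size (the participant plus three partners) and the 50% increase come from the description above, while the endowment of 8 units and the assumption that the pool is split among all four members, donor included, are hypothetical choices made to keep the numbers simple.

```python
GROUP_SIZE = 4         # the participant plus three partners
POOL_MULTIPLIER = 1.5  # donations to the central pool are increased by 50%
ENDOWMENT = 8          # hypothetical amount, chosen only for easy arithmetic


def group_total(choice):
    """Resources held by the whole group after one participant acts.

    Only the acting participant's endowment is counted, to isolate the
    effect of this single decision.
    """
    if choice == "pool":
        # The donation grows by 50% before being shared out.
        return ENDOWMENT * POOL_MULTIPLIER
    if choice in ("partner", "keep"):
        # The resources change hands (or not) but do not grow.
        return ENDOWMENT
    raise ValueError(f"unknown choice: {choice}")


def donor_share_from_pool():
    """What the donor gets back, assuming an even split among all members."""
    return ENDOWMENT * POOL_MULTIPLIER / GROUP_SIZE


if __name__ == "__main__":
    for choice in ("pool", "partner", "keep"):
        print(choice, group_total(choice))
    print("donor's share after pooling:", donor_share_from_pool())
```

Under these assumptions, pooling turns 8 units into 12 for the group, whereas giving to one partner or keeping the resources leaves the group total at 8. The donor personally receives only 3 units back from the pool, which is why pooling is a cooperative act rather than a self-interested one.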

Situational Influences on Cooperation

Communication and Commitment

Open communication between people is one of the best ways to promote cooperation (Dawes, McTavish, & Shaklee, 1977; Dawes, 1988). This is because communication provides an opportunity to size up the trustworthiness of others. It also affords us a chance to prove our own trustworthiness, by verbally committing to cooperate with others. Since cooperation requires people to enter a state of vulnerability and trust with partners, we are very sensitive to the social cues and interactions of potential partners before deciding to cooperate with them.

In one line of research, groups of participants were allowed to chat for five minutes before playing a multi-round “public goods” game. During the chats, the players were allowed to discuss game strategies and make verbal commitments about their in-game actions. While some groups were able to reach a consensus on a strategy (e.g., “always cooperate”), other groups failed to reach a consensus within their allotted five minutes or even picked strategies that ensured noncooperation (e.g., “every person for themselves”). The researchers found that when group members made explicit commitments to each other to cooperate, they ended up honoring those commitments and acting with greater cooperation. Interestingly, the effect of face-to-face verbal commitments persisted even when the cooperation game itself was completely anonymous (Kerr & Kaufman-Gilliland, 1994; Kerr, Garst, Lewandowski, & Harris, 1997). This suggests that those who explicitly commit to cooperate are driven not by the fear of external punishment by group members, but by their own personal desire to honor such commitments. In other words, once people make a specific promise to cooperate, they are driven by “that still, small voice,” the voice of their own inner conscience, to fulfill that commitment (Kerr et al., 1997).

Trust

When it comes to cooperation, trust is key (Pruitt & Kimmel, 1977; Parks, Henager, & Scamahorn, 1996; Chaudhuri, Sopher, & Strand, 2002). Working with others toward a common goal requires a level of faith that our partners will repay our hard work and generosity, and not take advantage of us for their own selfish gains. Social trust, or the belief that another person’s actions will be beneficial to one’s own interests (Kramer, 1999), enables people to work together as a single unit, pooling their resources to accomplish more than they could individually. Trusting others, however, depends on their actions and reputation.

One common example of the difficulties in trusting others that you might recognize from being a student occurs when you are assigned a group project. Many students dislike group projects because they worry about “social loafing”—the way that one person expends less effort but still benefits from the efforts of the group. Imagine, for example, that you and five other students are assigned to work together on a difficult class project. At first, you and your group members split the work up evenly. As the project continues, however, you notice that one member of your team isn’t doing his “fair share.” He fails to show up to meetings, his work is sloppy, and he seems generally uninterested in contributing to the project. After a while, you might begin to suspect that this student is trying to get by with minimal effort, perhaps assuming others will pick up the slack. Your group now faces a difficult choice: either join the slacker and abandon all work on the project, causing it to collapse, or keep cooperating and allow for the possibility that the uncooperative student may receive a decent grade for others’ work.

If this scenario sounds familiar to you, you’re not alone. Economists call this situation the free rider problem: individuals benefit from the cooperation of others without contributing anything in return (Grossman & Hart, 1980). Although these sorts of actions may benefit the free rider in the short term, free riding can have a negative impact on a person’s social reputation over time. In the above example, for instance, the “free riding” student may develop a reputation as lazy or untrustworthy, leading others to be less willing to work with him or her in the future.

Indeed, research has shown that a poor reputation for cooperation can serve as a warning sign for others not to cooperate with the person in disrepute. For example, in one experiment involving a group economic game, participants seen as being uncooperative were punished harshly by their fellow participants. According to the rules of the game, individuals took turns being either a “donor” or a “receiver” over the course of multiple rounds. If donors chose to give up a small sum of actual money, receivers would receive a slightly larger sum, resulting in an overall net gain. However, unbeknownst to the group, one participant was secretly instructed never to donate. After just a few rounds of play, this individual was effectively shunned by the rest of the group, receiving almost zero donations from the other members (Milinski, Semmann, Bakker, & Krambeck, 2001). When someone is seen being consistently uncooperative, other people have no incentive to trust him/her, resulting in a collapse of cooperation.

On the other hand, people are more likely to cooperate with others who have a good reputation for cooperation and are therefore deemed trustworthy. In one study, people played a group economic game similar to the one described above: over multiple rounds, they took turns choosing whether to donate to other group members. Over the course of the game, donations were more frequently given to individuals who had been generous in earlier rounds of the game (Wedekind & Milinski, 2000). In other words, individuals seen cooperating with others were afforded a reputational advantage, earning them more partners willing to cooperate and a larger overall monetary reward.

Group Identification

Another factor that can impact cooperation is a person’s social identity, or the extent to which he or she identifies as a member of a particular social group (Tajfel & Turner, 1979/1986). People can identify with groups of all shapes and sizes: a group might be relatively small, such as a local high school class, or very large, such as a national citizenship or a political party. While these groups are often bound together by shared goals and values, they can also form according to seemingly arbitrary qualities, such as musical taste, hometown, or even completely randomized assignment, such as a coin toss (Tajfel, Billig, Bundy, & Flament, 1971; Bigler, Brown, & Markell, 2001; Locksley, Ortiz, & Hepburn, 1980). When members of a group place a high value on their group membership, their identity (the way they view themselves) can be shaped in part by the goals and values of that group.

Research shows that when people’s group identity is emphasized (for example, when laboratory participants are referred to as “group members” rather than “individuals”), they are less likely to act selfishly in a commons dilemma game. In such experiments, so-called “group members” withdraw fewer resources, which promotes the sustainability of the group (Brewer & Kramer, 1986). In one study, students who strongly identified with their university were less likely to leave a cooperative group of fellow students when given an attractive option to exit (Van Vugt & Hart, 2004). In addition, the strength of a person’s identification with a group or organization is a key driver behind participation in large-scale cooperative efforts, such as collective action in political and workers’ groups (Klandermans, 2002), and engaging in organizational citizenship behaviors (Cropanzano & Byrne, 2000).

Emphasizing group identity is not without its costs: although it can increase cooperation within groups, it can also undermine cooperation between groups. Researchers have found that groups interacting with other groups are more competitive and less cooperative than individuals interacting with other individuals, a phenomenon known as interindividual-intergroup discontinuity (Schopler & Insko, 1999; Wildschut, Pinter, Vevea, Insko, & Schopler, 2003). For example, groups interacting with other groups displayed greater self-interest and reduced cooperation in a prisoner’s dilemma game than did individuals completing the same tasks with other individuals (Insko et al., 1987). Such problems with trust and cooperation are largely due to people’s general reluctance to cooperate with members of an outgroup, or those outside the boundaries of one’s own social group (Allport, 1954; Van Vugt, Biel, Snyder, & Tyler, 2000). Outgroups do not have to be explicit rivals for this effect to take place. Indeed, in one study, simply telling groups of participants that other groups preferred a different style of painting led them to behave less cooperatively than pairs of individuals completing the same task (Insko, Kirchner, Pinter, Efaw, & Wildschut, 2005). Though a strong group identity can bind individuals within the group together, it can also drive divisions between different groups, reducing overall trust and cooperation on a larger scope.

Under the right circumstances, however, even rival groups can be turned into cooperative partners in the presence of superordinate goals. In a classic demonstration of this phenomenon, Muzafer Sherif and colleagues observed the cooperative and competing behaviors of two groups of twelve-year-old boys at a summer camp in Robbers Cave State Park, in Oklahoma (Sherif, Harvey, White, Hood, & Sherif, 1961). The twenty-two boys in the study were all carefully interviewed to determine that none of them knew each other beforehand. Importantly, Sherif and colleagues kept both groups unaware of each other’s existence, arranging for them to arrive at separate times and occupy different areas of the camp. Within each group, the participants quickly bonded and established their own group identities, “The Eagles” and “The Rattlers,” identifying leaders and creating flags decorated with their own group’s name and symbols.

For the next phase of the experiment, the researchers revealed the existence of each group to the other, leading to reactions of anger, territorialism, and verbal abuse between the two. This behavior was further compounded by a series of competitive group activities, such as baseball and tug-of-war, leading the two groups to engage in even more spiteful behavior: The Eagles set fire to The Rattlers’ flag, and The Rattlers retaliated by ransacking The Eagles’ cabin, overturning beds and stealing their belongings. Eventually, the two groups refused to eat together in the same dining hall, and they had to be physically separated to avoid further conflict.

However, in the final phase of the experiment, Sherif and colleagues introduced a dilemma to both groups that could only be solved through mutual cooperation. The researchers told both groups that there was a shortage of drinking water in the camp, supposedly due to “vandals” damaging the water supply. As both groups gathered around the water supply, attempting to find a solution, members from each group offered suggestions and worked together to fix the problem. Since the lack of drinking water affected both groups equally, both were highly motivated to try and resolve the issue. Finally, after 45 minutes, the two groups managed to clear a stuck pipe, allowing fresh water to flow. The researchers concluded that when conflicting groups share a superordinate goal, they are capable of shifting their attitudes and bridging group differences to become cooperative partners. The insights from this study have important implications for group-level cooperation. Since many problems facing the world today, such as climate change and nuclear proliferation, affect individuals of all nations, and are best dealt with through the coordinated efforts of different groups and countries, emphasizing the shared nature of these dilemmas may enable otherwise competing groups to engage in cooperative and collective action.

Culture

Culture can have a powerful effect on people’s beliefs about others and on the ways they interact with them. Might culture also affect a person’s tendency toward cooperation? To answer this question, Joseph Henrich and his colleagues surveyed people from 15 small-scale societies around the world, located in places such as Zimbabwe, Bolivia, and Indonesia. These groups varied widely in the ways they traditionally interacted with their environments: some practiced small-scale agriculture, others foraged for food, and still others were nomadic herders of animals (Henrich et al., 2001).

To measure their tendency toward cooperation, individuals of each society were asked to play the ultimatum game, a task similar in nature to the prisoner’s dilemma. The game has two players: Player A (the “allocator”) is given a sum of money (equal to two days’ wages) and allowed to donate any amount of it to Player B (the “responder”). Player B can then either accept or reject Player A’s offer. If Player B accepts the offer, both players keep their agreed-upon amounts. However, if Player B rejects the offer, then neither player receives anything. In this scenario, the responder can use his/her authority to punish unfair offers, even though it requires giving up his or her own reward. In turn, Player A must be careful to propose an acceptable offer to Player B, while still trying to maximize his/her own outcome in the game.

According to a model of rational economics, a self-interested Player B should always choose to accept any offer, no matter how small or unfair. As a result, Player A should always try to offer the minimum possible amount to Player B, in order to maximize his/her own reward. Instead, the researchers found that people in these 15 societies donated on average 39% of the sum to their partner (Henrich et al., 2001). This number is almost identical to the amount that people of Western cultures donate when playing the ultimatum game (Oosterbeek et al., 2004). These findings suggest that allocators in the game, instead of offering the least possible amount, try to maintain a sense of fairness and “shared rewards” in the game, in part so that their offers will not be rejected by the responder.
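The contrast between the rational-economics prediction and what responders actually do can be sketched in a few lines. This is a minimal illustration of the game's rules as described above; the sum of 100 units and the 25% rejection threshold are hypothetical values chosen for the example, not figures reported by the researchers.

```python
def play_ultimatum(total, offer, responder_accepts):
    """Return (allocator_payoff, responder_payoff) for one round."""
    if not 0 <= offer <= total:
        raise ValueError("offer must be between 0 and total")
    if responder_accepts(offer, total):
        # Accepted: both players keep the agreed-upon amounts.
        return total - offer, offer
    # Rejected: neither player receives anything.
    return 0, 0


def self_interested_responder(offer, total):
    """Accepts any offer, since rejecting never increases one's own payoff."""
    return True


def fairness_minded_responder(offer, total, min_share=0.25):
    """Rejects offers below a hypothetical fairness threshold (25% here)."""
    return offer >= min_share * total


if __name__ == "__main__":
    total = 100  # hypothetical sum to divide
    for offer in (1, 39):
        print("offer", offer,
              "| self-interested:",
              play_ultimatum(total, offer, self_interested_responder),
              "| fairness-minded:",
              play_ultimatum(total, offer, fairness_minded_responder))
```

Against a purely self-interested responder, the allocator does best by offering as little as possible. Once the responder is willing to reject offers that seem unfair, a very low offer leaves both players with nothing, so a more generous offer becomes the safer choice for the allocator.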

Henrich and colleagues (2001) also observed significant variation between cultures in terms of their level of cooperation. Specifically, the researchers found that the extent to which individuals in a culture needed to collaborate with each other to gather resources to survive predicted how likely they were to be cooperative. For example, among the Lamalera people of Indonesia, who survive by hunting whales in groups of a dozen or more individuals, donations in the ultimatum game were extremely high, approximately 58% of the total sum. In contrast, the Machiguenga people of Peru, who are generally economically independent at the family level, donated much less on average, about 26% of the total sum. The interdependence of people for survival, therefore, seems to be a key component of why people decide to cooperate with others.

Though the various survival strategies of small-scale societies might seem quite remote from your own experiences, take a moment to think about how your life is dependent on collaboration with others. Very few of us in industrialized societies live in houses we build ourselves, wear clothes we make ourselves, or eat food we grow ourselves. Instead, we depend on others to provide specialized resources and products, such as food, clothing, and shelter, that are essential to our survival. Studies show that Americans give about 40% of their sum in the ultimatum game, which is less than the Lamalera give but on par with most of the small-scale societies sampled by Henrich and colleagues (Oosterbeek et al., 2004). While living in an industrialized society might not require us to hunt in groups like the Lamalera do, we still depend on others to supply the resources we need to survive.

Conclusion

Cooperation is an important part of our everyday lives. Practically every feature of modern social life, from the taxes we pay to the street signs we follow, involves multiple parties working together toward shared goals. There are many factors that help determine whether people will successfully cooperate, from their culture of origin and the trust they place in their partners, to the degree to which they empathize with others. Although cooperation can sometimes be difficult to achieve, certain diplomatic practices, such as emphasizing shared goals and engaging in open communication, can promote teamwork and even break down rivalries. Though choosing not to cooperate can sometimes achieve a larger reward for an individual in the short term, cooperation is often necessary to ensure that the group as a whole, including all of its members, achieves the optimal outcome.

Take a Quiz

An optional quiz is available to accompany this chapter here: https://nobaproject.com/modules/cooperation

Discussion Questions

- Which groups do you identify with? Consider sports teams, home towns, and universities. How does your identification with these groups make you feel about other members of these groups? What about members of competing groups?

- Thinking of all the accomplishments of humanity throughout history, which do you believe required the greatest amounts of cooperation? Why?

- In your experience working on group projects (such as group projects for a class), what have you noticed regarding the themes presented in this module (e.g., competition, free riding, cooperation, trust)? How could you use the material you have just learned to make group projects more effective?

Vocabulary

- Altruism

- A desire to improve the welfare of another person, at a potential cost to the self and without any expectation of reward.

- Common-pool resource

- A collective product or service that is freely available to all individuals of a society, but is vulnerable to overuse and degradation.

- Commons dilemma game

- A game in which members of a group must balance their desire for personal gain against the deterioration and possible collapse of a resource.

- Cooperation

- The coordination of multiple partners toward a common goal that will benefit everyone involved.

- Decomposed games

- A task in which an individual chooses from multiple allocations of resources to distribute between him- or herself and another person.

- Empathy

- The ability to vicariously experience the emotions of another person.

- Free rider problem

- A situation in which one or more individuals benefit from a common-pool resource without paying their share of the cost.

- Interindividual-intergroup discontinuity

- The tendency for relations between groups to be less cooperative than relations between individuals.

- Outgroup

- A social category or group with which an individual does not identify.

- Prisoner’s dilemma

- A classic paradox in which two individuals must independently choose between defection (maximizing reward to the self) and cooperation (maximizing reward to the group).

- Rational self-interest

- The principle that people will make logical decisions based on maximizing their own gains and benefits.

- Social identity

- A person’s sense of who they are, based on their group membership(s).

- Social value orientation (SVO)

- An assessment of how an individual prefers to allocate resources between him- or herself and another person.

- State of vulnerability

- When a person places him- or herself in a position in which he or she might be exploited or harmed. This is often done out of trust that others will not exploit the vulnerability.

- Ultimatum game

- An economic game in which a proposer (Player A) can offer a subset of resources to a responder (Player B), who can then either accept or reject the given proposal.

Outside Resources

- Article: Weber, J. M., Kopelman, S., & Messick, D. M. (2004). A conceptual review of decision making in social dilemmas: Applying a logic of appropriateness. Personality and Social Psychology Review, 8(3), 281-307.

- http://psr.sagepub.com/content/8/3/281.abstract

- Book: Harvey, O. J., White, B. J., Hood, W. R., & Sherif, C. W. (1961). Intergroup conflict and cooperation: The Robbers Cave experiment. Norman, OK: University Book Exchange.

- http://psychclassics.yorku.ca/Sherif/index.htm

- Experiment: Intergroup Conflict and Cooperation: The Robbers Cave Experiment – An online version of Sherif, Harvey, White, Hood, and Sherif’s (1954/1961) study, which includes photos.

- http://psychclassics.yorku.ca/Sherif/

- Video: A clip from a reality TV show, “Golden Balls,” that pits players against each other in a high-stakes prisoner’s dilemma situation.

- https://youtube.com/watch?v=p3Uos2fzIJ0

- Video: Describes recent research showing how chimpanzees naturally cooperate with each other to accomplish tasks.

- https://youtube.com/watch?v=fME0_RsEXiI

- Video: The Empathic Civilization – A 10 minute, 39 second animated talk that explores the topic of empathy.

- https://youtube.com/watch?v=xjarMIXA2q8

- Video: Tragedy of the Commons, Part 1 – What happens when many people seek to share the same, limited resource?

- https://youtube.com/watch?v=KZDjPnzoge0

- Video: Tragedy of the Commons, Part 2 – This video (which is 1 minute, 27 seconds) discusses how cooperation can be a solution to the commons dilemma.

- https://youtube.com/watch?v=IVwk6VIxBXg

- Video: Understanding the Prisoners’ Dilemma.

- https://youtube.com/watch?v=t9Lo2fgxWHw

- Video: Why Some People are More Altruistic Than Others – A 12 minute, 21 second TED talk about altruism. A psychologist, Abigail Marsh, discusses the research about altruism.

- https://youtube.com/watch?v=m4KbUSRfnR4

- Web: Take an online test to determine your Social Values Orientation (SVO).

- http://vlab.ethz.ch/svo/index-normal.html

- Web: What is Social Identity? – A brief explanation of social identity, which includes specific examples.

- http://people.howstuffworks.com/what-is-social-identity.htm

References

- Allport, G. W. (1954). The nature of prejudice. Cambridge, MA: Addison-Wesley.

- Batson, C. D., & Ahmad, N. (2001). Empathy-induced altruism in a prisoner’s dilemma II: What if the target of empathy has defected? European Journal of Social Psychology, 31(1), 25-36.

- Batson, C. D., & Moran, T. (1999). Empathy-induced altruism in a prisoner’s dilemma. European Journal of Social Psychology, 29(7), 909-924.

- Batson, C. D., Batson, J. G., Todd, R. M., Brummett, B. H., Shaw, L. L., & Aldeguer, C. M. (1995). Empathy and the collective good: Caring for one of the others in a social dilemma. Journal of Personality and Social Psychology, 68(4), 619.

- Bigler, R. S., Brown, C. S., & Markell, M. (2001). When groups are not created equal: Effects of group status on the formation of intergroup attitudes in children. Child Development, 72(4), 1151-1162.

- Brewer, M. B., & Kramer, R. M. (1986). Choice behavior in social dilemmas: Effects of social identity, group size, and decision framing. Journal of Personality and Social Psychology, 50(3), 543-549.

- Chaudhuri, A., Sopher, B., & Strand, P. (2002). Cooperation in social dilemmas, trust and reciprocity. Journal of Economic Psychology, 23(2), 231-249.

- Cote, S., & Miners, C. T. (2006). Emotional intelligence, cognitive intelligence, and job performance. Administrative Science Quarterly, 51(1), 1-28.

- Cropanzano, R., & Byrne, Z. S. (2000). Workplace justice and the dilemma of organizational citizenship. In M. Van Vugt, M. Snyder, T. Tyler, & A. Biel (Eds.), Cooperation in Modern Society: Promoting the Welfare of Communities, States and Organizations (pp. 142-161). London: Routledge.

- Davis, M. H. (1994). Empathy: A social psychological approach. Westview Press.

- Dawes, R. M., & Kagan, J. (1988). Rational choice in an uncertain world. Harcourt Brace Jovanovich.

- Dawes, R. M., McTavish, J., & Shaklee, H. (1977). Behavior, communication, and assumptions about other people’s behavior in a commons dilemma situation. Journal of Personality and Social Psychology, 35(1), 1.

- De Waal, F. B. M., & Lanting, F. (1997). Bonobo: The forgotten ape. University of California Press.

- Goetz, J. L., Keltner, D., & Simon-Thomas, E. (2010). Compassion: An evolutionary analysis and empirical review. Psychological Bulletin, 136(3), 351-374.

- Grossman, S. J., & Hart, O. D. (1980). Takeover bids, the free-rider problem, and the theory of the corporation. The Bell Journal of Economics, 11(1), 42-64.

- Henrich, J., Boyd, R., Bowles, S., Camerer, C., Fehr, E., Gintis, H., & McElreath, R. (2001). In search of homo economicus: Behavioral experiments in 15 small-scale societies. The American Economic Review, 91(2), 73-78.

- Insko, C. A., Kirchner, J. L., Pinter, B., Efaw, J., & Wildschut, T. (2005). Interindividual-intergroup discontinuity as a function of trust and categorization: The paradox of expected cooperation. Journal of Personality and Social Psychology, 88(2), 365-385.

- Insko, C. A., Pinkley, R. L., Hoyle, R. H., Dalton, B., Hong, G., Slim, R. M., … & Thibaut, J. (1987). Individual versus group discontinuity: The role of intergroup contact. Journal of Experimental Social Psychology, 23(3), 250-267.

- Keltner, D., Kogan, A., Piff, P. K., & Saturn, S. R. (2014). The sociocultural appraisals, values, and emotions (SAVE) framework of prosociality: Core processes from gene to meme. Annual Review of Psychology, 65, 425-460.

- Kerr, N. L., & Kaufman-Gilliland, C. M. (1994). Communication, commitment, and cooperation in social dilemma. Journal of Personality and Social Psychology, 66(3), 513-529.

- Kerr, N. L., Garst, J., Lewandowski, D. A., & Harris, S. E. (1997). That still, small voice: Commitment to cooperate as an internalized versus a social norm. Personality and Social Psychology Bulletin, 23(12), 1300-1311.

- Klandermans, B. (2002). How group identification helps to overcome the dilemma of collective action. American Behavioral Scientist, 45(5), 887-900.

- Kramer, R. M. (1999). Trust and distrust in organizations: Emerging perspectives, enduring questions. Annual Review of Psychology, 50(1), 569-598.

- Kramer, R. M., McClintock, C. G., & Messick, D. M. (1986). Social values and cooperative response to a simulated resource conservation crisis. Journal of Personality, 54(3), 576-582.

- Langergraber, K. E., Mitani, J. C., & Vigilant, L. (2007). The limited impact of kinship on cooperation in wild chimpanzees. Proceedings of the National Academy of Sciences, 104(19), 7786-7790.

- Locksley, A., Ortiz, V., & Hepburn, C. (1980). Social categorization and discriminatory behavior: Extinguishing the minimal intergroup discrimination effect. Journal of Personality and Social Psychology, 39(5), 773-783.

- Marcus, R. F., Telleen, S., & Roke, E. J. (1979). Relation between cooperation and empathy in young children. Developmental Psychology, 15(3), 346.

- McClintock, C. G., & Allison, S. T. (1989). Social value orientation and helping behavior. Journal of Applied Social Psychology, 19(4), 353-362.

- Messick, D. M., & McClintock, C. G. (1968). Motivational bases of choice in experimental games. Journal of Experimental Social Psychology, 4(1), 1-25.

- Milinski, M., Semmann, D., Bakker, T. C., & Krambeck, H. J. (2001). Cooperation through indirect reciprocity: Image scoring or standing strategy? Proceedings of the Royal Society of London B: Biological Sciences, 268(1484), 2495-2501.

- Mithen, S. (1999). The prehistory of the mind: The cognitive origins of art, religion and science. Thames & Hudson Publishers.

- Oosterbeek, H., Sloof, R., & Van De Kuilen, G. (2004). Cultural differences in ultimatum game experiments: Evidence from a meta-analysis. Experimental Economics, 7(2), 171-188.

- Parks, C. D., Henager, R. F., & Scamahorn, S. D. (1996). Trust and reactions to messages of intent in social dilemmas. Journal of Conflict Resolution, 40(1), 134-151.

- Pruitt, D. G., & Kimmel, M. J. (1977). Twenty years of experimental gaming: Critique, synthesis, and suggestions for the future. Annual Review of Psychology, 28, 363-392.

- Roch, S. G., & Samuelson, C. D. (1997). Effects of environmental uncertainty and social value orientation in resource dilemmas. Organizational Behavior and Human Decision Processes, 70(3), 221-235.

- Schopler, J., & Insko, C. A. (1999). The reduction of the interindividual-intergroup discontinuity effect: The role of future consequences. In M. Foddy, M. Smithson, S. Schneider, & M. Hogg (Eds.), Resolving social dilemmas: Dynamic, structural, and intergroup aspects (pp. 281-294). Philadelphia: Psychology Press.

- Sherif, M., Harvey, O. J., White, B. J., Hood, W. R., & Sherif, C. W. (1961). Intergroup conflict and cooperation: The Robbers Cave experiment. Norman, OK: University Book Exchange.

- Tajfel, H., & Turner, J. C. (1986). The social identity theory of intergroup behaviour. In S. Worchel & W. G. Austin (Eds.), Psychology of intergroup relations (pp. 7–24). Chicago, IL: Nelson-Hall.

- Tajfel, H., & Turner, J. C. (1979). An integrative theory of intergroup conflict. In W. G. Austin & S. Worchel (Eds.), The social psychology of intergroup relations (pp. 33–47). Monterey, CA: Brooks/Cole.

- Tajfel, H., Billig, M. G., Bundy, R. P., & Flament, C. (1971). Social categorization and intergroup behaviour. European Journal of Social Psychology, 1(2), 149-178.

- Van Lange, P. A., Bekkers, R., Schuyt, T. N., & Van Vugt, M. (2007). From games to giving: Social value orientation predicts donations to noble causes. Basic and Applied Social Psychology, 29(4), 375-384.

- Van Vugt, M., Biel, A., Snyder, M., & Tyler, T. (2000). Perspectives on cooperation in modern society: Helping the self, the community, and society. In M. Van Vugt, M. Snyder, T. Tyler, & A. Biel (Eds.), Cooperation in Modern Society: Promoting the Welfare of Communities, States and Organizations (pp. 3-24). London: Routledge.

- Van Vugt, M., & Hart, C. M. (2004). Social identity as social glue: The origins of group loyalty. Journal of Personality and Social Psychology, 86(4), 585-598.

- Van Vugt, M., Meertens, R. M., & Van Lange, P. A. (1995). Car versus public transportation? The role of social value orientations in a real-life social dilemma. Journal of Applied Social Psychology, 25(3), 258-278.

- Van Vugt, M., Van Lange, P. A., & Meertens, R. M. (1996). Commuting by car or public transportation? A social dilemma analysis of travel mode judgements. European Journal of Social Psychology, 26(3), 373-395.

- Warneken, F., & Tomasello, M. (2007). Helping and cooperation at 14 months of age. Infancy, 11(3), 271-294.

- Warneken, F., Chen, F., & Tomasello, M. (2006). Cooperative activities in young children and chimpanzees. Child Development, 77(3), 640-663.

- Wedekind, C., & Milinski, M. (2000). Cooperation through image scoring in humans. Science, 288(5467), 850-852.

- Wildschut, T., Pinter, B., Vevea, J. L., Insko, C. A., & Schopler, J. (2003). Beyond the group mind: A quantitative review of the interindividual-intergroup discontinuity effect. Psychological Bulletin, 129(5), 698-722.

Authors

Jake P. Moskowitz

Jake P. Moskowitz is a current doctoral student in the Psychology & Social Behavior program at the University of California, Irvine. His research focuses on the nature of prosocial behavior, and how factors such as identity, ideology, and morality impact human prosocial tendencies.

Paul K. Piff

Paul K. Piff, Ph.D. (UC Berkeley), is an Assistant Professor of Psychology and Social Behavior at the University of California, Irvine, where he also directs the Social Inequality and Cohesion Lab. Dr. Piff’s research examines the origins of human kindness and cooperation, and the social consequences of economic inequality.