Little Rock schools closed rather than allow integration. This 1958 photograph shows an African American high school girl watching school lessons on television. Library of Congress (LC-U9-1525F-28).

I. Introduction

In 1958, Harvard economist and public intellectual John Kenneth Galbraith published The Affluent Society. Galbraith’s celebrated book examined America’s new post–World War II consumer economy and political culture. While noting the unparalleled riches of American economic growth, it criticized the underlying structures of an economy dedicated only to increasing production and the consumption of goods. Galbraith argued that the U.S. economy, based on an almost hedonistic consumption of luxury products, would inevitably lead to economic inequality as private-sector interests enriched themselves at the expense of the American public. Galbraith warned that an economy where “wants are increasingly created by the process by which they are satisfied” was unsound, unsustainable, and, ultimately, immoral. “The Affluent Society,” he said, was anything but.1

While economists and scholars debate the merits of Galbraith’s warnings and predictions, his analysis was so insightful that the title of his book has come to serve as a ready label for postwar American society. In the two decades after the end of World War II, the American economy witnessed massive and sustained growth that reshaped American culture through the abundance of consumer goods. Standards of living—across all income levels—climbed to unparalleled heights and economic inequality plummeted.2

And yet, as Galbraith noted, the Affluent Society had fundamental flaws. The new consumer economy that lifted millions of Americans into its burgeoning middle class also reproduced existing inequalities. Women struggled to claim equal rights as full participants in American society. The poor struggled to win access to good schools, good healthcare, and good jobs. The same suburbs that gave middle-class Americans new space left cities withering in spirals of poverty and crime and caused irreversible ecological disruptions. The Jim Crow South tenaciously defended segregation, and Black Americans and other minorities suffered discrimination all across the country.

The contradictions of the Affluent Society defined the decade: unrivaled prosperity alongside persistent poverty, life-changing technological innovation alongside social and environmental destruction, expanded opportunity alongside entrenched discrimination, and new liberating lifestyles alongside a stifling conformity.

II. The Rise of the Suburbs

Levittown in the early 1950s. Flickr/Creative Commons.

The seeds of a suburban nation were planted in New Deal government programs. At the height of the Great Depression, in 1932, some 250,000 households lost their property to foreclosure. A year later, half of all U.S. mortgages were in default. The foreclosure rate stood at more than one thousand per day. In response, FDR’s New Deal created the Home Owners’ Loan Corporation (HOLC), which began purchasing and refinancing existing mortgages at risk of default. The HOLC introduced the amortized mortgage, allowing borrowers to pay back interest and principal regularly over fifteen years instead of the then standard five-year mortgage that carried large balloon payments at the end of the contract. The HOLC eventually owned nearly one of every five mortgages in America. Though homeowners paid more for their homes under this new system, home ownership was opened to the multitudes who could now gain residential stability, lower monthly mortgage payments, and accrue wealth as property values rose over time.3

Additionally, the Federal Housing Administration (FHA), another New Deal organization, increased access to home ownership by insuring mortgages and protecting lenders from financial loss in the event of a default. Lenders, however, had to agree to offer low rates and terms of up to twenty or thirty years. Even more consumers could afford homes. Though only slightly more than a third of homes had an FHA-backed mortgage by 1964, FHA loans had a ripple effect, with private lenders granting more and more home loans even to non-FHA-backed borrowers. Government programs and subsidies like the HOLC and the FHA fueled the growth of home ownership and the rise of the suburbs.

Government spending during World War II pushed the United States out of the Depression and into an economic boom that would be sustained after the war by continued government spending. Government expenditures provided loans to veterans, subsidized corporate research and development, and built the interstate highway system. In the decades after World War II, business boomed, unionization peaked, wages rose, and sustained growth buoyed a new consumer economy. The Servicemen’s Readjustment Act (popularly known as the G.I. Bill), passed in 1944, offered low-interest home loans, a stipend to attend college, loans to start a business, and unemployment benefits.

The rapid growth of home ownership and the rise of suburban communities helped drive the postwar economic boom. Builders created sprawling neighborhoods of single-family homes on the outskirts of American cities. William Levitt built the first Levittown, the prototypical suburban community, on Long Island, New York, in 1946. Purchasing large acreage, subdividing lots, and contracting crews to build countless homes at economies of scale, Levitt offered affordable suburban housing to veterans and their families. Levitt became the prophet of the new suburbs, and his model of large-scale suburban development was duplicated by developers across the country. The country’s suburban share of the population rose from 19.5 percent in 1940 to 30.7 percent by 1960. Home ownership rates rose from 44 percent in 1940 to almost 62 percent in 1960. Between 1940 and 1950, suburban communities with more than ten thousand people grew 22.1 percent, and planned communities grew at an astonishing rate of 126.1 percent.4 As historian Lizabeth Cohen notes, these new suburbs “mushroomed in territorial size and the populations they harbored.”5 Between 1950 and 1970, America’s suburban population nearly doubled to seventy-four million. Eighty-three percent of all population growth occurred in suburban places.6

The postwar construction boom fed into countless industries. As manufacturers converted from war materials back to consumer goods, and as the suburbs developed, appliance and automobile sales rose dramatically. Flush with rising wages and wartime savings, homeowners also used newly created installment plans to buy new consumer goods at once instead of saving for years to make major purchases. Credit cards, first issued in 1950, further increased access to credit. No longer stymied by the Depression or wartime restrictions, consumers bought countless washers, dryers, refrigerators, freezers, and, suddenly, televisions. The percentage of American households that owned at least one television increased from 12 percent in 1950 to more than 87 percent in 1960. This new suburban economy also led to increased demand for automobiles. The percentage of American families owning cars increased from 54 percent in 1948 to 74 percent in 1959. Motor fuel consumption rose from some twenty-two billion gallons in 1945 to around fifty-nine billion gallons in 1958.7

On the surface, the postwar economic boom turned America into a land of abundance. For advantaged buyers, loans had never been easier to obtain, consumer goods had never been more accessible, single-family homes had never been so cheap, and well-paying jobs had never been more abundant. “If you had a college diploma, a dark suit, and anything between the ears,” a businessman later recalled, “it was like an escalator; you just stood there and you moved up.”8 But the escalator did not serve everyone. Beneath aggregate numbers, racial disparity, sexual discrimination, and economic inequality persisted, undermining many of the assumptions of an Affluent Society.

In 1939, real estate appraisers arrived in sunny Pasadena, California. Armed with elaborate questionnaires to evaluate the city’s building conditions, the appraisers were well versed in the policies of the HOLC. In one neighborhood, most structures were rated in “fair” repair, and appraisers noted a lack of “construction hazards or flood threats.” However, they concluded that the area “is detrimentally affected by 10 owner occupant Negro families.” While “the Negroes are said to be of the better class,” the appraisers concluded, “it seems inevitable that ownership and property values will drift to lower levels.”9

Wealth created by the booming economy filtered through social structures with built-in privileges and prejudices. Just when many middle- and working-class white American families began their journey of upward mobility by moving to the suburbs with the help of government programs such as the FHA and the G.I. Bill, many African Americans and other racial minorities found themselves systematically shut out.

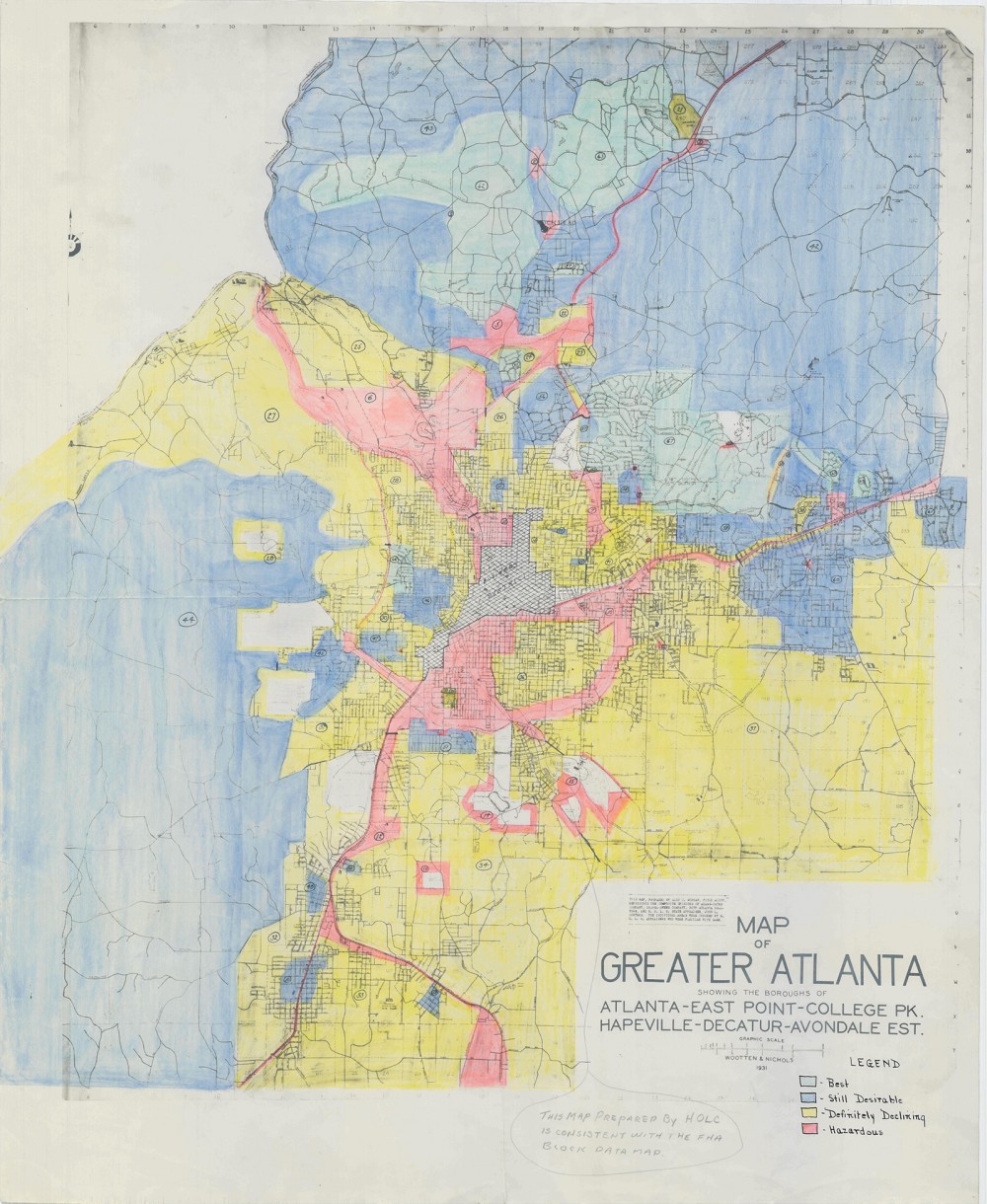

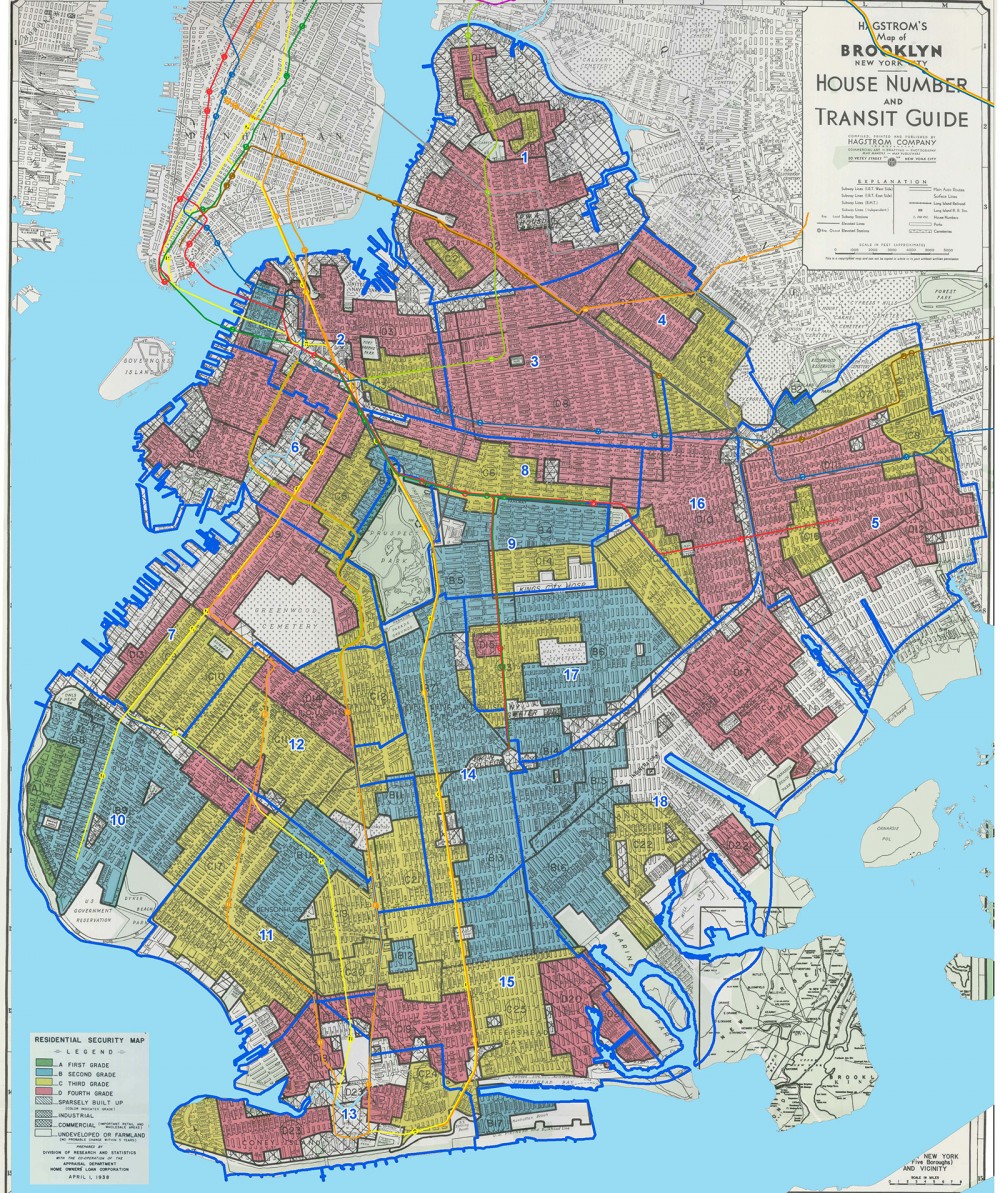

A look at the relationship between federal organizations such as the HOLC, the FHA, and private banks, lenders, and real estate agents tells the story of standardized policies that produced a segregated housing market. At the core of HOLC appraisal techniques, which reflected the existing practices of private real estate agents, was the pernicious insistence that mixed-race and minority-dominated neighborhoods were credit risks. In partnership with local lenders and real estate agents, the HOLC created Residential Security Maps to identify high- and low-risk-lending areas. People familiar with the local real estate market filled out uniform surveys on each neighborhood. Relying on this information, the HOLC assigned every neighborhood a letter grade from A to D and a corresponding color code. The least secure, highest-risk neighborhoods for loans received a D grade and the color red. Banks limited loans in such “redlined” areas.10

Black communities in cities such as Detroit, Chicago, Brooklyn, and Atlanta (mapped here) experienced redlining, the process by which banks and other organizations demarcated minority neighborhoods on a map with a red line. Doing so made visible the areas they believed were unfit for their services, directly denying Black residents loans, but also, indirectly, housing, groceries, and other necessities of modern life. National Archives.

1938 Brooklyn redlining map. National Archives.

Phrases like subversive racial elements and racial hazards pervade the redlined-area description files of surveyors and HOLC officials. Los Angeles’s Echo Park neighborhood, for instance, had concentrations of Japanese and African Americans and a “sprinkling of Russians and Mexicans.” The HOLC security map and survey noted that the neighborhood’s “adverse racial influences which are noticeably increasing inevitably presage lower values, rentals and a rapid decrease in residential desirability.”11

While the HOLC was a fairly short-lived New Deal agency, the influence of its security maps lived on in the FHA and Veterans Administration (VA), the latter of which dispensed G.I. Bill–backed mortgages. Both of these government organizations, which reinforced the standards followed by private lenders, refused to back bank mortgages in “redlined” neighborhoods. On the one hand, FHA- and VA-backed loans were an enormous boon to those who qualified for them. Millions of Americans received mortgages that they otherwise would not have qualified for. But FHA-backed mortgages were not available to all. Racial minorities could not get loans for property improvements in their own neighborhoods and were denied mortgages to purchase property in other areas for fear that their presence would extend the red line into a new community. Levittown, the poster child of the new suburban America, only allowed whites to purchase homes. Thus, FHA policies and private developers increased home ownership and stability for white Americans while simultaneously creating and enforcing racial segregation.

The exclusionary structures of the postwar economy prompted protest from the African Americans and other minorities they shut out. Fair housing, equal employment, consumer access, and educational opportunity, for instance, all emerged as priorities of a brewing civil rights movement. In 1948, the U.S. Supreme Court sided with African American plaintiffs and, in Shelley v. Kraemer, declared racially restrictive neighborhood housing covenants (property deed restrictions barring sales to racial minorities) legally unenforceable. Discrimination and segregation continued, however, and activists kept pushing for fair housing practices.

During the 1950s and early 1960s many Americans retreated to the suburbs to enjoy the new consumer economy and search for some normalcy and security after the instability of depression and war. But many could not. It was both the limits and opportunities of housing, then, that shaped the contours of postwar American society. Moreover, the postwar suburban boom not only exacerbated racial and class inequalities but also precipitated a major environmental crisis.

The introduction of mass production techniques in housing wrought ecological destruction. Developers sought cheaper land ever farther away from urban cores, wreaking havoc on particularly sensitive lands such as wetlands, hills, and floodplains. “A territory roughly the size of Rhode Island,” historian Adam Rome wrote, “was bulldozed for urban development” every year.12 Innovative construction strategies, government incentives, high consumer demand, and low energy prices all pushed builders away from more sustainable, energy-conserving building projects. Typical postwar tract houses were difficult to cool in the summer and heat in the winter. Many were equipped with malfunctioning septic tanks that polluted local groundwater. Such destructiveness did not go unnoticed. By the time Rachel Carson published Silent Spring (1962), a forceful denunciation of the excessive use of pesticides such as DDT in agricultural and domestic settings, many Americans were already primed to receive her message. Stories of kitchen faucets spouting detergent foams and children playing in effluents brought the point home: comfort and convenience did not have to come at such cost. And yet most of the Americans who joined the early environmentalist crusades of the 1950s and 1960s rarely questioned the foundations of the suburban ideal. Americans increasingly relied upon automobiles and idealized the single-family home, blunting any major push to shift prevailing patterns of land and energy use.13

III. Race and Education

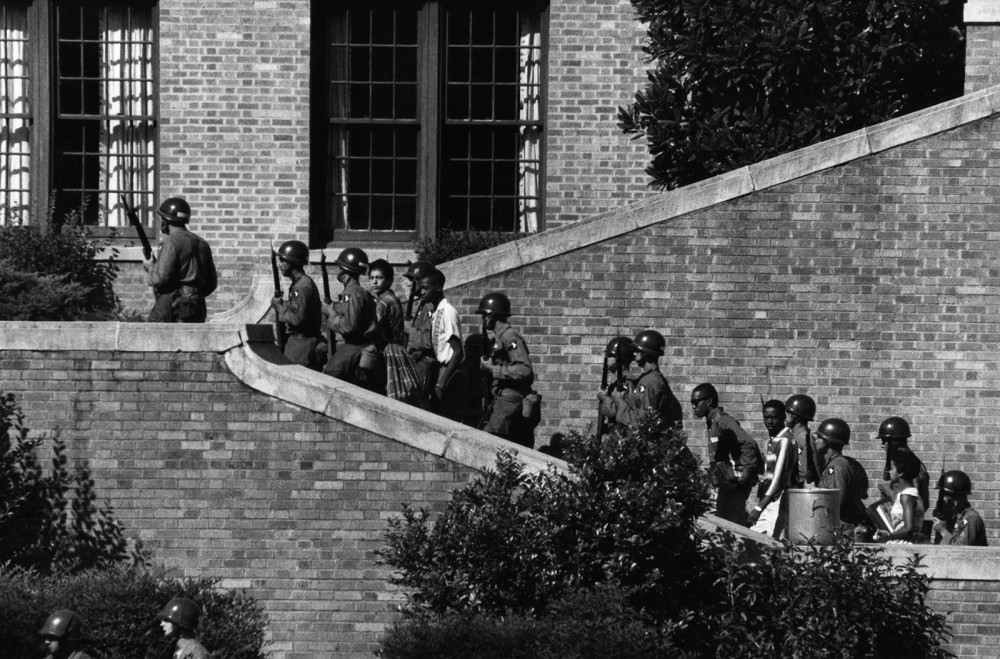

School desegregation was a tense experience for all involved, but none more so than the African American students who integrated white schools. The Little Rock Nine were the first to do so in Arkansas. Their escorts, the 101st Airborne Division of the U.S. Army, protected students who took that first step in 1957. Wikimedia.

Older battles over racial exclusion also confronted postwar American society. One long-simmering struggle targeted segregated schooling. In 1896, in Plessy v. Ferguson, the Supreme Court declared the principle of “separate but equal” constitutional. Segregated schooling, however, was rarely “equal”: in practice, Black Americans, particularly in the South, received fewer funds, attended inadequate facilities, and studied with substandard materials. African Americans’ battle against educational inequality stretched across half a century before the Supreme Court again took up the merits of “separate but equal.”

On May 17, 1954, after two years of argument, re-argument, and deliberation, Chief Justice Earl Warren announced the Supreme Court’s decision on segregated schooling in Brown v. Board of Education (1954). The court found by a unanimous 9–0 vote that racial segregation violated the Equal Protection Clause of the Fourteenth Amendment. The court’s decision declared, “Separate educational facilities are inherently unequal.” “Separate but equal” was made unconstitutional.14

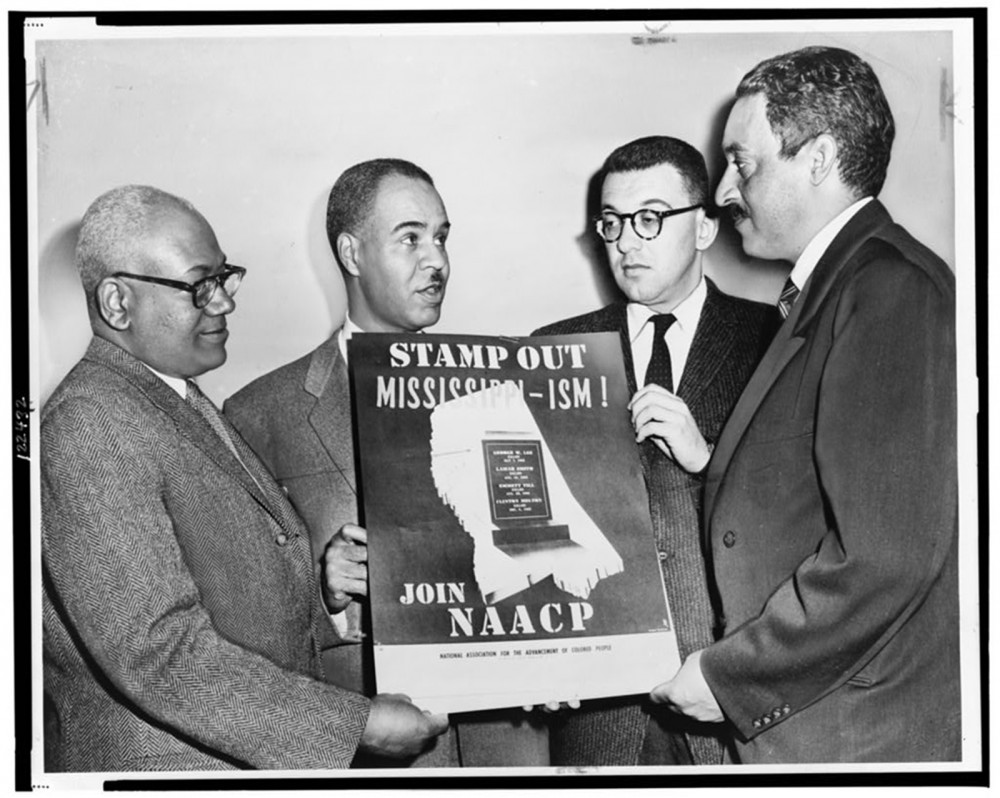

Decades of African American–led litigation, local agitation against racial inequality, and liberal Supreme Court justices made Brown possible. In the early 1930s, the NAACP began a concerted effort to erode the legal underpinnings of segregation in the American South. Legal, or de jure, segregation subjected racial minorities to discriminatory laws and policies. Law and custom in the South hardened antiblack restrictions. But through a series of carefully chosen and contested court cases concerning education, disfranchisement, and jury selection, NAACP lawyers such as Charles Hamilton Houston, Robert L. Carter, and future Supreme Court Justice Thurgood Marshall undermined Jim Crow’s constitutional underpinnings. These attorneys initially sought to demonstrate that states systematically failed to provide African American students “equal” resources and facilities, and thus failed to live up to Plessy. By the late 1940s activists began to more forcefully challenge the assumption that “separate” was constitutional at all.

The NAACP was a key organization in the fight to end legalized racial discrimination. In this 1956 photograph, NAACP leaders, including Thurgood Marshall, who would become the first African American Supreme Court Justice, hold a poster decrying racial bias in Mississippi. Library of Congress.

Though remembered as just one lawsuit, Brown v. Board of Education consolidated five separate cases that had originated in four states and the District of Columbia: Briggs v. Elliott (South Carolina), Davis v. County School Board of Prince Edward County (Virginia), Gebhart v. Belton (Delaware), Bolling v. Sharpe (Washington, D.C.), and Brown v. Board of Education (Kansas). Working with local activists already involved in desegregation fights, the NAACP purposely chose cases with a diverse set of local backgrounds to show that segregation was not just an issue in the Deep South, and that a sweeping judgment on the fundamental constitutionality of Plessy was needed.

Briggs v. Elliott, the first case accepted by the NAACP, illustrated the plight of segregated Black schools. Briggs originated in rural Clarendon County, South Carolina, where taxpayers in 1950 spent $179 to educate each white student and $43 for each Black student. The district’s twelve white schools were cumulatively worth $673,850; the value of its sixty-one Black schools (mostly dilapidated, overcrowded shacks) was $194,575.15 While Briggs underscored the South’s failure to follow Plessy, the Brown suit focused less on material disparities between Black and white schools (which were significantly less than in places like Clarendon County) and more on the social and spiritual degradation that accompanied legal segregation. This case cut to the basic question of whether “separate” was itself inherently unequal. The NAACP said the two notions were incompatible. As one witness before the U.S. District Court of Kansas said, “The entire colored race is craving light, and the only way to reach the light is to start [black and white] children together in their infancy and they come up together.”16

To make its case, the NAACP marshaled historical and social scientific evidence. The Court found the historical evidence inconclusive and drew its ruling more heavily from the NAACP’s argument that segregation psychologically damaged Black children. To make this argument, association lawyers relied on social scientific evidence, such as the famous doll experiments of Kenneth and Mamie Clark. The Clarks demonstrated that while young white girls would naturally choose to play with white dolls, young Black girls would, too. The Clarks argued that Black children’s aesthetic and moral preference for white dolls demonstrated the pernicious effects and self-loathing produced by segregation.

Identifying and denouncing injustice, though, is different from rectifying it. Though Brown repudiated Plessy, the Court’s orders did not extend to segregation in places other than public schools and, even then, to preserve a unanimous decision for such an historically important case, the justices set aside the divisive yet essential question of enforcement. Their infamously ambiguous order in 1955 (what came to be known as Brown II) that school districts desegregate “with all deliberate speed” was so vague and ineffectual that it left the actual business of desegregation in the hands of those who opposed it.

In 1959, photographer John Bledsoe captured this image of the crowd on the steps of the Arkansas state capitol building protesting the federally mandated integration of Little Rock’s Central High School. This image shows how worries about desegregation were bound up with other concerns, such as the reach of communism and government power. Library of Congress.

In most of the South, as well as the rest of the country, school integration did not occur on a wide scale until well after Brown. Only with the 1964 Civil Rights Act did the federal government finally implement some enforcement of the Brown decision by threatening to withhold funding from recalcitrant school districts, but even then southern districts found loopholes. Court decisions such as Green v. New Kent County (1968) and Alexander v. Holmes (1969) finally closed some of those loopholes, such as “freedom of choice” plans, to compel some measure of actual integration.

When Brown finally was enforced in the South, the quantitative impact was staggering. In 1968, fourteen years after Brown, some 80 percent of school-age Black southerners remained in schools that were 90 to 100 percent nonwhite. By 1972, though, just 25 percent were in such schools, and 55 percent attended schools in which nonwhite students were a minority. By many measures, the public schools of the South became, ironically, the most integrated in the nation.17

As a landmark moment in American history, Brown’s significance perhaps lies less in immediate tangible changes—which were slow, partial, and inseparable from a much longer chain of events—than in the idealism it expressed and the momentum it created. The nation’s highest court had attacked one of the fundamental supports of Jim Crow segregation and offered constitutional cover for the creation of one of the greatest social movements in American history.

IV. Civil Rights in an Affluent Society

This segregated drinking fountain was located on the grounds of the Halifax County courthouse in North Carolina. Photograph, April 1938. Wikimedia.

Education was but one aspect of the nation’s Jim Crow machinery. African Americans had been fighting against a variety of racist policies, cultures, and beliefs in all aspects of American life. And while the struggle for Black inclusion had few victories before World War II, the war and the Double V campaign for victory against fascism abroad and racism at home, as well as the postwar economic boom, led to rising expectations for many African Americans. When persistent racism and racial segregation undercut the promise of economic and social mobility, African Americans began mobilizing on an unprecedented scale against the various discriminatory social and legal structures.

While many of the civil rights movement’s most memorable and important moments, such as the sit-ins, the Freedom Rides, and especially the March on Washington, occurred in the 1960s, the 1950s were a significant decade in the sometimes tragic, sometimes triumphant march of civil rights in the United States. In 1952, years before Rosa Parks’s iconic confrontation on a Montgomery city bus, an African American woman named Sarah Keys publicly challenged segregated public transportation. Keys, then serving in the Women’s Army Corps, traveled from her army base in New Jersey back to North Carolina to visit her family. When the bus stopped in North Carolina, the driver asked her to give up her seat for a white customer. Her refusal landed her in jail and led to a landmark 1955 decision, Sarah Keys v. Carolina Coach Company, in which the Interstate Commerce Commission ruled that “separate but equal” in interstate bus travel violated the Interstate Commerce Act. Poorly enforced, the ruling nevertheless gave legal cover to the Freedom Riders years later and motivated further assaults against Jim Crow.

But if some events encouraged civil rights workers with the promise of progress, others were so savage they convinced activists that they could do nothing but resist. In the summer of 1955, two white men in Mississippi kidnapped and brutally murdered fourteen-year-old Emmett Till. Till, visiting from Chicago and perhaps unfamiliar with the “etiquette” of Jim Crow, allegedly whistled at a white woman named Carolyn Bryant. Her husband, Roy Bryant, and another man, J. W. Milam, abducted Till from his relatives’ home, beat him, mutilated him, shot him, and threw his body in the Tallahatchie River. Emmett’s mother held an open-casket funeral so that Till’s disfigured body could make national news. The men were brought to trial. The evidence was damning, but an all-white jury found the two not guilty. Mere months after the decision, the two boasted of their crime, in all of its brutal detail, in Look magazine. “They ain’t gonna go to school with my kids,” Milam said. They wanted “to make an example of [Till]—just so everybody can know how me and my folks stand.”18 The Till case became an indelible memory for the young Black men and women soon to propel the civil rights movement forward.

On December 1, 1955, three months after Till’s death and six days after the Keys v. Carolina Coach Company decision, Rosa Parks refused to surrender her seat on a Montgomery city bus and was arrested. Montgomery’s public transportation system had longstanding rules requiring African American passengers to sit in the back of the bus and to give up their seats to white passengers if the buses filled. Parks was not the first to protest the policy by staying seated, but she was the first around whom Montgomery activists rallied.

Activists sprang into action. Jo Ann Robinson, who as head of the Women’s Political Council had long fought against the city’s segregated busing, worked long into the night with a colleague and two students from Alabama State College to mimeograph over 50,000 handbills calling for an immediate boycott. Montgomery’s Black community heeded the call, and local ministers and civil rights workers formed the Montgomery Improvement Association (MIA) to coordinate an organized, sustained boycott of the city’s buses. The Montgomery Bus Boycott lasted from December 1955 until December 20, 1956, when the Supreme Court ordered the city’s buses integrated. The boycott not only crushed segregation in Montgomery’s public transportation but also energized the entire civil rights movement and established the leadership of the MIA’s president, a recently arrived, twenty-six-year-old Baptist minister named Martin Luther King Jr.

Motivated by the success of the Montgomery boycott, King and other Black leaders looked to continue the fight. In 1957, King, fellow ministers such as Ralph Abernathy and Fred Shuttlesworth, and key staffers such as Ella Baker and Septima Clark helped create and run the Southern Christian Leadership Conference (SCLC) to coordinate civil rights groups across the South in their efforts to organize and sustain boycotts, protests, and other assaults against Jim Crow discrimination.

As pressure built, Congress passed the Civil Rights Act of 1957, the first such measure passed since Reconstruction. The act was compromised away nearly to nothing, although it did achieve some gains, such as creating a Civil Rights Division within the Department of Justice and the U.S. Commission on Civil Rights, which was charged with investigating claims of racial discrimination. And yet, despite its weakness, the act signaled that pressure was finally mounting on Americans to confront the legacy of discrimination.

Despite successes at both the local and national level, the civil rights movement faced bitter opposition. Those opposed to the movement often used violent tactics to scare and intimidate African Americans and subvert legal rulings and court orders. For example, a year into the Montgomery bus boycott, angry white southerners bombed four African American churches as well as the homes of King and fellow civil rights leader E. D. Nixon. Though King, Nixon, and the MIA persevered in the face of such violence, it was only a taste of things to come. Such unremitting hostility and violence left the outcome of the burgeoning civil rights movement in doubt. Civil rights activists looked back on the 1950s as a decade of mixed results and incomplete accomplishments. While the bus boycott, Supreme Court rulings, and other civil rights activities signaled progress, church bombings, death threats, and stubborn legislators demonstrated the distance that still needed to be traveled.

V. Gender and Culture in the Affluent Society

As shown in this 1958 advertisement for a “Westinghouse with Cold Injector,” a midcentury marketing frenzy targeted female consumers by touting technological innovations designed to make housework easier. Westinghouse.

America’s consumer economy reshaped how Americans experienced culture and shaped their identities. The Affluent Society gave Americans new experiences, new outlets, and new ways to understand and interact with one another.

“The American household is on the threshold of a revolution,” the New York Times declared in August 1948. “The reason is television.”19 Television was presented to the American public at the New York World’s Fair in 1939, but commercialization of the new medium in the United States lagged during the war years. In 1947, though, regular full-scale broadcasting became available to the public. Television was instantly popular, so much so that by early 1948 Newsweek reported that it was “catching on like a case of high-toned scarlet fever.”20 Indeed, between 1948 and 1955 close to two thirds of the nation’s households purchased a television set. By the end of the 1950s, 90 percent of American families had one and the average viewer was tuning in for almost five hours a day.21

The technological ability to transmit images via radio waves gave birth to television. Television borrowed radio’s organizational structure, too. The big radio broadcasting companies (NBC, CBS, and the American Broadcasting Company, or ABC) used their technical expertise and capital reserves to conquer the airwaves. They acquired licenses to local stations and eliminated their few independent competitors. The refusal of the Federal Communications Commission (FCC) to issue any new licenses between 1948 and 1952 was a de facto endorsement of the big three’s stranglehold on the market.

In addition to replicating radio’s organizational structure, television also looked to radio for content. Many of the early programs were adaptations of popular radio variety and comedy shows, including The Ed Sullivan Show and Milton Berle’s Texaco Star Theater. These were accompanied by live plays, dramas, sports, and situation comedies. Because of the cost and difficulty of recording, most programs were broadcast live, forcing stations across the country to air shows at the same time. And since audiences had a limited number of channels to choose from, viewing experiences were broadly shared. More than two thirds of television-owning households, for instance, watched popular shows such as I Love Lucy.

The limited number of channels and programs meant that networks selected programs that appealed to the widest possible audience to draw viewers and advertisers, television’s chief financiers. By the mid-1950s, an hour of primetime programming cost about $150,000 (about $1.5 million in today’s dollars) to produce. This proved too expensive for most commercial sponsors, who began turning to a joint financing model of thirty-second spot ads. The need to appeal to as many people as possible promoted the production of noncontroversial shows aimed at the entire family. Programs such as Father Knows Best and Leave It to Beaver featured light topics, humor, and a guaranteed happy ending the whole family could enjoy.22

Advertising was everywhere in the 1950s, including on TV shows such as the quiz show Twenty One, sponsored by Geritol, a dietary supplement. Library of Congress.

Television’s broad appeal, however, was about more than money and entertainment. Shows of the 1950s, such as Father Knows Best and I Love Lucy, idealized the nuclear family, “traditional” gender roles, and white, middle-class domesticity. Leave It to Beaver, which became the prototypical example of the 1950s television family, depicted its breadwinner father and homemaker mother guiding their children through life lessons. Such shows, and Cold War America more broadly, reinforced a popular consensus that such lifestyles were not only beneficial but the most effective way to safeguard American prosperity against communist threats and social “deviancy.”

Postwar prosperity facilitated, and in turn was supported by, the ongoing postwar baby boom. From 1946 to 1964, American fertility experienced an unprecedented spike. A century of declining birth rates abruptly reversed. Although popular memory attributes the baby boom to the return of virile soldiers from battle, the real story is more nuanced. After years of economic depression, couples were now wealthy enough to support larger families and had homes large enough to accommodate them, while women married younger and American culture celebrated the ideal of a large, insular family.

Underlying this “reproductive consensus” was the new cult of professionalism that pervaded postwar American culture, including the professionalization of homemaking. Mothers and fathers alike flocked to the experts for their opinions on marriage, sexuality, and, most especially, child-rearing. Psychiatrists held an almost mythic status as people carried their opinions and prescriptions, as well as their vocabulary, into everyday life. Books like Dr. Spock’s Baby and Child Care (1946) were diligently studied by women who treated homemaking as just that: a career, complete with all the demands and professional trappings of job development and training. And since most women had multiple children roughly the same age as their neighbors’ children, a cultural obsession with kids flourished throughout the era. Women bore the brunt of this pressure, chided if they did not give enough of their time to the children—especially if it was because of a career—yet cautioned that spending too much time would lead to “Momism,” producing “sissy” boys who would be incapable of contributing to society and extremely susceptible to the communist threat.

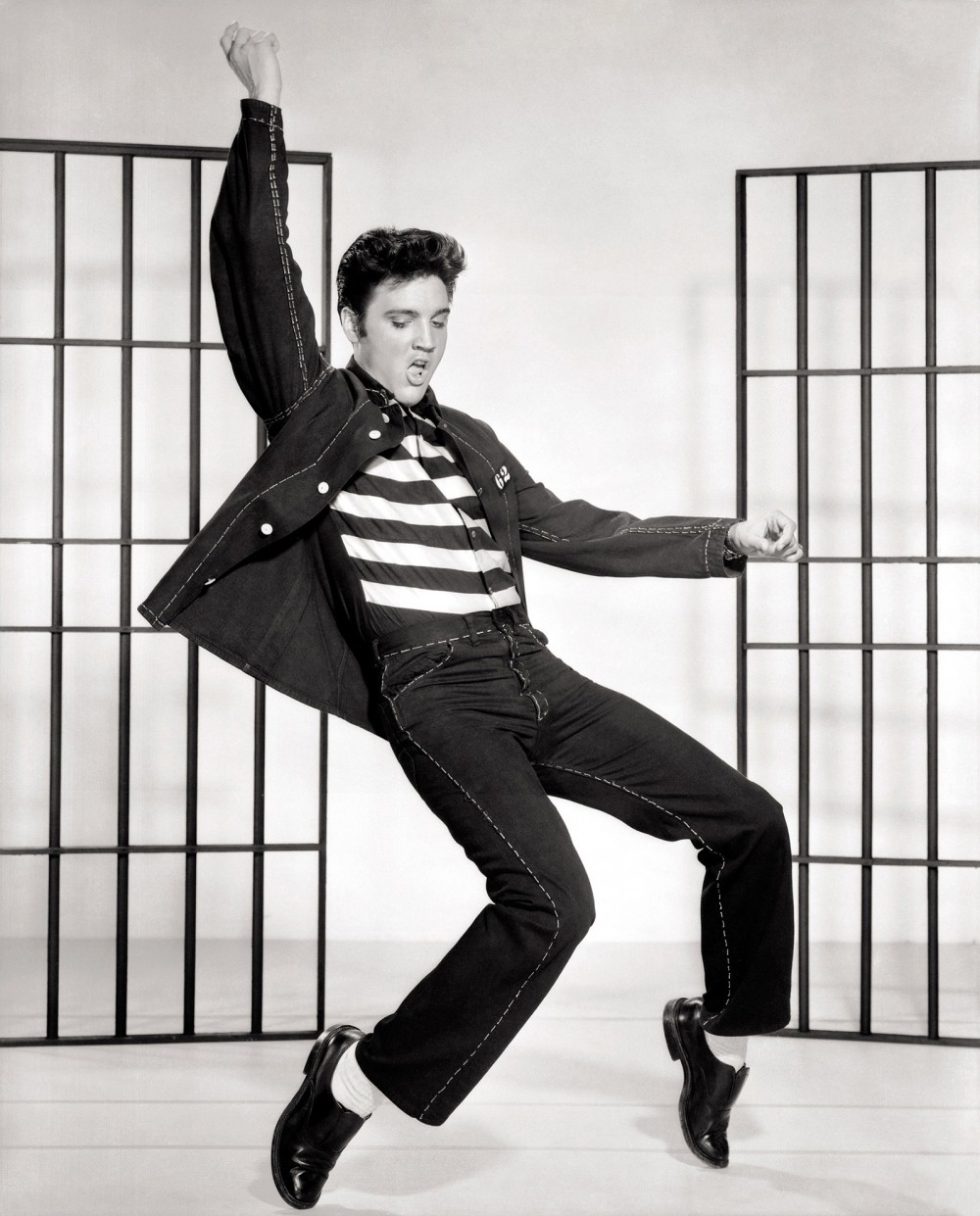

A new youth culture exploded in American popular culture. On the one hand, the anxieties of the atomic age hit America’s youth particularly hard. Keenly aware of the discontent bubbling beneath the surface of the Affluent Society, many youth embraced rebellion. The 1955 film Rebel Without a Cause demonstrated the restlessness and emotional incertitude of the postwar generation raised in increasing affluence yet increasingly unsatisfied with their comfortable lives. At the same time, perhaps yearning for something beyond the “massification” of American culture yet having few other options to turn to beyond popular culture, American youth embraced rock ’n’ roll. They listened to Little Richard, Buddy Holly, and especially Elvis Presley (whose sexually suggestive hip movements were judged subversive).

The popularity of rock ’n’ roll had not yet blossomed into the countercultural musical revolution of the coming decade, but it provided a magnet for teenage restlessness and rebellion. “Television and Elvis,” the musician Bruce Springsteen recollected, “gave us full access to a new language, a new form of communication, a new way of being, a new way of looking, a new way of thinking; about sex, about race, about identity, about life; a new way of being an American, a human being; and a new way of hearing music.” American youth had seen so little of Elvis’s energy and sensuality elsewhere in their culture. “Once Elvis came across the airwaves,” Springsteen said, “once he was heard and seen in action, you could not put the genie back in the bottle. After that moment, there was yesterday, and there was today, and there was a red hot, rockabilly forging of a new tomorrow, before your very eyes.”23

While many Black musicians such as Chuck Berry helped pioneer rock ’n’ roll, white artists such as Elvis Presley brought it into the mainstream American culture. Elvis’s good looks, sensual dancing, and sonorous voice stole the hearts of millions of American teenage girls, who were at that moment becoming a central segment of the consumer population. Wikimedia.

Other Americans took larger steps to reject the expected conformity of the Affluent Society. The writers, poets, and musicians of the Beat Generation, disillusioned with capitalism, consumerism, and traditional gender roles, sought a deeper meaning in life. Beats traveled across the country, studied Eastern religions, and experimented with drugs, sex, and art.

Behind the scenes, Americans were challenging sexual mores. The gay rights movement, for instance, stretched back into the Affluent Society. While the psychiatric establishment classified homosexuality as a mental disorder, gay men established the Mattachine Society in Los Angeles and gay women formed the Daughters of Bilitis in San Francisco as support groups. They held meetings, distributed literature, provided legal and counseling services, and formed chapters across the country. Much of their work, however, remained secretive because homosexuals risked arrest and abuse if discovered.24

Society’s “consensus,” on everything from the consumer economy to gender roles, did not go unchallenged. Much discontent was channeled through the consumer economy itself: advertisers sold rebellion no less than they sold baking soda. And yet others were rejecting the old ways, choosing new lifestyles, challenging old hierarchies, and embarking on new paths.

VI. Politics and Ideology in the Affluent Society

Postwar economic prosperity and the creation of new suburban spaces inevitably shaped American politics. In stark contrast to the Great Depression, the new prosperity renewed belief in the superiority of capitalism, cultural conservatism, and religion.

In the 1930s, the ravages of the worldwide depression knocked the legs out from under the intellectual justifications for keeping government out of the economy. And yet pockets of true believers kept alive the gospel of the free market. The single most important was the National Association of Manufacturers (NAM). In the midst of the depression, NAM reinvented itself and went on the offensive, initiating advertising campaigns supporting “free enterprise” and “The American Way of Life.”25 More importantly, NAM became a node for business leaders, such as J. Howard Pew of Sun Oil and Jasper Crane of DuPont Chemical Co., to network with like-minded individuals and take the message of free enterprise to the American people. The network of business leaders that NAM brought together in the midst of the Great Depression formed the financial, organizational, and ideological underpinnings of the free market advocacy groups that emerged and found ready adherents in America’s new suburban spaces in the postwar decades.

One of the most important advocacy groups that sprang up after the war was Leonard Read’s Foundation for Economic Education (FEE). Read founded FEE in 1946 on the premise that “The American Way of Life” was essentially individualistic and that the best way to protect and promote that individualism was through libertarian economics. Libertarianism took as its core principle the promotion of individual liberty, property rights, and an economy with a minimum of government regulation. FEE, whose advisory board and supporters came mostly from the NAM network of Pew and Crane, became a key ideological factory, supplying businesses, service clubs, churches, schools, and universities with a steady stream of libertarian literature, much of it authored by Austrian economist Ludwig von Mises.26

Shortly after FEE’s formation, Austrian economist and libertarian intellectual Friedrich Hayek founded the Mont Pelerin Society (MPS) in 1947. The MPS brought together libertarian intellectuals from both sides of the Atlantic to challenge Keynesian economics—the dominant notion that government fiscal and monetary policy were necessary economic tools—in academia. University of Chicago economist Milton Friedman later became its president. Friedman (and his Chicago School of Economics) and the MPS became some of the most influential free market advocates in the world and helped legitimize for many the libertarian ideology so successfully evangelized by FEE, its descendant organizations, and libertarian popularizers such as the novelist Ayn Rand.27

Libertarian politics and evangelical religion were helping to shape a new conservative, suburban constituency. Suburban communities’ distance from government and other top-down community-building mechanisms—despite relying on government subsidies and government programs—left a social void that evangelical churches eagerly filled. More often than not the theology and ideology of these churches reinforced socially conservative views while simultaneously reinforcing congregants’ belief in economic individualism. Novelist Ayn Rand, meanwhile, whose novels The Fountainhead (1943) and Atlas Shrugged (1957) were two of the decades’ best sellers, helped move the ideas of individualism, “rational self-interest,” and “the virtue of selfishness” outside the halls of business and academia and into suburbia. The ethos of individualism became the foundation for a new political movement. And yet, while the growing suburbs and their brewing conservative ideology eventually proved immensely important in American political life, their impact was not immediately felt. They did not yet have a champion.

In the post–World War II years the Republican Party faced a fork in the road. Its long string of presidential defeats since the Depression led to a battle within the party about how to revive its electoral prospects. The more conservative faction, represented by Ohio senator Robert Taft (son of former president William Howard Taft) and backed by many party activists and financiers such as J. Howard Pew, sought to take the party further to the right, particularly in economic matters, by rolling back New Deal programs and policies. On the other hand, the more moderate wing of the party, led by men such as New York governor Thomas Dewey and Nelson Rockefeller, sought to embrace and reform New Deal programs and policies. There were further disagreements among party members about how involved the United States should be in the world. Issues such as foreign aid, collective security, and how best to fight communism divided the party.

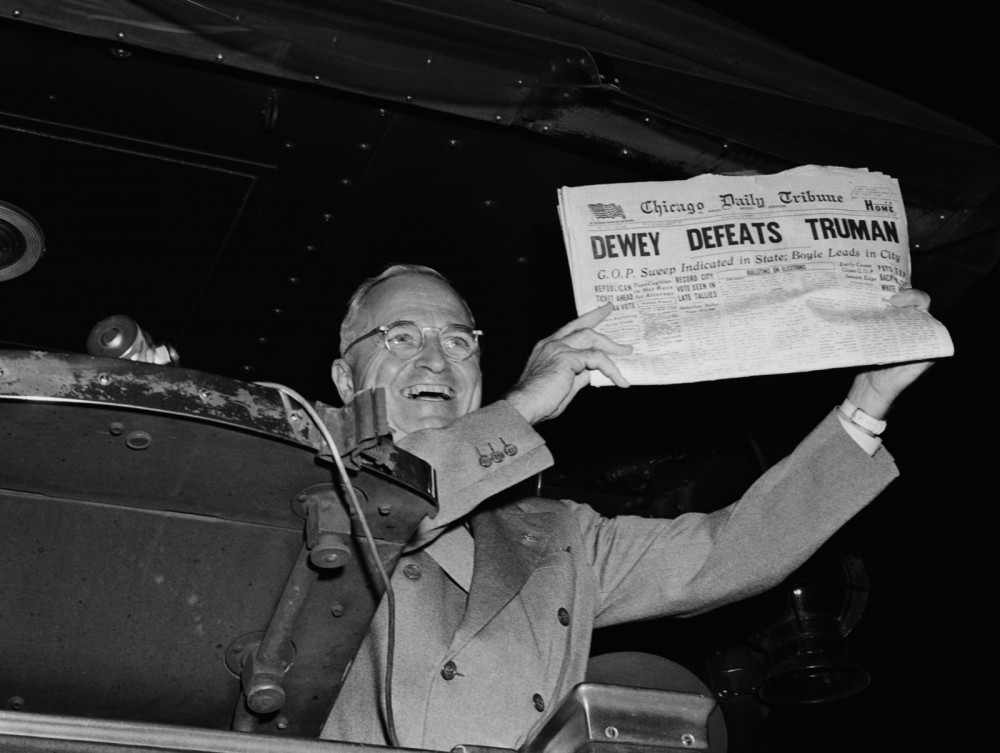

As with the internet, don’t always trust what you read in newspapers. This obviously incorrect banner headline, “Dewey Defeats Truman,” from the front page of the Chicago Daily Tribune on November 3, 1948, made its own headlines as the newspaper’s most embarrassing gaffe. Photograph, 1948. http://media-2.web.britannica.com/eb-media/14/65214-050-D86AAA4E.jpg. Undated portrait of President Harry S. Truman. National Archives.

Initially, the moderates, or “liberals,” won control of the party with the nomination of Thomas Dewey in 1948. Dewey’s shocking loss to Truman, however, emboldened conservatives, who rallied around Taft as the 1952 presidential primaries approached. With the conservative banner riding high in the party, General Dwight Eisenhower (“Ike”), most recently North Atlantic Treaty Organization (NATO) supreme commander, felt obliged to join the race in order to beat back the conservatives and “prevent one of our great two Parties from adopting a course which could lead to national suicide.” In addition to his fear that Taft and the conservatives would undermine collective security arrangements such as NATO, he also berated the “neanderthals” in his party for their anti–New Deal stance. Eisenhower felt that the best way to stop communism was to undercut its appeal by alleviating the conditions under which it was most attractive. That meant supporting New Deal programs. There was also a political calculus to Eisenhower’s position. He observed, “Should any political party attempt to abolish social security, unemployment insurance, and eliminate labor laws and farm programs, you would not hear of that party again in our political history.”28

The primary contest between Taft and Eisenhower was close and controversial. Taft supporters claimed that Eisenhower stole the nomination from Taft at the convention. Eisenhower, attempting to placate the conservatives in his party, picked California congressman and virulent anticommunist Richard Nixon as his running mate. With the Republican nomination sewn up, the immensely popular Eisenhower swept to victory in the 1952 general election, easily besting Truman’s hand-picked successor, Adlai Stevenson. Eisenhower’s popularity boosted Republicans across the country, helping them win majorities in both houses of Congress.

The Republican sweep in the 1952 election, owing in part to Eisenhower’s popularity, translated into few tangible legislative accomplishments. Within two years of his election, the moderate Eisenhower saw his legislative proposals routinely defeated by an unlikely alliance of conservative Republicans, who thought Eisenhower was going too far, and liberal Democrats, who thought he was not going far enough. For example, in 1954 Eisenhower proposed a national healthcare plan that would have provided federal support for increasing healthcare coverage across the nation without getting the government directly involved in regulating the healthcare industry. The proposal was defeated in the House by a 238–134 vote, with a swing bloc of seventy-five conservative Republicans joining liberal Democrats in voting against the plan.29 Eisenhower’s proposals in education and agriculture often suffered similar defeats. By the end of his presidency, Ike’s domestic legislative achievements were largely limited to expanding Social Security; creating the cabinet-level Department of Health, Education, and Welfare (HEW); passing the National Defense Education Act; and bolstering federal support to education, particularly in math and science.

As with any president, however, Eisenhower’s impact was bigger than just legislation. Ike’s “middle of the road” philosophy guided his foreign policy as much as his domestic agenda. He sought to keep the United States from direct interventions abroad by bolstering anticommunist and procapitalist allies. Ike funneled money to the French in Vietnam fighting the Ho Chi Minh–led communists, walked a fine line by helping Chiang Kai-shek’s Taiwan without overtly provoking Mao Zedong’s China, and materially backed groups that destabilized “unfriendly” governments in Iran and Guatemala. The centerpiece of Ike’s Soviet policy, meanwhile, was “massive retaliation,” the threat of nuclear force in response to communist aggression, which was meant to check Soviet expansion without direct American involvement. While Ike’s “middle way” won broad popular support, his own party was slowly moving away from his positions. By 1964 the party had moved far enough to the right to nominate Arizona senator Barry Goldwater, the most conservative candidate in a generation. The political moderation of the Affluent Society proved little more than a way station on the road to liberal reforms and a more distant conservative ascendancy.

VII. Conclusion

The postwar American “consensus” held great promise. Despite the looming threat of nuclear war, millions experienced an unprecedented prosperity and an increasingly proud American identity. Prosperity seemed to promise ever-higher standards of living. But things fell apart, and the center could not hold: wracked by contradiction, dissent, discrimination, and inequality, the Affluent Society stood on the precipice of revolution.

Notes

- John Kenneth Galbraith, The Affluent Society (New York: Houghton Mifflin, 1958), 129.

- See, for example, Claudia Goldin and Robert A. Margo, “The Great Compression: The Wage Structure in the United States at Mid-Century,” Quarterly Journal of Economics 107 (February 1992), 1–34.

- Price Fishback, Jonathan Rose, and Kenneth Snowden, Well Worth Saving: How the New Deal Safeguarded Home Ownership (Chicago: University of Chicago Press, 2013).

- Leo Schnore, “The Growth of Metropolitan Suburbs,” American Sociological Review 22 (April 1957), 169.

- Lizabeth Cohen, A Consumers’ Republic: The Politics of Mass Consumption in Postwar America (New York: Random House, 2002), 202.

- Elaine Tyler May, Homeward Bound: American Families in the Cold War Era (New York: Basic Books, 1999), 152.

- Leo Fishman, The American Economy (Princeton, NJ: Van Nostrand, 1962), 560.

- John P. Diggins, The Proud Decades: America in War and in Peace, 1941–1960 (New York: Norton, 1989), 219.

- David Kushner, Levittown: Two Families, One Tycoon, and the Fight for Civil Rights in America’s Legendary Suburb (New York: Bloomsbury Press, 2009), 17.

- Thomas Sugrue, The Origins of the Urban Crisis: Race and Inequality in Postwar Detroit (Princeton, NJ: Princeton University Press, 2005).

- Becky M. Nicolaides, My Blue Heaven: Life and Politics in the Working-Class Suburbs of Los Angeles, 1920–1965 (Chicago: University of Chicago Press, 2002), 193.

- Adam W. Rome, The Bulldozer in the Countryside: Suburban Sprawl and the Rise of American Environmentalism (Cambridge: Cambridge University Press, 2001), 7.

- See also J. R. McNeill and Peter Engelke, The Great Acceleration: An Environmental History of the Anthropocene (Cambridge, MA: Harvard University Press, 2016); Andrew Needham, Power Lines: Phoenix and the Making of the Modern Southwest (Princeton, NJ: Princeton University Press, 2014); and Ted Steinberg, Down to Earth: Nature’s Role in American History (New York: Oxford University Press, 2002).

- Oliver Brown, et al. v. Board of Education of Topeka, et al., 347 U.S. 483 (1954).

- James T. Patterson and William W. Freehling, Brown v. Board of Education: A Civil Rights Milestone and Its Troubled Legacy (New York: Oxford University Press, 2001), 25; Pete Daniel, Standing at the Crossroads: Southern Life in the Twentieth Century (Baltimore: Johns Hopkins University Press, 1996), 161–164.

- Patterson and Freehling, Brown v. Board, xxv.

- Charles T. Clotfelter, After Brown: The Rise and Retreat of School Desegregation (Princeton, NJ: Princeton University Press, 2011), 6.

- William Bradford Huie, “The Shocking Story of Approved Killing in Mississippi,” Look (January 24, 1956), 46–50.

- Lewis L. Gould, Watching Television Come of Age: The New York Times Reviews by Jack Gould (Austin: University of Texas Press, 2002), 186.

- Gary Edgerton, The Columbia History of American Television (New York: Columbia University Press, 2009), 90.

- Ibid., 178.

- Christopher H. Sterling and John Michael Kittross, Stay Tuned: A History of American Broadcasting (New York: Routledge, 2001), 364.

- Bruce Springsteen, “SXSW Keynote Address,” Rolling Stone (March 28, 2012), http://www.rollingstone.com/music/news/exclusive-the-complete-text-of-bruce-springsteens-sxsw-keynote-address-20120328.

- John D’Emilio, Sexual Politics, Sexual Communities, 2nd ed. (Chicago: University of Chicago Press, 2012), 102–103.

- See Richard Tedlow, “The National Association of Manufacturers and Public Relations During the New Deal,” Business History Review 50 (Spring 1976), 25–45; and Wendy Wall, Inventing the “American Way”: The Politics of Consensus from the New Deal to the Civil Rights Movement (New York: Oxford University Press, 2008).

- Gregory Eow, “Fighting a New Deal: Intellectual Origins of the Reagan Revolution, 1932–1952,” PhD diss., Rice University, 2007; Brian Doherty, Radicals for Capitalism: A Freewheeling History of the Modern American Libertarian Movement (New York: Public Affairs, 2007); and Kim Phillips-Fein, Invisible Hands: The Businessmen’s Crusade Against the New Deal (New York: Norton, 2009), 43–55.

- Angus Burgin, The Great Persuasion: Reinventing Free Markets Since the Depression (Cambridge, MA: Harvard University Press, 2012); Jennifer Burns, Goddess of the Market: Ayn Rand and the American Right (New York: Oxford University Press, 2009).

- Allan J. Lichtman, White Protestant Nation: The Rise of the American Conservative Movement (New York: Atlantic Monthly Press, 2008), 180, 201, 185.

- Steven Wagner, Eisenhower Republicanism: Pursuing the Middle Way (DeKalb: Northern Illinois University Press, 2006), 15.