Enhancing Student Productive Learning in Undergraduate Statistics Courses Using Multi-Submission Online Assignments

Justin W. Bonny

Assistant Professor, Department of Psychology, Morgan State University | justin.bonny@morgan.edu

A key principle of human learning is that individuals adapt their behavior in response to outcomes. In the classroom, this typically takes the form of providing students with corrective feedback on assignments. Combined with allowing multiple attempts on assignments, corrective feedback offers students the opportunity for productive learning. Productive learning occurs when students facing a challenging task use corrective feedback to identify and address knowledge gaps, allowing them to persist and accomplish the learning objective. The opportunity to engage in productive learning can be provided through a variety of assignments. However, classroom modality can affect an instructor's ability to collect genuine student responses and to minimize dishonest responses, such as copying. Face-to-face classrooms give instructors more control over eliciting genuine student responses, whereas remote environments created via online systems pose challenges. Copying behavior occurs when a student takes another student's responses and submits them as their own. Submitting copied responses on productive learning assignments can be detrimental to learning outcomes (Chen et al., 2018). The goal of the present study was to evaluate student productive learning on calculation assignments in an online statistics course designed to elicit genuine student responses during the COVID-19 pandemic.

What was the Environment and Goal?

COVID-19: In-person to Remote Learning Modalities

In the United States, a survey of higher education faculty indicated that the majority of their institutions (89%) transitioned some or all courses from in-person to online in April 2020 in response to the COVID-19 pandemic (Johnson et al., 2021). The transition was unplanned and sudden, with far less preparation time than is typical at higher education institutions. As the pandemic continued, instructors at many institutions kept teaching online in Fall 2020. The present research focused on students completing behavioral statistics courses as part of an undergraduate psychology program. Before the pandemic, sections of the course were taught in person on campus in Baltimore, Maryland, USA. During the pandemic, the course moved to an asynchronous online modality. In this format, students engaged with course material, including assignments and lessons, via an online learning management system and could complete course modules at their own pace. The two-course sequence began in Fall 2020 and continued in the online asynchronous format through Spring 2021. It covered descriptive and inferential statistics, including t-tests, one-way analysis of variance (ANOVA), correlation, and chi-square tests.

Productive Learning

A challenge in the transition from an in-person to online statistics course was providing opportunities for productive learning. Prior to the pandemic, students could practice calculation problems during face-to-face learning activities. As students completed each step, the instructor would synchronously provide corrective feedback, allowing the students to identify and revise their calculations to achieve the correct answer. By providing instantaneous corrective feedback, students had the opportunity to engage in productive learning to complete the assignment. The present study investigated whether a similar opportunity for productive learning could be provided via online, asynchronous assignments during the pandemic.

Multi-submission Assignments and Genuine Student Responses

Two studies reviewed for the present research observed productive learning with multi-submission assignments in undergraduate courses. Students in in-person computer programming courses were offered the opportunity to revise and resubmit coding assignments after receiving corrective feedback (Holland-Minkley & Lombardi, 2016). Those who chose to complete the optional resubmission tended to perform better on learning assessments (e.g., exams). Similarly, undergraduate physics students completed online assignments that allowed resubmission and provided instantaneous corrective feedback via an online answer checker (Chen et al., 2018). Those who made multiple submissions tended to achieve higher performance on the assignments as well as on learning assessments (e.g., exams). This research suggests that students who engage in productive learning tend to better attain course outcomes. It also suggests that such opportunities can be implemented online.

Chen and colleagues (2018) raised a concern about whether online assignments designed for productive learning collect genuine student responses. Some students displayed evidence of copying behavior: compared with other students, they achieved high performance on a first attempt made close to the assignment deadline. These students tended to perform worse on the learning assessment exam. This indicated that a key component of engaging students in productive learning is ensuring that they submit genuine responses.

The Present Study

The goal of the present research was to evaluate whether students engaged in productive learning when completing multi-submission online statistics assignments during the COVID-19 pandemic. Two approaches were used to develop assignments to maximize the chance that students engaged in productive learning. First, each assignment was composed of a statistics calculation problem that required students to provide intermediate responses that followed the calculation steps taught in the course with corrective feedback provided after each submission. Second, each student had a unique dataset to maximize the chance of collecting genuine responses. In this manner, if students engaged in copying behavior, they would submit incorrect answers. Calculation assignments were posted online via the Canvas learning management system (LMS) and were procedurally generated via the Canvas application programming interface (API). Student engagement and performance on the assignments were used to test two hypotheses associated with productive learning: students would make multiple submissions for each assignment and assignment scores would improve across submissions.
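The unique-dataset approach can be sketched as follows. The study used an R script and the Canvas API, but this Python sketch covers only the data-generation step and makes no Canvas API calls. The function name `make_student_dataset`, the hypothetical student IDs, the sample size of 5, and the value range of 1 to 30 are illustrative assumptions, not details taken from the study. The generator is seeded from a hash of the student ID and assignment name. As a result, each student's data are reproducible (regenerating an assignment yields the same numbers), while different students receive different data.

```python
import hashlib
import random


def make_student_dataset(student_id: str, assignment: str, n: int = 5,
                         low: int = 1, high: int = 30) -> list[int]:
    """Return a reproducible, student-specific sample of n integers in [low, high].

    Hypothetical helper: the seed comes from a SHA-256 hash of the student ID
    and assignment name, so a given student always receives the same data for
    a given assignment. Python's built-in hash() is avoided because it is
    salted per process for strings.
    """
    key = f"{student_id}:{assignment}".encode("utf-8")
    seed = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    rng = random.Random(seed)
    return [rng.randint(low, high) for _ in range(n)]


# Hypothetical roster; real IDs would come from the LMS course enrollment.
roster = ["student_a", "student_b", "student_c"]
datasets = {sid: make_student_dataset(sid, "H3") for sid in roster}
for sid, data in datasets.items():
    print(sid, data)
```

In principle, two students could draw identical samples. A fuller version might therefore check for duplicates and regenerate with a different salt. With five values drawn from 30 possibilities, such collisions are rare.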

What Was Observed?

Method

Participants

A total of 43 students across two sequential behavioral statistics courses were included in the present research. Some students completed both courses whereas others completed one course. The course sequence occurred in Fall 2020 and Spring 2021, while the COVID-19 pandemic was still in effect in the United States. The courses were offered in an online, asynchronous format and students completed assignments remotely using the Canvas LMS. The research protocol for the study was approved by the university institutional review board.

Learning Assignments

Each learning assignment required students to solve a computational problem by calculating and interpreting statistics. Students were presented with a scenario that required calculating statistics using the formulas and approaches taught in course materials (Table 1). Each assignment broke the calculation into cumulative steps. Students received corrective feedback on each step so that they could identify the point at which they had made an error. Students could make a near-unlimited number of submissions (set to 99 attempts in the LMS). To increase the chance of genuine responses, students had to log into the LMS with university-issued credentials to view and submit assignments. Each student had a unique dataset for each problem. For example, on the one-sample t-test assignment, all students had to determine whether a sample was significantly different from a known population mean, but the sample data varied by student (Student ‘A’ sample: 22, 18, 26, 6, 6; Student ‘B’ sample: 7, 18, 9, 12, 6). To create a unique assignment for each student, an R script procedurally generated each assignment and posted it to the LMS via the API.
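Student ‘A’’s sample can illustrate the cumulative structure of the one-sample t-test assignment. The source does not list the exact steps students entered or the population mean. This sketch therefore assumes a typical sequence (sum, mean, sum of squared deviations, variance, standard deviation, standard error, t, degrees of freedom) and a hypothetical population mean of 10. Each quantity depends on the one before it, so a wrong value at one step propagates to every later step. That dependency is what lets per-step feedback locate where an error was made.

```python
import math


def one_sample_t_steps(sample: list[float], mu: float) -> dict[str, float]:
    """Compute the intermediate quantities of a one-sample t-test, in order."""
    n = len(sample)
    total = sum(sample)
    mean = total / n
    ss = sum((x - mean) ** 2 for x in sample)  # sum of squared deviations
    variance = ss / (n - 1)                    # sample variance (n - 1 denominator)
    sd = math.sqrt(variance)                   # sample standard deviation
    se = sd / math.sqrt(n)                     # estimated standard error of the mean
    t = (mean - mu) / se                       # t statistic
    return {"n": n, "sum": total, "mean": mean, "SS": ss, "variance": variance,
            "sd": sd, "se": se, "t": t, "df": n - 1}


# Student 'A' sample from the text; mu = 10 is a hypothetical population mean.
steps = one_sample_t_steps([22, 18, 26, 6, 6], mu=10)
for name, value in steps.items():
    print(f"{name}: {value:.4f}")
```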

Table 1. Calculation assignments and statistical concepts, listed in order of administration, with mean student performance (proportion correct) and mean number of attempts (standard deviations in parentheses).

| Name | Statistical Concepts | Semester | Proportion Correct, M (SD) | Attempts, M (SD) |
|---|---|---|---|---|
| Homework 1 (H1) | Sample mean, median, mode (central tendency) | Fall 2020 | .99 (.04) | 1.72 (.94) |
| Homework 2 (H2) | Sample range, variance, standard deviation (dispersion) | Fall 2020 | .90 (.19) | 3.44 (2.20) |
| Homework 3 (H3) | One-sample t-tests | Fall 2020 | .79 (.11) | 3.72 (2.72) |
| Homework 4 (H4) | Independent samples t-tests | Fall 2020 | .88 (.12) | 6.24 (3.41) |
| Homework 5 (H5) | Dependent samples t-tests | Spring 2021 | .81 (.24) | 5.97 (3.98) |
| Homework 6 (H6) | One-way analysis of variance (between subjects) tests | Spring 2021 | .87 (.21) | 5.97 (4.15) |
| Homework 7 (H7) | Pearson r correlation tests | Spring 2021 | .87 (.11) | 5.94 (3.85) |
| Homework 8 (H8) | Chi-square goodness of fit tests | Spring 2021 | .98 (.07) | 3.79 (2.40) |

Measures

To assess productive learning, LMS metadata were collected for each assignment submission attempt. To be included as an attempt, a submission needed to contain a response to at least one question. Attempt performance measures included the attempt number and the proportion of assignment questions with correct responses, computed over all questions on the assignment. For example, if a student responded to 5 of 10 questions and 4 responses were correct, the proportion correct was 4/10 = .40.
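The inclusion rule and the proportion-correct measure can be expressed compactly. In this hypothetical Python sketch, each attempt is a list with one entry per question: `True` for a correct response, `False` for an incorrect one, and `None` for a blank. The representation and function names are assumptions for illustration. The denominator is the total number of questions, so unanswered questions count against the score.

```python
from typing import Optional

Response = Optional[bool]  # True = correct, False = incorrect, None = blank


def is_included(responses: list[Response]) -> bool:
    """An attempt is counted only if at least one question has a response."""
    return any(r is not None for r in responses)


def proportion_correct(responses: list[Response]) -> float:
    """Correct responses divided by ALL questions (blanks count as not correct)."""
    return sum(r is True for r in responses) / len(responses)


# The example from the text: 5 of 10 questions answered, 4 of them correct.
attempt = [True, True, True, True, False, None, None, None, None, None]
print(is_included(attempt), proportion_correct(attempt))  # True 0.4
```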

Procedure

Each assignment was posted to the LMS approximately one week before its deadline, and students could submit attempts until the deadline. Attempts were untimed. Immediately after each attempt was submitted, the LMS displayed which responses were correct and incorrect but did not display the correct answers. The student’s highest-scoring attempt was kept as the grade for the assignment.
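The feedback and grading rules in this procedure can be sketched as follows. The source does not say how the LMS compared numerical answers. The tolerance used here (1% relative or 0.01 absolute) and the function names are therefore assumptions. The sketch shows two behaviors: feedback returns only correct/incorrect flags, never the answer key, and the assignment grade is the maximum score across attempts.

```python
import math
from typing import Optional, Sequence


def feedback(responses: Sequence[Optional[float]], key: Sequence[float],
             rel_tol: float = 0.01, abs_tol: float = 0.01) -> list[bool]:
    """Return a correct/incorrect flag per question without revealing the key.

    Blank responses (None) are marked incorrect. The tolerances are
    illustrative assumptions, not the LMS's actual settings.
    """
    return [given is not None and math.isclose(given, expected,
                                               rel_tol=rel_tol, abs_tol=abs_tol)
            for given, expected in zip(responses, key)]


def score(flags: list[bool]) -> float:
    """Proportion of questions marked correct."""
    return sum(flags) / len(flags)


def assignment_grade(attempts: Sequence[Sequence[Optional[float]]],
                     key: Sequence[float]) -> float:
    """Keep the highest-scoring attempt as the grade (0.0 if no attempts)."""
    return max((score(feedback(a, key)) for a in attempts), default=0.0)


key = [15.6, 339.2, 84.8]             # hypothetical answer key
attempts = [
    [15.6, 300.0, None],              # first attempt: one step correct
    [15.6, 339.2, 84.8],              # revised after feedback: all correct
    [15.6, 339.2, 80.0],              # a later, worse attempt
]
print(feedback(attempts[0], key))     # [True, False, False]
print(assignment_grade(attempts, key))  # 1.0
```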

Results

Students made a total of 1,121 submission attempts across all assignments. Statistical analyses were two-tailed (alpha = .05) and conducted using the R packages ‘lme4’ (Bates et al., 2015) and ‘lmerTest’ (Kuznetsova et al., 2017; degrees of freedom were estimated via Satterthwaite’s method). Plots were generated using ‘ggplot2’ (Wickham, 2016).

There was evidence that assignments varied in student performance, F(7, 202.93) = 7.92, p < .001, and in number of attempts, F(7, 201.44) = 11.57, p < .001 (linear mixed models with a random intercept for student; Table 1). Assignments H1 and H8 had the highest proportion correct, and H3 had the lowest (significant Tukey pairwise comparisons: H1 vs. H3, H5, H6; H8 vs. H3, H5, H7). Assignment H1 had the fewest attempts, and H4 through H7 had the most (significant Tukey pairwise comparisons: H1 vs. H4, H5, H6, H7, H8; H2 vs. H4 through H7; H3 vs. H4 through H7; H8 vs. H4 through H7).

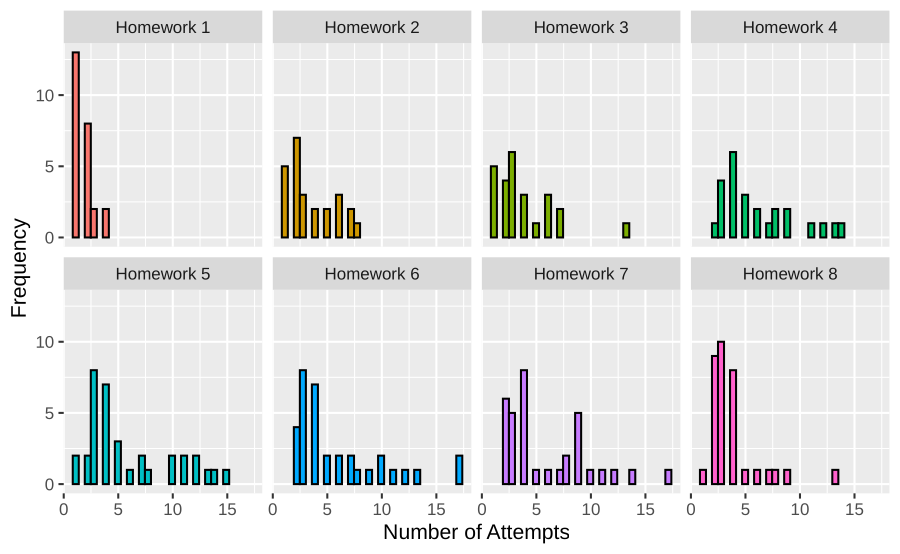

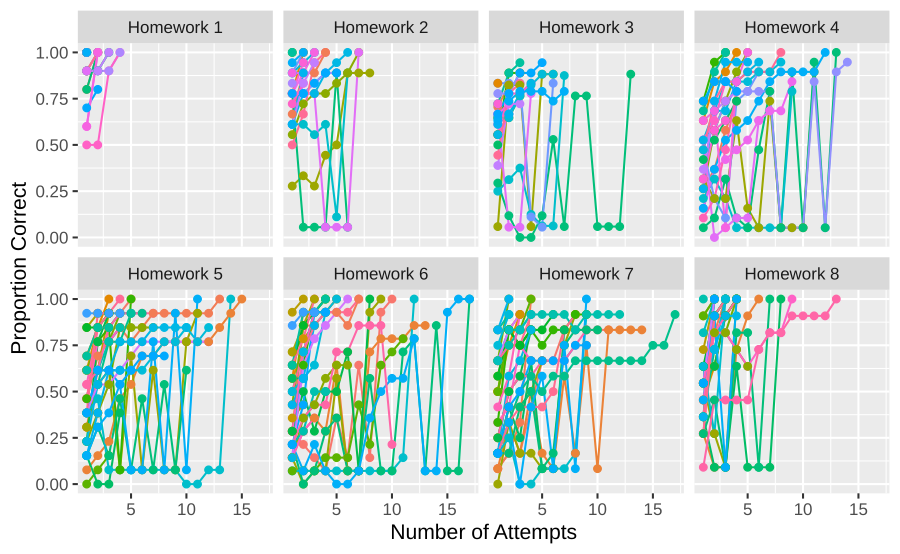

When accounting for student and assignment (linear mixed model with a random intercept for each), students submitted significantly more than a single attempt per assignment, M = 4.73, SD = 3.51, t(12.22) = 5.44, p < .001 (Figure 1). Furthermore, the proportion of assignment questions with correct responses was significantly greater for students' final attempts, M = .88, SD = .17, than for their initial attempts, M = .53, SD = .27, t(421.38) = 21.35, p < .001 (linear mixed model with random intercepts for student and assignment). There was evidence of individual differences, with the number of attempts needed to reach maximum proportion correct varying across students (Figure 2).
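The per-student quantities behind these comparisons can be derived from an attempt log: initial and final proportion correct, number of attempts, and attempts needed to reach the maximum. This Python sketch assumes a hypothetical log of (student, assignment, attempt number, proportion correct) records and treats the last submitted attempt as the final one. The records are made up for illustration and are not study data. The mixed-model tests themselves were fit in R with lme4 and lmerTest and are not reproduced here.

```python
from collections import defaultdict


def summarize_attempts(log):
    """Summarize attempts for each (student, assignment) pair.

    `log` is an iterable of (student, assignment, attempt_number, proportion_correct).
    """
    groups = defaultdict(list)
    for student, assignment, attempt, prop in log:
        groups[(student, assignment)].append((attempt, prop))

    summary = {}
    for key, rows in groups.items():
        rows.sort()                   # order by attempt number
        props = [p for _, p in rows]
        best = max(props)
        summary[key] = {
            "n_attempts": len(props),
            "initial": props[0],
            "final": props[-1],
            # 1-based position of the first attempt that reached the maximum
            "attempts_to_max": props.index(best) + 1,
        }
    return summary


# Hypothetical records for illustration only.
log = [
    ("s1", "H3", 1, 0.4), ("s1", "H3", 2, 0.7), ("s1", "H3", 3, 1.0),
    ("s2", "H3", 1, 0.9), ("s2", "H3", 2, 0.9),
]
for key, stats in summarize_attempts(log).items():
    print(key, stats)
```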

Figure 1. Histograms of number of student attempts submitted for each assignment.

Figure 2. Proportion of assignment question responses marked correct by the number of attempts submitted. Line color distinguishes the attempts made by different students.

Discussion

The present study evaluated productive learning in online behavioral statistics assignments that allowed multiple submissions and provided corrective feedback. Evidence of productive learning was observed: students made multiple attempts and improved their performance from the initial to the final attempt. However, students varied substantially in the number of submissions they made to achieve their maximum performance. The results suggest that, when given the opportunity to correct their mistakes, students engaged with the assignments by making several attempts and learned from their errors on challenging assignments.

Silver Linings and Next Steps

The present study indicated that the use of multi-submission assignments administered online was successful in providing opportunities for students to engage in productive learning in behavioral statistics courses during the COVID-19 pandemic. The implementation process and results revealed several considerations for future iterations of similar assignments.

Assignment Difficulty

There were fewer opportunities for students to engage in productive learning on easy assignments. Assignments with higher performance also tended to have fewer submission attempts than assignments with lower performance, in line with prior research (Holland-Minkley & Lombardi, 2016). Consistent with the concept of productive learning, if there is no challenge to overcome, learners have no need to use corrective feedback to improve their underlying knowledge. Students who answered all questions correctly on their initial attempt may have engaged in productive learning outside the LMS environment. For example, a student could have calculated the results for an assignment and then checked and corrected their work before submitting it. This type of productive learning could not be measured with the LMS data collected in the present study. Instructors who want students to engage in productive learning via assignments should make the assignments difficult enough to require multiple submission attempts to earn full points.
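The pattern that higher-performing assignments drew fewer attempts can be checked descriptively against the assignment means in Table 1. This Python sketch computes a Pearson correlation across the eight assignment means. It is only an illustration over eight aggregated values and is not an analysis reported in the study. It also ignores the student-level variation that the mixed models accounted for, so it should not be read as an inferential test.

```python
import math

# Assignment-level means copied from Table 1, in order H1 through H8.
performance = [.99, .90, .79, .88, .81, .87, .87, .98]
attempts = [1.72, 3.44, 3.72, 6.24, 5.97, 5.97, 5.94, 3.79]


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient computed from deviation scores."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


r = pearson_r(performance, attempts)
print(f"r = {r:.2f}")
```

A negative r here matches the direction described in the text. With only eight points, its magnitude says little on its own.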

Self-Regulated Learning

Key to productive learning via assignments is student engagement with, and use of, corrective feedback. Students with weaker self-regulated learning skills tend to be less likely to engage in productive learning opportunities (Chen et al., 2018). These skills include monitoring progress, managing time, and identifying knowledge gaps. The individual variation in the present study may have reflected differences in students' self-regulated learning, beyond differences in statistical knowledge. Instructors who want to maximize the effectiveness of productive learning assignments should consider providing instructional material that develops the skills students need to engage successfully in self-regulated learning.

Future Directions

Future research on productive learning could address several open questions. The present study was correlational and lacked a control group. Experimental designs could compare multi-submission with single-submission assignments, and online with in-person assignments, to identify how each affects student productive learning. A limitation of the present and prior research (Chen et al., 2018) was its reliance on multi-submission assignments whose questions have a predetermined answer, such as calculating a single numerical value or answering multiple-choice questions. Other types of courses, however, are less able to use such questions and instead rely on free-response problems, such as short answers or essays. A major obstacle to extending multi-submission assignments with real-time corrective feedback to free-response questions is the grading time and effort required of the instructor. Future investigations should evaluate whether advances in text analysis and machine learning (Galhardi & Brancher, 2018) can deliver real-time corrective feedback on free-response assignments, affording additional opportunities for productive learning.

References

Bates, D. M., Mächler, M., Bolker, B. M., & Walker, S. C. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48.

Chen, X., Breslow, L., & DeBoer, J. (2018). Analyzing productive learning behaviors for students using immediate corrective feedback in a blended learning environment. Computers & Education, 117(C), 59–74.

Galhardi, L. B., & Brancher, J. D. (2018). Machine learning approach for automatic short answer grading: A systematic review. In G. R. Simari, E. Fermé, F. Gutiérrez Segura, & J. A. Rodríguez Melquiades (Eds.), Advances in Artificial Intelligence—IBERAMIA 2018, 380–391. Springer International Publishing.

Holland-Minkley, A. M., & Lombardi, T. (2016). Improving engagement in introductory courses with homework resubmission. Proceedings of the 47th ACM Technical Symposium on Computing Science Education, 534–539.

Johnson, N., Seaman, J., & Veletsianos, G. (2021). Teaching During a Pandemic: Spring Transition, Fall Continuation, Winter Evaluation [Report]. Bay View Analytics. Retrieved April 8, 2022 from https://www.bayviewanalytics.com/reports/pulse/teachingduringapandemic.pdf

Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. B. (2017). lmerTest package: Tests in linear mixed effects models. Journal of Statistical Software, 82(13), 1–19.

Wickham, H. (2016). ggplot2: Elegant graphics for data analysis. Springer-Verlag.