
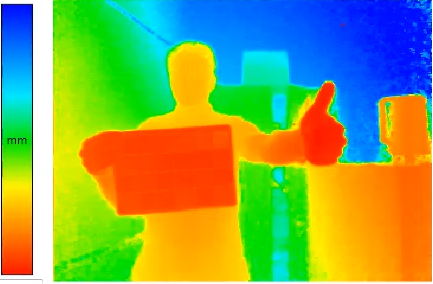

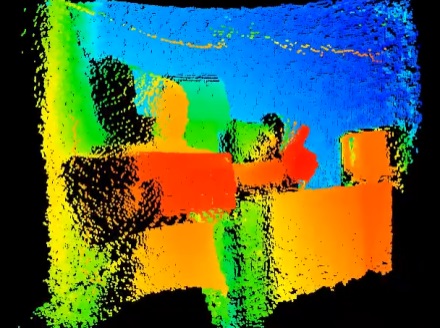

Figure 5 Visible Light, Heat Map and Point Cloud Images for Same Scene – Courtesy Melexis Inc.

In early AR/VR systems, the available sensor technology severely limited the attainable levels of immersion and “presence”. The size, weight and power consumption of early sensors made it difficult to build lightweight headsets. Advances in integrated circuit manufacturing techniques are now rapidly expanding the capabilities of VR and AR systems.

For a VR headset to accurately adjust the image projected on the viewscreen, or for an AR system to place its projected holograms, the computer must know the relative location and motion of the wearer’s head. An Inertial Measurement Unit (IMU) is typically used for this task. An IMU combines three types of sensors: an accelerometer, a gyroscope and a magnetometer. Each of these sensing elements must measure its quantity along three axes (X, Y, Z). Combining these signals allows errors in one sensor to be corrected by the others and yields accurate measurements for tracking head position and movement. Each sensor is built with a fabrication technology known as MEMS (Micro Electro-Mechanical Systems), which is based on integrated circuit photolithography techniques.
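
The text notes that combining the sensors' signals allows errors to be corrected. One common and simple way to do this, shown here only as a sketch and not as the method any particular headset uses, is a complementary filter: the gyroscope's integrated rate tracks fast head motion but drifts, while the accelerometer's view of gravity is noisy but drift-free. The function names, the single pitch axis and the blend factor `alpha = 0.98` are illustrative assumptions.

```python
import math
from typing import Iterable, Tuple


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (radians) implied by the measured gravity vector.

    Only valid while the head is not otherwise accelerating.
    """
    return math.atan2(-ax, math.hypot(ay, az))


def complementary_filter(
    samples: Iterable[Tuple[float, float, float, float]],
    dt: float,
    alpha: float = 0.98,  # hypothetical blend factor: weight given to the gyro path
) -> float:
    """Fuse gyroscope rate (rad/s) and accelerometer axes (m/s^2) into a pitch estimate.

    Each sample is (pitch_rate, ax, ay, az). The gyro term follows fast motion
    but accumulates bias drift; the accelerometer term slowly pulls the
    estimate back toward the gravity-based angle.
    """
    pitch = 0.0
    for rate, ax, ay, az in samples:
        gyro_estimate = pitch + rate * dt  # integrate the angular rate over one step
        pitch = alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, ay, az)
    return pitch


if __name__ == "__main__":
    # Head held still at 10 degrees of pitch for 20 s; the gyro has a 0.01 rad/s bias.
    g, theta = 9.81, math.radians(10.0)
    still = [(0.01, -g * math.sin(theta), 0.0, g * math.cos(theta))] * 2000
    estimate = complementary_filter(still, dt=0.01)
    print(f"fused pitch: {math.degrees(estimate):.2f} deg")
```

In this example the fused estimate settles within about 0.3 degrees of the true 10 degrees, whereas integrating the biased gyro alone would drift by 0.2 rad (about 11 degrees) over the same 20 seconds.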

IC fabrication can be explained, in simplified form, by analogy to screen printing. A series of masks is used to selectively pattern paper-thin discs (wafers) of highly purified silicon with chemical impurities, which create very specific electrical properties. Geometrically arranging these patterned areas and interconnecting them with thin “wires” of deposited aluminum, gold or copper produces functional electronic components. These traditional integrated circuits are “solid state”: they have no moving parts to wear out, and they are largely responsible for today’s consumer electronics society.

MEMS devices follow similar fabrication techniques, with some significant differences. In addition to fabricating resistors, capacitors and transistors, engineers use selective etching and sacrificial structures to create microscopically small mechanical elements that can move. The earliest MEMS sensors used a very thin etched silicon membrane with resistors previously patterned on its surface. When this membrane is deflected, the resistance values change; the change can be measured, amplified and correlated with the amount of pressure or vacuum on the membrane’s surface. In a similar manner, small masses suspended on thin bridge structures or cantilevers can be used to measure the deflections caused by acceleration. A gyroscope can be implemented with multi-fingered, interdigitated structures which, when driven to vibrate at set frequencies, experience a Coriolis force as the device rotates; sensing the resulting motion of these structures determines the rate of rotation.
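
The mechanical principles above reduce to two textbook relations, sketched here with hypothetical numbers rather than data for any real device. A proof mass m on a suspension of stiffness k deflects by x = m·a/k under acceleration a, so measuring x recovers a. In a vibratory gyroscope, a mass driven at velocity v while the device rotates at rate Ω feels a Coriolis force of magnitude 2·m·v·Ω (for v perpendicular to the rotation axis), so measuring that force recovers Ω. The function names and values are illustrative assumptions.

```python
def acceleration_from_deflection(x_m: float, k_n_per_m: float, mass_kg: float) -> float:
    """Spring-mass accelerometer: at equilibrium k*x = m*a, so a = k*x/m."""
    return k_n_per_m * x_m / mass_kg


def coriolis_force(mass_kg: float, drive_velocity_m_s: float, rate_rad_s: float) -> float:
    """Magnitude of the Coriolis force 2*m*v*Omega (v perpendicular to the rotation axis)."""
    return 2.0 * mass_kg * drive_velocity_m_s * rate_rad_s


def rate_from_coriolis(force_n: float, mass_kg: float, drive_velocity_m_s: float) -> float:
    """Invert the Coriolis relation to recover the rotation rate Omega."""
    return force_n / (2.0 * mass_kg * drive_velocity_m_s)


if __name__ == "__main__":
    # Hypothetical 1-microgram proof mass on a 1 N/m suspension.
    mass, k = 1e-9, 1.0
    x_one_g = mass * 9.81 / k  # deflection under 1 g
    print(f"1 g deflects the mass by {x_one_g * 1e9:.1f} nm")
    print(f"recovered acceleration: {acceleration_from_deflection(x_one_g, k, mass):.2f} m/s^2")

    # Hypothetical drive velocity of 0.5 m/s and a 1 rad/s head turn.
    force = coriolis_force(mass, 0.5, 1.0)
    print(f"Coriolis force: {force:.1e} N")
    print(f"recovered rate: {rate_from_coriolis(force, mass, 0.5):.2f} rad/s")
```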

Lastly, MEMS magnetometers use Hall effect sensors and Integrated Magneto Concentrator (IMC™) devices to create an electronic compass. Combining these three sensing principles in an IMU provides the fundamental ability to detect orientation (attitude) in space, as well as linear acceleration and rotation rate, in real time and with fine precision. High-precision, low-cost, low-power MEMS IMUs only became available in the mid-2000s. Coupled with similar advances in GPS (Global Positioning System) technology, they enabled smartphones to function as sophisticated navigation instruments used by many different software applications. Besides the familiar Google Maps, examples include cycling and running apps that measure distance travelled and elevation gained or lost. These apps can also plot an athlete’s routes during workouts and compare performance across sessions.
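
A running or cycling app’s distance and elevation totals can be sketched from a list of GPS fixes. This sketch assumes a spherical Earth (mean radius 6,371 km) and the haversine great-circle formula, and it applies no smoothing; real apps may filter noisy fixes or use other sensors for elevation. The names `haversine_m` and `track_summary` and the sample route are hypothetical.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical-Earth assumption)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlam / 2) ** 2
    # min() guards against rounding pushing the argument just above 1.
    return 2.0 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def track_summary(fixes: List[Tuple[float, float, float]]) -> Tuple[float, float, float]:
    """Return (distance_m, elevation_gain_m, elevation_loss_m) for (lat, lon, elevation_m) fixes."""
    distance = gain = loss = 0.0
    for (lat1, lon1, ele1), (lat2, lon2, ele2) in zip(fixes, fixes[1:]):
        distance += haversine_m(lat1, lon1, lat2, lon2)
        climb = ele2 - ele1
        if climb > 0:
            gain += climb
        else:
            loss -= climb  # climb is zero or negative here
    return distance, gain, loss


if __name__ == "__main__":
    route = [(46.0000, 7.0000, 500.0), (46.0010, 7.0000, 520.0), (46.0010, 7.0015, 505.0)]
    dist, up, down = track_summary(route)
    print(f"{dist:.0f} m travelled, +{up:.0f} m / -{down:.0f} m")
```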

Other apps that use these sensing features include star finders, satellite tracking apps and mountain peak identification apps. They all rely on the device knowing its position from GPS and measuring its orientation in space, including the direction in which the user is aiming the camera, in order to identify the object(s) in the camera’s field of view at that moment.
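
The identification step these apps share can be sketched as an angular test: convert the camera’s pointing direction (azimuth from the compass, elevation from the accelerometer) and the target’s direction into unit vectors, then check whether the angle between them is within half the field of view. This sketch assumes the target’s azimuth and elevation have already been computed from the GPS position (and, for stars or satellites, the time) and that the compass azimuth has been corrected to true north; it also treats the field of view as a circle, whereas real camera frames are rectangular. All names are hypothetical.

```python
import math
from typing import Tuple

Vector = Tuple[float, float, float]


def direction_vector(azimuth_deg: float, elevation_deg: float) -> Vector:
    """Unit vector in (east, north, up) coordinates; azimuth is measured clockwise from north."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el))


def angular_separation_deg(a: Vector, b: Vector) -> float:
    """Angle between two unit vectors, in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding just outside acos's domain
    return math.degrees(math.acos(dot))


def in_field_of_view(camera_az: float, camera_el: float,
                     target_az: float, target_el: float, fov_deg: float) -> bool:
    """True if the target lies inside a circular field of view centred on the camera axis."""
    camera = direction_vector(camera_az, camera_el)
    target = direction_vector(target_az, target_el)
    return angular_separation_deg(camera, target) <= fov_deg / 2.0


if __name__ == "__main__":
    # Hypothetical: phone aimed at azimuth 90 deg (east), elevation 30 deg, 60 deg field of view.
    print(in_field_of_view(90.0, 30.0, 95.0, 32.0, 60.0))   # target near the centre of the view
    print(in_field_of_view(90.0, 30.0, 270.0, 10.0, 60.0))  # target behind the user
```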

VR systems also exploit the built-in IMU of smartphones in mobile immersive headsets, while tethered immersive headsets include an IMU as part of their electronics package. In immersive VR headsets, the computer controls all image information related to gaze direction based on the wearer’s head position. As long as the wearer is seated or stationary, no other position sensing is needed. However, many games benefit from capturing data on body motion and mechanics. Such position detection is often accomplished by placing additional markers or light sources at fixed locations around the playing space. A camera IC or specialized light sensor detects these markers or light sources to triangulate the larger movements of the player within a relatively small play space of a few tens of square feet of floor area. This scheme is often referred to as “outside-in” tracking.
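
The triangulation step in outside-in tracking can be sketched in two dimensions: if two fixed stations at known positions each measure the bearing angle toward the player, the player’s position is where the two bearing rays intersect. This is a simplified, hypothetical geometry (real systems typically work in 3D and combine many measurements); the function name and coordinates are illustrative.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def triangulate_2d(p1: Point, bearing1_deg: float, p2: Point, bearing2_deg: float) -> Point:
    """Intersect two bearing rays cast from known station positions.

    Bearings are measured counter-clockwise from the +x axis.
    Raises ValueError if the rays are parallel (position not observable).
    """
    d1 = (math.cos(math.radians(bearing1_deg)), math.sin(math.radians(bearing1_deg)))
    d2 = (math.cos(math.radians(bearing2_deg)), math.sin(math.radians(bearing2_deg)))
    # Solve p1 + t*d1 = p2 + s*d2; crossing both sides with d2 eliminates s.
    denom = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(denom) < 1e-12:
        raise ValueError("bearings are parallel; position is not observable")
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t = (dx * d2[1] - dy * d2[0]) / denom
    return (p1[0] + t * d1[0], p1[1] + t * d1[1])


if __name__ == "__main__":
    # Hypothetical stations in two corners of a 4 m wide play space.
    x, y = triangulate_2d((0.0, 0.0), 45.0, (4.0, 0.0), 135.0)
    print(f"player at ({x:.2f}, {y:.2f}) m")
```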

Augmented reality requires much more complex sensing so that computer-generated elements appear realistically in the scene being viewed. Early methods for rendering graphics correctly relative to the viewed scene relied on barcode-like markers or predetermined 2D images; the headset’s camera used these as references and keyed the placement of the graphical element linked to each specific marker. Most current AR headsets rely on one or more special types of cameras. Some use Time of Flight cameras, others use binocular depth sensing, and still others employ so-called structured light sensors. Some combine several of these sensors.

Time of Flight (ToF) cameras use a special Complementary Metal Oxide Semiconductor (CMOS) or Charge Coupled Device (CCD) image sensor pixel architecture. Their operation relies on modulated infrared (IR) light: measuring the phase delay of the reflected light allows the distance from the camera to an object in the scene to be calculated. The resulting data set is a “point cloud” (Fig. 5, center image) representing the reflecting surfaces in the path of the modulated IR light from the camera (Hansard, 2012).
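
The phase-to-distance step can be written out for continuous-wave modulation at frequency f: light covering the round trip 2d is delayed by Δφ = 2πf·(2d/c), so d = c·Δφ/(4πf), and distances repeat every c/(2f) once the phase wraps past 2π. The sketch below also recovers Δφ from four correlation samples taken at 0°, 90°, 180° and 270° offsets, a common demodulation scheme; the exact sample model and sign convention (here C_k = B + A·cos(Δφ − k·90°)) are assumptions that differ between sensors, and the 20 MHz modulation frequency is only illustrative.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def phase_from_samples(c0: float, c1: float, c2: float, c3: float) -> float:
    """Phase delay in [0, 2*pi) from four samples C_k = B + A*cos(phi - k*pi/2).

    c0 - c2 = 2A*cos(phi) and c1 - c3 = 2A*sin(phi), so offset B and amplitude A cancel.
    """
    return math.atan2(c1 - c3, c0 - c2) % (2.0 * math.pi)


def tof_distance_m(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance for a phase delay: the round trip 2d gives phi = 4*pi*f*d/c."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range_m(mod_freq_hz: float) -> float:
    """Beyond this distance the phase wraps past 2*pi and distances alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    f_mod = 20e6  # hypothetical modulation frequency
    true_d = 2.5  # simulated object distance in metres
    phi = 4.0 * math.pi * f_mod * true_d / SPEED_OF_LIGHT_M_S
    # Simulated pixel samples with an arbitrary offset (ambient light) and amplitude.
    samples = [0.5 + 0.3 * math.cos(phi - k * math.pi / 2) for k in range(4)]
    print(f"recovered distance: {tof_distance_m(phase_from_samples(*samples), f_mod):.3f} m")
    print(f"unambiguous range: {unambiguous_range_m(f_mod):.2f} m")
```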

The images in Fig. 5 were captured with a ToF camera. On the left is the 2D image formed by reflected IR illumination. On the right is a 2D “heat map” in which colors represent the distance to objects in the scene illuminated by IR light. The middle image is a pseudo-3D representation of the point cloud data. Blue marks the rear wall of the room, green areas are a side wall and a cabinet, and orange and yellow are the foreground objects: a person with an optical reference pattern and an extended hand, and a coffee cup on top of a box. Commercial examples include the Melexis MLX75023 QVGA Time of Flight sensor; complete cameras are made by SoftKinetic (DS325/DS525).
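
Turning per-pixel ToF distances into a point cloud like the center image of Fig. 5 can be sketched with a pinhole camera model. Assuming each pixel reports distance along its own viewing ray (some cameras output planar depth instead), each pixel’s normalized ray direction is scaled by the measured distance. The intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values that would normally come from calibrating the camera and lens; lens distortion is ignored, and the QVGA (320 × 240) frame size is used only to pick a plausible image centre.

```python
import math
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]


def pixel_to_point(u: float, v: float, distance_m: float,
                   fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Convert one pixel's radial distance along its viewing ray to camera-frame (x, y, z)."""
    x = (u - cx) / fx  # ray direction on the normalized image plane (z = 1)
    y = (v - cy) / fy
    scale = distance_m / math.sqrt(x * x + y * y + 1.0)  # stretch unit ray to the measured length
    return (x * scale, y * scale, scale)


def depth_image_to_point_cloud(distances: List[List[Optional[float]]],
                               fx: float, fy: float, cx: float, cy: float) -> List[Point3]:
    """distances is indexed [row][column]; zero or None marks pixels with no valid return."""
    cloud = []
    for v, row in enumerate(distances):
        for u, d in enumerate(row):
            if d:  # skip invalid pixels
                cloud.append(pixel_to_point(u, v, d, fx, fy, cx, cy))
    return cloud


if __name__ == "__main__":
    # Hypothetical intrinsics for a 320 x 240 sensor; a flat 2 m "wall" fills the frame.
    fx = fy = 200.0
    cx, cy = 160.0, 120.0
    frame = [[2.0] * 320 for _ in range(240)]
    cloud = depth_image_to_point_cloud(frame, fx, fy, cx, cy)
    print(len(cloud), cloud[0])
```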

Another type of depth sensing technology is the structured light sensor. This technology projects a defined pattern of light (IR or visible) onto the scene. The distortion of the patterned light can be analyzed mathematically to triangulate the distance to any point in the scene: the camera pixel data are analyzed to find the difference between the projected pattern and the returned pattern, accounting for the camera optics, to determine the surfaces in the scene and their respective distances from the image sensor. Both one-sensor and two-sensor structured light architectures are common, but two-sensor versions provide improved performance (Zanuttigh, 2016, pp. 43-49). Commercial examples of structured light sensors include Microsoft’s Kinect camera, the Intel RealSense F200 and R200, and Occipital’s Structure Sensor.
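
For a calibrated and rectified projector-camera (or camera-camera) pair separated by a baseline B, the triangulation described above reduces to Z = f·B/d, where f is the focal length in pixels and d is the disparity: the shift, in pixels, between a pattern feature’s position in the projected pattern and its position in the camera image. The sketch below assumes the hard part, matching each observed feature to its projected counterpart, has already been done; the 580-pixel focal length and 7.5 cm baseline are hypothetical values. It also shows why depth precision degrades with distance: one pixel of disparity error corresponds to roughly Z²/(f·B) of depth.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Triangulated depth Z = f*B/d for a rectified sensor pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the sensor")
    return focal_px * baseline_m / disparity_px


def depth_step_m(depth_m: float, focal_px: float, baseline_m: float,
                 disparity_step_px: float = 1.0) -> float:
    """Approximate depth change for a given disparity error: dZ ~ Z**2 * dd / (f*B)."""
    return depth_m ** 2 * disparity_step_px / (focal_px * baseline_m)


if __name__ == "__main__":
    focal, baseline = 580.0, 0.075  # hypothetical sensor parameters
    for disparity in (87.0, 29.0, 14.5):
        z = depth_from_disparity(disparity, focal, baseline)
        print(f"disparity {disparity:5.1f} px -> depth {z:.2f} m, "
              f"~{depth_step_m(z, focal, baseline) * 100:.1f} cm per pixel of error")
```

Because the depth step grows with the square of distance, a longer baseline or a higher-resolution sensor is the usual lever for better far-field precision in this simple model.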

The ability to map the environment while tracking the position and motion of the device within it is referred to in the AR/VR industry as Simultaneous Localization and Mapping (SLAM) (Paula, 2015). These two depth sensing technologies, among others, are the foundation of the current generation of AR implementations and will play a pivotal role in future improvements to AR headsets and AR-capable devices.