Learning Objectives

- Situate the historical development of scholarly publishing and explain how it became a lucrative business.

- Articulate how profit motivations of money and prestige influence the scholarly publishing system and render it resistant to change.

In the previous chapter, we examined some of the power dynamics at play within the scholarly publishing system: namely, that for-profit, commercial publishers dominate the industry despite the fact that the academy is largely responsible for producing the content. Alongside power and control, there is also profit in the form of money and prestige. This chapter looks more closely at how profit drives the scholarly publishing machine.

Profit in Money

In his article “Is the staggeringly profitable business of scientific publishing bad for science?”, Stephen Buranyi maps the history and transformation of the modern scholarly publishing industry from the mid-twentieth century to the present day. Starting around the 1950s, governments increasingly invested in scientific progress by funding universities, the military, and federal agencies. New disciplines sprouted, along with new journals devoted to their study. Because scholarly journals and their articles are unique (“one article cannot substitute for another”), businessmen such as financier Robert Maxwell saw an opportunity to treat these materials as capital, as products to be sold just like other goods and services. And if one journal brought profit, why not make more? Maxwell reasoned that universities (specifically, their libraries) would have to buy each one, creating what he dubbed “a perpetual financing machine”.[1] This marked a radical change from earlier generations, when scientific societies viewed their publishing activities as a form of philanthropy, serving their respective fields and society more broadly by circulating new knowledge, with less attention to economics or profit.[2]

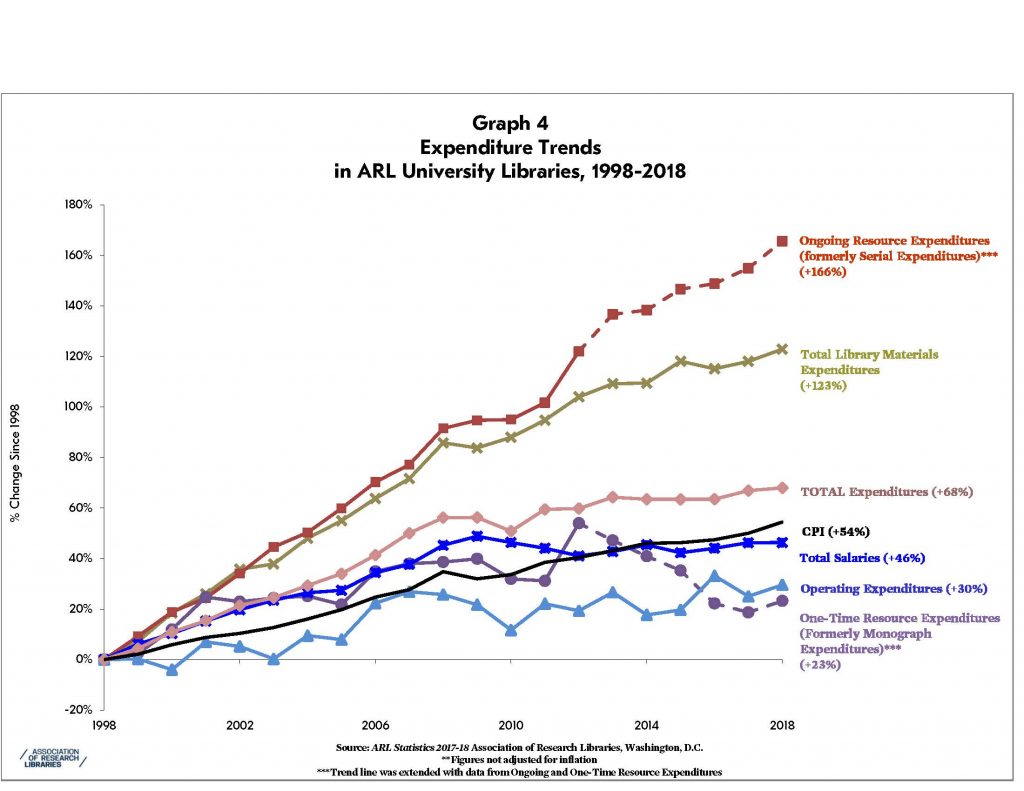

The machine Maxwell described grew ever more profitable as publishers charged more and more for their journals. As prices skyrocketed, academic libraries increasingly could not (and still cannot) afford scholarly journals. Year after year, for-profit publishers charge exorbitant subscription fees while libraries’ budgets stay flat or decline. Thus, journal subscriptions eat up a growing share of libraries’ budgets, leaving less purchasing power for other academic resources like books. This phenomenon came to be known as the “serials crisis” (journals are also known as serials) and has been the topic of much discussion and debate within library and scholarly publishing communities throughout the past three decades. The graph below illustrates how sharply journal/serial expenditures by academic libraries have risen in recent years.

When academic libraries cannot afford journals, they might cancel some of their subscriptions. However, the publishing industry anticipated this possibility and sought to circumvent it by “bundling” together several journals into one subscription package. Once a library signed a contract for a “Big Deal,” it could no longer cancel a single journal title by itself. The library either paid for and got access to everything in the bundle, or nothing at all. Again, libraries were — are — at the mercy of the market. Publishers can charge what they wish and libraries are essentially held hostage to those prices.

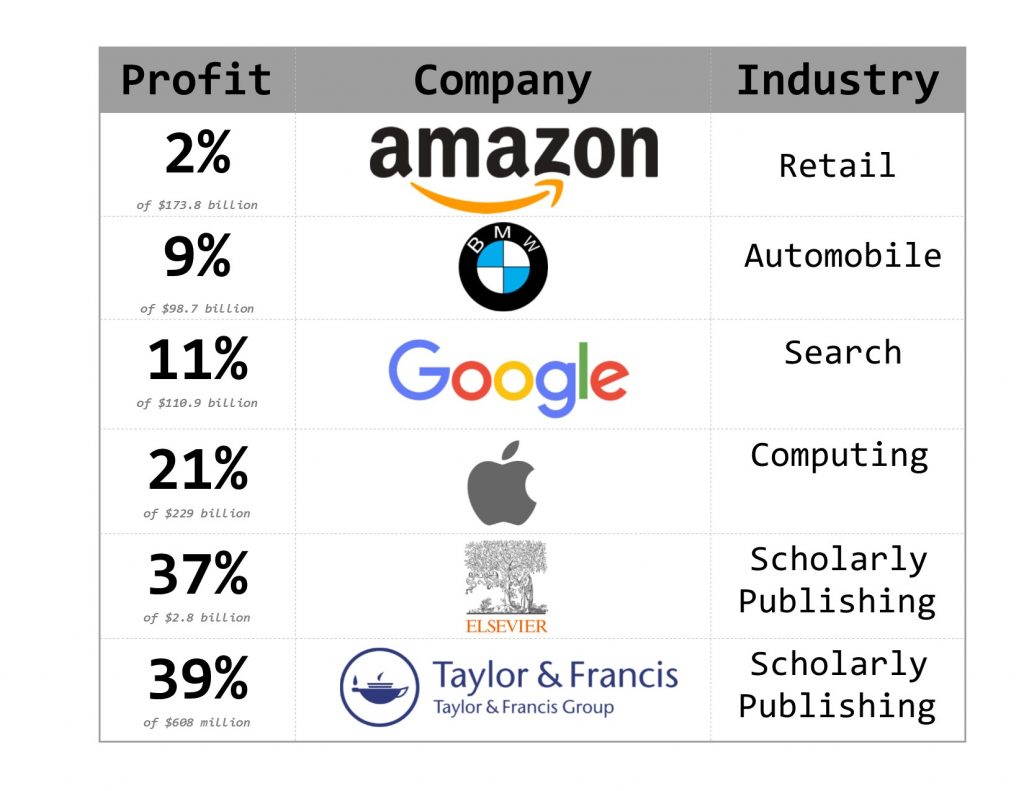

Why do publishers charge such high prices for journals? Actual production costs are low. Remember that 1) the content itself (the articles) is largely produced, freely and voluntarily, by those in the academy, and 2) the digital revolution removed most printing and distribution costs. Therefore, high prices + low overhead = extremely high profit margins for publishers.[3][4] The table below compares profit margins of scholarly publishers with other well-known companies:

In the midst of these high prices and profit margins, however, the Open Access movement emerged as a threat. Publishers recognized that if authors demanded to make their work openly available, readers and libraries would no longer have to pay to read the scholarly literature. Rather, they could access — and share — it for free. And as a result, publishers’ profits would decline. How could they stop this from happening?

One way was to introduce article processing charges (APCs), as mentioned in the previous chapter. APCs are fees that authors must pay (either with their own money, or institutional or grant funds) in order to have their article published openly. University libraries, however, noticed that in some cases, payment of APCs did not reduce subscription fees. Instead, sometimes publishers charged twice for the same content. This practice has been dubbed “double dipping” to “describe a publisher gaining from two income streams, APCs and subscriptions, in a way that its overall income from the same customer rises.”[5] “Double dipping” is complicated further by the fact that price and contract negotiations between publishers and libraries are complex proceedings often protected by non-disclosure agreements, so that one library might be paying very different prices than another — but the two can’t discuss it to compare. This lack of transparency reduces libraries’ agency in making informed spending and budgetary decisions and helps keep publishers in a position of control.

In this way, APCs allow publishers to profit even from Open Access. And, on top of that, they have another very important advantage: prestige.

Profit in Prestige

The currency of scientists and scholars is not money, but prestige. Scholarly publishing is a competitive endeavor, and prestige offers greater career security and advancement. If we look back to the 1970s, we see that some journals, such as MIT’s molecular biology publication Cell, developed a reputation for their selectivity. By rejecting far more submissions than they accepted, these journals formed a sort of exclusive club that authors could join only when their work was published.[6] This emphasis on selectivity and exclusivity laid the foundation for journal hierarchies that persist to this day. Some journals are regarded as more rigorous, respected, or prestigious than others, and publication in those journals is the most sought-after by authors.

So, what makes one journal more prestigious than another? Perhaps the most established form of measurement is the impact factor. A journal’s impact factor (its JIF) for a given year is calculated by taking the number of citations received that year by the articles the journal published during the previous two years, and dividing it by the total number of articles the journal published during those same two years. Higher citation counts yield a higher impact factor, and a higher impact factor (so the reasoning goes) suggests a greater impact on the field, and hence a more prestigious journal. At least, that’s how it works in theory. In reality, the JIF is problematic. In recent years, scholars who study scholarly publishing have noted several shortcomings that interfere with the metric’s integrity. In particular, the JIF doesn’t translate well across disciplines. For example, it counts only citations to articles published in the previous two years, but articles in some disciplines, such as the social sciences, experience steady rates of citations far into the future. Furthermore, the mean JIF can vary significantly from one discipline to another. What may be a high JIF for a Mathematics journal could be a low JIF in a field like Biomedical Research. In other words, the JIF is not a lingua franca in academia and scholarly publishing, nor should it be treated as such.[8]
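To make the arithmetic behind the JIF concrete, here is a minimal sketch in Python. It assumes the standard two-year definition (citations received in one year to articles from the two preceding years, divided by the number of articles published in those two years). The function names, the `relative_to_field` helper, and every number are hypothetical and chosen only for illustration; they do not describe any real journal or discipline.

```python
def journal_impact_factor(citations: int, articles: int) -> float:
    """Two-year impact factor.

    citations: citations received this year to articles the journal
               published in the two preceding years.
    articles:  number of articles the journal published in those two years.
    """
    if articles <= 0:
        raise ValueError("the journal must have published at least one article")
    return citations / articles


def relative_to_field(jif: float, field_mean_jif: float) -> float:
    """Ratio of a journal's JIF to its discipline's mean JIF.

    This is an illustrative helper, not an established metric. A value
    above 1.0 means the journal sits above its own field's average.
    """
    if field_mean_jif <= 0:
        raise ValueError("the field mean must be positive")
    return jif / field_mean_jif


# Hypothetical journals: the raw JIFs suggest the biomedical journal
# has twice the "impact" of the mathematics journal.
math_jif = journal_impact_factor(citations=300, articles=200)      # 1.5
biomed_jif = journal_impact_factor(citations=1200, articles=400)   # 3.0

# Hypothetical field means: once each journal is compared with its own
# discipline, the ranking flips.
math_relative = relative_to_field(math_jif, field_mean_jif=1.0)      # 1.5
biomed_relative = relative_to_field(biomed_jif, field_mean_jif=4.0)  # 0.75

print(math_jif, biomed_jif, math_relative, biomed_relative)
```

In this made-up case the mathematics journal has the lower raw JIF but stands well above its field's average, while the biomedical journal has the higher raw JIF but falls below its own field's average. This is one way to see why raw JIFs are hard to compare across disciplines.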

Despite these concerns, the JIF persists, though other metrics — also known as bibliometrics or altmetrics — for evaluating journals and authors have recently come onto the scene (see Exercise below). But the idea behind all of them is that scholarly output is something that can be measured and assigned a value. To best market themselves and their work — in pursuit of a new job, tenure, promotion, an award, a grant opportunity, etc. — authors are incentivized to publish their work in the most highly regarded journals in their field. By doing so, they have a better chance for reward. These motivations can shape authors’ research, from the research question they choose to pursue, to the type or style of article they choose to write, to the previous studies they cite and address, and so on. For their part, journal editors may favor certain kinds of articles over others, hoping that their selections will bring greater attention and prestige to the journal. They may look more favorably on submissions that address trendy topics, that report positive rather than negative results, or that have the potential to capture media attention.

In this way, prestige influences academia and the scholarly publishing system and interferes with the creation and production of new knowledge. This is far from the ideal, in which science and scholarship would grow out of the most pressing and important research questions and be evaluated on the merits of the work itself. Fortunately, a growing number of universities realize this is a problem and are seeking new, alternative ways to recognize and reward faculty authors by instituting more holistic evaluation measures.[9] For example, according to SPARC’s June 2022 “Member Update” newsletter:

Exercise: Metrics

There are many bibliometric and altmetric measurements and tools. In order to become familiar with some of them, please choose one from the list below to explore and share with the class. Using the Metrics Toolkit https://www.metrics-toolkit.org/ as well as other individual websites devoted to specific metric measures, consider the following: (note: not all questions will apply to all metrics)

- What does it measure? Is it an author-level metric, journal-level metric, altmetric, or something else?

- How is it calculated?

- What is its history? Has it been around a long time, or is it relatively new?

- How did it come into use? Who or what invented it? Who or what promotes its use?

- How/where can you find it? Is it easily accessible to everyone, or is it only available through a proprietary or subscription database?

- Is it typically used within specific disciplines? If so, what are they?

- What are its strengths? What does it reveal?

- What are its shortcomings or complications? What does it miss?

Metrics

- h-index

- SCImago

- CiteScore

- Altmetric “donut”

- Journal Impact Factor

- ImpactStory

- Google Scholar citation count

- Plum Analytics

- Another metric you’d like to propose

Additional Readings & Resources

Langin, K. (2019, July 25). For academics, what matters more: journal prestige or readership? Science. https://www.science.org/content/article/academics-what-matters-more-journal-prestige-or-readership

Morales, E., McKiernan, E. C., Niles, M. T., Schimanski, L., & Alperin, J. P. (2021). How faculty define quality, prestige, and impact of academic journals. PLoS ONE 16(10): e0257340. https://doi.org/10.1371/journal.pone.0257340

Pai, M. (2020, Nov. 30). How prestige journals remain elite, exclusive and exclusionary. Forbes. https://www.forbes.com/sites/madhukarpai/2020/11/30/how-prestige-journals-remain-elite-exclusive-and-exclusionary/?sh=2556a4134d48

Taubert, N., Bruns, A., Lenke, C., & Stone, G. (2021). Waiving article processing charges for least developed countries: A keystone of a large-scale open access transformation. Insights 34(1): 1-13. http://doi.org/10.1629/uksg.526

[1] Buranyi, S. (2017, June 27). Is the staggeringly profitable business of scientific publishing bad for science? Guardian. https://doi.org/10.1371/journal.pone.0127502

[2] Fyfe, A. (2021). Self-help for learned journals: Scientific societies and the commerce of publishing in the 1950s. History of Science. https://doi.org/10.1177/0073275321999901

[3] Buranyi, S. (2017, June 27). Is the staggeringly profitable business of scientific publishing bad for science? Guardian. https://doi.org/10.1371/journal.pone.0127502

[4] Hagve, M. (2020, Aug. 17). The money behind academic publishing. Tidsskr Nor Legeforen. https://doi.org/10.4045/tidsskr.20.0118

[5] Pinfield, S., Salter, J., & Bath, P. A. (2016). The “total cost of publication” in a hybrid open-access environment: Institutional approaches to funding journal article-processing charges in combination with subscriptions. Journal of the Association for Information Science and Technology, 67: 1751-1766. https://doi.org/10.1002/asi.23446

[6] Buranyi, S. (2017, June 27). Is the staggeringly profitable business of scientific publishing bad for science? Guardian. https://doi.org/10.1371/journal.pone.0127502

[7] Guédon, J-C. (2001). In Oldenburg's long shadow: Librarians, research scientists, publishers, and the control of scientific publishing. Association of Research Libraries. p. 16. https://www.arl.org/resources/in-oldenburgs-long-shadow/

[8] Chawla, D. S. (2018, April 3). What’s wrong with the journal impact factor in 5 graphs. natureindex. https://doi.org/10.1371/journal.pone.0127502

[9] Miedema, F. (2021, July 22). Viewpoint: As part of global shift, Utrecht University is changing how it evaluates its researchers. Science Business. https://sciencebusiness.net/viewpoint/viewpoint-part-global-shift-utrecht-university-changing-how-it-evaluates-its-researchers

[10] SPARC. (2022, June 1). "The University of Maryland department of psychology leads the way in aligning open science with promotion & tenure guidelines." https://sparcopen.org/news/2022/the-university-of-maryland-department-of-psychology-leads-the-way-in-aligning-open-science-with-promotion-tenure-guidelines/