7 Feedbacks and Stability

Key Concepts

In this chapter we will:

- Be introduced to system dynamics, feedbacks and resilience

- Learn that systems can have different possible outcomes, and can tip from one stability domain to another

- Become aware that concepts like resilience and robustness are frequently used in sustainability studies

7.1 Flipping Lakes

In this chapter we introduce core concepts of systems thinking. These concepts are important because they strongly influence our framework for studying coupled infrastructure systems. When we study the governance of coupled infrastructure systems, we must remember that those systems operate in an environment exposed to disturbances and surprises. The world we live in experiences a lot of variability, whether it is weather variation, infectious diseases spreading within your community, accidents, or unexpected outcomes of sporting events. Life happens, and if we think about governance, we have to take into account that there is a lot of variation in the world around us that could affect how we best govern a system. Assuming the world is nice, orderly, and predictable is a recipe for disaster.

To explain the concepts introduced in this chapter, we start with an illustrative example of lakes. Lakes are islands on land. Lakes are a favorite study object for ecologists since they are relatively self-contained ecosystems in which a range of plant species, fish species, and biochemistry interact. There are many different types of lakes and one can study which attributes of lakes generate different patterns of species abundance. One of the key areas of study is the process of eutrophication. If a large quantity of nutrients enters a lake due to runoff from the heavy fertilizer use on nearby farms, the lake water can suddenly flip from being crystal clear to looking like pea soup. This is an example of an ecosystem that can exhibit different long-run patterns of species composition (or “states”): a clear lake with little algae and a green lake dominated by algae (Figure 7.1).

One ecological mystery that has interested limnologists for a long time is why lakes suddenly flip from a clear blue to pea soup. The study of resilience of ecosystems has provided some insights on why this happens. Lakes, especially shallow lakes, have tipping points related to the amount of nutrients they can process, beyond which the lake flips. When a lake turns to pea soup, it is not only less attractive to swimmers, it also creates an environment with reduced biodiversity that favors weeds and limits the number of fish species. We know that we need to control nutrient use to avoid creating undesirable states in nearby lakes, but fertilizer use in agriculture also has benefits. Understanding how to avoid flipping the clear lake system into pea soup is critical and can provide lessons for other types of problems. For example, we may want to avoid pushing the climate system toward dangerously rapid change, or causing coral reefs to flip from a healthy state with many fish species to one dominated by slimy weeds.

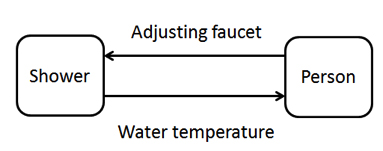

7.2 Introduction to Feedback

In this chapter we discuss systems thinking and the concept of resilience. Systems are composed of component parts that interact with each other. For example, a herd of cows consumes grass from a pasture. The component parts in this case are the herd of cows and the pasture. As cows consume grass the biomass from the pasture is reduced. On the other hand, the cows produce manure which fertilizes the pasture leading to an increase in biomass. This simple example illustrates how the component parts interact and cause each other to change.

Another example is a person controlling the temperature of the water while in the shower (Figure 7.2). If the person wants to increase the temperature, she will increase the volume of hot water and/or reduce the volume of cold water. There might be some delay between when a faucet is adjusted and hot water actually begins coming out of the faucet. An impatient person might open the hot water faucet too much and burn herself. Getting the right temperature requires that the person adjusting the faucet reacts appropriately to the information gathered from the shower (water temperature) in order to adjust the controls (opening the hot water faucet) appropriately.

The interaction between the person and the shower is a system based on feedback. The notion of feedback is illustrated in Figure 7.2. The two component parts of the system, which are often represented as “state variables,” are here represented by boxes and the interactions between the variables by arrows. You can imagine signals flowing in a circle. A temperature signal flows from the shower water to the person who translates it into a position signal for the faucet knob, and the cycle begins again. This cycling of signals is the reason for the term “feedback loop.”
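The shower loop, including the delay between adjusting the faucet and feeling the result, can be sketched in a few lines of Python. This is only an illustration under assumed numbers: the function name `simulate_shower`, the target of 38 degrees, the cold and hot limits, the `gain` (how strongly the person reacts to the gap between felt and desired temperature), and the three-step delay are all hypothetical and do not come from the chapter.

```python
def simulate_shower(gain, delay_steps, target=38.0, start=20.0,
                    cold=10.0, hot=60.0, steps=60):
    """Return the water temperature felt at each time step.

    All numbers are illustrative assumptions. The person compares the
    temperature she feels with the target and turns the knob in proportion
    to the gap (gain). A knob setting only reaches the shower head
    delay_steps later.
    """
    # Knob settings still travelling through the pipe, oldest first.
    pipe = [start] * (delay_steps + 1)
    felt = []
    for _ in range(steps):
        temperature = pipe[0]          # water arriving now was set earlier
        felt.append(temperature)
        setting = pipe[-1] + gain * (target - temperature)
        setting = min(hot, max(cold, setting))   # the knob has physical limits
        pipe = pipe[1:] + [setting]
    return felt


patient = simulate_shower(gain=0.1, delay_steps=3)    # small, careful adjustments
impatient = simulate_shower(gain=0.6, delay_steps=3)  # large adjustments, same delay
print(f"patient peak:   {max(patient):.1f}")
print(f"impatient peak: {max(impatient):.1f}")
```

With these assumed values the patient person approaches the target without ever exceeding it, while the impatient person keeps reacting to water that was set several steps earlier and overshoots well past the target. Setting `delay_steps=0` removes the overshoot even for the larger gain, which suggests that it is the combination of delay and strong reaction that causes the trouble in this sketch.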

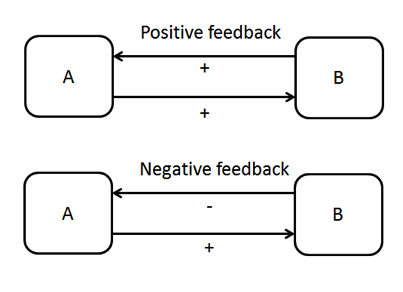

In the language of “state variables,” a change in variable A causes a change in variable B which subsequently impacts variable A again. Feedbacks can be positive or negative (Figure 7.3). A positive feedback occurs when a change in one variable, after going through the feedback pathways, returns to induce an additional change in that variable in the same direction as the original change; if the additional change is in the opposite direction, we call that a negative feedback.

An example of a positive feedback is money in a savings account. As long as you don’t take any money out of the account, the money generates interest, which is added to your balance, and the next year you earn interest on a larger sum. Hence the interest you earn on the previous year’s interest is an example of a positive feedback. An example of a negative feedback is the shower example. If the water is too hot, you reduce the amount of hot water, and if it is too cold you increase the amount of hot water in order to push the temperature toward your preferred value or set point. Here, “negative” means that the response opposes the deviation from the set point: when the water is hotter than the set point (a positive deviation), the person adjusts the faucet to reduce that deviation.
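The difference between the two kinds of feedback can be made concrete with a small sketch. The function `run_feedback` and all numbers in it (a 5% interest rate, a starting balance of 1000, a shower that starts 6 degrees too hot) are assumptions chosen for illustration. In each step the current deviation from a reference value is fed back into the variable, multiplied by a loop gain: a positive gain amplifies the deviation, a negative gain shrinks it.

```python
def run_feedback(start, loop_gain, reference=0.0, steps=5):
    """Feed the deviation from `reference` back into the variable each step.

    loop_gain > 0: the change reinforces itself (positive feedback).
    loop_gain < 0: the change counteracts the deviation (negative feedback).
    """
    values = [start]
    for _ in range(steps):
        deviation = values[-1] - reference
        values.append(values[-1] + loop_gain * deviation)
    return values


# Positive feedback: interest is added to the balance and earns interest itself.
savings = run_feedback(start=1000.0, loop_gain=0.05)

# Negative feedback: each adjustment removes half of the gap to the set point.
shower = run_feedback(start=44.0, loop_gain=-0.5, reference=38.0)

print([round(v, 2) for v in savings])
print([round(v, 2) for v in shower])
```

In the savings series the yearly increase itself grows from year to year, while in the shower series the gap to the set point of 38 halves at every step.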

If we look back at Figure 4.5 on the Institutional Analysis and Development Framework we can also consider the IAD a systems representation of governance problems. The outcomes of the action situation feed back into the contextual variables or directly into the action situation again. In this chapter we discuss the systems perspective more explicitly since there are a number of related concepts that are increasingly used in sustainability studies.

7.3 Resilience

Humans are part of a system of human-environmental interactions. Humans influence the rest of the system by appropriation of resources (i.e., removing system elements), pollution (i.e., adding system elements), landscape alterations (reconfiguring system elements), etc. There are characteristics of systems that help us to understand how they may evolve over the long term and how they are affected by these human activities.

Consider a young forest where the trees are small and there is sufficient light and nutrients for the trees to grow. The trees in the forest initially grow fast and the forest starts to mature over time. The growth rates of the trees slow down when the trees get bigger, block each other’s sunlight, and compete for nutrients. Add to this idealized description of tree growth the fact that forests cope with many types of disturbances such as pests, forest fires, and tornados, and the situation becomes more complicated. If a forest experiences a fire, why does it cause more damage in some forests than others? How does management of the forest influence the size of forest fires? And if forest fires are a natural phenomenon, shouldn’t we allow them to burn freely? These are major challenges for agencies that manage forests. Since 1944, the U.S. has used an icon named Smokey Bear to promote the suppression of fires. But it is one thing to try to prevent humans from starting fires; it is quite another to suppress all fires.

In fact, due to the suppression of forest fires, forests in many places in the U.S. have built up large fuel loads. This fuel consists of dead wood that is not removed by regular forest fires. This has consequences. When forest fires happen in forests where fuel has accumulated for decades, fires are intense and burn all trees, young and old, and even the soil. As a result, the forest will not recover. This is in stark contrast to forests that have frequent, smaller, lower intensity fires that regularly reduce the fuel load (hence, only small fires can burn). These types of fires do not burn all the trees or harm the soil, so forests can easily recover. Hence, too much suppression of forest fires can reduce the resilience of forests such that they cannot recover from the disturbance of an inevitable fire.

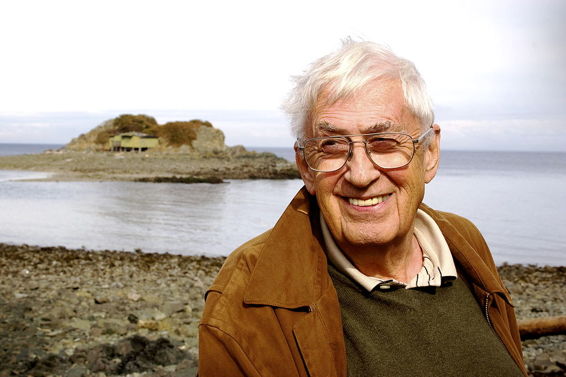

The concept of resilience in ecological systems was first introduced in 1973 by the Canadian ecologist C.S. “Buzz” Holling in order to describe the persistence of natural systems in the face of disturbances such as fires and pest outbreaks (Figure 7.4). A single system can have multiple types of states. For example, a lake can be either clear or green and murky, and a rangeland can have a mix of healthy tall grass with a few trees and shrubs, or it can be covered with noxious weeds. If a system state is resilient, the system remains in that state even if it is exposed to disturbances. If a system state loses its resilience, for example due to fire suppression, decades of nutrient loading in lakes, or overgrazing of a rangeland, it may not be able to recover from even a small disturbance, which would cause it to flip into a very undesirable state.

The concept of resilience can be applied to many ecological systems. As discussed above, ecosystems often have multiple stable states. With the term stable state we refer to a certain configuration of the system (such as a healthy productive ecosystem with a lot of biodiversity) which can cope with variability such as rainfall, storms, droughts, etc. An alternative stable state could be an eroded unproductive ecosystem. But there are limits to the size of the disturbances a system can cope with while in a particular stable state. If the system is in a desired stable state, such as the healthy productive state, we often want to keep it like that. Resilience can be defined as the magnitude of the largest disturbance (e.g., fire, storm, flood, nutrient shock) the system can absorb without transforming into a new state. The problem is that human activities can reduce the resilience of the system and make it vulnerable to smaller and smaller disturbances, such that it flips to another stable state (Figure 7.5). If a system is in an undesirable stable state we may want to restore the ecosystem, but such states might be very difficult to escape. Those undesirable states might be very resilient.
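This definition of resilience can be illustrated with a deliberately simple toy model. The model is an assumption made for this sketch and is not taken from the chapter: the condition of the ecosystem is a number `x` that changes according to dx/dt = x (1 - x)(x - threshold). It has a desired stable state at x = 1, a degraded stable state at x = 0, and a tipping point at `threshold` in between. In this toy model the largest shock the desired state can absorb is simply `1 - threshold`, and human pressure is represented by a higher threshold.

```python
def settle(x, threshold, dt=0.01, steps=10000):
    """Follow dx/dt = x * (1 - x) * (x - threshold) and return the end value."""
    for _ in range(steps):
        x += dt * x * (1.0 - x) * (x - threshold)
    return x


def absorbs(shock, threshold):
    """True if the system returns to the desired state (x = 1) after a shock."""
    return settle(1.0 - shock, threshold) > 0.5


def resilience(threshold):
    """Largest shock the desired state can absorb in this toy model."""
    return 1.0 - threshold


shock = 0.3  # the same disturbance applied to two differently stressed systems
for threshold in (0.2, 0.8):
    outcome = "recovers" if absorbs(shock, threshold) else "flips"
    print(f"threshold {threshold}: resilience {resilience(threshold):.1f}, {outcome}")
```

The same shock of 0.3 is absorbed when the threshold is 0.2 but flips the system when the threshold has moved to 0.8. The degraded state at x = 0 becomes correspondingly harder to leave, which mirrors the point that undesirable states can be very resilient.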

Rangelands, found in arid areas all over the world, are another example of systems with multiple stable states. To illustrate the possible multiple stable states we focus on the example of Australian rangelands. When Europeans came to Australia they started to use rangelands to raise sheep and cattle. Before European settlement, natural grazing pressure was the result of native herbivores such as kangaroos, wallabies, and wombats and was relatively low. When settlers added sheep and cattle to the system, the grazing pressure increased significantly. Moreover, European settlers installed watering points (simple troughs fed by water pipes) in the landscape to provide water for their sheep and cattle. Not only did these watering points benefit the sheep and cattle, they also benefited the kangaroo population which further increased grazing pressure.

In many areas of Australia, the properties (ranches) are very large and the density of grass is very low. As a result, the impacts of overgrazing may not be directly visible in the short run. Figure 7.6a shows an example of a healthy grazing area, which looks quite different compared to the green meadows in Europe. Nevertheless, the farmers made a good living out of this production strategy. But they not only increased the grazing pressure, they also suppressed fire. As a consequence, woody weeds started to flourish (Figure 7.6b), which outcompeted the grass and made the landscape useless for sheep farming. It will take decades before the woody weeds disappear through a natural cycle, and it is too costly (given the size of the properties covering hundreds or even thousands of acres) to remove the weeds mechanically. As a consequence, a significant area of Australia’s grasslands has now flipped from a grass-producing, sheep-supporting landscape into a woody, weed-dominated wasteland.

7.4 Tipping points

In this section, we focus on a specific element of systems with multiple stable states, namely tipping points. How can we explain that systems suddenly change their behavior and change their configuration? Remember that systems consist of components that are connected via feedback. Those feedback relations can change. For example, grass can compete with woody shrubs in rangelands when grass has a particular density. When the ratio of grass to woody shrubs crosses a certain threshold, grass cannot compete anymore, and woody weeds take over. This threshold in the ratio of grass biomass to woody shrubs is the tipping point. Ecological systems are not the only systems that have tipping points. We can find tipping points in social systems as well (e.g., a peaceful protest flips to a violent riot). In his bestselling book, Malcolm Gladwell (2000) uses the example of the New York subway system. When there is a lot of graffiti on the subway vehicles and trash on the ground, people are more likely to be polluters. In the 1980s, the city government started cleaning the subways every day, whenever they noticed new trash and graffiti. In a clean subway car, people are less likely to add new graffiti or throw trash on the ground or on the floor of the subway car. The feedback dynamics have changed, and cleaner subway cars stimulate cleaner behavior, an example of positive feedback.

How do we know we are near a tipping point? This is a critical question, but unfortunately we cannot answer it very well. A tipping point is something we cannot directly observe; it is hidden until we pass it. But if the only way to discover it is to pass it, and the point of discovering it is to not pass it, we are in a bit of a quandary. In fact, we only observe certain features of the system that are the indirect result of getting near a tipping point. One feature we can exploit is the fact that near a tipping point, the system is “balanced” (see Figure 7.7). When the system is balanced, very small disturbances persist for a long time. Imagine a marble on a perfectly flat surface. The tiniest push will make the marble roll for a long time. Imagine, on the other hand, a bowl with steep sides, with the marble resting at the bottom of the bowl. If you give the marble a push away from the bottom, it will return rather quickly and stop moving. Thus, as systems approach tipping points, the rate at which they rebalance themselves after a small disturbance slows down. This is called “critical slowing down” and is one method (albeit an imperfect one) to discover whether a system is nearing a tipping point. Discovering when complex social and ecological systems approach tipping points is extremely difficult, and remains an active area of research.
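The marble picture can be turned into a small calculation. As a rough sketch, assume the marble’s displacement `x` from the bottom of the bowl shrinks according to dx/dt = -steepness * x, where `steepness` stands for how steep the sides of the bowl are. Both the equation and the function name `recovery_time` are assumptions made for illustration. The function measures how long it takes until only 10% of the original push remains.

```python
def recovery_time(steepness, push=1.0, dt=0.01, remaining=0.1):
    """Time until the displacement has shrunk to `remaining` times the push.

    Assumes dx/dt = -steepness * x, integrated with small Euler steps.
    """
    if steepness <= 0:
        raise ValueError("on a flat or tilted-away surface the marble never returns")
    x, elapsed = push, 0.0
    while abs(x) > remaining * push:
        x -= dt * steepness * x
        elapsed += dt
    return elapsed


# A flatter and flatter bowl stands in for a system approaching a tipping point.
for steepness in (2.0, 1.0, 0.5, 0.1):
    print(f"steepness {steepness}: recovery time {recovery_time(steepness):.1f}")
```

As the bowl flattens, the recovery time grows roughly in inverse proportion to the steepness. Measuring how quickly a real system bounces back from small disturbances is the idea behind using critical slowing down as a warning signal, with the caveat from the text that the method is imperfect.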

Along with the increasing use of the term resilience in sustainability debates we also see the use of the term robustness. What is robustness and how does it differ from resilience? The concept of robustness comes from engineering and is used to design systems. For example, engineers develop control systems for airplanes such that the ability of the airplane to fly is “robust” to mechanical failures, turbulence, wind shear, etc. In their design of a robust airplane, engineers include various backup systems to avoid the situation in which the “system” (i.e., the plane and the people in it) flips from cruising at a constant altitude of 30,000 feet (about 9 km) to a free fall. But engineers also know that there are costs related to robustness and tradeoffs need to be made.

Do we build a wall 10 meters high around New Orleans to avoid damages from the next major hurricane? As we design systems to reduce our sensitivity to damages (i.e., to be robust) from disturbances, we have to make choices about what disturbances to consider. To be robust to one type of disturbance may create vulnerabilities to other types of disturbances. For example, utilizing concrete canals instead of earthen canals for irrigation may reduce the number of washouts, but may also reduce the ability of farmers to adapt: in earthen canal systems, farmers can spatially reconfigure water flows using temporary mud walls to deliver water to their fields under variable circumstances.

Engineers argue that systems can be robust, yet fragile. A forest managed with fire suppression, for example, becomes more robust to small fires but more vulnerable to big fires. Hence difficult choices have to be made.

7.5 Managing performance of systems

In our description of the IAD framework we discussed evaluation criteria associated with various outcomes. Interactions between the participants in the action arena lead to particular outcomes. Those outcomes are evaluated somehow. For example, did the participants achieve their goals? Are policy targets reached? Did the interactions lead to a fair allocation of resources? Based on the evaluation of the outcomes, the interactions in the action arena continue, and/or participants learn and change the rules-in-use. This chapter is about systems, and systems are about feedback and control. As such this chapter provides a more general perspective of the IAD framework.

Let’s discuss the example of the IAD framework applied to taking a course (Figure 4.6). The interactions of the participants lead to a grade over the course of the semester. How the grade is calculated is specified in the syllabus. When the professor and the students generate new grade information after an exam, they evaluate this information. This can lead to a continuation of the interactions in the action arena, but may also lead to a change in the attributes of the course participants (some may drop the course, or start studying more), or a change in the rules-in-use (the professor makes adjustments to the course material for the remainder of the course).

An example for natural resources is the use of groundwater. A city may use groundwater to provide its residents and industries with the water they need. The groundwater is replenished when it rains. If in the long term no more water is extracted than is replenished, the groundwater level remains the same. However, a problem in many urban areas is that water demand is increasing rapidly, while the supply of water remains the same. As a result, the groundwater level will decline. If one measures the groundwater level on a regular basis one will observe this decline. How will the city government respond to this decline? At which level of groundwater decline will new policies be implemented (i.e., changes in the rules-in-use)? Will those policies focus on increasing supply or reducing demand? If the city does not respond adequately, residents may revolt against water shortages or higher water prices, and may even leave the city (this may seem an unlikely scenario to many readers, but this actually occurred in the year 2000 in Cochabamba, Bolivia). Every response may generate new fragilities or expose existing hidden ones. For example, reducing water demand may cause problems with the pipes, such as solid waste building up when the flow rate of water through the pipes is reduced. Another example is that importing water, such as bringing water from the Colorado River via canals over hundreds of miles through the desert to the city, makes a city vulnerable to changes in climate in other parts of the country.
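The groundwater example is a feedback loop between a measured level and a policy response, and it can be sketched as a simple yearly bookkeeping exercise. Everything in the sketch is hypothetical: the function `simulate_groundwater`, the units, the recharge and demand figures, the trigger level, and the assumption that the policy halves demand and stops its growth. The aquifer is also assumed to have a fixed capacity, so that surplus recharge does not raise the level above it.

```python
def simulate_groundwater(years, demand_growth, trigger_level=None,
                         capacity=100.0, recharge=10.0, demand=8.0,
                         cutback=0.5):
    """Return the groundwater level at the end of each year.

    All quantities are in arbitrary, assumed units. If trigger_level is
    given, the city changes its rules-in-use once the measured level
    falls below it: demand is cut by `cutback` and stops growing.
    """
    level = capacity
    policy_active = False
    levels = []
    for _ in range(years):
        if trigger_level is not None and not policy_active and level < trigger_level:
            demand *= 1.0 - cutback
            demand_growth = 0.0
            policy_active = True
        level = min(capacity, max(0.0, level + recharge - demand))
        levels.append(level)
        demand *= 1.0 + demand_growth
    return levels


steady = simulate_groundwater(30, demand_growth=0.0)
unmanaged = simulate_groundwater(30, demand_growth=0.05)
managed = simulate_groundwater(30, demand_growth=0.05, trigger_level=80.0)
print(f"steady demand:    final level {steady[-1]:.0f}")
print(f"growing demand:   final level {unmanaged[-1]:.0f}")
print(f"policy at 80:     lowest level {min(managed):.0f}, final level {managed[-1]:.0f}")
```

Choosing a lower `trigger_level` or a smaller `cutback` lets the level fall further before it recovers, which is one way to explore the question of when, and how strongly, a city government should respond to an observed decline.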

Managing a dynamically changing system is difficult. We can control the temperature of our shower at home pretty well, but may burn ourselves if confronted with a different shower in a hotel during travel. What if lots of people are trying to adjust the faucet at the same time? As a thought experiment, consider a bunch of participants in the shower. First, a goal, the desired water temperature, has to be defined through a collective choice process. Will all participants have a say, or are only certain participants in the action arena allowed to define the goals? Suppose there is a common goal. How will information about water temperature be used to adjust the faucet? Not all participants will receive the same feedback since not all participants can be under the showerhead. Do the people who adjust the faucet get reliable information from those who experience the hot water from the shower? This example shows the complexity of controlling a dynamic system when there are different participants who have different goals and positions. The institutional arrangements can enable or hinder the ability of groups to reach long term goals.

Earlier in this chapter, we mentioned that it is very difficult to know when a system is reaching a tipping point. We only know it for sure once we have passed it. How can we manage complex systems if we have incomplete information about the system? Scholars who have studied resilience and robustness of systems come to a number of insights that might be helpful for managing systems:

- Maintain diversity within the social and ecological components of the system. This includes biodiversity, but also institutional diversity. This diversity contains alternative solutions expressed in DNA or institutional arrangements. Avoid monocultures. In agriculture, a crop may be affected at a global scale if all seeds come from the same source and this particular variant becomes vulnerable to a pest. Likewise, we don’t want to have the same institutional arrangements in all jurisdictions. With institutional monocultures we cannot learn how others have addressed a similar problem in a different way.

- Maintain modularity of systems. Nobel Laureate Herbert Simon used the example of the watchmaker to illustrate the importance of modularity. Suppose a watch consists of 1000 parts. One approach to watch design is to assemble all 1000 components in one sitting. If the watchmaker is disturbed or makes an error during the assembly process, she has to start again from scratch. Another design has modules: the watchmaker can assemble the modules, and then put the modules together. If a disturbance happens, the watchmaker only needs to recreate one module. Modules also relate to the governance of social-ecological systems, and therefore we have states, counties and watersheds as units of governance in which new technologies and policies can be experimented with, without impacting the rest of the system.

- Finally, it is important to keep options open. Maintain redundancy, by which we mean that it is important to maintain some breathing space for the system. If everything is organized in a very efficient way, a disturbance could eradicate a keystone species, a charismatic leader, or the one source of revenue. It is important to have some fat in the system so that a disturbance can be absorbed. Have two operators of the energy distribution system so that the system can still continue if one of the operators is sick. Have multiple suppliers of energy so that a cloudy day reducing solar energy will not lead to a blackout of the energy system.
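The watchmaker example in the list above lends itself to a short calculation. The sketch below assumes, purely for illustration, that each time a part is added there is a 1% chance of a disturbance, that a disturbance scraps the unfinished assembly, and that the modular design groups parts into modules of 10. Only the figure of 1000 parts comes from the text; the function names are hypothetical. The expected number of placements needed to get `n` undisturbed placements in a row is ((1 - p)^(-n) - 1) / p, a standard result for runs of consecutive successes.

```python
def expected_placements(parts, p_disturb):
    """Expected placements needed to complete `parts` placements in a row,
    when each placement is disturbed with probability p_disturb and a
    disturbance scraps the unfinished assembly."""
    keep = 1.0 - p_disturb
    return (keep ** -parts - 1.0) / p_disturb


def count_assemblies(parts, module_size):
    """Number of assemblies in a fully modular design.

    Assumes `parts` is a power of `module_size` (1000 parts, modules of 10).
    """
    assemblies, units = 0, parts
    while units > 1:
        units //= module_size
        assemblies += units
    return assemblies


p_disturb = 0.01  # assumed chance of a disturbance per placement
one_sitting = expected_placements(1000, p_disturb)
modular = count_assemblies(1000, 10) * expected_placements(10, p_disturb)
print(f"one sitting: about {one_sitting:,.0f} placements")
print(f"modular:     about {modular:,.0f} placements")
```

Under these assumptions the modular watch needs 111 small assemblies and only slightly more than 1000 placements on average, whereas assembling everything in one sitting is expected to take millions of placements because almost every attempt is scrapped before it is finished.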

Managing the performance of a system is very hard. It requires practice, continuous learning, and maintaining diversity, modularity and redundancy. In fact, there is a large, very well-developed technical field called control theory that focuses on how to use feedbacks to manage systems, which we will take up in the next chapter. To close this chapter, we relate the concepts of resilience and robustness back to sustainability.

7.6 Resilience, robustness, and sustainability

In recent years the concepts of resilience and robustness have been increasingly used in the debate about sustainability. How do they relate to each other? Sustainability refers to a goal one aims to achieve. Sustainability guides the discourse on the interaction between human societies and the environment. There are many dimensions of sustainability, ranging from avoiding depletion of natural resources and avoiding inequality to improving quality of life for everyone and striving for a just society. Resilience and robustness ideas can be used to define system properties that may help decision-makers to achieve sustainability. Robustness focuses on feedback systems with clearly defined boundaries. Robustness comes from engineering and robust-control systems. It can be used to address questions about how to control a system to reach a target, such as sustainability. Robustness enables us to think about decision making, which information to use, how fast to respond to changes, and to think about trade-offs in decisions to be robust to certain shocks but not to others.

Resilience provides a framework to think about how multiple systems, each operating at their characteristic temporal and spatial scales, interact across scales. Human decision making can affect the resilience of a system by changing the shape of a particular stability domain. This can be intentional, for example when the goal is to catalyze a transformation from a fossil fuel economy towards a solar powered economy. Hence, resilience of a system in a particular stability domain is not always desirable, and human activities can shape the long term dynamics of the system.

7.7 Critical reflections

With a systems perspective we consider the components of the system and their dynamic interactions. The IAD framework we discuss in this book is a systems perspective of human behavior, institutions, and the environment in which they are embedded. Systems also have characteristics such as resilience and tipping points, which we can observe in social as well as ecological systems.

7.8 Make yourself think

1. The next time you take a shower, reflect on your ability to control the temperature.

2. Do you know an example of a resilient system?

3. What would be an alternative outcome for that system?

7.9 References

Anderies, J. M., Folke, C., Walker, B., & Ostrom, E. (2013). Aligning key concepts for global change policy: robustness, resilience, and sustainability. Ecology and Society, 18(2), 8.

Gladwell, M. (2006). The tipping point: how little things can make a big difference. Little, Brown.

Holling, C. S. (1973). Resilience and stability of ecological systems. Annual Review of Ecology and Systematics, 4, 1–23.

Simon, H. A. (1996). The sciences of the artificial. MIT Press.

Walker, B. & Salt, D. (2012). Resilience thinking: sustaining ecosystems and people in a changing world. Island Press.