Revolutionizing Testing: The Impact of Computer Adaptive Testing on Accessibility and Equity in Education

Roman Naghshi

Introduction

Context/Background to the Research

Traditional testing environments often fail to accommodate the diverse needs of students, creating significant accessibility challenges for students with learning disabilities, those from different cultural backgrounds, and individuals with physical impairments. Traditional tests also tend to induce high levels of stress and anxiety, which further harm student performance (Heissel et al., 2019, p. 47). The disparity in academic achievement between students with and without disabilities highlights the urgent need for more inclusive and adaptive testing methods (Newman et al., 2011, p. 48).

Topic Clarification

This research focuses on Computer Adaptive Testing (CAT) as a potential solution to the accessibility issues inherent in traditional testing environments. CAT dynamically adjusts the difficulty of test questions in real time based on the test taker’s performance, offering a personalized assessment experience. This method aims to provide a more accurate measure of a student’s knowledge and skills, catering to their unique needs and reducing the barriers associated with traditional testing formats (Weiss, 2011, pp. 10-11).

Thesis

The central thesis of this research is that Computer Adaptive Testing (CAT) significantly enhances accessibility and equity in educational assessments by providing a tailored testing experience that accommodates diverse learning needs, reduces test-related stress, and maintains academic rigor.

Road Map

This essay will first explore the various accessibility issues prevalent in traditional testing environments, highlighting their impact on student performance and outcomes. Next, it will delve into how CAT addresses these issues through its adaptive nature, multimodal support, and strategies for maintaining academic rigor (Sireci & Zenisky, 2006, p. 329). Following this, the discussion will cover the challenges and limitations of implementing CAT and propose mitigation strategies to overcome these hurdles. Finally, the essay will look ahead to future directions and innovations in CAT, emphasizing the potential for integrating advanced technologies to further enhance its effectiveness and inclusivity.

Accessibility Issues in Traditional Testing

Traditional testing environments often fail to address the diverse accessibility needs of students, leading to significant challenges for many. Students with learning disabilities, for example, may struggle with the standardized format of traditional tests that do not accommodate their specific needs, such as extended time or alternative formats. Those from different ethnic backgrounds might encounter cultural biases in test questions, which can disadvantage them if the content is not reflective of their experiences and knowledge. Diverse learning preferences also pose a challenge, as traditional tests typically cater to a narrow range of skills, such as rote memorization and written expression, rather than incorporating various ways of demonstrating understanding.

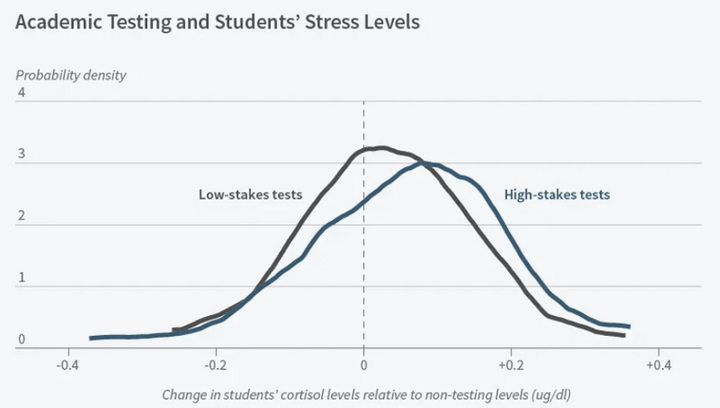

Physical disabilities add another layer of complexity, as students with mobility, visual, or hearing impairments may face difficulties in accessing test materials or environments designed without their needs in mind. Additionally, stress and anxiety are pervasive issues, exacerbated by high-stakes testing. In a study of students from a charter school network in New Orleans, levels of cortisol, a stress hormone, were higher during high-stakes exams than during low-stakes ones (Heissel et al., 2019). This heightened stress can impair cognitive function, negatively impacting performance and outcomes (see Figure 1).

Figure 1

Changes in students’ cortisol levels during low-stakes and high-stakes tests.

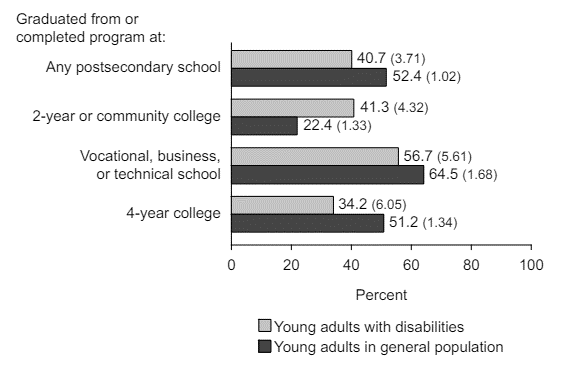

The disparity in graduation and completion rates for students with disabilities (SWDs) further illustrates the impact of inadequate accessibility. At four-year colleges, the completion rate for SWDs is 34%, significantly lower than the 51% completion rate for their non-disabled peers. Across all postsecondary institutions, the completion rate for SWDs is 41% compared to 52% for non-disabled students (Newman et al., 2011, p. 47). This gap is often due to universities not providing the necessary accommodations and support, leading to higher dropout rates among SWDs (see Figure 2).

Figure 2

Completion rates of students with disabilities from current or most recently attended postsecondary school.
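The size of these gaps can be made explicit with a short calculation. The sketch below uses only the four completion rates quoted above; the function name `completion_gap` and the dictionary layout are illustrative assumptions, not part of the cited report.

```python
# Completion rates quoted in the text (Newman et al., 2011), in percent.
rates = {
    "four-year colleges": {"swd": 34, "peers": 51},
    "all postsecondary institutions": {"swd": 41, "peers": 52},
}


def completion_gap(swd, peers):
    """Return (gap in percentage points, SWD rate as a share of the peer rate)."""
    return peers - swd, swd / peers


for setting, r in rates.items():
    gap, ratio = completion_gap(r["swd"], r["peers"])
    print(f"{setting}: {gap}-point gap; SWD rate is {ratio:.0%} of the peer rate")
```

On these figures the gap is 17 percentage points at four-year colleges and 11 percentage points across all postsecondary institutions.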

Traditional testing’s impact on student performance extends beyond stress and accessibility issues. For instance, socioeconomic factors also play a significant role. Research indicates that cortical thickness, which correlates with academic achievement test scores, differs between higher- and lower-income students (Mackey et al., 2015). Lower-income students, defined as those from families earning less than $42,000 per year (Mackey et al., 2015, p. 5), show differences in brain regions related to vision and knowledge storage. These neuroanatomical disparities, influenced by environmental factors, further disadvantage lower-income students in traditional testing scenarios, exacerbating the income-achievement gap.

In summary, traditional testing environments are rife with accessibility issues that disproportionately affect students with disabilities, those from diverse backgrounds, and those experiencing high levels of stress and anxiety. These challenges contribute to lower performance and completion rates, highlighting the urgent need for more inclusive and adaptive testing methods that cater to the diverse needs of all students.

How CAT Addresses Accessibility Issues

Adaptive Nature of CAT

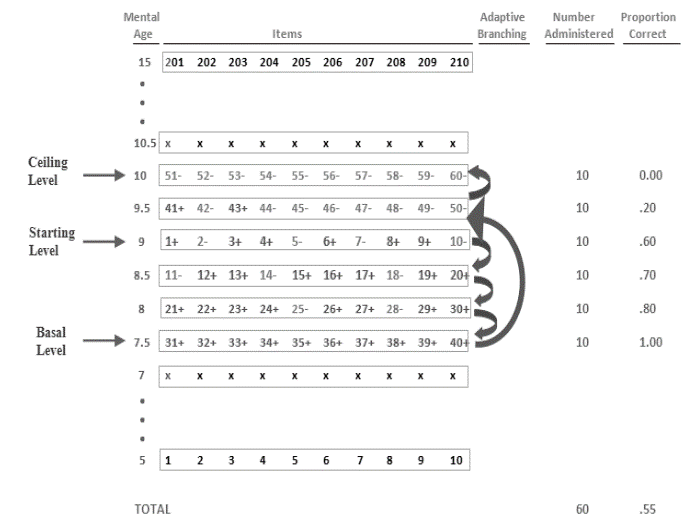

Computer Adaptive Testing (CAT) represents a significant advancement in the field of educational assessment by adapting to the test-taker’s ability level in real time. The difficulty of each subsequent question is determined by the student’s performance on previous questions. For instance, if a student answers a question correctly, the next question will be more challenging. Conversely, if a student answers incorrectly, the subsequent question will be easier. This real-time adjustment helps to keep the student engaged and reduces the frustration that can arise from questions that are either too difficult or too easy (Weiss, 2011). The approach follows the logic of the adaptive test created by Alfred Binet in 1905 (see Figure 3).

Figure 3

Schematic Representation of a Binet Adaptive Test
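The up-and-down rule described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not an operational CAT algorithm: the 1-to-10 difficulty scale, the starting level of 5, the one-level step, and the function names are all hypothetical, and operational CAT systems generally select items with statistical models rather than a fixed step.

```python
def next_difficulty(current, was_correct, lowest=1, highest=10):
    """Move one level up after a correct answer and one level down after a miss.

    The result is clamped to the (assumed) difficulty scale lowest..highest.
    """
    step = 1 if was_correct else -1
    return max(lowest, min(highest, current + step))


def difficulty_path(responses, start=5, lowest=1, highest=10):
    """Return the starting level followed by the level chosen after each response."""
    path = [start]
    for was_correct in responses:
        path.append(next_difficulty(path[-1], was_correct, lowest, highest))
    return path


# Two correct answers, one miss, then another correct answer.
print(difficulty_path([True, True, False, True]))
```

For this response pattern the levels run 5, 6, 7, 6, 7: the test climbs while the student succeeds and steps back after the miss, so the questions stay close to the student's demonstrated level.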

By tailoring the difficulty of the questions to the individual’s ability, CAT can provide a more accurate assessment of a student’s knowledge and skills. Traditional fixed-form tests, where all students receive the same set of questions, often fail to capture the full range of a student’s abilities. High-achieving students might find the test too easy and not fully demonstrate their knowledge, while lower-achieving students might struggle and become discouraged. CAT, by contrast, creates a customized testing experience that can better pinpoint a student’s true level of understanding and competency (Wainer, 2000, pp. 10-11).

Multimodal Support

One of the most promising aspects of CAT is its ability to incorporate multimodal support, which enhances accessibility for students with diverse learning needs. CAT can present questions using videos, images, audio, and other interactive elements, making the assessment more engaging and comprehensible (Sireci & Zenisky, 2006, p. 329). For example, a question could be presented through a short video clip, followed by a series of related questions. This not only aids comprehension but also caters to students who might have difficulty with text-based questions. Additionally, audio support can be provided for students with visual impairments or reading difficulties, allowing them to hear the questions read aloud (Thurlow et al., 2010, p. 3). Furthermore, CAT can offer multiple methods for students to express their answers. Students could type their responses, speak them aloud using speech recognition technology, or select answers through interactive touchscreen interfaces. This flexibility is particularly beneficial for students with physical disabilities, learning disabilities, or language barriers, ensuring that they can demonstrate their knowledge without being hindered by the format of the test.

Maintaining Academic Rigor

While CAT provides support and accommodations to enhance accessibility, it also maintains academic rigor by ensuring that the core content and difficulty of the questions remain challenging and appropriate for the test’s objectives. CAT systems are designed to reframe questions or provide assistance without diluting the academic standards.

For example, if a student is struggling with a complex math problem, the CAT system might offer a simpler version of the problem that still assesses the same underlying concept. Alternatively, the system might provide hints or scaffolding, such as breaking the problem into smaller, more manageable steps. This approach helps students to engage with the material and develop their problem-solving skills without compromising the test’s integrity. These adaptive strategies align with the general principles of maintaining academic rigor in adaptive testing.

A hypothetical scenario illustrating this process could involve a history exam where a student is asked to analyze the causes of a significant historical event. If the student struggles with the open-ended question, the CAT system might offer multiple-choice questions focusing on specific aspects of the event, gradually building up to the more complex analysis. This method ensures that the student remains challenged and engaged while receiving the necessary support to succeed. The use of innovative item formats, such as simulations and interactive tasks, supports this adaptive and supportive approach.

By integrating adaptability, multimodal support, and strategies to maintain academic rigor, CAT not only addresses accessibility issues but also enhances the overall quality and effectiveness of educational assessments. These features make CAT a powerful tool for creating more equitable and accurate evaluations of student learning.

Discussion

Challenges and Limitations of CAT

Despite the numerous benefits of Computer Adaptive Testing (CAT), several challenges and limitations need to be addressed for its successful implementation. One primary challenge is the technological infrastructure required for CAT. Implementing CAT necessitates reliable computer systems and internet connectivity, which might not be available in all educational institutions, especially in underfunded or rural areas. The digital divide can exacerbate existing educational inequalities (Thurlow et al., 2010, p. 2).

Another significant limitation is the potential for test security issues. As CAT delivers a unique set of questions to each test-taker, there is a need for a large item pool to ensure test integrity. Developing and maintaining this item pool can be resource-intensive. Additionally, the adaptive nature of CAT might make it easier for students to share specific test items, potentially compromising the test’s fairness and validity.
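One way to see why the item pool matters is to track how often each item is shown. The sketch below is a hedged illustration only: the log format (one list of item IDs per test-taker), the 0.5 ceiling, and the function names `exposure_rates` and `overexposed` are assumptions made for this example, not features of any particular CAT system.

```python
from collections import Counter


def exposure_rates(administrations):
    """Share of test-takers who saw each item.

    `administrations` is an assumed log format: one list of item IDs per test-taker.
    """
    total = len(administrations)
    if total == 0:
        return {}
    counts = Counter(item for items in administrations for item in set(items))
    return {item: seen / total for item, seen in counts.items()}


def overexposed(administrations, max_rate=0.5):
    """Item IDs whose exposure rate exceeds the assumed ceiling, sorted by ID."""
    rates = exposure_rates(administrations)
    return sorted(item for item, rate in rates.items() if rate > max_rate)


# Hypothetical logs for four test-takers drawing from a very small pool.
logs = [["A", "B", "C"], ["A", "D", "E"], ["A", "B", "F"], ["G", "H", "A"]]
print(overexposed(logs))
```

In this toy log, item A appears in every administration and is the only one flagged. With a small pool, a few items end up being seen by most test-takers, which is the situation a large pool is meant to avoid.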

There is also the challenge of ensuring the validity and reliability of CAT. The adaptive algorithm must be carefully calibrated to ensure that it accurately assesses a student’s abilities without introducing biases. Any flaws in the algorithm can lead to inaccurate assessments and affect the validity of the test results (Wainer, 2000, p. 16).

Mitigation Strategies

To mitigate these challenges, several strategies can be employed. Investing in technological infrastructure is crucial. Educational institutions can seek funding and partnerships with technology companies to improve access to necessary hardware and software. Additionally, developing offline versions of CAT can help bridge the gap in areas with limited internet connectivity (Thurlow et al., 2010, p. 4).

To address security concerns, maintaining a robust and secure item pool is essential. This can be achieved by continually developing new test items and employing advanced encryption methods to protect test content. Regular training for educators and administrators on maintaining test security can also help minimize risks (Weiss, 2011).

Ensuring the validity and reliability of CAT requires ongoing research and calibration of the adaptive algorithms. This includes conducting pilot tests, gathering feedback from test-takers, and using statistical methods to identify and correct any biases. Collaborative efforts between psychometricians, educators, and technology experts are necessary to refine the testing process continually.
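As a rough illustration of what a first statistical check for bias might look like, the sketch below compares the proportion of correct answers on each item between two groups and flags large gaps. Everything here is assumed for the example: the data layout, the group labels, the 0.15 threshold, and the function names. A raw gap does not control for differences in ability between the groups, so a flag from this kind of screen is only a prompt for closer psychometric review, not evidence of bias.

```python
def proportion_correct(scores):
    """Proportion of 1s in a list of 0/1 item scores."""
    return sum(scores) / len(scores)


def flag_items(scores_by_item, threshold=0.15):
    """Return items whose between-group gap in proportion correct exceeds the threshold.

    `scores_by_item` maps an item ID to {"group_a": [...], "group_b": [...]},
    where each list holds 0/1 scores. The layout and threshold are assumptions.
    """
    flagged = {}
    for item, groups in scores_by_item.items():
        gap = proportion_correct(groups["group_a"]) - proportion_correct(groups["group_b"])
        if abs(gap) > threshold:
            flagged[item] = round(gap, 2)
    return flagged


# Hypothetical pilot-test scores for two items.
pilot = {
    "q1": {"group_a": [1, 1, 1, 0], "group_b": [1, 0, 0, 0]},
    "q2": {"group_a": [1, 0, 1, 0], "group_b": [1, 1, 0, 0]},
}
print(flag_items(pilot))
```

Here only q1 is flagged, with a gap of 0.5 in favor of group A, while q2 shows no gap.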

Future Directions and Innovations in CAT

Looking ahead, several advancements and innovations in CAT technology hold the promise of further enhancing its effectiveness and accessibility. One such advancement is the integration of artificial intelligence (AI) and machine learning algorithms, particularly in light of the upcoming release of ChatGPT Edu (OpenAI, 2024). These technologies can provide more sophisticated adaptations, better predicting and responding to individual test-takers’ needs in real time. Additionally, there is potential to explore how CAT can be integrated with other educational technologies, such as learning management systems (LMS), to create a seamless and comprehensive assessment experience.

Another promising direction is the development of more immersive and interactive testing environments. Virtual reality (VR) and augmented reality (AR) can create engaging, realistic scenarios for assessments, particularly in fields requiring practical skills. For example, medical students could use VR to perform virtual surgeries, providing a more accurate measure of their competencies (Zhu et al., 2015). This not only enhances the realism of the assessments but also allows for safe, controlled practice environments where students can hone their skills without the risk associated with real-life procedures.

Research is also underway to make CAT more inclusive. Advances in natural language processing (NLP) can help develop more accessible tests for students with disabilities. For instance, AI-driven speech recognition (Thurlow et al., 2010) can allow students to respond verbally to test questions, and NLP can ensure that the test content is understandable to students with diverse linguistic backgrounds.

Conclusion

This research has argued that Computer Adaptive Testing (CAT) significantly enhances accessibility and equity in educational assessments by providing a tailored testing experience that accommodates diverse learning needs, reduces test-related stress, and maintains academic rigor. The essay first examined the accessibility issues prevalent in traditional testing environments, such as difficulties faced by students with learning disabilities, cultural biases, and high levels of stress (Heissel et al., 2019), and highlighted how these issues negatively impact student performance and outcomes (Newman et al., 2011). It then explored how CAT addresses these challenges through its adaptive nature, multimodal support, and strategies for maintaining academic rigor (Weiss, 2011). The adaptive approach of CAT ensures that each test is tailored to the individual’s ability level, while multimodal support accommodates different learning styles and needs (Sireci & Zenisky, 2006). Finally, the essay discussed the challenges and limitations of implementing CAT, proposed mitigation strategies to overcome these hurdles, and outlined future directions for integrating advanced technologies to further enhance CAT’s effectiveness and inclusivity.

CAT represents a significant advancement in educational assessments by creating more inclusive, engaging, and accurate evaluations of student learning. By addressing the diverse needs of students, it helps to level the playing field and promotes educational equity. The relevance of CAT is underscored by its ability to reduce the stress and anxiety associated with high-stakes testing, making the testing experience more positive and supportive for all students (Heissel et al., 2019). With the advent of generative AI and its transformative capabilities, there are abundant opportunities for further research and development in CAT. The power of generative AI can be harnessed to create even more sophisticated adaptive algorithms that provide real-time adjustments tailored to the needs of each test-taker. Efforts should be focused on integrating AI-driven technologies with CAT to develop more immersive and interactive testing environments.

References

Drasgow, F., & Mattern, K. (2006). New tests and new items: Opportunities and issues. In D. Bartram & R. K. Hambleton (Eds.), Computer-based testing and the internet: Issues and advances (pp. 57-75). John Wiley & Sons.

Heissel, J. A., Adam, E. K., Doleac, J. L., Figlio, D. N., & Meer, J. (2019). Test-related stress and student scores on high-stakes exams. NBER Digest. https://www.nber.org/digest/mar19/test-related-stress-and-student-scores-high-stakes-exams

Mackey, A. P., Finn, A. S., Leonard, J. A., Jacoby-Senghor, D. S., West, M. R., Gabrieli, C. F. O., & Gabrieli, J. D. E. (2015). Neuroanatomical correlates of the income-achievement gap. Psychological Science. https://doi.org/10.1177/0956797615572233

National Academies of Sciences, Engineering, and Medicine. (1997). Educating one and all: Students with disabilities and standards-based reform. The National Academies Press. https://doi.org/10.17226/5788

Newman, L., Wagner, M., Knokey, A.-M., Marder, C., Nagle, K., Shaver, D., Wei, X., Cameto, R., Contreras, E., Ferguson, K., Greene, S., & Schwarting, M. (2011). The post-high school outcomes of young adults with disabilities up to 8 years after high school. A report from the National Longitudinal Transition Study-2 (NLTS2) (NCSER 2011-3005). SRI International. www.nlts2.org/reports/

OpenAI. (2024, May 30). Introducing ChatGPT Edu. https://openai.com/index/introducing-chatgpt-edu/

Sireci, S. G., & Zenisky, A. L. (2006). Innovative item formats in computer-based testing: In pursuit of improved construct representation. In S. G. Sireci & B. C. Clauser (Eds.), Handbook of test development (pp. 329-345). https://jeromedelisle.org/assets/docs/handbook_of_test_development_1_edition.261113437.pdf

Thompson, N. A., & Weiss, D. J. (2011). A framework for the development of computerized adaptive tests. Practical Assessment, Research, and Evaluation, 16(1), Article 1. https://doi.org/10.7275/wqzt-9427

Thurlow, M., Lazarus, S. S., Albus, D., & Hodgson, J. (2010). Computer-based testing: Practices and considerations (Synthesis Report 78). University of Minnesota, National Center on Educational Outcomes.

Wainer, H. (2000). Computerized adaptive testing: A primer (2nd ed.). Lawrence Erlbaum Associates.

Weiss, D. J. (2011). Better data from better measurements using computerized adaptive testing. Journal of Methods and Measurement in the Social Sciences, 2(1), 1-27. https://journals.librarypublishing.arizona.edu/jmmss/article/769/galley/764/view/

Zhu, E., Lilienthal, A., Shluzas, L. A., Masiello, I., & Zary, N. (2015). Design of mobile augmented reality in health care education: A theory-driven framework. JMIR Medical Education, 1(2), e10. https://doi.org/10.2196/mededu.4443