Managing Learning

15 Learning Analytics and Educational Data Mining

Azim Roussanaly; Anne Boyer; and Jiajun Pan

What are learning analytics?

More and more organisations use data analysis to solve problems and improve decisions related to their activities. Education is no exception: with the widespread adoption of virtual learning environments (VLE) and learning management systems (LMS), massive amounts of learning data are now available, generated by learners’ interactions with these tools.

Learning Analytics (LA) is a disciplinary field defined as “the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimising learning and the environments in which it occurs”⁴.

Four types of analytics are generally distinguished, according to the question to be answered:

- Descriptive Analytics: What happened in the past?

- Diagnostic Analytics: Why did something happen in the past?

- Predictive Analytics: What is most likely to happen in the future?

- Prescriptive Analytics: What actions should be taken to affect those outcomes?

What is it?

LA-based tools range from visualisation to recommendation systems. Research in this area is very active, so we limit ourselves to summarising the issues most frequently encountered in the literature. Each issue leads to families of tools that mainly target learners or teachers, who represent most of the end users of LA-based applications.

Predict and enhance students’ learning outcomes

One of the emblematic applications of LA is the prediction of failures.

Learning indicators are automatically computed from the digital traces and can be accessed directly by learners so that they can adapt their own learning strategies.

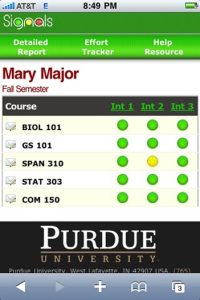

One of the first experiments was conducted at Purdue University (USA) with a mobile application designed as a traffic-light-based dashboard¹.

Each student can monitor his or her own progress indicators.

A screenshot of the dashboard is shown in figure 1.
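To make the idea of a traffic-light indicator concrete, here is a minimal sketch of how a status colour could be derived from a few learning indicators. It is not the algorithm used at Purdue: the `Indicators` fields, the thresholds and the `traffic_light` function are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Indicators:
    """Hypothetical per-student indicators computed from VLE/LMS traces."""
    average_grade: float     # current average, on a 0-100 scale
    logins_last_week: int    # number of platform sessions in the past week
    submitted_ratio: float   # share of due assignments submitted, 0.0-1.0


def traffic_light(ind: Indicators) -> str:
    """Return 'green', 'amber' or 'red' using illustrative thresholds."""
    warnings = 0
    if ind.average_grade < 50:      # assumed pass mark
        warnings += 1
    if ind.logins_last_week < 2:    # assumed minimum activity
        warnings += 1
    if ind.submitted_ratio < 0.7:   # assumed minimum submission rate
        warnings += 1

    if warnings == 0:
        return "green"
    if warnings == 1:
        return "amber"
    return "red"


if __name__ == "__main__":
    print(traffic_light(Indicators(72.0, 5, 0.9)))
    print(traffic_light(Indicators(45.0, 4, 0.8)))
    print(traffic_light(Indicators(40.0, 0, 0.5)))
```

A real dashboard would typically replace these hand-set thresholds with a model fitted on historical data, but the principle of reducing several indicators to one easily readable signal stays the same.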

Indicators can also be addressed to teachers, as in an early warning system (EWS).

This is the choice made by the French National Centre for Distance Education (CNED) in an ongoing study².

The objective of an EWS is to alert, as soon as possible, those tutors responsible for monitoring the students, in order to implement appropriate remedial actions.
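The alerting logic of an EWS can be sketched as follows. This is an illustrative example, not the method used in the CNED study: it assumes that some predictive model already produces a weekly risk score between 0 and 1 for each student, and the function names, the threshold and the "two consecutive weeks" rule are hypothetical choices.

```python
from typing import Dict, List, Optional


def first_alert_week(weekly_risk: List[float], threshold: float = 0.6,
                     consecutive: int = 2) -> Optional[int]:
    """Return the 1-based week in which an alert would be raised, or None.

    An alert is raised once the risk score has been at or above `threshold`
    for `consecutive` weeks in a row, so that a single bad week does not
    trigger an alert on its own.
    """
    streak = 0
    for week, risk in enumerate(weekly_risk, start=1):
        streak = streak + 1 if risk >= threshold else 0
        if streak >= consecutive:
            return week
    return None


def tutor_alerts(cohort: Dict[str, List[float]]) -> Dict[str, int]:
    """Map each at-risk student to the week in which the tutor is alerted."""
    alerts = {}
    for student, scores in cohort.items():
        week = first_alert_week(scores)
        if week is not None:
            alerts[student] = week
    return alerts


if __name__ == "__main__":
    cohort = {
        "student_a": [0.2, 0.7, 0.8, 0.9],
        "student_b": [0.1, 0.7, 0.3, 0.2],
    }
    print(tutor_alerts(cohort))
```

The design trade-off is between earliness and reliability: lowering `threshold` or `consecutive` alerts tutors sooner but produces more false alarms.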

Analyse the student learning process

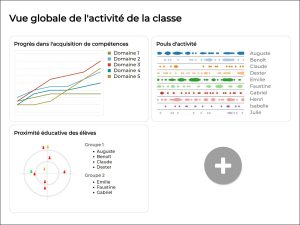

LA techniques can help to model the learning behaviour of a learner or a group of learners (i.e. a class). The model can be used to display learning processes in LA applications, giving teachers additional information with which to detect deficiencies and improve training materials and methods. Moreover, analysing the learning process is a way to observe learner engagement. For example, in the e-FRAN METAL project, the indicators were brought together in a dashboard co-designed with a team of secondary-school teachers, as shown in figure 2³.

Personalise learning paths

The personalisation of learning paths can occur in recommendation systems or in adaptive learning systems. Recommendation systems aim to suggest, to each learner, the best resources or the appropriate behaviour to help achieve educational objectives.

Some systems put the teacher in the loop by first submitting the proposed recommendations to them for validation. Adaptive learning systems allow the learner to develop skills and knowledge in a more personalised and self-paced way by constantly adjusting the learning path to the learner’s experience.
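As an illustration of a recommendation step with the teacher in the loop, the sketch below ranks resources by the number of not-yet-mastered skills they cover and then keeps only those a teacher approves. The skill tags, the resource names and the functions `recommend` and `validated` are hypothetical; real systems generally rely on richer learner models.

```python
from typing import Callable, Dict, List, Set


def recommend(mastered: Set[str], resources: Dict[str, Set[str]],
              top_n: int = 2) -> List[str]:
    """Rank resources by how many not-yet-mastered skills they cover."""
    scored = []
    for name, skills in resources.items():
        gain = len(skills - mastered)
        if gain > 0:  # skip resources that teach nothing new
            scored.append((gain, name))
    # Highest gain first; ties broken alphabetically for reproducibility.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [name for _, name in scored[:top_n]]


def validated(proposals: List[str],
              approve: Callable[[str], bool]) -> List[str]:
    """Keep only the proposals that the teacher approves."""
    return [name for name in proposals if approve(name)]


if __name__ == "__main__":
    resources = {
        "video_fractions": {"fractions"},
        "quiz_mixed": {"fractions", "decimals"},
        "text_addition": {"addition"},
    }
    proposals = recommend({"addition"}, resources)
    # Here the teacher's decision is simulated by a simple rule.
    print(validated(proposals, lambda name: name != "video_fractions"))
```

An adaptive learning system would run a step like `recommend` repeatedly, updating the set of mastered skills after each activity so that the learning path follows the learner's progress.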

Does it work?

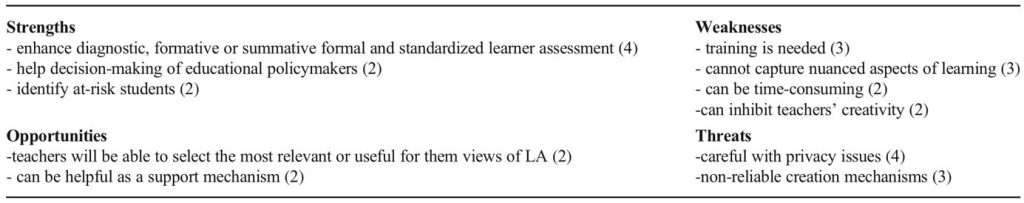

Published feedback focuses mainly on students, including those in higher education. Observations generally show improved learner performance (for example, +10% of grades A and B at Purdue University, US). For teachers, the impact of LA is more complex to assess. Studies based on the technology acceptance model (TAM) suggest that teachers have a positive perception of the use of LA tools. One such study concludes with a Strengths, Weaknesses, Opportunities and Threats (SWOT) analysis, which we reproduce here⁵ (see figure 3).

Some points of attention, listed in the threats and weaknesses sections, form the basis of the reflection that led the Society for Learning Analytics Research (SoLAR) community to recommend an ethics-by-design approach for LA applications (Drachsler and Greller, 2016). The recommendations are summarised in a checklist of eight key words: Determine, Explain, Legitimate, Involve, Consent, Anonymise, Technical, External (DELICATE).

1 Arnold, K. and Pistilli, M., Course signals at Purdue: Using learning analytics to increase student success, LAK2012, ACM International Conference Proceeding Series, 2012.

2 Ben Soussia, A., Roussanaly, A., Boyer, A., Toward An Early Risk Alert In A Distance Learning Context, ICALT, 2022.

3 Brun, A., Bonnin, G., Castagnos, S., Roussanaly, A., Boyer, A., Learning Analytics Made in France: The METAL project, IJILT, 2019.

4 Long, P., and Siemens, G., 1st International Conference on Learning Analytics and Knowledge, Banff, Alberta, February 27–March 1, 2011.

5 Mavroudi, A., Teachers’ Views Regarding Learning Analytics Usage Based on the Technology Acceptance Model, TechTrends. 65, 2021.