Managing Learning

17 AI-Speak: Data-based Systems, part 2

The design and implementation of a data-centred project can be broken down into six steps. There is a lot of back and forth between the steps, and the whole process may need to be repeated multiple times to get it right.

To be effective in the classroom, multi-disciplinary teams of teachers, pedagogical experts and computer scientists should be involved in each step of the process1. Human experts are needed to identify the need and design the process, to design and prepare the data, to select ML algorithms, to critically interpret the results and to plan how to use the application2.

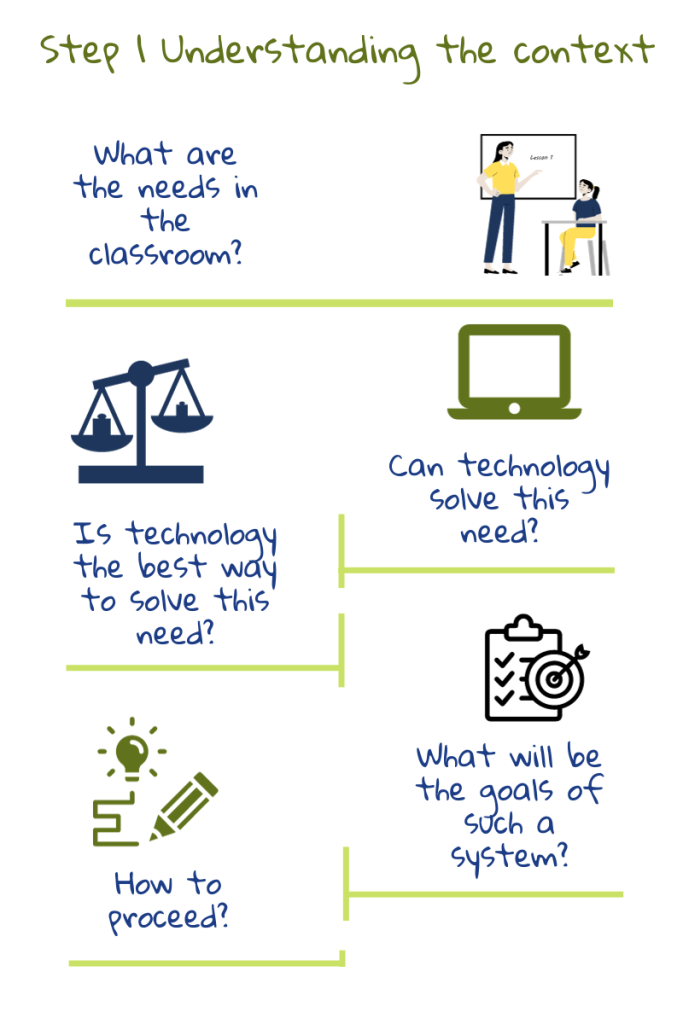

1) Understanding the educational context

The first step in the design of an AIED tool is to understand the needs in the classroom. Once the goals are set, the team has to work out how they can be achieved: what factors to consider and what to ignore. Any data-based solution is biased towards phenomena that can be easily calculated and standardised3. Thus, each decision has to be discussed by teachers who will use the tool, by experts in pedagogy who can ensure that all decisions are grounded in proven theory, and by computer scientists who understand how the algorithms work.

There is a lot of back and forth between the first two steps, since what is possible also depends on what data is available2. Moreover, the design of educational tools is subject to laws which place restrictions on data usage and on the types of algorithms that can be used.

2) Understanding the data

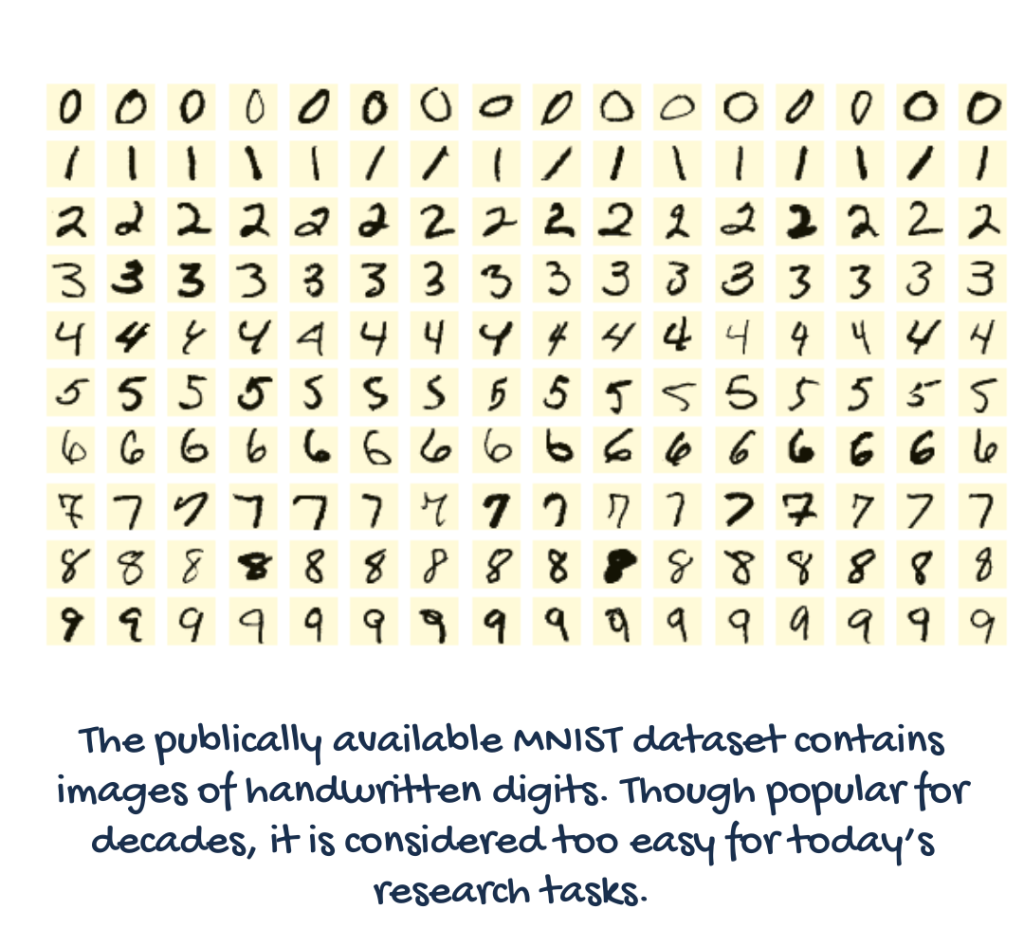

Once the goals and contributing factors are identified, the focus shifts to what data is required, how it will be sourced and labelled, how privacy will be handled and how data quality will be measured3. For a machine learning application to be successful, the datasets have to be large enough, diverse and well-labelled.

Machine learning requires data to train the model and data to work on or use for predictions. Some ML tasks, such as face and object recognition, already have many private and public databases available for training.

If existing datasets are not available in a usable form, they may have to be extended or relabelled to fit the needs of the project. Where no suitable dataset exists, dedicated datasets may have to be created and labelled from scratch. Digital traces generated by the student while using an application could also be used as one of the data sources.

In any case, data and features relevant to the problem have to be carefully identified2. Irrelevant or redundant features can push an algorithm into finding false patterns and affect the performance of the system2. Since the machine can find patterns only in the data given to it, choosing the dataset also implicitly defines what the problem is4. If there is a lot of data available, a subset has to be selected with the help of statistical techniques and the data verified to avoid errors and biases.
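One simple way to spot redundant features is to look for pairs that are almost perfectly correlated. The sketch below is only an illustration: the feature names, the values and the 0.95 limit are all invented, and correlation is just one of many possible statistical checks. It does not detect irrelevant features, which still need human judgement.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def redundant_pairs(features, limit=0.95):
    """Return pairs of feature names whose absolute correlation is >= limit."""
    names = sorted(features)
    pairs = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if abs(pearson(features[first], features[second])) >= limit:
                pairs.append((first, second))
    return pairs


# Hypothetical features for five students (made-up numbers).
features = {
    "minutes_on_task": [30, 45, 60, 20, 50],
    "seconds_on_task": [1800, 2700, 3600, 1200, 3000],  # same information, other unit
    "quiz_score": [4, 9, 5, 7, 6],
}

print(redundant_pairs(features))
```

Here the two time-on-task columns carry the same information, so one of them could be dropped before training.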

As an example of bad training data, a story from the early days of computer vision tells of a model trained to discriminate between images of Russian and American tanks. Its high accuracy was later found to be due to the fact that the Russian tanks had been photographed on a cloudy day and the American ones on a sunny day4.

Therefore, the chosen dataset has to be verified for quality, taking into account why it was created, what it contains, and what processes are used for collecting, cleaning, labelling, distributing and maintaining it4. The key questions to ask include "Are the datasets fit for their intended purposes?" and "Do the datasets contain hidden hazards that can make models biased or discriminatory?"3

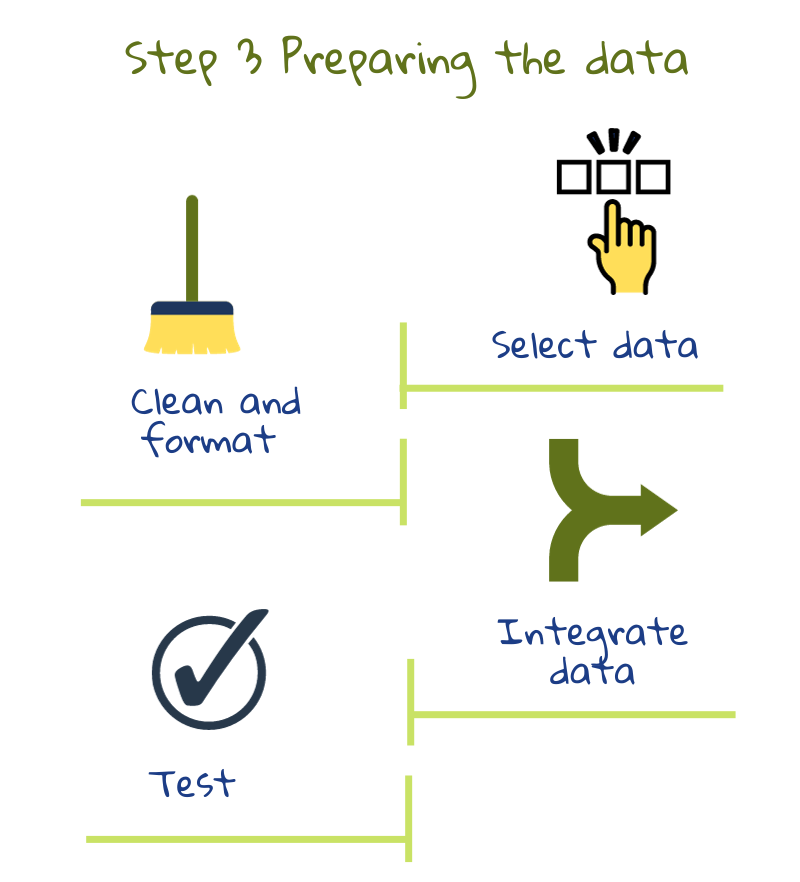

3) Preparing the data

Data preparation involves creating datasets by merging data available from different places, adjusting for inconsistencies (for example, some test scores could be on a scale of 1 to 10 while others are given as a percentage) and looking for missing or extreme values. Then, automated tests could be performed to check the quality of the datasets. This includes checking for privacy leaks and unforeseen correlations or stereotypes2. The datasets might also be split into training and test datasets at this stage. The former is used for training the model and the latter for checking its performance. Testing on the training dataset would be like giving out the exam paper the day before for homework: the student's exam performance will not indicate their understanding2.
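The preparation steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a recommended pipeline: the record layout, the student identifiers, the scores and the 25% test fraction are all assumptions made for the example.

```python
import random


def to_percentage(score, scale_max):
    """Convert a score given out of scale_max to a percentage."""
    return 100.0 * score / scale_max


def prepare(records):
    """Put all scores on one scale and drop missing or out-of-range values."""
    cleaned = []
    for student_id, score, scale_max in records:
        if score is None:
            continue  # missing value
        percentage = to_percentage(score, scale_max)
        if not 0.0 <= percentage <= 100.0:
            continue  # extreme value, probably a data-entry error
        cleaned.append((student_id, percentage))
    return cleaned


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and split them into (train, test) lists."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = int(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]


# Hypothetical merged records: (student id, score, maximum of the scale used).
records = [
    ("s1", 7, 10),
    ("s2", 85, 100),
    ("s3", None, 10),   # missing score
    ("s4", 140, 100),   # impossible score
    ("s5", 4, 10),
    ("s6", 62, 100),
]

cleaned = prepare(records)
train, test = train_test_split(cleaned)
print(cleaned)
print(len(train), "training rows,", len(test), "test rows")
```

The test rows are set aside before any model is trained, so that they can later play the role of an unseen exam paper.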

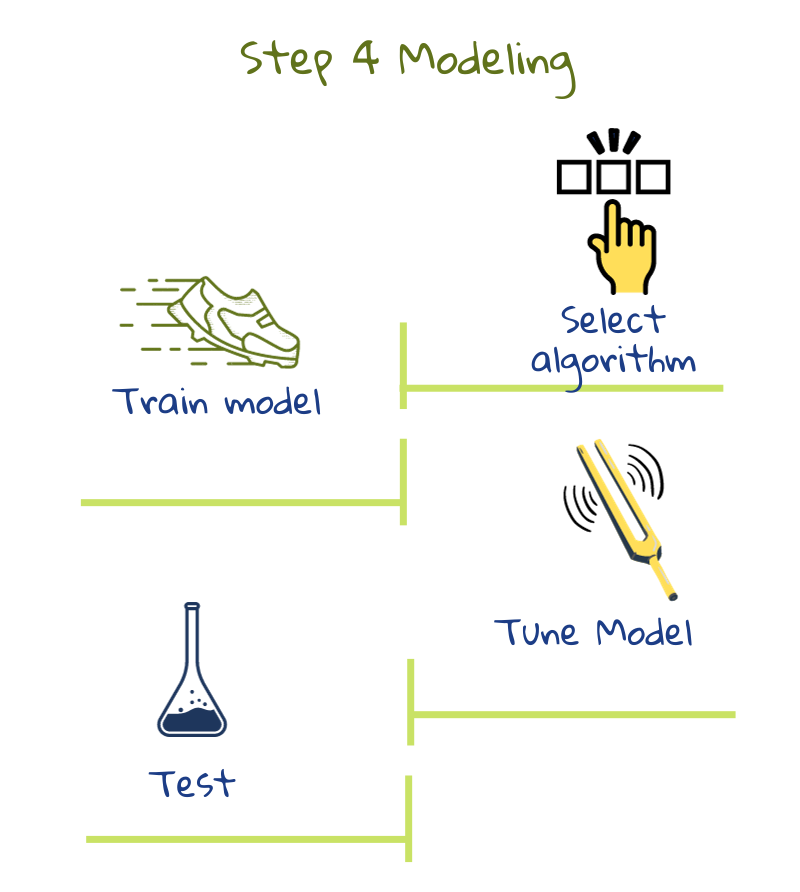

4) Modelling

In this step, algorithms are used to extract patterns in the data and create models. Usually, different algorithms are tested to see what works best. These models can then be put into use to make predictions on new data.

In most projects, initial models uncover problems in the data2, calling for back and forth between steps 2 and 3. As long as there is a strong correlation between the features of the data and the output value, it is likely that a machine learning algorithm will generate good predictions.

These algorithms use advanced statistical and computing techniques to process data. The programmers have to adjust the settings and try different algorithms to get the best results. Take an application that detects cheating. A false positive is when a student who did not cheat is flagged. A false negative is when a student who cheated is not flagged. System designers can tune the model to minimise either false positives, in which case some cheating behaviour could be missed, or false negatives, in which case even doubtful cases are flagged5. The tuning thus depends on what we want the system to do.
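The trade-off in the cheating example can be made concrete with a small sketch. It assumes, purely for illustration, that the model gives each student a suspicion score between 0 and 1 and flags everyone at or above a chosen threshold; the scores and labels below are invented.

```python
def confusion_counts(scores, cheated, threshold):
    """Count (false positives, false negatives) when flagging scores >= threshold."""
    false_positives = sum(
        1 for score, did_cheat in zip(scores, cheated)
        if score >= threshold and not did_cheat
    )
    false_negatives = sum(
        1 for score, did_cheat in zip(scores, cheated)
        if score < threshold and did_cheat
    )
    return false_positives, false_negatives


# Hypothetical suspicion scores and the (known) truth for seven students.
scores = [0.10, 0.35, 0.40, 0.55, 0.60, 0.80, 0.90]
cheated = [False, False, True, False, True, True, True]

for threshold in (0.3, 0.5, 0.7):
    fp, fn = confusion_counts(scores, cheated, threshold)
    print(f"threshold {threshold}: {fp} false positives, {fn} false negatives")
```

With these numbers, a low threshold flags two innocent students but misses no one, while a high threshold flags no innocent student but misses two who cheated. Which setting is acceptable is a pedagogical decision, not a technical one.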

5) Evaluation

During the modelling stage, each model can be tuned for accuracy of prediction on the training dataset. Then models are tested on the test dataset and one model is chosen for use. This model is also evaluated on how it meets the educational needs: Are the objectives as set out in step 1 met? Are there any unforeseen problems? Is the quality good? Could anything be improved or done in another way? Is a redesign needed? The main objective is to decide whether the application can be deployed in schools. If not, the whole process is repeated2.
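The following sketch shows why the choice between models is made on the test dataset rather than the training dataset. Both "models" are toy stand-ins invented for this example: one memorises the training rows, the other applies a simple rule. The data (hours of study and whether the student passed) is also made up.

```python
def accuracy(model, rows):
    """Fraction of rows for which the model predicts the recorded outcome."""
    return sum(1 for x, y in rows if model(x) == y) / len(rows)


# Hypothetical data: (hours of study, passed the test?).
train = [(1, False), (2, False), (3, True), (4, False), (5, True), (6, True)]
test = [(1.5, False), (3.5, True), (4.5, True), (5.5, True)]

memory = dict(train)


def memoriser(hours):
    """Recalls training answers exactly; predicts 'fail' for anything unseen."""
    return memory.get(hours, False)


def threshold_rule(hours):
    """Predicts 'pass' from three hours of study onwards."""
    return hours >= 3


candidates = {"memoriser": memoriser, "threshold_rule": threshold_rule}
for name, model in candidates.items():
    print(name, "train:", accuracy(model, train), "test:", accuracy(model, test))

# Choose the model that performs best on data it has not seen.
best = max(candidates, key=lambda name: accuracy(candidates[name], test))
print("chosen:", best)
```

The memorising model looks perfect on the training data and poor on the test data, which is the exam-paper effect in miniature. Accuracy on test data is still only part of the evaluation: the chosen model must also meet the educational objectives set in step 1.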

6) Deployment

The final step of this process is to work out how to integrate the data-based application into the school system for maximum benefit, with respect to both the technical infrastructure and teaching practices.

Though deployment is given as the final step, the whole process is iterative. After deployment, the model should be regularly reviewed to check if it is still relevant to the context. The needs, processes or modes of data capture could change, affecting the output of the system. Thus, the application should be reviewed and updated when necessary. The system should be monitored continuously for its impact on learning, teaching and assessment6.
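One very simple form of technical monitoring is to compare how often the system is right on recent cases with how often it was right at evaluation time. The sketch below assumes that someone records, for each recent prediction, whether it turned out to be correct; the function name, the baseline value and the 0.05 tolerance are illustrative only. It says nothing about the wider impact on learning and teaching, which needs human review.

```python
def needs_review(baseline_accuracy, recent_outcomes, tolerance=0.05):
    """Return True if recent accuracy fell more than `tolerance` below the baseline.

    recent_outcomes is a list of booleans: True where a prediction was correct.
    """
    if not recent_outcomes:
        return False  # nothing to compare yet
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    return baseline_accuracy - recent_accuracy > tolerance


# Hypothetical check: 0.9 accuracy at evaluation, 7 of the last 10 predictions correct.
print(needs_review(0.9, [True] * 7 + [False] * 3))
```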

The Ethical guidelines on the use of AI and data for educators stress that the school should be in contact with the AI service provider throughout the lifecycle of the AI system, even prior to deployment. The school should ask for clear technical documentation and seek clarification on unclear points. An agreement should be made for support and maintenance, and the school should make sure that the supplier adheres to all legal obligations6.

Note: Both the steps listed here and the illustration are adapted from the CRISP-DM Data science stages and tasks (based on figure 3 in Chapman, Clinton, Kerber, et al. 1999) as described in 2.

1 Du Boulay, B., Poulovassilis, A., Holmes, W., Mavrikis, M., Artificial Intelligence And Big Data Technologies To Close The Achievement Gap, in Luckin, R., ed. Enhancing Learning and Teaching with Technology, London: UCL Institute of Education Press, pp. 256–285, 2018.

2 Kelleher, J.D., Tierney, B., Data Science, London, 2018.

3 Hutchinson, B., Smart, A., Hanna, A., Denton, E., Greer, C., Kjartansson, O., Barnes, P., Mitchell, M., Towards Accountability for Machine Learning Datasets: Practices from Software Engineering and Infrastructure, Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Association for Computing Machinery, New York, 2021.

4 Barocas, S., Hardt, M., Narayanan, A., Fairness and Machine Learning: Limitations and Opportunities, MIT Press, 2023.

5 Schneier, B., Data and Goliath: The Hidden Battles to Capture Your Data and Control Your World, W. W. Norton & Company, 2015.

6 Ethical guidelines on the use of artificial intelligence and data in teaching and learning for educators, European Commission, October 2022.