Finding Information

10 AI Speak: Search Engine Indexing

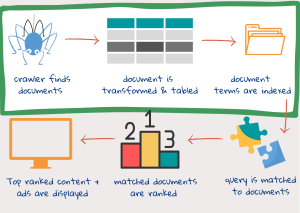

A search engine takes keywords (the search query) entered into the search box and tries to find the web documents that answer the information need behind them. It then displays the results in an easily accessible form, with the most relevant page at the top. To do this, the search engine has to start by finding documents on the web and tagging them so that they are easy to retrieve. Let us see in broad strokes what is involved in this process:

Step 1: Web crawlers find and download documents

After a user enters a search query, it is too late to look at all the content available on the internet¹. The web documents are looked at beforehand, and their content is broken down and stored in different slots. Once the query is available, all that needs to be done is to match what is in the query with what is in the slots.

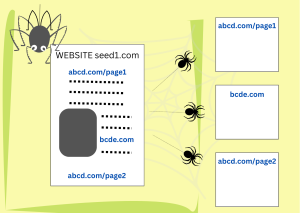

Web crawlers are pieces of code that find and download documents from the web. They start with a set of website addresses (URLs) and look inside them for links to new web pages. Then, they download and look inside the new pages for more links. Provided the starting list was diverse enough, crawlers end up visiting every site that allows access to them, often multiple times, looking for updates.
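The crawling loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tiny in-memory `FAKE_WEB` dictionary and its `.example` addresses are made up and stand in for real downloads, and a real crawler would also respect each site's access rules and revisit pages to look for updates.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag found in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical stand-in for the web: address -> page content.
FAKE_WEB = {
    "a.example": '<a href="b.example">B</a> <a href="c.example">C</a>',
    "b.example": '<a href="a.example">A</a> <a href="d.example">D</a>',
    "c.example": "a page without links",
    "d.example": '<a href="c.example">C</a>',
    "e.example": '<a href="a.example">A</a>',  # nothing links to this page
}


def crawl(seed_urls, fetch):
    """Download pages starting from seed_urls and follow the links found in them."""
    seen = set(seed_urls)       # addresses already queued, to avoid repeats
    queue = deque(seed_urls)    # addresses waiting to be downloaded
    downloaded = {}
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:        # page not available
            continue
        downloaded[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return downloaded


pages = crawl(["a.example"], FAKE_WEB.get)
print(list(pages))
```

Starting from `a.example` alone, the sketch reaches `b.example`, `c.example` and `d.example`, but never `e.example`, because no visited page links to it. This is why the starting list needs to be diverse.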

Step 2: The document gets transformed into multiple pieces

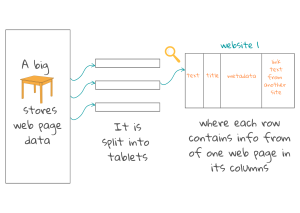

The document downloaded by the crawler might be a clearly structured web page with its own description of content, author, date, etc. It can also be a badly scanned image of an old library book. Search engines can usually read a hundred different document types¹. They convert these into HTML or XML and store them in tables (called BigTable in the case of Google).

A table is made up of smaller sections called tablets, in which each row is dedicated to one web page. These rows are arranged in some order, which is recorded along with a log for updates. Each column holds specific information about the web page that can help with matching the document content to the contents of a future query. The columns contain:

- The website address which may, by itself, give a good description of the contents in the page, if it is a home page with representative content or a side page with associated content;

- Titles, headings and words in bold face that describe important content;

- Metadata of the page. This is information about the page that is not part of the main content, such as the document type (e.g., email or web page), document structure and features such as document length, keywords, author names and publishing date;

- Descriptions of links from other pages to this page, which provide succinct text regarding different aspects of the page content. The more links there are, the more descriptions are available and the more columns are used. The presence of links is also used for ranking, to determine how popular a web page is. (Take a look at Google’s PageRank, a ranking system that uses links to and from a page to gauge quality and popularity);

- People’s names, company or organization names, locations, addresses, time and date expressions, quantities, monetary values, etc. Machine learning algorithms can be trained to find these entities in any content using training data annotated by a human being¹.

One column of the table, perhaps the most important, contains the main content of the document. This has to be identified amidst all the external links and advertisements. One technique uses a machine-learning model to “learn” which is the main content in any webpage.
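One way to picture a row of such a table is as a record with one field per column. The sketch below is a simplified illustration and not how BigTable is actually implemented; the `PageRow` class, its field names and the example address are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PageRow:
    """One row per web page; each field plays the role of a column."""
    url: str                                          # website address
    title: str = ""
    headings: list = field(default_factory=list)      # headings and bold text
    metadata: dict = field(default_factory=dict)      # type, length, author, date
    anchor_texts: list = field(default_factory=list)  # descriptions of incoming links
    entities: list = field(default_factory=list)      # names, places, dates, amounts
    main_content: str = ""                            # the main text of the page


# Hypothetical table: rows are looked up by the page address.
table = {}

row = PageRow(
    url="example.org/recipes",
    title="Easy recipes",
    metadata={"type": "web page", "length": 1200},
)
# Each new link pointing to the page adds another description.
row.anchor_texts.append("quick dinner ideas")
table[row.url] = row

print(table["example.org/recipes"].anchor_texts)
```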

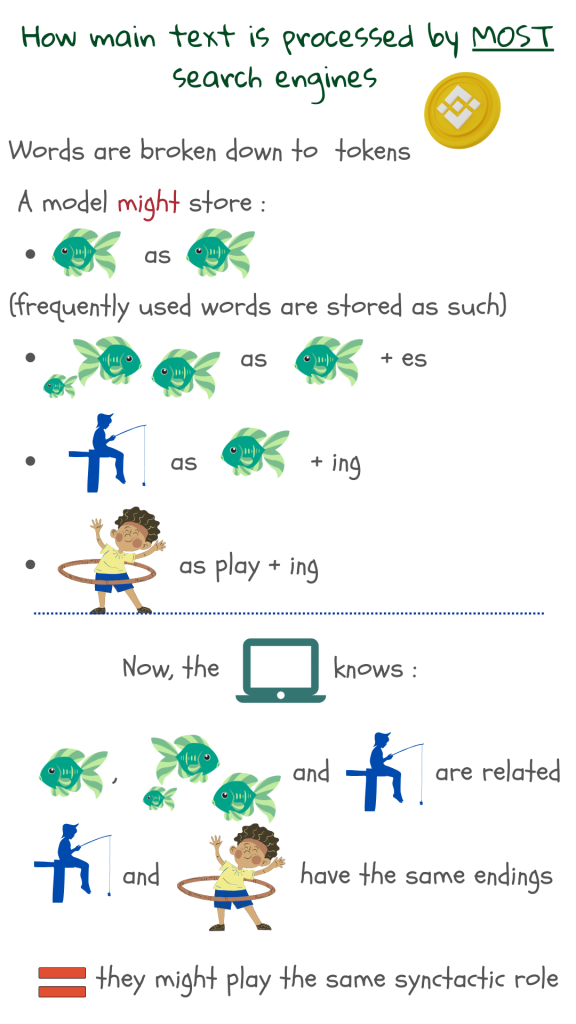

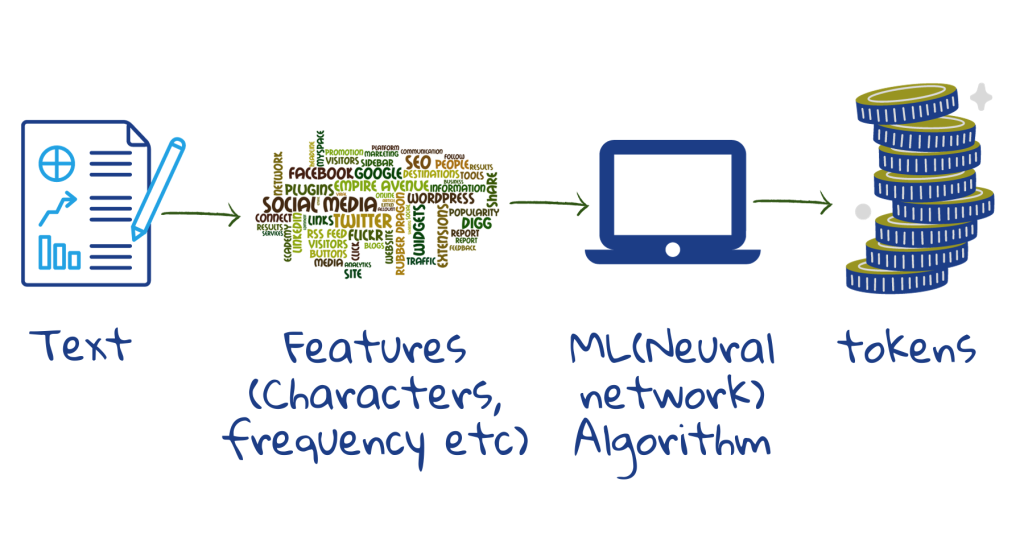

We can of course match exact words from the query to the words in a web document, like the Find button in any word processor. But this is not very effective, as people use different words to talk about the same object. Just recording the separate words will not help to capture how these words combine with each other to create meaning. It is ultimately the thought behind the words that helps us communicate, and not the words themselves. Therefore, all search engines transform the text in a way that makes it easier to match with the meaning of the query text. Later, the query is processed similarly.

Because tokens can be parts of a word rather than whole words, the total number of different tokens that need to be stored is reduced. Current models store about 30,000 to 50,000 tokens². Misspelled words can be identified because parts of them still match the stored tokens. Unknown words may still turn up search results, since their parts might match the stored tokens.

Here, the training set for machine learning is made of example texts. Starting from individual characters, space and punctuation, the model merges characters that occur frequently, to form new tokens. If the number of tokens is not high enough, it continues the merging process to cover bigger or less frequent word-parts. This way, most of the words, word endings and all prefixes can be covered. Thus, given a new text, the machine can easily split it into tokens and send it to storage.
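The merging process can be illustrated with a minimal sketch in the style of the byte-pair encoding described by Sennrich et al.². The toy word list, the function names and the end-of-word marker `</w>` are choices made for this example only; real systems learn tens of thousands of merges from very large text collections.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` by one merged symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` merges, most frequent adjacent pair first."""
    # Every word starts as single characters plus an end-of-word marker.
    vocab = Counter(tuple(word) + ("</w>",) for word in words)
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # ties: first pair seen wins
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def tokenize(word, merges):
    """Split a new word into tokens by replaying the learned merges in order."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


# Toy training text (an assumption for illustration).
corpus = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
merges = learn_bpe(corpus, num_merges=5)
print(merges)
print(tokenize("lowest", merges))  # ['low', 'est</w>']
```

The word "lowest" never appears in the toy training text, yet it is split into the two learned tokens `low` and `est</w>`. This is how unknown words can still be matched through their parts.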

Step 3: An index is built for easy reference

Once the data is tucked away in BigTables, an index is created. Similar in idea to textbook indexes, the search index lists tokens and their locations in a web document. Statistics are stored as well: how many times a token occurs in a document, how important it is for the document, and so on. Information about position is also recorded: is the token in the title or a heading, is it concentrated in one part of the document, and does one token always follow another?
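A minimal sketch of such an index is shown below. It assumes, for simplicity, that tokens are lowercase words separated by spaces; the two example documents and the function names are made up for illustration. For each token, the index records the documents it occurs in and the positions at which it occurs, which is enough to count occurrences and to check whether one token follows another.

```python
from collections import defaultdict


def build_index(documents):
    """Map each token to {document id: [positions of the token in that document]}."""
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in documents.items():
        for position, token in enumerate(text.lower().split()):
            index[token][doc_id].append(position)
    return index


def term_frequency(index, token, doc_id):
    """How many times does the token occur in the document?"""
    return len(index.get(token, {}).get(doc_id, []))


def follows(index, first, second, doc_id):
    """Does `second` appear directly after `first` anywhere in the document?"""
    first_positions = index.get(first, {}).get(doc_id, [])
    second_positions = set(index.get(second, {}).get(doc_id, []))
    return any(p + 1 in second_positions for p in first_positions)


# Hypothetical documents, used only for this example.
documents = {
    "doc1": "search engines index the web",
    "doc2": "the index lists tokens and the index stores positions",
}
index = build_index(documents)

print(dict(index["index"]))                     # {'doc1': [2], 'doc2': [1, 6]}
print(term_frequency(index, "index", "doc2"))   # 2
print(follows(index, "the", "index", "doc2"))   # True
```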

Nowadays, many search engines use a language model generated by deep neural networks. Such a model encodes semantic details of the text and is responsible for a better understanding of queries³. The neural networks help search engines to go beyond the query, in order to capture the information need that induced the query in the first place.

These three steps give a simplified account of what is called “indexing” – finding, preparing and storing documents and creating the index. The steps involved in “ranking” come next – matching query to content and displaying the results according to relevance.

1 Croft, B., Metzler, D., Strohman, T., Search Engines: Information Retrieval in Practice, 2015.

2 Sennrich, R., Haddow, B., Birch, A., Neural Machine Translation of Rare Words with Subword Units, in Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1715–1725, Berlin, Germany, Association for Computational Linguistics, 2016.

3 Metzler, D., Tay, Y., Bahri, D., Najork, M., Rethinking Search: Making Domain Experts out of Dilettantes, SIGIR Forum 55, 1, Article 13, June 2021.