19 EXISTENCE AND UNICITY OF SOLUTIONS

19.1. Set of solutions

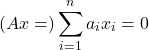

Consider the linear equation in $x \in \mathbb{R}^n$:

$$Ax = y,$$

where $A \in \mathbb{R}^{m \times n}$ and $y \in \mathbb{R}^m$ are given, and $x$ is the variable.

The set of solutions to the above equation, if it is not empty, is an affine subspace. That is, it is of the form $x_0 + \mathbf{L}$, where $\mathbf{L}$ is a subspace.

We’d like to be able to

- determine if a solution exists;

- if so, determine if it is unique;

- compute a solution $x_0$ if one exists;

- find an orthonormal basis of the subspace $\mathbf{L}$.

19.2. Existence: range and rank of a matrix

Range

The range (or, image) of an $m \times n$ matrix $A$ is defined as the following subset of $\mathbb{R}^m$:

$$\mathbf{R}(A) := \{ Ax : x \in \mathbb{R}^n \}.$$

The range describes the vectors $y = Ax$ that can be attained in the output space by an arbitrary choice of a vector $x$ in the input space. The range is simply the span of the columns of $A$.

If $y \notin \mathbf{R}(A)$, we say that the linear equation $Ax = y$ is infeasible. The set of solutions to the linear equation is then empty.
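As a minimal sketch of a feasibility test, one can use the fact that $y$ lies in the span of the columns of $A$ exactly when appending $y$ as an extra column does not enlarge that span. The code below counts pivots by exact Gaussian elimination on rational numbers (this count is the rank discussed in the next subsection). The helper names `rank` and `is_feasible` and the data are illustrative assumptions, not part of the text.

```python
from fractions import Fraction


def rank(rows):
    """Number of pivots of a matrix (list of rows), by exact elimination."""
    m = [[Fraction(v) for v in row] for row in rows]
    r = 0  # number of pivots found so far
    n_cols = len(m[0]) if m else 0
    for c in range(n_cols):
        # Look for a nonzero pivot in column c, at or below row r.
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        # Eliminate column c from the rows below the pivot row.
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def is_feasible(A, y):
    """True if y lies in the range of A, i.e. Ax = y has a solution."""
    augmented = [list(row) + [yi] for row, yi in zip(A, y)]
    return rank(augmented) == rank(A)


# Hypothetical data: the second row of A is twice the first.
A = [[1, 2], [2, 4]]
print(is_feasible(A, [1, 2]))  # True: y equals the first column of A
print(is_feasible(A, [1, 3]))  # False: y is not in the span of the columns
```

Exact arithmetic is used here only to keep the test unambiguous on small examples; with floating-point data the comparison of ranks would need a tolerance.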

From a matrix $A$ it is possible to find a matrix $U$, the columns of which span the range of $A$ and are orthonormal (mutually orthogonal, each of unit norm). Hence, $U^TU = I_r$, where $r$ is the dimension of the range. One algorithm to obtain the matrix $U$ is the Gram-Schmidt procedure.
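The following is a small sketch of the Gram-Schmidt idea, written in its "modified" variant (each column is orthogonalized against the directions already found, one at a time). The function name `gram_schmidt`, the tolerance `tol`, and the example columns are assumptions made for illustration.

```python
import math


def gram_schmidt(columns, tol=1e-12):
    """Return orthonormal vectors spanning the same space as `columns`."""
    basis = []
    for a in columns:
        v = list(a)
        # Remove the components of v along the directions found so far.
        for u in basis:
            coeff = sum(ui * vi for ui, vi in zip(u, v))
            v = [vi - coeff * ui for ui, vi in zip(u, v)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        # A (near-)zero remainder means the column depends on earlier ones.
        if norm > tol:
            basis.append([vi / norm for vi in v])
    return basis


# Hypothetical 3 x 3 matrix, given by its columns;
# the third column is the sum of the first two.
cols = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [2.0, 1.0, 1.0]]
U = gram_schmidt(cols)
print(len(U))  # number of vectors kept: the dimension of the range
```

The number of vectors returned is the dimension of the range; the absolute tolerance is a simplification that suits entries of order one.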

Example: An infeasible linear system.

Rank

The dimension of the range is called the rank of the matrix. As we will see later, the rank cannot exceed any one of the dimensions of the matrix $A$: $r \le \min(m, n)$. A matrix is said to be full rank if $r = \min(m, n)$.

Note that the rank is a very “brittle” notion, in that small changes in the entries of the matrix can dramatically change its rank. A matrix whose entries are drawn at random from a continuous distribution is full rank with probability one. We will develop here a better, more numerically reliable notion.
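To make the brittleness concrete, here is a tiny sketch restricted to $2 \times 2$ matrices, where the rank can be read off the determinant. The helper `rank_2x2` and the perturbation size are illustrative assumptions.

```python
from fractions import Fraction


def rank_2x2(M):
    """Exact rank of a 2 x 2 matrix with rational entries."""
    (a, b), (c, d) = [[Fraction(v) for v in row] for row in M]
    if a * d - b * c != 0:
        return 2  # nonzero determinant: the columns are independent
    return 1 if any(v != 0 for v in (a, b, c, d)) else 0


eps = Fraction(1, 10**12)  # a tiny perturbation of one entry
print(rank_2x2([[1, 1], [1, 1]]))        # 1
print(rank_2x2([[1, 1], [1, 1 + eps]]))  # 2
```

Changing a single entry by one part in $10^{12}$ moves the rank from 1 to 2, even though the two matrices are practically indistinguishable.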

Example 1: Range and rank of a simple matrix.

Let’s consider the matrix

Range: The columns of

Any linear combination of these vectors can be represented as

Rank: The rank of a matrix is the dimension of its range. For our matrix

Thus, the matrix


Full row rank matrices

The matrix $A$ is said to be full row rank (or, onto) if the range is the whole output space, $\mathbb{R}^m$. The name “full row rank” comes from the fact that the rank equals the row dimension of $A$. Since the rank never exceeds the smaller of the number of columns and rows, an $m \times n$ matrix of full row rank necessarily has no more rows than columns (that is, $m \le n$).

An equivalent condition for $A$ to be full row rank is that the square, $m \times m$ matrix $AA^T$ is invertible, meaning that it has full rank, $m$.

19.3. Unicity: nullspace of a matrix

Nullspace

The nullspace (or, kernel) of an $m \times n$ matrix $A$ is the following subspace of $\mathbb{R}^n$:

$$\mathbf{N}(A) := \{ x \in \mathbb{R}^n : Ax = 0 \}.$$

The nullspace describes the ambiguity in $x$ given $y = Ax$: any $z \in \mathbf{N}(A)$ will be such that $A(x + z) = y$, so $x$ cannot be determined by the sole knowledge of $y$ if the nullspace is not reduced to the singleton $\{0\}$.

From a matrix $A$ we can obtain a matrix $V$, the columns of which span the nullspace of $A$ and are orthonormal (mutually orthogonal, each of unit norm). Hence, $V^TV = I_p$, where $p$ is the dimension of the nullspace.

Example 2: Nullspace of a simple matrix.

Consider the matrix

The nullspace,

Given the matrix structure, for any vector

For example, the vector

satisfies

Nullity

The nullity of a matrix is the dimension of the nullspace. The rank-nullity theorem states that the nullity of an $m \times n$ matrix $A$ is $n - r$, where $r$ is the rank of $A$.
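The rank-nullity relation can be checked on a small case. The sketch below computes a (not necessarily orthonormal) basis of the nullspace from the reduced row echelon form, using exact rational arithmetic; the function name `nullspace_basis` and the matrix are assumptions chosen for illustration.

```python
from fractions import Fraction


def nullspace_basis(rows):
    """Return (rank, basis of {x : Ax = 0}) via reduced row echelon form."""
    m = [[Fraction(v) for v in row] for row in rows]
    n = len(m[0])
    pivots = []  # pivot column of each pivot row, in order
    for c in range(n):
        r = len(pivots)
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue  # no pivot here: column c is a free variable
        m[r], m[p] = m[p], m[r]
        pivot_value = m[r][c]
        m[r] = [v / pivot_value for v in m[r]]  # scale the pivot to 1
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        # Set one free variable to 1, the others to 0, solve for the pivots.
        x = [Fraction(0)] * n
        x[free] = Fraction(1)
        for r, c in enumerate(pivots):
            x[c] = -m[r][free]
        basis.append(x)
    return len(pivots), basis


# Hypothetical 2 x 3 matrix (n = 3 columns).
A = [[1, 2, 3], [4, 5, 6]]
r, basis = nullspace_basis(A)
print(r, len(basis))                 # rank and nullity; they add up to n = 3
print([int(v) for v in basis[0]])    # [1, -2, 1]
```

An orthonormal basis of the nullspace could then be obtained by orthogonalizing these vectors, for instance with the Gram-Schmidt procedure.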

Full column rank matrices

The matrix $A$ is said to be full column rank (or, one-to-one) if its nullspace is the singleton $\{0\}$. In this case, if we denote by $a_1, \ldots, a_n$ the $n$ columns of $A$, the equation

$$Ax = \sum_{i=1}^n x_i a_i = 0$$

has $x = 0$ as the unique solution. Hence, $A$ is one-to-one if and only if its columns are independent. Since the rank never exceeds the smaller of the number of columns and rows, an $m \times n$ matrix of full column rank necessarily has no more columns than rows (that is, $n \le m$).

The term “one-to-one” comes from the fact that for such matrices, the condition $y = Ax$ uniquely determines $x$, since $Ax_1 = y$ and $Ax_2 = y$ implies $A(x_1 - x_2) = 0$, so that the solution is unique: $x_1 = x_2$. The name “full column rank” comes from the fact that the rank equals the column dimension of $A$.

An equivalent condition for $A$ to be full column rank is that the square, $n \times n$ matrix $A^TA$ is invertible, meaning that it has full rank, $n$.

Example: Nullspace of a transpose incidence matrix.

19.4. Fundamental facts

We state two important results about the nullspace and range of a matrix.

Rank-nullity theorem

The nullity (dimension of the nullspace) and the rank (dimension of the range) of an $m \times n$ matrix add up to the column dimension of the matrix, $n$.

Another important result involves the definition of the orthogonal complement of a subspace.

Fundamental theorem of linear algebra

The range of a matrix is the orthogonal complement of the nullspace of its transpose. That is, for an $m \times n$ matrix $A$:

$$\mathbf{R}(A) = \mathbf{N}(A^T)^\perp.$$
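One direction of this statement is easy to check by hand on a small case: every vector in the nullspace of the transpose is orthogonal to every vector in the range. The sketch below assumes a hypothetical $3 \times 2$ matrix with independent columns $a_1, a_2$, for which the cross product of the columns spans the nullspace of the transpose; the helper names are illustrative.

```python
def cross(u, v):
    """Cross product of two vectors in R^3."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def dot(u, v):
    """Scalar product of two vectors of equal length."""
    return sum(ui * vi for ui, vi in zip(u, v))


# Hypothetical 3 x 2 matrix A, stored by its columns a1 and a2.
a1, a2 = [1, 0, 2], [0, 1, 3]
z = cross(a1, a2)  # orthogonal to both columns, so A^T z = 0

# An arbitrary element y = A x of the range, here with x = (5, -7).
y = [5 * p - 7 * q for p, q in zip(a1, a2)]

print(dot(a1, z), dot(a2, z))  # 0 0: z is in the nullspace of A^T
print(dot(y, z))               # 0: y is orthogonal to z
```

This only illustrates the inclusion of the range in the orthogonal complement; equality follows from a dimension count, since here the range has dimension 2 and the nullspace of the transpose has dimension 1 in $\mathbb{R}^3$.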

![Rendered by QuickLaTeX.com \[ x = \begin{pmatrix} -2 \\ 1 \\ 0 \end{pmatrix}, \]](https://pressbooks.pub/app/uploads/quicklatex/quicklatex.com-16b9ba83b8b9b6377a117ddff5d145f8_l3.png)

![Rendered by QuickLaTeX.com \begin{align*} \begin{pmatrix} a_1 & a_2 \end{pmatrix}, \quad A^T = \begin{pmatrix} a_1^T \\[1ex] a_2^T \end{pmatrix}. \end{align*}](https://pressbooks.pub/app/uploads/quicklatex/quicklatex.com-42e661a2d325aacbd8879beb2be9a5f5_l3.png)