23 ORDINARY LEAST-SQUARES

23.1. Definition

The Ordinary Least-Squares (OLS, or LS) problem is defined as

\[ \min_x \; \|Ax - y\|_2^2 \]

where $A \in \mathbb{R}^{m \times n}$ and $y \in \mathbb{R}^m$ are given. Together, the pair $(A, y)$ is referred to as the problem data. The vector $y$ is often referred to as the "measurement" or "output" vector, and the data matrix $A$ as the "design" or "input" matrix. The vector $r := y - Ax$ is referred to as the residual error vector.

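Note that the problem is equivalent to one where the norm is not squared: since $t \mapsto t^2$ is increasing for $t \ge 0$, both problems have the same minimizers. Squaring is done for convenience, since it makes the objective a smooth quadratic function of $x$ with a simple gradient.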
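As a minimal sketch, assuming NumPy is available, the problem can be solved numerically with `numpy.linalg.lstsq`, which minimizes $\|Ax - y\|_2$ (and hence its square). The data `A`, `y` below are hypothetical, and the helper `objective` is introduced only for illustration.

```python
import numpy as np

# Hypothetical problem data (A, y): a tall 5x2 design matrix and an output vector.
A = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0],
              [1.0, 4.0]])
y = np.array([0.1, 0.9, 2.1, 2.9, 4.2])

def objective(x):
    """Squared Euclidean norm of the residual, ||A x - y||_2^2 (illustrative helper)."""
    return float(np.sum((A @ x - y) ** 2))

# np.linalg.lstsq returns (solution, residual sums, rank, singular values);
# the solution minimizes ||A x - y||_2, hence also its square.
x_star, *_ = np.linalg.lstsq(A, y, rcond=None)

# Residual error vector r = y - A x*.
r = y - A @ x_star
print("x* =", x_star)
print("optimal value =", objective(x_star))
```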

23.2. Interpretations

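Interpretation as projection on the range

We can interpret the problem in terms of the columns of $A$. Writing $A = [a_1, \ldots, a_n]$, where $a_j \in \mathbb{R}^m$ is the $j$-th column of $A$, we have $Ax = \sum_{j=1}^n x_j a_j$. The OLS problem thus seeks the linear combination of the columns of $A$ that is closest to $y$ in Euclidean norm; that is, it projects $y$ onto the range of $A$. At the optimum, the residual vector is orthogonal to the range of $A$.

See also: Image compression via least-squares.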
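The projection view can be checked numerically. This is a sketch on hypothetical random data, assuming NumPy: the reduced QR factor `Q` from `numpy.linalg.qr` gives an orthonormal basis of the range of $A$, so $QQ^\top y$ is the orthogonal projection of $y$ onto that range, and it should coincide with the fitted vector $Ax^*$.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((6, 3))   # hypothetical tall data matrix (full column rank w.p. 1)
y = rng.standard_normal(6)

x_star, *_ = np.linalg.lstsq(A, y, rcond=None)

# Reduced QR (NumPy's default mode): the columns of Q form an orthonormal basis of range(A).
Q, _ = np.linalg.qr(A)
proj_y = Q @ (Q.T @ y)   # orthogonal projection of y onto range(A)

# The fitted vector A x* is exactly this projection.
print(np.allclose(A @ x_star, proj_y))   # True
```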

Interpretation as minimum distance to feasibility

The OLS problem is usually applied to problems where the linear equation $Ax = y$ is not feasible, that is, there is no $x$ satisfying $Ax = y$.

The OLS can be interpreted as finding the smallest (in the Euclidean-norm sense) perturbation $\delta y$ of the right-hand side such that the linear equation

\[ Ax = y + \delta y \]

becomes feasible. Indeed, eliminating $\delta y = Ax - y$ turns this minimum-perturbation problem into $\min_x \|Ax - y\|_2$. In this sense, the OLS formulation implicitly assumes that the data matrix $A$ of the problem is known exactly, while only the right-hand side is subject to perturbation, or measurement errors. A more elaborate model, total least-squares, takes into account errors in both $A$ and $y$.
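A small sketch, assuming NumPy and a hypothetical infeasible system of three equations in two unknowns, illustrates this view: the perturbation $\delta y = Ax^* - y$ built from the OLS solution makes the system feasible. The helper `is_feasible` is introduced for illustration and uses the rank test (appending the right-hand side must not increase the rank).

```python
import numpy as np

# Hypothetical overdetermined system with no exact solution.
A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
y = np.array([1.0, 1.0, 3.0])

def is_feasible(A, b):
    """A x = b is solvable iff appending b as a column does not increase the rank."""
    return np.linalg.matrix_rank(np.column_stack([A, b])) == np.linalg.matrix_rank(A)

print(is_feasible(A, y))              # False

x_star, *_ = np.linalg.lstsq(A, y, rcond=None)
delta_y = A @ x_star - y              # smallest perturbation of the right-hand side
print(is_feasible(A, y + delta_y))    # True
print(np.linalg.norm(delta_y))        # ≈ 0.5774 (= 1/sqrt(3))
```

Here the normal equations give $x^* = (4/3, 4/3)$ and $\delta y = (1/3, 1/3, -1/3)$.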

Interpretation as regression

We can also interpret the problem in terms of the rows of $A$, as follows. Assume that $A^\top = [a_1, \ldots, a_m]$, so that $a_i^\top$, with $a_i \in \mathbb{R}^n$, is the $i$-th row of $A$ (here $a_i$ denotes a row, not a column). The problem reads

\[ \min_x \sum_{i=1}^m \left(y_i - a_i^\top x\right)^2 . \]

In this sense, we are trying to fit each component $y_i$ of $y$ as a linear combination of the corresponding input $a_i$, with the entries of $x$ as the coefficients of this linear combination.
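For instance, fitting a line $y_i \approx x_1 + x_2 t_i$ corresponds to rows $a_i^\top = [1, t_i]$. The sketch below assumes NumPy and hypothetical noiseless data generated from $y_i = 2 + 0.5\, t_i$; the helper `row_objective` is illustrative and evaluates the row-wise sum, which equals $\|y - Ax\|_2^2$.

```python
import numpy as np

# Hypothetical scalar inputs t_i and outputs from y_i = 2 + 0.5 t_i (no noise).
t = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 + 0.5 * t

# Row i of A is a_i^T = [1, t_i]: an intercept feature and the input itself.
A = np.column_stack([np.ones_like(t), t])

def row_objective(x):
    """Row-wise form of the OLS objective: sum_i (y_i - a_i^T x)^2."""
    return sum((y[i] - A[i] @ x) ** 2 for i in range(len(y)))

x_star, *_ = np.linalg.lstsq(A, y, rcond=None)
print(x_star)   # approximately [2.0, 0.5]

# The row-wise sum agrees with the squared norm for any x, e.g. x = (1, 1).
x_test = np.array([1.0, 1.0])
print(np.isclose(row_objective(x_test), np.linalg.norm(y - A @ x_test) ** 2))   # True
```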


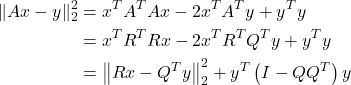

23.3. Solution via QR decomposition (full rank case)

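Assume that $A \in \mathbb{R}^{m \times n}$ is tall ($m \ge n$) and has full column rank. Then the OLS problem has a unique solution, given by

\[ x^* = (A^\top A)^{-1} A^\top y. \]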

This can be seen by taking the gradient (vector of derivatives) of the objective function, $\nabla_x \|Ax - y\|_2^2 = 2A^\top(Ax - y)$, which leads to the optimality condition $A^\top(Ax - y) = 0$, i.e., the normal equations $A^\top A x = A^\top y$. Since the objective is convex, this condition is also sufficient, and since $A^\top A$ is invertible when $A$ has full column rank, it has the unique solution above. Geometrically, the residual vector $y - Ax^*$ is orthogonal to the span of the columns of $A$, as seen in the picture above.
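A quick numerical check of the closed-form solution, as a sketch assuming NumPy and hypothetical random data: solving the normal equations with `numpy.linalg.solve` (rather than forming the inverse) matches `numpy.linalg.lstsq`, and the gradient $2A^\top(Ax - y)$ vanishes at the solution.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((8, 3))   # hypothetical tall, full-column-rank data matrix
y = rng.standard_normal(8)

# Closed-form solution from the normal equations A^T A x = A^T y.
x_normal = np.linalg.solve(A.T @ A, A.T @ y)

# Reference solution from NumPy's least-squares solver.
x_lstsq, *_ = np.linalg.lstsq(A, y, rcond=None)

# Gradient of ||A x - y||_2^2 is 2 A^T (A x - y); it vanishes at the optimum,
# i.e. the residual is orthogonal to every column of A.
grad = 2.0 * A.T @ (A @ x_normal - y)
print(np.allclose(x_normal, x_lstsq))   # True
print(np.allclose(grad, 0.0))           # True
```

Forming $A^\top A$ squares the condition number of $A$, which is one reason a QR-based approach is usually preferred numerically.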

We can also prove this via the QR decomposition of the matrix $A$: $A = QR$, with $Q$ an $m \times n$ matrix with orthonormal columns ($Q^\top Q = I_n$) and $R$ an $n \times n$ upper-triangular, invertible matrix. Noting that

\[ \|Ax - y\|_2^2 = \|Rx - Q^\top y\|_2^2 + y^\top \left(I_m - QQ^\top\right) y, \]

where the second term does not depend on $x$, and exploiting the fact that $R$ is invertible (so that the first term can be made zero), we obtain the optimal solution $x^* = R^{-1} Q^\top y$. This is the same as the formula above, since

\[ (A^\top A)^{-1} A^\top y = (R^\top Q^\top Q R)^{-1} R^\top Q^\top y = (R^\top R)^{-1} R^\top Q^\top y = R^{-1} Q^\top y. \]
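The decomposition of the objective behind the QR argument can be checked by direct expansion, using $A = QR$ and $Q^\top Q = I_n$; the last step adds and subtracts $y^\top QQ^\top y$:

```latex
\begin{aligned}
\|Ax - y\|_2^2 &= x^\top A^\top A x - 2\, y^\top A x + y^\top y \\
&= x^\top R^\top R x - 2\, y^\top Q R x + y^\top y \\
&= \|Rx - Q^\top y\|_2^2 + y^\top \left(I_m - QQ^\top\right) y .
\end{aligned}
```

The last term equals $\|(I_m - QQ^\top) y\|_2^2 \ge 0$, since $I_m - QQ^\top$ is an orthogonal projector; it is the optimal value of the problem.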

Thus, to find the solution based on the QR decomposition, we just need to implement two steps:

- Rotate the output vector: set $\bar{y} = Q^\top y$.
- Solve the triangular system $Rx = \bar{y}$ by backward substitution.
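The two steps can be sketched as follows, assuming NumPy and a tall, full-column-rank $A$. The function names `back_substitution` and `ols_qr` are hypothetical, and the loop is written out for clarity; in practice a library routine such as `scipy.linalg.solve_triangular` could replace it.

```python
import numpy as np

def back_substitution(R, b):
    """Solve R x = b for an upper-triangular, invertible square matrix R."""
    n = R.shape[0]
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        # Remove the contribution of the already-computed unknowns x[i+1:],
        # then divide by the diagonal pivot.
        x[i] = (b[i] - R[i, i + 1:] @ x[i + 1:]) / R[i, i]
    return x

def ols_qr(A, y):
    """OLS solution for a tall, full-column-rank A via the two QR steps."""
    Q, R = np.linalg.qr(A)               # reduced QR: Q is m x n with Q^T Q = I, R is n x n
    y_bar = Q.T @ y                      # step 1: set y_bar = Q^T y
    return back_substitution(R, y_bar)   # step 2: solve R x = y_bar

# Usage on hypothetical random data, compared against NumPy's solver.
rng = np.random.default_rng(2)
A = rng.standard_normal((10, 4))
y = rng.standard_normal(10)
x_qr = ols_qr(A, y)
x_ref, *_ = np.linalg.lstsq(A, y, rcond=None)
print(np.allclose(x_qr, x_ref))   # True
```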

23.4. Optimal solution and optimal set

Recall that the optimal set of a minimization problem is its set of minimizers. For least-squares problems, the optimal set is an affine set, which reduces to a singleton when $A$ has full column rank.

In the general case ($A$ is not necessarily tall and/or not full rank), the solution may not be unique. If $x^0$ is a particular solution, then $x = x^0 + z$ is also a solution if $z$ is such that $Az = 0$, that is, $z \in \mathcal{N}(A)$: indeed, $A(x^0 + z) = Ax^0$, so the objective value is unchanged. Conversely, every minimizer satisfies the normal equations $A^\top A x = A^\top y$, whose solution set is $x^0 + \mathcal{N}(A^\top A)$, and $\mathcal{N}(A^\top A) = \mathcal{N}(A)$. Thus, the nullspace of $A$ describes the ambiguity of solutions. In mathematical terms:

\[ \arg\min_x \|Ax - y\|_2 = \left\{ x^0 + z : z \in \mathcal{N}(A) \right\}. \]
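A sketch of this ambiguity, assuming NumPy and a hypothetical rank-deficient $A$ whose third column is the sum of the first two: `numpy.linalg.lstsq` returns one particular solution $x^0$ (the minimum-norm one), a nullspace basis is read off the SVD, and moving $x^0$ along it leaves the objective unchanged. The rank tolerance `1e-10` is an arbitrary illustrative choice.

```python
import numpy as np

# Hypothetical rank-deficient A: column 3 = column 1 + column 2, so N(A) = span{(1, 1, -1)}.
A = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 1.0, 3.0]])
y = np.array([1.0, 2.0, 2.0, 5.0])

# One particular minimizer x0 (lstsq returns the minimum-norm one).
x0, *_ = np.linalg.lstsq(A, y, rcond=None)

# Nullspace basis: right singular vectors whose singular values are numerically zero.
_, s, Vt = np.linalg.svd(A)
rank = int(np.sum(s > 1e-10 * s[0]))
N = Vt[rank:].T                  # 3 x 1 here, since rank(A) = 2

def objective(x):
    return np.linalg.norm(A @ x - y) ** 2

# Moving x0 along the (one-dimensional) nullspace does not change the objective.
for c in (-3.0, 0.5, 10.0):
    z = c * N[:, 0]
    print(np.isclose(objective(x0 + z), objective(x0)))   # True
```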

The formal expression for the set of minimizers of the least-squares problem can also be derived via the QR decomposition.

In terms of the columns $a_1, \ldots, a_n$ of $A$, the least-squares problem can also be written as

\[ \min_x \left\| \sum_{j=1}^n x_j a_j - y \right\|_2 . \]