31 PRINCIPAL COMPONENT ANALYSIS

31.1. Projection on a line via variance maximization

Consider a data set of $n$ points $x_j$, $j = 1, \ldots, n$, in $\mathbf{R}^m$. We can represent this data set as an $m \times n$ matrix $X = [x_1, \ldots, x_n]$, where each $x_j$ is an $m$-vector. The goal of the variance maximization problem is to find a direction $u \in \mathbf{R}^m$ such that the sample variance of the corresponding vector $u^T X = (u^T x_1, \ldots, u^T x_n)$ is maximal.

Recall that when $u$ is normalized, the scalar $u^T x$ is the component of $x$ along $u$, that is, it corresponds to the projection of $x$ on the line passing through $0$ and with direction $u$.

Here, we seek a (normalized) direction $u$ such that the empirical variance of the projected values $u^T x_j$, $j = 1, \ldots, n$, is large. If $\hat{x}$ is the vector of averages of the $x_j$'s, then the average of the projected values is $u^T \hat{x}$. Thus, the direction of maximal variance is one that solves the optimization problem

$$
\max_{u \,:\, \|u\|_2 = 1} \; \frac{1}{n} \sum_{j=1}^n \left( (x_j - \hat{x})^T u \right)^2 .
$$

The above problem can be formulated as

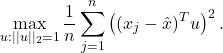

$$
\max_{u \,:\, \|u\|_2 = 1} \; u^T \Sigma u ,
$$

where

$$
\Sigma := \frac{1}{n} \sum_{j=1}^n (x_j - \hat{x})(x_j - \hat{x})^T
$$

is the $m \times m$ sample covariance matrix of the data.
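The step from the variance of the projected values to a quadratic form in the covariance matrix is a short expansion. As a sketch, writing $x_j$ for the data points, $\hat{x}$ for their average, $u$ for the direction and $\Sigma$ for the sample covariance matrix (symbol names assumed here, with the $1/n$ normalization):

$$
\frac{1}{n} \sum_{j=1}^n \left( (x_j - \hat{x})^T u \right)^2
= \frac{1}{n} \sum_{j=1}^n u^T (x_j - \hat{x})(x_j - \hat{x})^T u
= u^T \left( \frac{1}{n} \sum_{j=1}^n (x_j - \hat{x})(x_j - \hat{x})^T \right) u
= u^T \Sigma u .
$$

The first equality uses the fact that a scalar equals its own transpose; the second pulls $u$ out of the sum by linearity.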

We have seen the above problem before, under the name of the Rayleigh quotient of a symmetric matrix. Solving the problem entails simply finding an eigenvector of the covariance matrix $\Sigma$ that corresponds to the largest eigenvalue.
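The eigenvector characterization can be checked numerically. The following is a minimal sketch, assuming NumPy, data points stored as the columns of a matrix, and the $1/n$ normalization for the covariance matrix; the function name `max_variance_direction` and the synthetic data are illustrative and not part of the text.

```python
import numpy as np

def max_variance_direction(X):
    """Return (u, variance) for a data matrix X whose columns are the points."""
    n = X.shape[1]
    x_hat = X.mean(axis=1, keepdims=True)     # vector of averages, shape (m, 1)
    Xc = X - x_hat                            # centered data
    Sigma = (Xc @ Xc.T) / n                   # sample covariance (1/n convention, assumed)
    eigvals, eigvecs = np.linalg.eigh(Sigma)  # eigenvalues in ascending order
    return eigvecs[:, -1], eigvals[-1]        # eigenvector of the largest eigenvalue

rng = np.random.default_rng(0)
# Synthetic data: 500 points in R^3, stretched along the first coordinate axis.
X = rng.standard_normal((3, 500)) * np.array([[5.0], [1.0], [0.5]])

u, top_variance = max_variance_direction(X)

# Projected values u^T x_j; their variance should equal the largest eigenvalue.
projected = u @ X
```

Here `projected.var()` uses NumPy's default $1/n$ normalization, so it can be compared directly with `top_variance`. Since an eigenvector is only defined up to sign, `u` and `-u` are equally valid answers.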

|

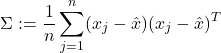

Maximal variance direction for the Senate voting data. This image shows the scores assigned to each Senator along the direction of maximal variance, |

| Note that the largest absolute score obtained in this plot is about 18 times bigger than that observed on the projection in a random direction. This is consistent with the fact that the current direction has a maximal variance. |

31.2. Principal component analysis

Main idea

The main idea behind principal component analysis is to first find a direction that corresponds to maximal variance between the data points. The data is then projected on the hyperplane orthogonal to that direction. We obtain a new data set and find a new direction of maximal variance. We may stop the process when we have collected enough directions (say, three if we want to visualize the data in 3D).

It turns out that the directions found in this way are precisely the eigenvectors of the data’s covariance matrix. The term principal components refers to the directions given by these eigenvectors. Mathematically, the process thus amounts to finding the eigenvalue decomposition of a positive semi-definite matrix, the covariance matrix of the data points.
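The claim that the successive directions coincide with the eigenvectors of the covariance matrix can be illustrated on synthetic data. This is a sketch under stated assumptions (NumPy, points as columns, $1/n$ covariance, distinct eigenvalues); the helper names `top_direction` and `greedy_directions` are hypothetical.

```python
import numpy as np

def top_direction(X):
    """Unit direction of maximal sample variance for the columns of X."""
    Xc = X - X.mean(axis=1, keepdims=True)
    Sigma = (Xc @ Xc.T) / X.shape[1]
    _, vecs = np.linalg.eigh(Sigma)           # ascending eigenvalues
    return vecs[:, -1]

def greedy_directions(X, k):
    """Collect k directions by repeated variance maximization and projection."""
    X_work = X.astype(float).copy()
    directions = []
    for _ in range(k):
        u = top_direction(X_work)
        directions.append(u)
        # Project every point on the hyperplane orthogonal to u.
        X_work = X_work - np.outer(u, u @ X_work)
    return np.column_stack(directions)

rng = np.random.default_rng(1)
A = rng.standard_normal((4, 4))
X = A @ rng.standard_normal((4, 300))         # correlated synthetic data in R^4

U_greedy = greedy_directions(X, 3)

# Reference: eigenvectors of the covariance matrix, largest eigenvalue first.
Xc = X - X.mean(axis=1, keepdims=True)
_, vecs = np.linalg.eigh((Xc @ Xc.T) / X.shape[1])
U_eig = vecs[:, ::-1][:, :3]

# Eigenvectors are defined up to sign, so compare absolute inner products.
alignment = np.abs(np.sum(U_greedy * U_eig, axis=0))
```

Each entry of `alignment` should be close to 1, meaning the direction found at each step matches the corresponding eigenvector up to sign. In practice one computes a single eigenvalue decomposition rather than running this loop; the loop is shown only because it mirrors the description of the process.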

Projection on a plane

The projection used to obtain, say, a two-dimensional view with the largest variance, is of the form $x \mapsto P^T x$, where $P = [u_1, u_2]$ is a matrix that contains the eigenvectors corresponding to the two largest eigenvalues.
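A planar view of this kind might be computed as follows. This is a sketch assuming NumPy, points stored as columns, and data centered before projecting (centering only shifts the view and is an assumption made here for plotting convenience); the variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(2)
# Synthetic data: 200 points in R^5 with most of the spread in two coordinates.
X = rng.standard_normal((5, 200)) * np.array([[4.0], [3.0], [1.0], [0.5], [0.2]])

Xc = X - X.mean(axis=1, keepdims=True)        # centered data
Sigma = (Xc @ Xc.T) / X.shape[1]              # sample covariance (1/n convention, assumed)

eigvals, eigvecs = np.linalg.eigh(Sigma)      # ascending order
P = eigvecs[:, [-1, -2]]                      # 5 x 2: eigenvectors of the two largest eigenvalues

Y = P.T @ Xc                                  # 2 x 200: planar coordinates of each point
Sigma_Y = (Y @ Y.T) / Y.shape[1]              # covariance of the projected data
```

The rows of `Y` are the two coordinates one would pass to a scatter plot. `Sigma_Y` comes out diagonal, with the two largest eigenvalues on its diagonal, so the two coordinates are uncorrelated.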

|

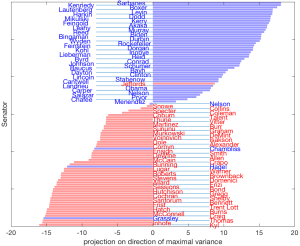

Two-dimensional projection of the Senate voting matrix: This particular planar projection uses the two eigenvectors corresponding to the largest two eigenvalues of the data’s covariance matrix. It seems to allow to cluster of the Senators along party lines and is therefore more informative than, say, the plane corresponding to the two smallest eigenvalues. |

31.3. Explained variance

The total variance in the data is defined as the sum of the variances of the individual components. This quantity is simply the trace of the covariance matrix since the diagonal elements of the latter contain the variances. If $\Sigma$ has the EVD $\Sigma = U \Lambda U^T$, where $\Lambda = \mathbf{diag}(\lambda_1, \ldots, \lambda_m)$ contains the eigenvalues, and $U$ is an orthogonal matrix of eigenvectors, then the total variance can be expressed as the sum of all the eigenvalues:

$$
\mathbf{trace}\, \Sigma = \mathbf{trace}(U \Lambda U^T) = \mathbf{trace}(U^T U \Lambda) = \mathbf{trace}\, \Lambda = \lambda_1 + \cdots + \lambda_m .
$$

When we project the data on a two-dimensional plane corresponding to the eigenvectors $u_1, u_2$ associated with the two largest eigenvalues $\lambda_1, \lambda_2$, we get a new covariance matrix $P^T \Sigma P$, where $P = [u_1, u_2]$. The total variance of the projected data is

$$
\mathbf{trace}(P^T \Sigma P) = \lambda_1 + \lambda_2 .
$$

Hence, we can define the ratio of variance "explained" by the projected data as the ratio:

$$
\frac{\lambda_1 + \lambda_2}{\lambda_1 + \cdots + \lambda_m} .
$$

If the ratio is high, we can say that much of the variation in the data can be observed on the projected plane.
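The explained-variance ratio is straightforward to compute from the eigenvalues of the covariance matrix. The sketch below assumes NumPy, points stored as columns, and the $1/n$ covariance convention; the function name `explained_variance_ratio` and the synthetic data are illustrative.

```python
import numpy as np

def explained_variance_ratio(Sigma, k=2):
    """Sum of the k largest eigenvalues of Sigma divided by the sum of all of them."""
    eigvals = np.linalg.eigvalsh(Sigma)[::-1]   # eigenvalues in descending order
    return eigvals[:k].sum() / eigvals.sum()

rng = np.random.default_rng(3)
# Synthetic data: 400 points in R^6, two coordinates carrying most of the spread.
scales = np.array([[5.0], [4.0], [0.5], [0.5], [0.3], [0.2]])
X = rng.standard_normal((6, 400)) * scales

Xc = X - X.mean(axis=1, keepdims=True)
Sigma = (Xc @ Xc.T) / X.shape[1]                # sample covariance (1/n convention, assumed)

eigvals = np.linalg.eigvalsh(Sigma)
total_variance = np.trace(Sigma)                # equals the sum of all eigenvalues
ratio = explained_variance_ratio(Sigma, k=2)
```

For this synthetic data set the ratio is close to 1, because two coordinates dominate the spread by construction. On real data, plotting the sorted eigenvalues is a common way to judge how many directions are worth keeping.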

|

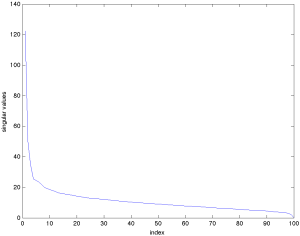

This image shows the eigenvalues of the |