Spectral theorem: Eigenvalue decomposition for symmetric matrices

Spectral theorem

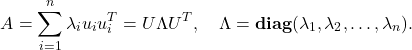

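We can decompose any symmetric matrix $A \in \mathbf{R}^{n \times n}$ as

$$A = U \Lambda U^T = \sum_{i=1}^n \lambda_i u_i u_i^T, \qquad \Lambda = \operatorname{diag}(\lambda_1, \ldots, \lambda_n),$$

where the matrix of eigenvectors $U := [u_1, \ldots, u_n]$ is orthogonal (that is, $U^T U = U U^T = I_n$), and the diagonal matrix $\Lambda$ contains the eigenvalues $\lambda_1, \ldots, \lambda_n$ of $A$.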
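As a quick sanity check of the statement, the sketch below builds the decomposition of a $2 \times 2$ symmetric matrix in closed form and multiplies the factors back together. The helper names `sym_eig_2x2` and `reconstruct` are hypothetical, introduced only for this illustration, and the closed-form approach is specific to the $2 \times 2$ case; it is not the method used in the proof.

```python
import math

def sym_eig_2x2(a, b, d):
    """Eigen-decomposition of the symmetric matrix [[a, b], [b, d]].

    Returns (eigenvalues, U), where the columns of U are unit eigenvectors.
    Hypothetical helper, valid for the 2 x 2 case only.
    """
    if b == 0.0:
        # Already diagonal: the standard basis vectors are eigenvectors.
        return (a, d), [[1.0, 0.0], [0.0, 1.0]]
    mean = (a + d) / 2.0
    radius = math.hypot((a - d) / 2.0, b)
    lam1, lam2 = mean + radius, mean - radius
    # (b, lam1 - a) solves (A - lam1 I) u = 0; normalize it to unit length.
    norm = math.hypot(b, lam1 - a)
    u1 = (b / norm, (lam1 - a) / norm)
    # In two dimensions the other eigenvector is u1 rotated by 90 degrees.
    u2 = (-u1[1], u1[0])
    U = [[u1[0], u2[0]], [u1[1], u2[1]]]
    return (lam1, lam2), U

def reconstruct(eigs, U):
    """Return U diag(eigs) U^T as a nested list."""
    n = len(eigs)
    return [[sum(U[i][k] * eigs[k] * U[j][k] for k in range(n))
             for j in range(n)] for i in range(n)]

# Example: A = [[2, 1], [1, 2]].
eigs, U = sym_eig_2x2(2.0, 1.0, 2.0)
A_rebuilt = reconstruct(eigs, U)  # should match A up to rounding
```

For larger matrices one would normally rely on a numerical library routine rather than a closed form.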

Proof: The proof is by induction on the size $n$ of the matrix $A$. The result is trivial for $n = 1$. Now let $n > 1$ and assume the result is true for any symmetric matrix of size $n-1$.

Consider the function of $t$, $p(t) = \det(tI - A)$. From the basic properties of the determinant, it is a polynomial of degree $n$, called the characteristic polynomial of $A$. By the fundamental theorem of algebra, any polynomial of degree $n$ has $n$ (possibly not distinct) complex roots; these are called the eigenvalues of $A$. We denote these eigenvalues by $\lambda_1, \ldots, \lambda_n$.
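As a small worked illustration (the matrix below is chosen here for convenience and does not come from the text), the characteristic polynomial of a $2 \times 2$ symmetric matrix can be computed by hand:

$$A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}, \qquad p(t) = \det \begin{pmatrix} t-2 & -1 \\ -1 & t-2 \end{pmatrix} = (t-2)^2 - 1 = t^2 - 4t + 3 = (t-1)(t-3).$$

The polynomial has degree $n = 2$ and its two roots, $1$ and $3$, are the eigenvalues of this matrix.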

If $\lambda$ is an eigenvalue of $A$, that is, $\det(\lambda I - A) = 0$, then $\lambda I - A$ must be non-invertible. This means that there exists a non-zero vector $u$, a priori with complex entries since $\lambda$ may be complex, such that $Au = \lambda u$. We can always normalize $u$ so that $u^* u = 1$, where $u^*$ denotes the conjugate transpose of $u$. Thus $\lambda = u^* A u$, and since $A$ is real and symmetric, $\bar{\lambda} = (u^* A u)^* = u^* A^T u = u^* A u = \lambda$, so $\lambda$ is real. That is, the eigenvalues of a symmetric matrix are always real. Since $\lambda I - A$ is then a real singular matrix, the eigenvector $u$ can also be chosen real, with $u^T u = 1$.
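The $2 \times 2$ case makes this fact easy to verify directly. The short derivation below is an illustration with generic entries $a$, $b$, $d$ (symbols introduced here, not in the text):

$$A = \begin{pmatrix} a & b \\ b & d \end{pmatrix}, \qquad p(t) = \det(tI - A) = t^2 - (a+d)\,t + (ad - b^2).$$

The discriminant of this quadratic is

$$(a+d)^2 - 4(ad - b^2) = (a-d)^2 + 4b^2 \ge 0,$$

so both roots are real. Without symmetry the off-diagonal product $b^2$ would be replaced by a product $bc$ of two different entries, which can be negative, and complex roots become possible.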

Now consider the eigenvalue $\lambda_1$ and an associated unit-norm eigenvector $u_1$. Using the Gram-Schmidt orthogonalization procedure, we can compute an $n \times (n-1)$ matrix $V_1$ such that $[u_1, V_1]$ is orthogonal. By induction, we can write the $(n-1) \times (n-1)$ symmetric matrix $V_1^T A V_1$ as $Q_1 \Lambda_1 Q_1^T$, where $Q_1$ is an orthogonal $(n-1) \times (n-1)$ matrix of eigenvectors, and the diagonal entries of $\Lambda_1 = \operatorname{diag}(\lambda_2, \ldots, \lambda_n)$ are the $n-1$ eigenvalues of $V_1^T A V_1$. Finally, we define the $n \times (n-1)$ matrix $U_1 := V_1 Q_1$. By construction the matrix $U := [u_1, U_1]$ is orthogonal.
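The deflation step described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the author's code: the helper names (`dot`, `matvec`, `complete_to_orthonormal_basis`) and the $3 \times 3$ example matrix are hypothetical, and the basis completion applies Gram-Schmidt to the standard basis vectors, which is one of several possible choices.

```python
import math

def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))

def matvec(A, x):
    return [dot(row, x) for row in A]

def complete_to_orthonormal_basis(u1):
    """Gram-Schmidt: return n-1 unit vectors orthogonal to u1 and to each other."""
    n = len(u1)
    basis = [list(u1)]
    for k in range(n):
        # Candidate: the k-th standard basis vector.
        v = [1.0 if i == k else 0.0 for i in range(n)]
        # Remove the components along the vectors already in the basis.
        for q in basis:
            c = dot(q, v)
            v = [vi - c * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(dot(v, v))
        if norm > 1e-10:  # skip candidates that are numerically dependent
            basis.append([vi / norm for vi in v])
        if len(basis) == n:
            break
    return basis[1:]  # the columns of V_1, stored here as rows

A = [[2.0, 1.0, 0.0],
     [1.0, 2.0, 1.0],
     [0.0, 1.0, 2.0]]
s = 1.0 / math.sqrt(2.0)
u1 = [s, 0.0, -s]  # unit eigenvector of A for the eigenvalue 2

V1 = complete_to_orthonormal_basis(u1)
# Off-diagonal block u_1^T A V_1: vanishes because A u_1 = lambda_1 u_1.
cross = [dot(u1, matvec(A, v)) for v in V1]
# Deflated (n-1) x (n-1) matrix B = V_1^T A V_1, which is again symmetric.
B = [[dot(vi, matvec(A, vj)) for vj in V1] for vi in V1]
```

Here `B` is the smaller symmetric matrix to which the induction hypothesis is applied; repeating the same step on `B` would peel off the next eigenpair.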

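We have

$$U^T A U = \begin{pmatrix} u_1^T A u_1 & u_1^T A U_1 \\ U_1^T A u_1 & U_1^T A U_1 \end{pmatrix} = \begin{pmatrix} \lambda_1 & 0 \\ 0 & \Lambda_1 \end{pmatrix},$$

where we have exploited the fact that $u_1^T A u_1 = \lambda_1$, $U_1^T A u_1 = \lambda_1 U_1^T u_1 = 0$, and $U_1^T A U_1 = Q_1^T (V_1^T A V_1) Q_1 = \Lambda_1$.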

We have exhibited an orthogonal $n \times n$ matrix $U$ such that $U^T A U$ is diagonal. This proves the theorem.