24 Peer Feedback for Quality Assurance

Wait! Why is This in the Evaluate Section?

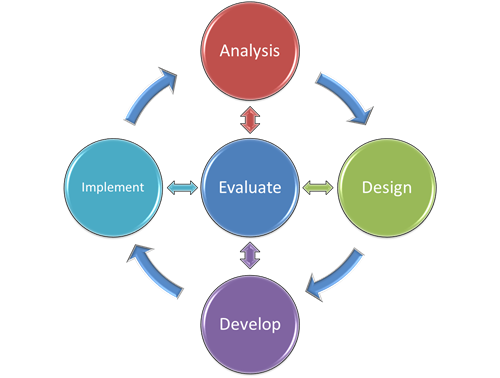


Remember that in the chapter ADDIE: A Framework for Exploring ID, I noted that the ADDIE instructional design model is actually cyclical, as opposed to linear. As illustrated below, the Evaluate component forms a central hub, informing what we do in every other stage of the ADDIE process.

I seek collegial feedback, and ask participants in my courses to complete peer reviews from an instructional designer’s perspective, before completing the development phase of a pilot module. Even though it happens that early, this feedback represents a form of Evaluation in the ADDIE process. So, why do we seek this kind of peer feedback before testing our prototypes? What should we look for? And how should we use that feedback?

Addressing Quality Assurance

No matter how well you plan before building an Online Teaching Unit, there are things you are bound to miss when you actually build it. A second set of eyes is always helpful for proofreading your content and testing all of your interactive elements. But there is more to seeking peer feedback than just proofreading. Several schools and organizations have done a great deal of work developing Quality Assurance standards to make sure the online learning experience is as good as it can be for students. Many of these standards are related to making sure the online unit is as accessible as possible. Maryland Online’s (2017) Quality Matters organization and the University of New Mexico (2015) have both provided comprehensive course review rubrics that can be used for self-review or peer review of an online course.

View ASC Tech’s (2013) Explanation of the Quality Matters Rubric:

The Quality Matters Peer Review Process

In the following video (Multimedia Design, 2017), faculty from the University of Toledo discuss what they see as the benefits of participating in a formal peer-review process using the Quality Matters framework:

Questions to Consider

- What are the benefits of getting a colleague to peer review your online module before piloting it with students?

- What should they look for in a peer review?

- How should you use the results?

Further Reading

- Review Maryland Online (2017). QM Rubrics & Standards.

- Review UNM Extended Learning (2015). Online Course Standards Rubric.

A Rebuttal: Are Quality Assurance Rubrics Too Rigid?

In their blog post, The Cult of Quality Matters, Burtis and Stommel (2021, August 10) lament what they see as a misguided over-reliance on quality assurance tools such as the Quality Matters rubric. They argue that such rubrics place too much emphasis on rigid conformity to prescriptive instructional design standards at the expense of an instructor’s creativity and pedagogical insight. They also see this over-reliance as something that dehumanizes the learner.

Burtis and Stommel do not advocate tossing aside checklists and rubrics. Rather, they argue that we should be more flexible to meet the human-centered needs of our online courses.

Questions to Consider

- What are Burtis and Stommel’s key objections to reliance on quality assurance rubrics?

- What do they recommend?

- How do you see our use of checklists to provide peer feedback during the design and development phases of our pilot projects? Are we too rigid? Or are we bringing the right mix of standardized guidance and insightful feedback?

After viewing and reading the resources on peer reviews for Quality Assurance, you should know how these concepts apply to your Online Teaching Module project. What comes to mind as you review the resources on peer reviews and Quality Assurance for online teaching? What questions do you have about using Quality Assurance tools to improve your Online Teaching Module?

More Resources

I used the Quality Matters Self-Assessment Rubric to conduct peer reviews of the mobile reusable learning objects and the Collaborative Situated Active Mobile Learning Design framework Canvas course I designed as part of my doctoral dissertation research (Power, 2015). Chapter 4 describes how those reviews were conducted and how the results were used to guide revisions to the pilot version of the course before running it with actual students.


- Optional – Read Power (2015). Chapter 4.

Conducting Your Own “Expert” Peer Reviews

Before pilot testing an instructional design project with a group of “students,” participants in my instructional design courses work with an “expert” peer feedback group. That small group simulates the real-world context of being part of an ID development team, where individual members have their own ongoing projects. Just like in a real-world context, your colleagues can help you make initial design decisions, troubleshoot issues you encounter as you develop your digital resources, and provide feedback before you test your resources with students.

For the ISD projects in my instructional design courses, members of these small working groups are asked to complete an “expert” peer review of their group members’ projects. I provide them with an ISD Project Peer Feedback Form that has been developed based on the principles explored in the course and this eBook, as well as the Community of Inquiry model (Athabasca University, n.d.; Garrison et al., 2000; Kineshanko, 2016). Group members complete the reviews, forward a copy to the respective group members, and then submit copies of all of their completed reviews to me as one of their course assignments. Afterward, participants use the feedback they received to guide them as they tweak and finalize their projects before “going live” with the pilot testing phase.

Resources

- Download a Copy of the ISD Project Peer Feedback Form (MS Word version).

- Download a Copy of the ISD Project Peer Feedback Form (PDF version).

Activity

Course Participants


References

Athabasca University. (n.d.). Community of Inquiry. https://coi.athabascau.ca/

ASC Tech. (2013, December 19). Explanation of the Quality Matters Rubric [YouTube video]. https://youtu.be/xy-Y26J83po

Burtis, M., & Stommel, J. (2021, August 10). The Cult of Quality Matters [Web log post]. Hybrid Pedagogy. https://hybridpedagogy.org/the-cult-of-quality-matters/

Garrison, D. R., Anderson, T., & Archer, W. (2000). Critical inquiry in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2(2-3), 87-105. https://auspace.athabascau.ca/handle/2149/739

Kineshanko, M. (2016). A thematic synthesis of Community of Inquiry research 2000 to 2014. (Doctoral dissertation, Athabasca University). http://hdl.handle.net/10791/190

Maryland Online. (2017). QM Rubrics & Standards [Web page]. https://www.qualitymatters.org/qa-resources/rubric-standards

Multimedia Design. (2017, May 3). UT Online: Quality Matters Peer Review Process [YouTube video]. https://youtu.be/YuQxsZJIYyU

Power, R. (2015). A framework for promoting teacher self-efficacy with mobile reusable learning objects (Doctoral dissertation, Athabasca University). DOI: 10.13140/RG.2.1.1160.4889. http://hdl.handle.net/10791/63

UNM Extended Learning. (2015, May 1). Online Course Standards Rubric. University of New Mexico. https://ctl.unm.edu/assets/docs/instructors/online-course-standards-rubric-pdf.pdf

Before you pilot test your digital learning resources or online course module with live students, ask a colleague to use the ISD Project Peer Feedback Form to guide a systematic review and provide you with feedback. Use their feedback to inform any tweaking or revisions you need to make your learning resources as effective as possible.
